Google is moving its AI coding agent, Jules, from a web-first sidekick to a toolchain-native assistant, adding a command-line interface and a public API that slot into terminals, CI/CD pipelines and workplace chat. The push marks a significant step in the race to put semi-autonomous coding agents in the places developers already work, not just in their text editors.

CLI and API Designed for Real Developer Workflows

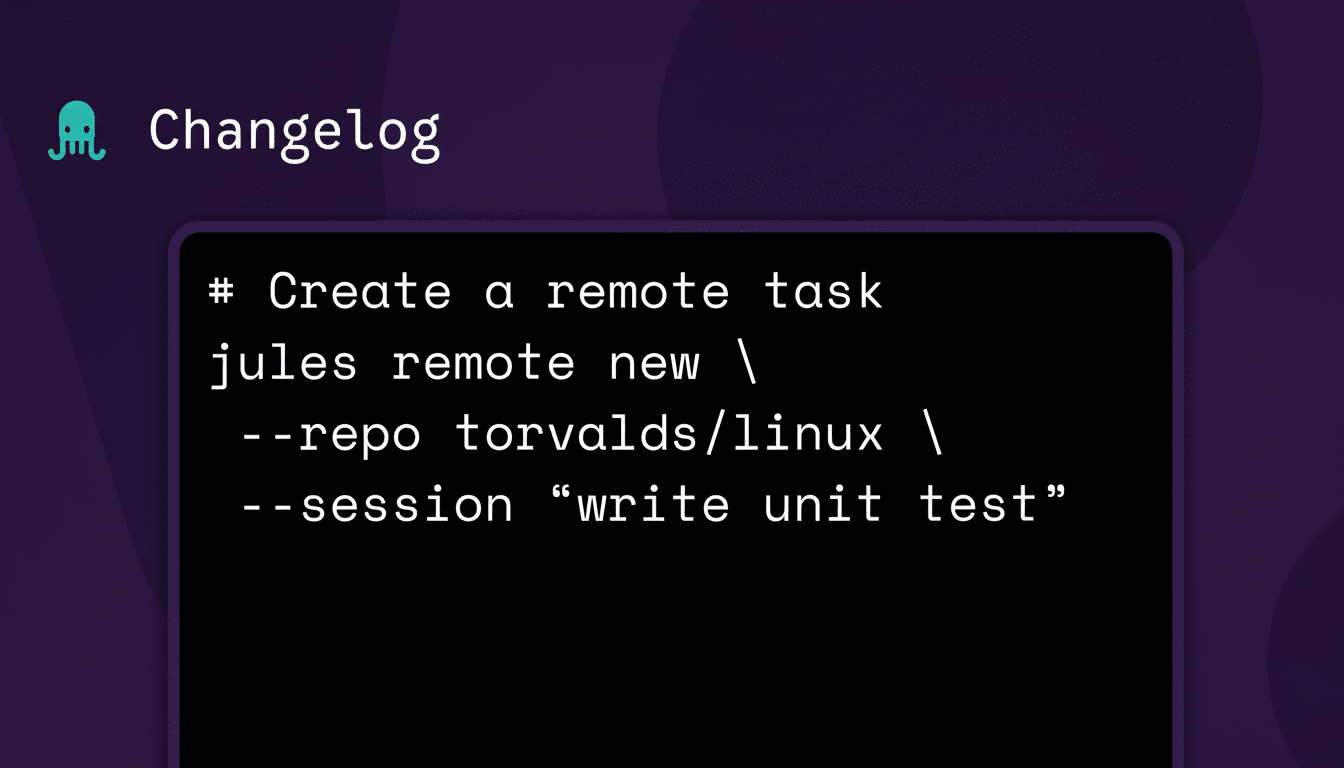

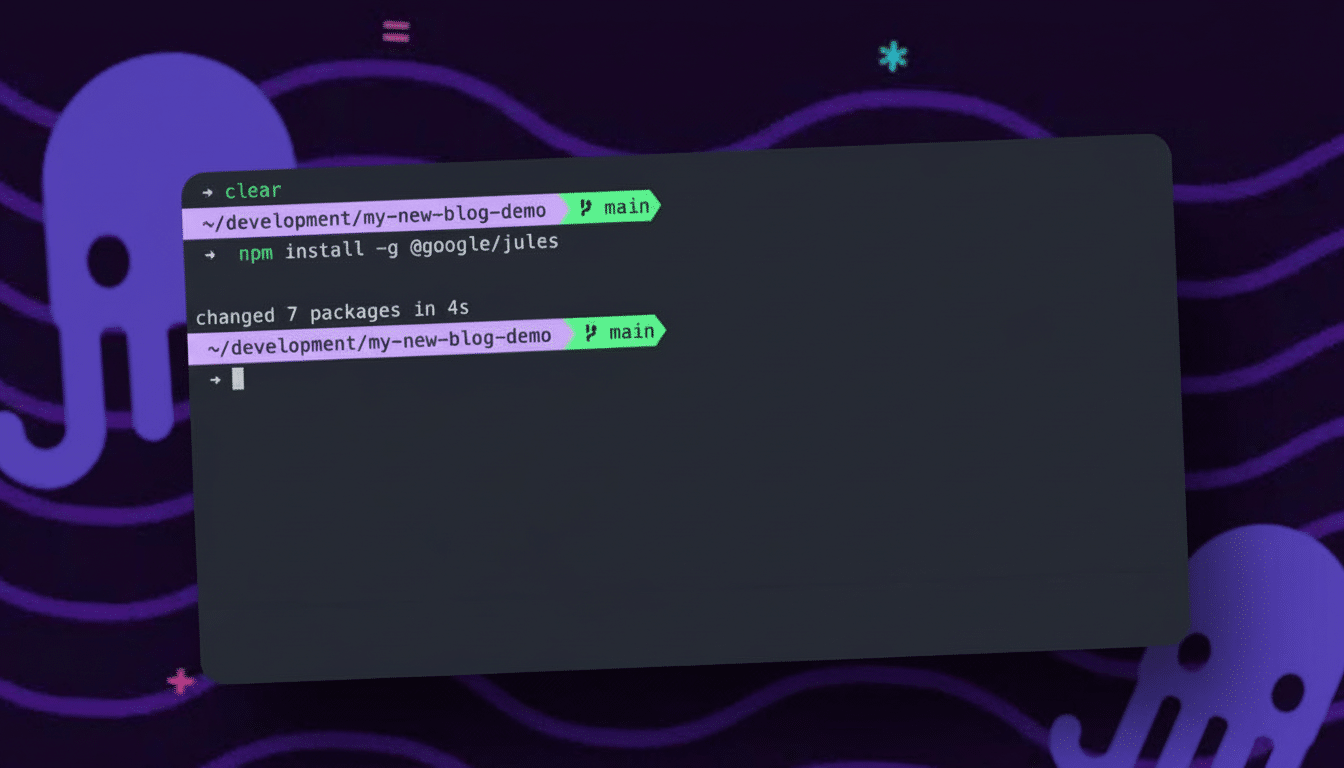

The new Jules Tools CLI lets developers hand off tasks such as scaffolding features, refactoring modules, writing tests or drafting PRs, all from the terminal. Instead of making users shuttle between a website and GitHub, the agent now executes plans end-to-end once a user authorizes them, surfacing diffs and validation results in context. Google’s developer advocates describe Jules as deliberately quieter than a typical code assistant: its purpose is to work independently once the user has agreed on a plan, not to chat indefinitely.

The public API opens the door to deeper integrations: think Jenkins or GitHub Actions steps that hand flaky test triage to Jules, a Slack bot that spins up hotfix branches on demand, or internal dashboards that trigger agent-run migrations overnight. Google product leaders say the aim is to reduce context switching by letting teams wire the agent into routines where muscle memory has already set in.
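To make the flaky-test handoff concrete, here is a minimal sketch of what a CI step might do before calling an agent. It is not the Jules API: `RunResult`, `find_flaky_tests`, `build_triage_task`, the payload fields and the repository name are all hypothetical, and the definition of "flaky" used here (a test that both passed and failed on the same commit) is an assumption chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    """One recorded execution of a test in CI (hypothetical record shape)."""
    test_name: str
    commit: str
    passed: bool


def find_flaky_tests(runs):
    """Return sorted names of tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for run in runs:
        outcomes[(run.test_name, run.commit)].add(run.passed)
    return sorted(
        {name for (name, _commit), seen in outcomes.items() if seen == {True, False}}
    )


def build_triage_task(repo, test_name):
    """Build an illustrative task payload; the field names are assumptions."""
    return {
        "repo": repo,
        "prompt": (
            f"Investigate the flaky test {test_name} "
            "and propose a fix as a pull request."
        ),
        # Keep a human in the loop: the agent should wait for plan approval.
        "requires_approval": True,
    }


if __name__ == "__main__":
    history = [
        RunResult("test_login", "abc123", True),
        RunResult("test_login", "abc123", False),
        RunResult("test_cart", "abc123", True),
        RunResult("test_cart", "def456", False),
    ]
    for name in find_flaky_tests(history):
        print(build_triage_task("example-org/example-repo", name))
```

In a real pipeline, the payload would be sent through whatever client the agent's published API provides. Keeping detection and payload construction as plain functions means the CI step can be tested without network access.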

Recent additions further improve the agent’s ergonomics: a memory system for remembering preferences and corrections, a stacked diff viewer to make code review easier, image uploads for debugging UI issues, and the ability to read and respond to pull request comments. These small touches add up when you’re asking agents to work across entire repos, not just autocomplete a single line.

From Autocomplete to Fully Autonomous Coding Agents

Jules is part of a wider industry shift from predictive coding to task-specific agents that plan, execute and validate. GitHub’s Copilot Workspace drafts multi-step implementation plans from issues. Sourcegraph’s Cody automations target repo-scale refactors. Replit provides agents that execute jobs end-to-end in hosted sandboxes. Amazon’s CodeWhisperer is growing from recommendations into more workflow-oriented features. On the model side, systems such as OpenAI’s o1 and Anthropic’s Claude 3.5 Sonnet emphasize chain-of-thought planning and tool use, which is fertile ground for agent behavior.

The demand is real. Stack Overflow’s most recent Developer Survey found that the majority of professional developers already use AI tools regularly, and research from GitHub finds that on specific tasks, an AI assistant can reduce time-to-completion by more than half. McKinsey’s latest State of AI report likewise found that adoption of generative AI within organizations is accelerating across sectors. The lesson for vendors is equally clear: value accrues not just to whoever writes the best suggestions, but to whoever choreographs the workflow around code changes, tests and deployment.

Version Control and Platform Lock-In

Today, Jules works inside a GitHub repo, which fits well with network effects and developer muscle memory. But Google says it is actively looking into other hosts and version control systems, as well as scenarios where teams don’t need a VCS dependency at all. That flexibility will matter to the enterprise running GitLab on-prem, the financial institution with strict egress policies, or the startup that wants short-lived sandboxes that leak nothing.

Moving beyond a single code host reduces exposure to platform lock-in. It encourages a plug-in architecture in which agents can open issues, create branches, run linters and raise PRs regardless of vendor. For teams, that means the same automation applies across heterogeneous stacks (monorepos, microservices and legacy products) with no need to change how they manage source code.

Guardrails, Oversight, and Remaining Gaps on Mobile

Autonomy introduces oversight challenges. Jules is meant to stop and ask for help when it gets stuck, Google says, which is a useful check against silent failure. But supervision is harder when workflows extend to mobile devices, where native notifications are still a work in progress. Until richer alerting arrives, teams may want to keep agent activity tied to the desktop environment, chat channels or their CI dashboards.

Security and compliance teams will pressure-test this change. Guidance from groups like OWASP and the OpenSSF points to threats such as prompt injection, insecure tool invocation and secret leakage. For Jules to earn enterprise trust, granular permissions, audit logs and policy controls will be table stakes, especially once agents get write access to production-critical repos and deployment systems.

Pricing Signals and What They Mean for Teams

Jules has left beta with usage limits and paid plans. The free tier tops out at 12-15 tasks a day with three running simultaneously, while Google AI Pro and Ultra raise those limits substantially in exchange for per-seat subscription fees billed monthly. Framing usage in terms of “tasks” rather than tokens or raw compute is a shrewd move: it matches how developers think about outcomes, and it gives managers a unit they can budget around.
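A team scripting against task-based limits might track its own budget locally before dispatching work. The sketch below is an assumption-laden illustration, not part of any Google tooling: `TaskBudget` and its fields are hypothetical, and the daily limit is a parameter because the figure that applies depends on the plan.

```python
from dataclasses import dataclass


@dataclass
class TaskBudget:
    """Local bookkeeping for a task-based quota (hypothetical helper)."""
    daily_limit: int          # assumption: set this to what your plan grants
    max_concurrent: int = 3   # the free tier cited above allows three at once
    used_today: int = 0
    running: int = 0

    def can_start(self):
        """True if both the daily cap and the concurrency cap leave room."""
        return (
            self.used_today < self.daily_limit
            and self.running < self.max_concurrent
        )

    def start(self):
        """Record a newly dispatched task, or raise if a limit is hit."""
        if not self.can_start():
            raise RuntimeError("daily task limit or concurrency limit reached")
        self.used_today += 1
        self.running += 1

    def finish(self):
        """Record that a running task completed; daily usage is not refunded."""
        if self.running == 0:
            raise RuntimeError("no running task to finish")
        self.running -= 1


if __name__ == "__main__":
    budget = TaskBudget(daily_limit=12)
    for _ in range(3):
        budget.start()
    print(budget.can_start())  # concurrency cap reached
    budget.finish()
    print(budget.can_start())  # one slot free again
```

Counting in tasks keeps the check trivial: two integers per day, with no token accounting.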

With Jules now in the terminal and the pipeline, Google is competing to be part of a developer’s daily loop, pull requests and merges included.

The next battleground won’t be who has the smartest autocomplete; it will be who can take on the most orchestration across repos, tests and releases with the least friction and the highest confidence. With a CLI, a public API and plans to decouple from any single code host, Jules has become a serious candidate for that position.