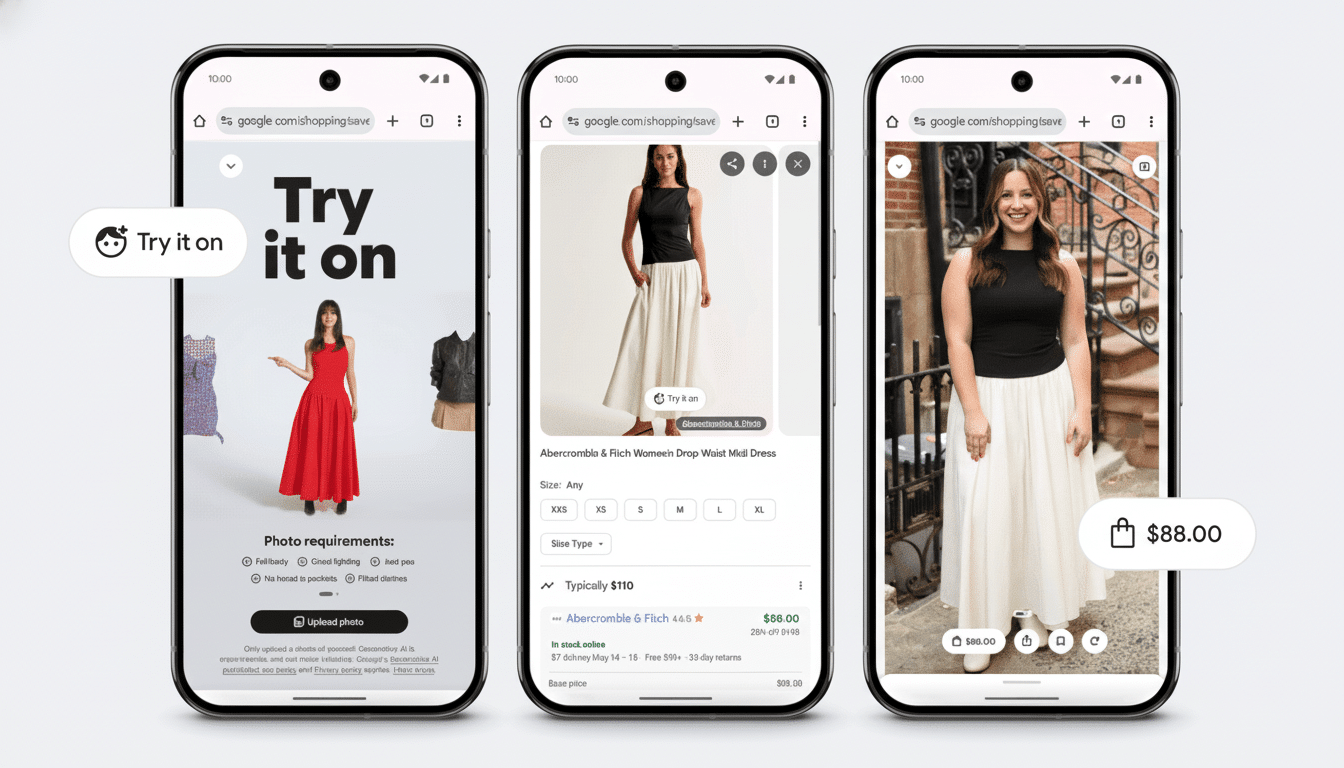

Google is expanding its AI-powered virtual try-on from clothes to shoes, allowing users to see shoes and sneakers on their own bodies right from Search and the Shopping tab. Instead of uploading “feet pics,” you send in a full-body photo, and Google’s model slips the pair you’re eyeing onto your feet so you can see what they’d look like before clicking Buy.

How the new shoe try-on works across Google

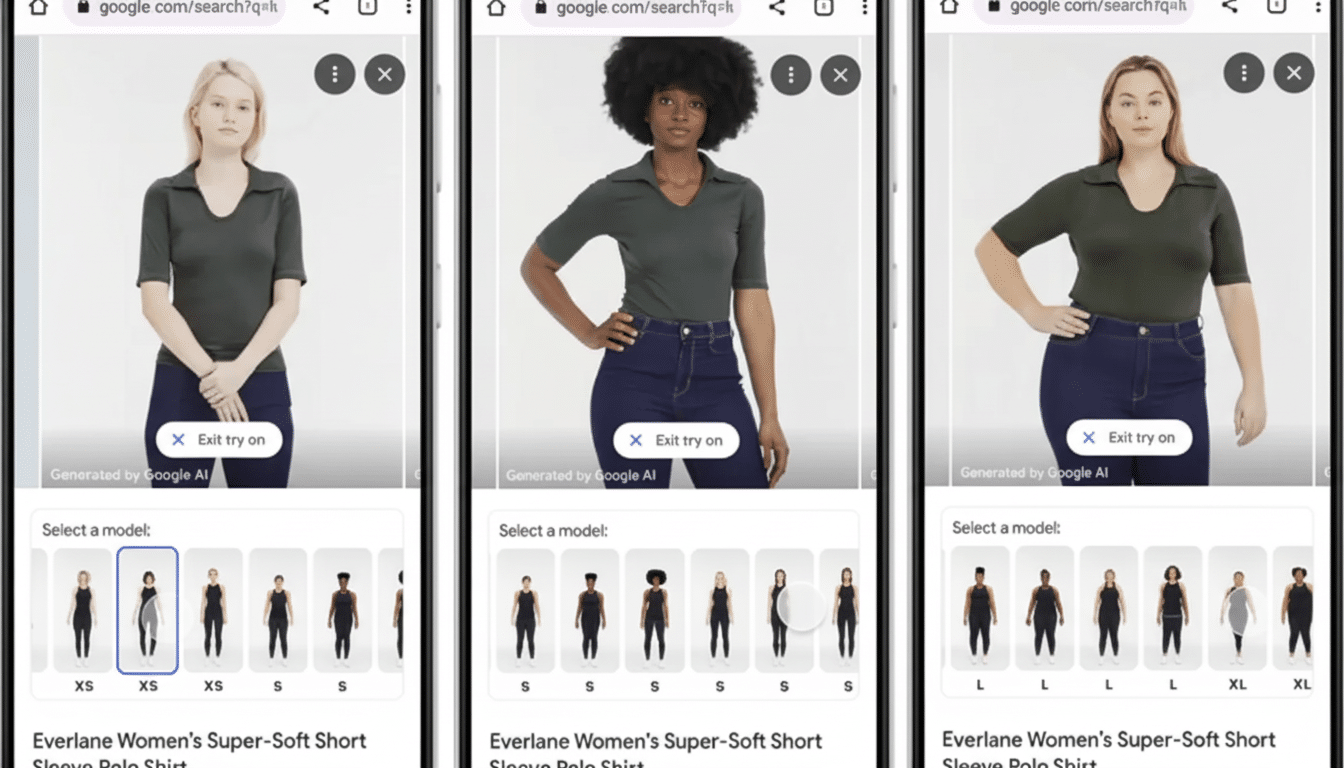

The feature is integrated into relevant product listings across Search, Shopping and Images. Tap Try It On and upload a full-length photo taken in good lighting while wearing fitted clothing. Google’s technology then segments your body into its component parts, finds your existing shoes and renders the new style in their place. (Google notes that the feature is for appearance only, not sizing: you can get a feel for vibe and color coordination, but you still select a size in the usual way.)
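Google has not published how this rendering step is implemented, and a production system almost certainly relies on a generative model rather than a pixel swap. Still, the basic idea of "find the existing shoes, then draw the new pair in their place" can be illustrated with a minimal mask-compositing sketch. Everything here is an assumption made for illustration: the `SHOE` label id, the grayscale pixel grid and the `composite_shoes` function are hypothetical names, not part of any Google API.

```python
from typing import List

Grid = List[List[int]]

# Hypothetical label id that a segmentation step assigns to "existing shoe" pixels.
SHOE = 2


def composite_shoes(photo: Grid, labels: Grid, rendered: Grid, shoe_label: int = SHOE) -> Grid:
    """Return a copy of `photo` where shoe-labelled pixels come from `rendered`.

    photo    -- original image, one grayscale value per pixel (simplification)
    labels   -- per-pixel segmentation labels with the same shape as `photo`
    rendered -- image of the new shoe, already aligned to the photo
    """
    if not (len(photo) == len(labels) == len(rendered)):
        raise ValueError("photo, labels and rendered must have the same height")

    result: Grid = []
    for photo_row, label_row, render_row in zip(photo, labels, rendered):
        if not (len(photo_row) == len(label_row) == len(render_row)):
            raise ValueError("photo, labels and rendered must have the same width")
        # Keep the original pixel unless the mask marks it as part of a shoe.
        result.append([
            new if label == shoe_label else old
            for old, label, new in zip(photo_row, label_row, render_row)
        ])
    return result
```

Only the pixels marked as shoe are replaced, so the rest of the photo is left untouched. A real system would also have to handle the occlusion, shadow and pose issues that make footwear difficult.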

In Google’s demo, you can quickly flip through “recently tried” pairs in a carousel, compare looks with a swipe and share the images with friends. The first few generations take a second or so each, but later ones are faster because the system caches your photo and body segmentation for reuse.
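The speed-up from caching can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Google's implementation: `SegmentationCache` and `fake_segment` are hypothetical names, and the stand-in segmentation function simply returns a placeholder instead of running a model.

```python
import hashlib
from typing import Callable, Dict


class SegmentationCache:
    """Cache segmentation results keyed by a hash of the photo bytes (illustrative)."""

    def __init__(self, segment: Callable[[bytes], dict]) -> None:
        self._segment = segment            # the expensive step to avoid repeating
        self._store: Dict[str, dict] = {}  # photo hash -> segmentation result
        self.misses = 0                    # how often the expensive step actually ran

    def get(self, photo_bytes: bytes) -> dict:
        key = hashlib.sha256(photo_bytes).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._segment(photo_bytes)
        return self._store[key]


def fake_segment(photo_bytes: bytes) -> dict:
    """Hypothetical stand-in for a slow body-segmentation model."""
    return {"num_bytes": len(photo_bytes), "shoe_region": "placeholder"}


cache = SegmentationCache(fake_segment)
first = cache.get(b"same-photo")   # runs the segmentation step
second = cache.get(b"same-photo")  # served from the cache
```

With the same photo, only the first request pays for segmentation; each additional pair of shoes then needs only the rendering step.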

Why it’s a harder problem for realistic footwear

Shoes are harder to render realistically than, say, a swapped T-shirt. The model has to deal with occlusion (pant legs and hems can hide parts of a shoe), perspective distortion in the lower region of the image and subtle cues such as shadows and contact with the ground. Foot pose estimation is delicate: a slight angle mismatch can produce an unnaturally tilted sneaker. Google’s apparel try-on set the stage with accurate draping and lighting; footwear adds a new level of geometric and texture detail.

This matters because fashion e-commerce suffers from a high rate of returns. Industry groups such as the National Retail Federation have estimated overall U.S. return rates in the mid-teens, in percentage terms, which adds up to hundreds of billions of dollars in merchandise flowing back. Visual confidence can remove some of that friction. According to Shopify, 3D and AR content has been shown to lift conversion rates by a significant margin over standard product pages, and retailers frequently report lower return rates when shoppers have viewed realistic previews.

Doppl points to a 360-degree future for try-ons

In addition to Search and Shopping, Google is experimenting with a stand-alone try-on app called Doppl. Unlike a static photo workflow, Doppl generates a 360-degree avatar, so you can spin around and see how an outfit and shoes read from any angle. If this approach sticks, expect more persistent profiles (upload once, try on across brands) instead of siloed try-ons tied to individual product pages.

The shoe expansion builds on Google’s existing apparel try-on, which the company says covers more than a billion listings. Extending that capability to footwear makes Google a major player in “fit visualization,” though sizing and comfort recommendations remain separate challenges that generally depend on brand-specific data and consumer feedback.

Where you’ll see it across Search and Shopping

The feature is rolling out to additional markets across Search, Shopping and Images; Google said Australia, Canada and Japan are up next. It will appear on some shoe listings first, with more inventory to follow as sellers supply compatible product images and metadata.

For consumers, this takes the form of a Try It On button that appears while browsing sneakers and shoes. For brands, it means supplying clean product angles and consistently lit images so that the models can render products accurately. Expect a flood of merchants to come online once ROI measurement proves out.

Privacy and accuracy considerations for try-ons

Because the experience relies on a personal photograph, users will want clear information about what is stored, how long it is kept before deletion and whether their images are used to train the models.

Accurate garment segmentation also matters: overly baggy pants, long skirts or a cluttered background can introduce artifacts. Google recommends bright, uniform lighting and snug clothing for better results, advice that is consistent with computer vision research on segmentation and pose estimation.

The competitive picture for virtual shoe try-ons

Google’s push arrives in a crowded landscape. Amazon has tested virtual try-on for sneakers within its app, Snap has made AR try-on something of a staple for beauty and footwear partners, and athletic brands have been toying with size scanning and fit guidance. What sets Google apart, though, is distribution: putting try-on at the search layer captures intent early in the process, before a shopper has chosen where to buy, and can route high-intent traffic to merchants who are ready for it.

If virtual try-on for shoes follows the apparel playbook (style visualization first, quickly followed by smarter recommendations), the next step may be a marriage of visuals with sizing intelligence, underpinned by brand fit maps and informed by community reviews. For now, the new Google feature does one thing well: it lets you see how those kicks will look on you, in your own photo, before they arrive at your door.