Google is rolling out Project Genie, an interactive experiment that turns plain-language prompts into explorable 3D spaces, effectively bringing “vibe coding” to open-world creation. Think of it as a sandbox where your description of a place, mood, and style becomes a live environment you can walk through—no traditional level editor required. There’s a big caveat, though: access is currently restricted to the company’s highest-tier AI Ultra plan, priced at $250/month.

What Project Genie Actually Builds Today

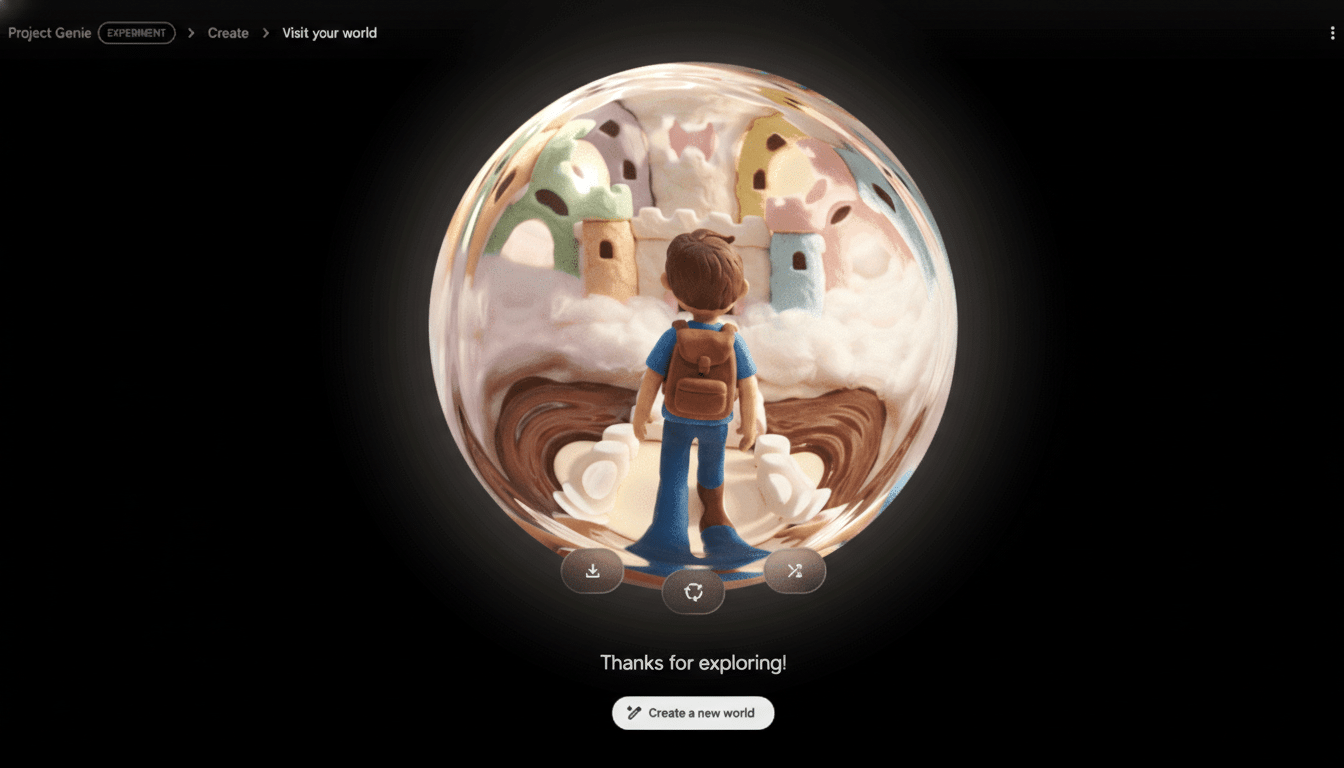

Project Genie builds on Google’s Genie research models that map text and media inputs to coherent virtual scenes. You describe the world—say, “sunset-soaked coastal ruins with lush moss and hovering lanterns, painterly style”—and Genie assembles geometry, textures, lighting, and ambience in minutes. You can guide the look with style directives and even upload reference media for inspiration, then refine with quick sketches before committing.

It doesn’t stop at scenery. You can generate an avatar—an object, animal, or person—and immediately explore the space from that perspective. Glide as a paper airplane, prowl as a cat, or stroll as a hiker. The system supports iterative remixing, so you can nudge the environment toward different aesthetics or introduce random variations to keep discovery fresh.
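To make the describe-then-remix loop concrete, here is a minimal sketch of how the inputs mentioned above (a place, extra details, a style directive, an avatar) could be organized before they are typed into a prompt box. Everything in it is an assumption for illustration: `WorldPrompt`, `to_text`, and `remix` are hypothetical names, and nothing here reflects an actual Project Genie API, which Google has not described in this form.

```python
from dataclasses import dataclass, field, replace


@dataclass
class WorldPrompt:
    """Hypothetical container for the inputs the article describes."""
    place: str
    style: str
    avatar: str
    details: list[str] = field(default_factory=list)

    def to_text(self) -> str:
        # Join the pieces into one plain-language description.
        scene = ", ".join([self.place, *self.details])
        return f"{scene}, {self.style} style. Explore as {self.avatar}."

    def remix(self, **changes) -> "WorldPrompt":
        # Return a copy with some fields swapped; the original is untouched.
        return replace(self, **changes)


# Illustrative values taken from the article's own example prompt.
base = WorldPrompt(
    place="sunset-soaked coastal ruins",
    details=["lush moss", "hovering lanterns"],
    style="painterly",
    avatar="a paper airplane",
)
variant = base.remix(style="watercolor", avatar="a cat")

print(base.to_text())
print(variant.to_text())
```

Keeping the base description immutable and producing variants as copies mirrors the remix idea: each "what if?" is a small change to one field, and the original world stays available to return to.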

Vibe Coding Comes To Open Worlds With AI

“Vibe coding” is shorthand for designing experiences by describing intent, not by authoring assets or scripting behavior line by line. In practice, Genie turns high-level directions (tone, style, traversal feel) into the scaffolding of an interactive world. It’s the natural-language counterpart to procedural generation, and it dovetails with a broader industry shift toward AI-assisted tooling, seen in projects like Roblox’s generative code helpers, Unity’s Muse features, and Nvidia’s ACE for conversational NPCs.

Google’s own research has been trending this way. Work from Google DeepMind on generative interactive environments shows that world models trained on large video corpora can learn physics-like regularities and affordances. While early Genie demos focused on 2D gameplay from images, Project Genie expands the concept to navigable 3D play spaces and style control, edging closer to open-world prototyping without a conventional engine workflow.

Not A Full Game Yet, But Great For Prototyping

Don’t expect quest lines, combat systems, or finely tuned physics out of the box. Google acknowledges that collision handling, object interactions, and physical plausibility still need work. Right now, the appeal is exploratory: mood-rich locales that you can inhabit and tweak rather than complete games with progression or goals. For creators, that’s still compelling—fast previsualization, gray-boxing levels, and experimenting with art direction without the friction of asset pipelines.

The remix loop is arguably the killer feature. Because you can quickly iterate on style and structure, the tool behaves like a creative collaborator—offering “what if?” variations that are expensive to prototype in traditional engines. That makes it useful not only for hobbyists but also for studios testing themes or traversal layouts before investing in bespoke production.

Ultra-Tier Access And The Project Genie Cost Question

At launch, Project Genie sits behind Google’s AI Ultra subscription at $250/month, signaling hefty compute under the hood. That price tag clearly targets professionals, labs, and ambitious creators rather than casual players. Google hasn’t committed to broader availability, but if compute efficiency improves and safety checks mature, a more accessible tier seems plausible.

The economics matter. Generating high-fidelity, real-time 3D scenes from text is GPU-intensive, and usage spikes can drive up costs quickly. Cloud providers and engine makers are experimenting with hybrid approaches—local inference for lightweight tasks and cloud for heavy lifts—to reach more users without sacrificing fidelity or safety.

Why This Move Matters For Future Game Creation

The game industry is already probing generative workflows. Ubisoft has experimented with tools like Ghostwriter for NPC barks, Inworld AI has partnered with major studios on dialog systems, and the Game Developers Conference’s State of the Game Industry surveys indicate that a substantial share of developers are testing generative AI in parts of their pipeline. Newzoo estimates the global games market at roughly $180 billion to $190 billion a year, and even small efficiency gains in prototyping or worldbuilding could be meaningful at that scale.

Project Genie’s promise is speed to imagination. If you can describe a vibe and get a playable sketch in minutes, the barrier between concept and experience shrinks dramatically. The risks—copyright concerns around training data, consistency of physics, and the potential to homogenize art styles—are real. But as a glimpse of how we might “speak” worlds into existence, Genie feels like a milestone.

For now, it’s an expensive playground with astonishing moments. If Google can harden the physics, broaden interactivity, and lower the buy-in, Project Genie could become the default way to mock up open worlds—then hand the results to traditional tools for polish. That’s vibe coding in action: less boilerplate, more imagination on the screen.