The race to own enterprise AI is raging at the interface, but the real power play is happening underneath. While Microsoft pushes Copilot across Office and Google embeds Gemini into Workspace, Glean is staking its claim on the intelligence tier that actually connects large language models to a company’s people, permissions, and workflows.

The Quiet Battle Beneath the Enterprise Interface

Enterprise leaders have learned that a slick chatbot is only as good as the context it can tap. Models are extraordinary pattern matchers, but they start life as generalists. Glean’s bet is that the durable moat is not the assistant’s personality; it’s the data graph that maps who knows what, where information lives, and how work actually gets done across tools like Slack, Jira, Salesforce, Google Drive, and Confluence.

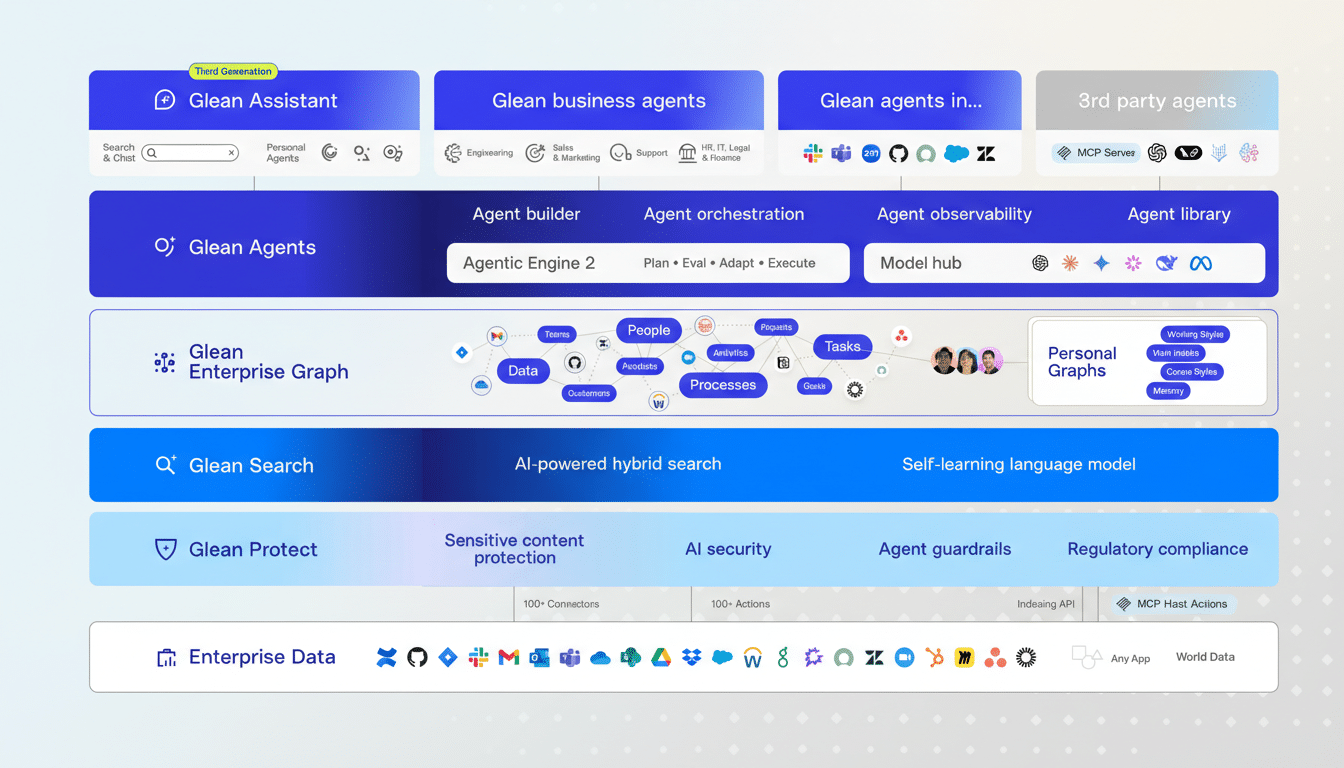

That is why Glean began as enterprise search and evolved into an “intelligence layer” that sits between ever-changing models and ever-sprawling systems. The familiar Glean Assistant is the on-ramp, but the retention story is the substrate: a permissions-aware index of content, identities, and relationships that can ground any model’s reasoning in company-specific reality.

Model Neutrality as a Strategy for Enterprise AI

Enterprises do not want lock-in at the model layer. Today’s best model for drafting a policy might not be tomorrow’s best for code review. Glean abstracts that volatility by routing queries to leading proprietary models, such as those behind ChatGPT, Gemini, and Claude, as well as open-source options, and by blending them where that improves cost, latency, or accuracy.
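To make the routing idea concrete, here is a minimal sketch of a cost-aware model router. It is an illustration only, not Glean’s implementation: the model labels, prices, latencies, and quality scores are invented placeholders, and the single `quality` number stands in for what would in practice be per-task evaluation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str           # hypothetical label, not a real model identifier
    cost_per_1k: float  # illustrative price per 1k tokens
    latency_ms: int     # illustrative median latency
    quality: float      # illustrative 0..1 score for the task at hand


# Placeholder catalog; every number here is made up for the example.
CATALOG = [
    ModelProfile("frontier-large", 0.030, 1800, 0.95),
    ModelProfile("frontier-small", 0.004, 600, 0.85),
    ModelProfile("open-weights-local", 0.001, 900, 0.78),
]


def route(min_quality: float, max_latency_ms: int, catalog=CATALOG) -> ModelProfile:
    """Pick the cheapest model that meets the quality and latency bounds."""
    eligible = [
        m for m in catalog
        if m.quality >= min_quality and m.latency_ms <= max_latency_ms
    ]
    if not eligible:
        raise LookupError("no model satisfies the constraints")
    return min(eligible, key=lambda m: m.cost_per_1k)


if __name__ == "__main__":
    # A latency-sensitive request with a moderate quality bar.
    print(route(min_quality=0.8, max_latency_ms=1000).name)
```

The point of the sketch is that callers state requirements rather than name a vendor, so swapping or adding a model changes the catalog and nothing else.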

This mirrors broader cloud strategy. Flexera reports that the vast majority of enterprises run multi-cloud, and CIOs increasingly talk about “multi-model” resilience for AI. Gartner expects that by 2026, more than 80% of enterprises will have used generative AI APIs and models, up from less than 5% in 2023, underscoring the need for a switching layer as offerings proliferate.

Connectors and Agents Do the Heavy Lifting

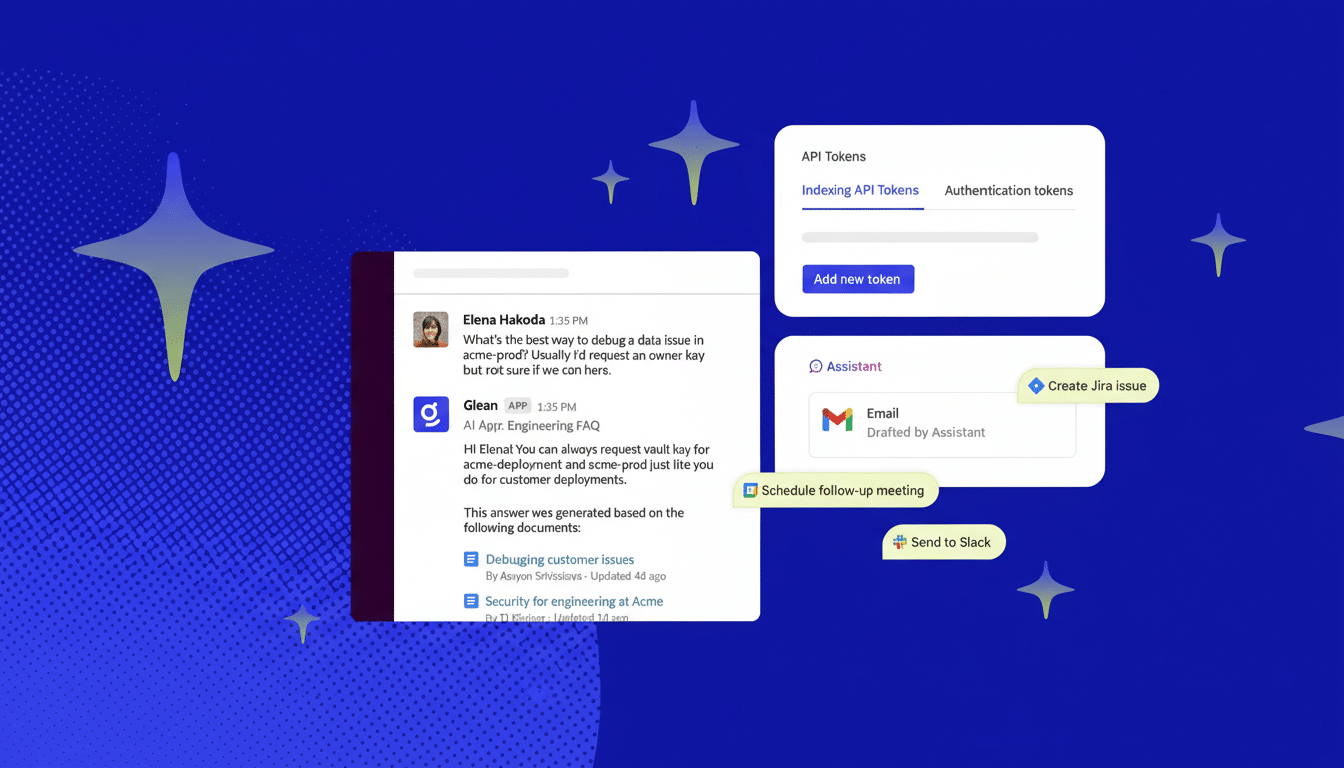

The connective tissue is only useful if it is action-oriented. Glean’s deep connectors ingest content and metadata, track usage signals, and preserve enterprise permissions end to end. That makes it possible to move beyond summarization toward agentic work: file a ticket in Jira, pull a redline from Google Drive, update a Salesforce opportunity, or draft a customer email, all without breaking role-based access.

Consider a practical flow: “What is blocking the ACME renewal, and what should we do today?” The system reconciles objects across Salesforce, references Slack threads where risk emerged, cites Jira bugs that delayed a key feature, and proposes next steps, each tied to a source and executed through the right system with the right permissions. The result feels like one assistant, but under the hood it is orchestration across dozens of APIs and policies.
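One way to picture that orchestration is as a list of proposed steps, each carrying its source and each checked against the asker’s role before anything runs. The sketch below is hypothetical throughout: the role names, the permission table, and the action names are assumptions for illustration, and a real deployment would defer to each system’s own access controls rather than a local dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    system: str  # target system, e.g. "jira" or "salesforce"
    action: str  # hypothetical action name
    source: str  # the evidence that motivated the step


# Hypothetical role-to-permission table, invented for this example.
ROLE_PERMISSIONS = {
    "account_exec": {
        ("salesforce", "update_opportunity"),
        ("email", "draft_message"),
    },
    "engineer": {("jira", "create_ticket")},
}


def plan_execution(role, steps):
    """Split proposed steps into those the role may run and those it may not."""
    allowed = ROLE_PERMISSIONS.get(role, set())
    runnable = [s for s in steps if (s.system, s.action) in allowed]
    blocked = [s for s in steps if (s.system, s.action) not in allowed]
    return runnable, blocked


if __name__ == "__main__":
    proposed = [
        Step("salesforce", "update_opportunity", "Salesforce: ACME renewal record"),
        Step("jira", "create_ticket", "Jira: bug delaying a key feature"),
        Step("email", "draft_message", "Slack: thread where risk emerged"),
    ]
    runnable, blocked = plan_execution("account_exec", proposed)
    for step in runnable:
        print("run:", step.system, step.action, "| source:", step.source)
    for step in blocked:
        print("needs handoff:", step.system, step.action)
```

Blocked steps are returned rather than silently dropped, so the assistant can hand them to someone who holds the right access.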

Governance and Trust Win Real-World Deployments

Trust determines whether AI escapes pilot purgatory. Glean emphasizes governance-first retrieval, enforcing row- and document-level permissions so answers only reflect content the asker can see. To reduce hallucinations, outputs are checked against source material, with line-by-line citations that enterprise risk teams can audit. That combination—evidence-backed responses within existing access rules—is quickly becoming table stakes in regulated industries.
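A toy version of governance-first retrieval looks like the following. It is a sketch under stated assumptions rather than a description of Glean’s system: the documents, group names, and keyword matching are placeholders, and real products would use a proper search index and the source systems’ access control lists. What it does show is the ordering that matters, with the permission filter applied before any matching and every returned passage carrying its source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # groups permitted to read this document


# Invented sample index for the example.
INDEX = [
    Document("drive/renewal-notes", "ACME renewal blocked by pricing review",
             frozenset({"sales"})),
    Document("jira/ENG-1", "ACME renewal feature delayed by login bug",
             frozenset({"eng"})),
    Document("wiki/holidays", "Office holiday schedule",
             frozenset({"sales", "eng"})),
]


def retrieve(query, user_groups, index=INDEX):
    """Return readable documents that contain every query term."""
    terms = query.lower().split()
    # Filter by permission first so hidden content never reaches matching.
    visible = [d for d in index if d.allowed_groups & user_groups]
    return [d for d in visible if all(t in d.text.lower() for t in terms)]


def answer_with_citations(query, user_groups, index=INDEX):
    """Pair each supporting passage with the document it came from."""
    return [
        {"quote": d.text, "source": d.doc_id}
        for d in retrieve(query, user_groups, index)
    ]


if __name__ == "__main__":
    for item in answer_with_citations("acme renewal", {"sales"}):
        print(item["source"], "->", item["quote"])
```

Two users asking the same question get different evidence sets, which is the behavior risk teams need to be able to audit.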

The economics favor the middle layer, too. IDC forecasts global spending on generative AI to reach well into the hundreds of billions within a few years, but much of that value will accrue to organizations that tame data fragmentation and compliance, not just to frontier model training. By aligning with that spend, Glean can scale without shouldering the massive compute burden of training foundation models.

Funding Signals Confidence in the Substrate

Investors appear to agree with the substrate thesis. Glean’s recent Series F brought in $150 million and lifted the company’s valuation to $7.2 billion, a strong endorsement for an infrastructure-centric approach at a time when many enterprises are still experimenting with where AI belongs in their stack.

Can the Middle Survive Pressure from Platform Giants?

The open question is durability. If Copilot and Gemini gain deep, native access to the same enterprise systems, what remains for a neutral layer? The counterargument is the reality of mixed estates: many companies run Microsoft 365 alongside Slack, Salesforce, Atlassian, ServiceNow, and bespoke apps. A cross-suite brain that respects one set of permissions and policies is simpler to govern than multiple assistants each with partial context and different controls.

Neutrality also gives buyers leverage. An intermediary that is not tied to one vendor lets them optimize for accuracy and cost across models, stay portable as vendors change terms, and apply consistent security and audit controls. For CIOs whose priorities are risk, interoperability, and time to value, the substrate can be the difference between a flashy demo and a capability the business actually runs on.

The Bottom Line: The Long Game Favors the Substrate Layer

The land grab may look like a front-end fight, but the long game is about context, control, and composability. Whoever maps enterprise knowledge and permissions most faithfully will dictate which interfaces and models win inside the firewall. Glean is making a concentrated bid to be that layer—less visible than an assistant, but far harder to rip and replace.