Google appears to be testing a major redesign of its Gemini AI app, moving from the minimalist chat window to a visually driven, feed-like product that nudges users toward more creative and research-oriented use cases. Signs of the overhaul emerged in a recent Android build examined by Android Authority, indicating Google is rethinking how people discover what Gemini can do. The company has not officially confirmed the change.

AI’s feed-first coming of age in the Gemini app experience

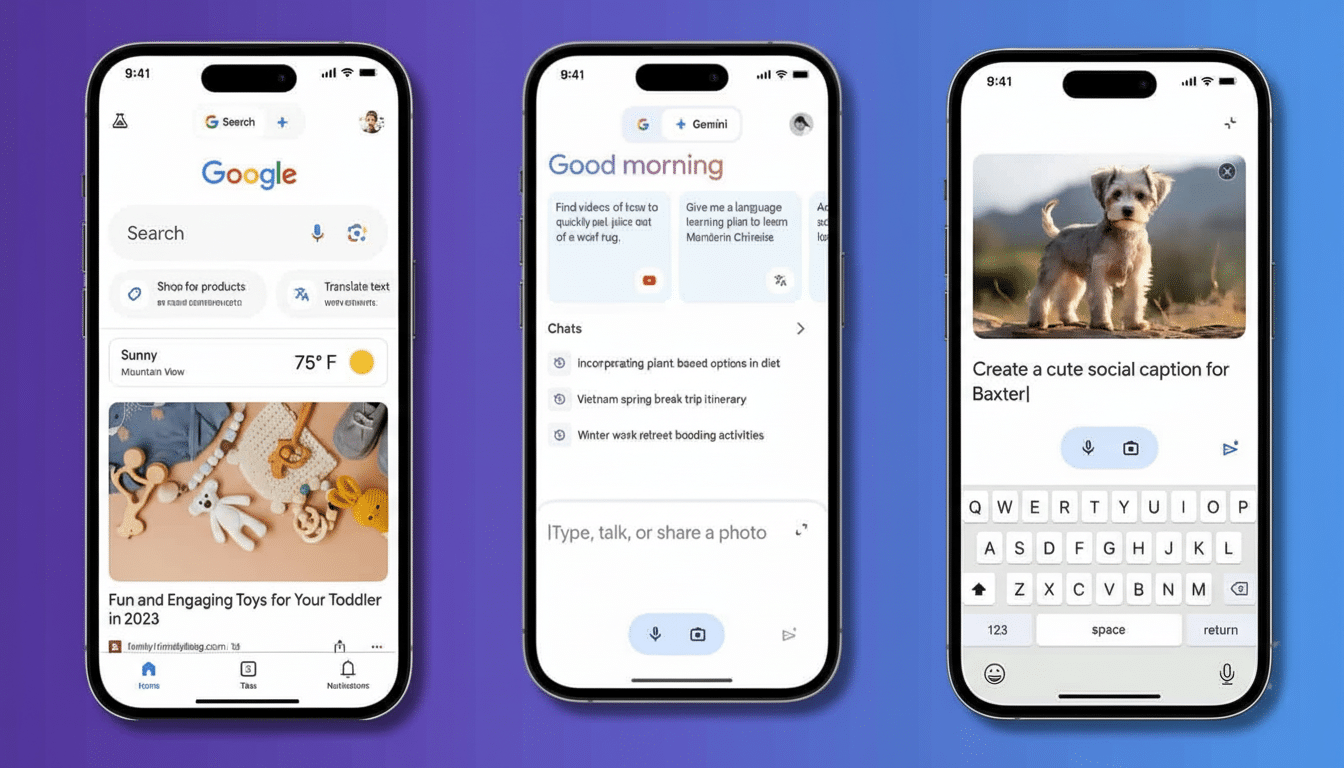

Rather than presenting an empty prompt, as a traditional search bar does, the experimental home screen places quick-action buttons near the top for generating images, starting “Live” voice conversations, or diving deeper into research.

Below that, a scrollable feed of options serves up flash cards and photo-forward concepts to test — all but turning the app into an AI playground rather than a simple text box.

The prompt cards revealed in the teardown push creative, camera-adjacent tasks: transforming photos with space themes and retro looks, storybook-style remixes of sketches, or quick daily-habit helpers such as a short news catch-up. It’s a well-worn pattern in successful consumer apps: remove blank-page anxiety with suggestions that are tangible, enjoyable, and inviting.

Why a visual pivot matters for Gemini’s everyday use

For some users, a chat-only interface can be an uncertain starting point, especially when the AI in question is capable of so many different things (and keeps learning how to do more). Example-based onboarding, a familiar pattern in consumer apps, is generally associated with better feature discovery and sign-up numbers. A tap-worthy prompt feed could flatten the learning curve for Gemini’s multimodal skills and raise the probability that users return to them daily.

The change also reflects how people already use their phones: snapping photos, completing quick tasks, and making short swipes. By meeting users in that mode, Gemini can also surface higher-value workflows, such as image generation, voice brainstorming, and long-form research, without requiring expert-level prompting.

Positioning against ChatGPT and other rivals

OpenAI’s ChatGPT app sports an intentionally minimalist interface and a conversation-focused experience. Google seems to be betting that a more curated, visual experience will appeal to a broader user base and better demonstrate Gemini’s multimodal nature. This is particularly salient as creative tools increasingly become the de facto introduction to AI for the masses, from artistic stylization of photos to storyboarding and even lighter-weight video concepts.

Google also has a deep bench to power a visual-first Gemini: on-device Gemini Nano for fast tasks, server-side models for richer creation, and features like Gemini Live and Ask Photos, which the company announced over the last year. A design where those tools are one tap away might help the app feel less like a chatbot and more like a suite of AI utilities.

Effects for Android devices and subscription offerings

Gemini recently replaced Assistant as the default assistant on many Android handsets, giving it a privileged position with potential reach across more than three billion monthly active Android devices worldwide, a figure Google has cited in developer disclosures. A livelier home screen could raise session length and offer clearer on-ramps to premium features.

If the redesign rolls out broadly, it could also showcase capabilities tied to Google’s AI subscription, Google One AI Premium, which bundles Gemini Advanced. Making those capabilities discoverable through prompt cards and shortcuts is a natural funnel for turning curious users into paying ones.

Privacy and safety considerations for a visual-first feed

A feed that actively proposes photo-based transformations will raise familiar concerns about data handling. Google has emphasized on-device processing for certain tasks with Gemini Nano and has laid out guardrails around features such as Ask Photos. Transparent labeling, clear opt-in controls, and processing notices will be crucial to maintaining user trust, particularly as regulators such as the FTC in the US and the ICO (the UK’s data protection watchdog) begin to take a closer look at consumer AI products.

Content integrity will matter too. A design that privileges image and media creation should preserve creative freedom while building stronger protections around watermarking, attribution cues, and boundaries on sensitive content. Those protections will be table stakes once such tools go mainstream.

What to watch next as the Gemini app test expands

Google frequently introduces UI changes through staged testing, so different users may see different layouts before any wider release. Key signals to watch:

- Whether the feed becomes the default entry point

- How aggressively the app personalizes prompt suggestions based on the features you have enabled

- If iOS adopts similar designs

If the experiment works out, Gemini might feel less like an empty chat and more like a managed studio — one that helps steer people away from casual fiddling and toward productive workflows.

For a category that is still finding its way, that move from conversation to creation may be the unlock.

Google wouldn’t share specifics on timing or availability, but the direction is clear: Make AI physical, visual, and one tap away.