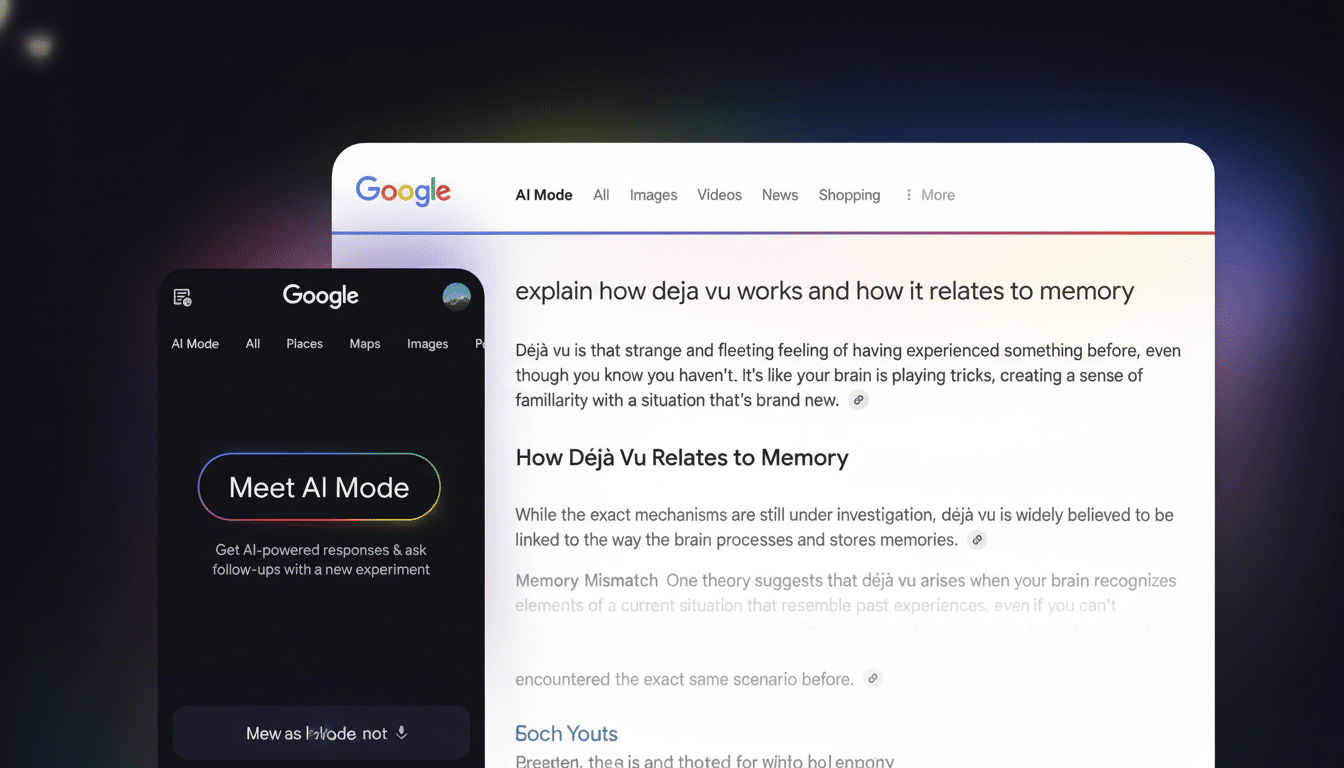

Google’s Gemini 3 updates are arriving in AI Mode in Search, and here the differences go beyond cosmetic. The company says users can expect faster answers, better reasoning, and a Generative UI that presents each answer in the format it judges best suited to the query, whether that’s a graph, a comparison table, an animation, or even an interactive element.

What Gemini 3 changes inside Google Search’s AI Mode

AI Mode is doing more of the legwork in the background. Rather than relying on a single pass, Gemini 3 can run additional web searches to find corroborating sources, combine information, and craft answers that are less one-size-fits-all and more tailored. This “agentic” behavior (decomposing tasks, asking questions, checking and sorting information) is meant to cut down on shallow responses and the sprawl of open tabs.

Google says that will result in deeper, more focused search results. In practice, that might mean asking for a plan to run your first half-marathon and getting a week-by-week schedule with mileage graphs and recovery notes, or looking up e-bike classes by state and getting an interactive map with citations. The goal: answers that are quick and actionable.

This change matters because generative outputs are becoming increasingly prominent in Search. Google has put AI-powered answers front and center as it seeks to serve a broad user base, and its ranking and quality teams are focusing on techniques that cross-check claims and prioritize source attribution. Outside observers, such as researchers at Stanford HAI, say retrieval-backed reasoning and transparent citations are essential to building trust in AI search results.

Generative UI personalizes answers for the task

Instead of forcing every request into paragraphs of text, Gemini 3’s Generative UI shapes the result around what was asked. Request a 4K TV shortlist that won’t break the bank and you’ll get a tidy comparison table of the standout specs. Need a quick refresher on orbital mechanics? An explanatory animation can make orbits easier to grasp than text alone. Planning a three-day Austin food tour? You may get an itinerary timeline with map pins and hours.

This approach treats the AI answer as a dynamic module rather than a static answer box. It also sets a high bar for clarity: visualizations can expose trade-offs and uncertainties that paragraphs alone obscure. According to analysts at Gartner, a visual, task-aware assistant can increase completion rates on complex requests, especially when users are comparing alternatives or need multi-step guidance.

Most important, the richer layouts still rest on the underlying text and its sources. Expect links, citations, “expandable evidence,” and further reading. For publishers and brands, that means structured data and clear product specs become key: they are the raw materials the UI can transform into rich, scannable views.
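To make “structured data” concrete, here is a minimal sketch of how a publisher might describe a product using the schema.org `Product` vocabulary in JSON-LD. The helper name `product_jsonld` and the TV details are hypothetical, chosen only for illustration; whether and how Generative UI consumes this markup is an assumption, not something Google has detailed here.

```python
import json


def product_jsonld(name, brand, price, currency, specs):
    """Build a schema.org Product description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
        },
        # Each spec becomes a name/value pair a machine can read reliably.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in specs.items()
        ],
    }


# Hypothetical product, for illustration only.
example = product_jsonld(
    name="Example 55-inch 4K TV",
    brand="ExampleBrand",
    price=499,
    currency="USD",
    specs={"Screen size": "55 in", "Resolution": "3840x2160"},
)

print(json.dumps(example, indent=2))
```

On a real page, the printed JSON would typically sit inside a `<script type="application/ld+json">` tag.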

Smarter model selection and faster routing in Search

Model names and features confuse a lot of people. Gemini 3 addresses this by letting AI Mode and AI Overviews select the appropriate model for a given task. Lightweight models handle simple queries, while more powerful ones take over for long-chain reasoning or when the system must reason across diverse information sources or content modalities.

The result is less friction and more consistent latency. Under the hood, Gemini 3 can predict and prefetch related pages, cache partial results, and escalate only when necessary. For more complex requests, such as itinerary planning, deep product comparisons, or code walkthroughs, it can take extra retrieval steps without the user having to juggle model toggles.
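The routing idea can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the keyword list, the word-count threshold, the tier names, and the step counts are hypothetical and do not reflect how Google actually routes queries. It only shows the general pattern of defaulting to a light path and escalating when a query looks complex.

```python
from dataclasses import dataclass

# Hypothetical signals that a query needs multi-step reasoning.
COMPLEX_HINTS = {"compare", "plan", "itinerary", "walkthrough", "step-by-step"}


@dataclass
class Route:
    tier: str             # "light" or "heavy" (illustrative labels)
    retrieval_steps: int  # how many retrieval passes to allow


def route_query(query: str, long_query_words: int = 12) -> Route:
    """Pick a model tier from crude surface features of the query."""
    words = [w.strip(".,?!:;").lower() for w in query.split()]
    has_hint = any(w in COMPLEX_HINTS for w in words)
    is_long = len(words) >= long_query_words
    if has_hint or is_long:
        # Escalate: stronger model, extra retrieval passes.
        return Route(tier="heavy", retrieval_steps=3)
    # Default: cheap, fast path.
    return Route(tier="light", retrieval_steps=1)


simple = route_query("weather in Austin")
complex_ = route_query("compare compact SUVs with best warranty and safety scores")
print(simple, complex_)
```

A production router would presumably rely on learned signals rather than keywords, but the trade-off is the same: keep the cheap path as the default so that most queries stay fast.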

Speed still matters. Google’s tuning of Search has traditionally targeted P95 latency, and Gemini 3’s routing is meant to keep rich answers fast even as they require more web calls. That balance of depth without delay is likely to determine whether users are satisfied with AI Mode.
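For readers unfamiliar with the term, P95 latency is the response time that 95 percent of requests come in at or under. The sketch below computes it with the nearest-rank method on made-up sample data; the latency values are hypothetical and say nothing about Search itself. It shows why teams watch the tail rather than the median: one slow request barely moves P50 but dominates P95.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    # Nearest rank: smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical response times in milliseconds, with one slow outlier.
latencies_ms = [120, 135, 140, 150, 160, 170, 180, 200, 240, 900]

p50 = percentile_nearest_rank(latencies_ms, 50)
p95 = percentile_nearest_rank(latencies_ms, 95)
print(f"P50 = {p50} ms, P95 = {p95} ms")  # prints "P50 = 160 ms, P95 = 900 ms"
```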

Access rollout, availability, and feature limits

Early access begins with AI Pro and AI Ultra subscribers in the US. Users can manually select “Thinking” in the model list to use Gemini 3’s deeper reasoning. Google says broader access is coming soon, though even once the feature set is available to everyone, paid tiers will keep higher usage limits.

That gated rollout is not a coincidence: the best models are by far the most compute-hungry, and Generative UI can be resource-intensive. Google will adjust limits and throughput as usage patterns stabilize.

Why it matters for users and the open web

For users, the value of Gemini 3 is simple: fewer steps to a usable answer. For instance, a query such as “compare compact SUVs with best warranty and safety scores” can return a ranked table with scoring methodology, source links, and a shareable summary. That’s a whole lot more actionable than a list of blue links.

For the web ecosystem, the consequences are more complex. Generative results may lower click-through for simpler questions; however, a richer UI with built-in sourcing can also give credible publishers greater prominence. Research from the Reuters Institute shows that users are eager to understand where information comes from. If Gemini 3 consistently displays citations and lets readers drill down when they want to, it could help preserve valuable paths back to original reporting and expert content.

Early takeaways and questions about Gemini 3 in Search

Gemini 3 positions AI Mode in Search as a full-fledged task assistant, not merely a way to reach information. The deeper retrieval, smarter model routing, and Generative UI feel like meaningful improvements. The big questions now concern durability and discipline: Can the system consistently keep answers anchored in high-quality sources? And can it produce rich results at scale without performance hiccups? If early access is any indication, everyday search is about to get a lot more capable.