A fresh hint in the software for Samsung’s Galaxy Ring suggests the smart ring could act as a hands-on controller for future Android XR glasses. A newly uncovered string in the Galaxy Ring Manager app reads “Ring gesture for glasses,” which suggests Samsung wants to extend its wearables into spatial computing without making us flail our hands around in the air.

What the Galaxy Ring’s code reveals about XR control

APK sleuthing on the latest Galaxy Ring Manager update turned up one lone but telling reference to glasses-specific gestures. On its own, the line says nothing about functionality, timing, or supported devices. Combine it with Samsung’s public acknowledgment that it is working on AI-equipped Android XR glasses with Google and eyewear partners Warby Parker and Gentle Monster, and the pieces start to fit: the ring appears set to become a discreet input device for head-worn displays.

Samsung already offers a double-pinch gesture on the Galaxy Ring for simple phone commands like taking photos or silencing alarms. Previous patent applications have described more extensive cross-device interaction, such as controlling outside screens and moving data between devices. So it makes a lot of sense that there would be a gesture set targeted at glasses.

Why a smart ring is a practical controller for XR

XR depends on fast, dependable input. Camera-based hand tracking can feel like magic, but it is sensitive to lighting and occlusion, and it tires the arms. A controller worn on the finger offers tactile feedback without the hassle of bulky handheld controllers. Apple’s Vision Pro relies on eye tracking and pinch gestures. Meta blends touch, voice, and hand tracking on Quest and its camera glasses. A smart ring falls in the sweet spot: low-profile, always-on, and socially acceptable in public spaces.

Market momentum supports the move. Industry watchers expect an XR resurgence as platforms mature and everyday wearability improves. (For the record, smart rings are gaining ground thanks to multi-day battery life and a health-tracking focus that complements rather than replaces smartwatches.) Marrying the two categories could produce a sticky hardware combination: buy the glasses for immersive apps, and keep the ring on so they’re easy to use.

Possible ring gestures and how they might be used

If Samsung expands on its existing gesture vocabulary, expect basic, learnable inputs that reduce accidental activations:

- Double pinch to select or capture content in the glasses UI.

- Pinch-and-hold to drag, resize, or pin windows in space.

- Triple tap for contextual actions (dismiss a notification, switch to a recent app, open the apps list).

- Gentle rotation for volume control or fast scrolling on long pages, with no mid-air swipes required.

Privacy is another angle. Making a big show of pinching the air at a café is anything but subtle, while a small ring gesture draws little attention. And for accessibility, ring-based input can reduce dependence on accurate hand detection, whose reliability can vary with lighting and skin tone.

How Galaxy Ring gestures could work under the hood

The Galaxy Ring uses Bluetooth Low Energy, which is ideal for low-power, low-latency gestures. Sub-100 millisecond input round-trips are also possible with optimized connection intervals and on-device event detection, and should suffice for snappy XR interfaces. Samsung may decide to layer device discovery and continuity across the Galaxy ecosystem, in which case the ring could automatically shift focus from phone to glasses as you slide the frames on.
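To put the sub-100 millisecond figure in context, here is a rough latency budget sketched in Python. Bluetooth Low Energy connection intervals are multiples of 1.25 ms between 7.5 ms and 4 s; everything else here is an assumption for illustration, including the function names, the 30 ms on-ring detection time, and the 20 ms the glasses take to respond. It models only the one-way path from gesture to on-screen response and ignores retransmissions.

```python
# Back-of-the-envelope latency budget for one ring gesture event.
# All stage timings used below are illustrative assumptions, not measurements.

BLE_INTERVAL_UNIT_MS = 1.25    # connection intervals are multiples of 1.25 ms
BLE_MIN_INTERVAL_MS = 7.5      # shortest connection interval BLE allows
BLE_MAX_INTERVAL_MS = 4000.0   # longest connection interval BLE allows


def connection_interval_ms(units: int) -> float:
    """Convert a connection interval in 1.25 ms units to milliseconds."""
    interval = units * BLE_INTERVAL_UNIT_MS
    if not BLE_MIN_INTERVAL_MS <= interval <= BLE_MAX_INTERVAL_MS:
        raise ValueError(f"{interval} ms is outside the BLE interval range")
    return interval


def worst_case_latency_ms(interval_units: int, detect_ms: float, handle_ms: float) -> float:
    """Detection time + wait for the next connection event + handling time.

    A freshly detected gesture may have to wait up to one full connection
    interval before the radio gets its next chance to send it.
    """
    radio_wait_ms = connection_interval_ms(interval_units)
    return detect_ms + radio_wait_ms + handle_ms


if __name__ == "__main__":
    for units in (12, 24, 80):
        total = worst_case_latency_ms(units, detect_ms=30.0, handle_ms=20.0)
        print(f"{connection_interval_ms(units):6.2f} ms interval -> {total:6.2f} ms worst case")
```

With these assumed timings, 15 ms and 30 ms intervals keep the worst case at 65 ms and 80 ms, while a 100 ms interval pushes it to 150 ms. That is why the choice of connection interval matters as much as on-device detection speed.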

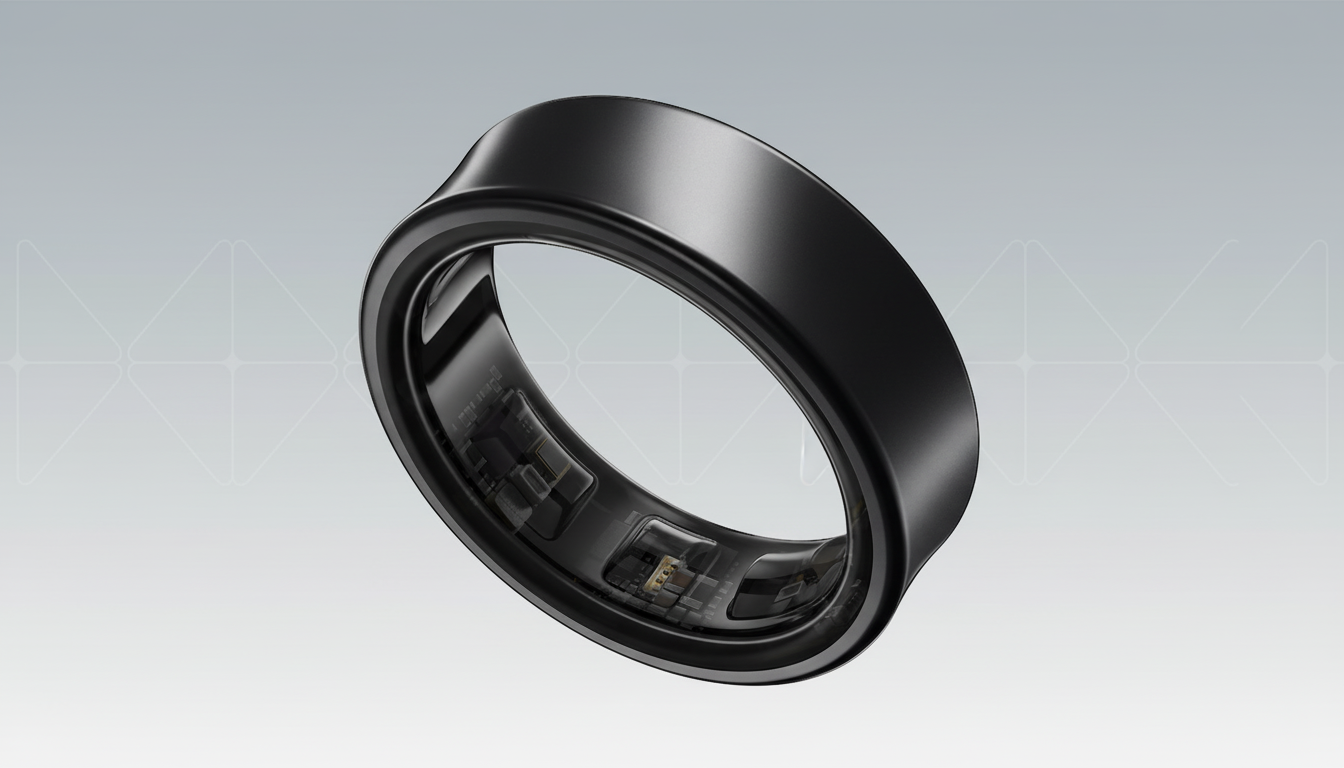

Sensors already used for activity tracking, namely accelerometers and gyroscopes, can be repurposed to identify micro-motions such as pinches and twists. Patents also detail combining sensor data with machine learning to reduce false positives. The current ring doesn’t specifically tout ultra-wideband (try saying that five times fast), but it belongs to a larger Samsung device ecosystem that does support UWB. If future versions of the ring incorporate the technology, it could one day assist with spatial anchoring or pointing.
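As a rough illustration of how motion data could become a gesture, the Python sketch below flags a double pinch when two short acceleration spikes arrive within a time window. This is a simple threshold heuristic chosen for clarity, not Samsung’s method or the machine-learning approach the patents describe. The names `Sample`, `detect_double_pinch`, and `synth_trace`, along with every threshold value, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    t_ms: int    # timestamp in milliseconds
    ax: float    # acceleration on each axis, in g
    ay: float
    az: float


def magnitude(s: Sample) -> float:
    """Overall acceleration magnitude, independent of ring orientation."""
    return math.sqrt(s.ax ** 2 + s.ay ** 2 + s.az ** 2)


def detect_double_pinch(samples, spike_g=1.8, min_gap_ms=80, max_gap_ms=400) -> bool:
    """Return True if two spikes occur between min_gap_ms and max_gap_ms apart.

    Thresholds are assumed values; a real device would need tuning and
    probably a learned model to reject everyday hand movement.
    """
    spike_times = []
    above = False
    for s in samples:
        is_spike = magnitude(s) >= spike_g
        if is_spike and not above:       # count only the rising edge of a spike
            spike_times.append(s.t_ms)
        above = is_spike
    return any(
        min_gap_ms <= second - first <= max_gap_ms
        for first, second in zip(spike_times, spike_times[1:])
    )


def synth_trace(spike_times_ms, duration_ms=1000, step_ms=10):
    """Synthetic trace: a hand at rest (1 g) with brief 2.5 g spikes."""
    spikes = set(spike_times_ms)
    return [
        Sample(t, 0.0, 0.0, 2.5 if t in spikes else 1.0)
        for t in range(0, duration_ms, step_ms)
    ]


if __name__ == "__main__":
    print(detect_double_pinch(synth_trace([100, 300])))  # two taps, 200 ms apart
    print(detect_double_pinch(synth_trace([100])))       # a single tap
    print(detect_double_pinch(synth_trace([100, 800])))  # taps too far apart
```

The minimum gap guards against one tap being counted twice, and the maximum gap keeps two unrelated bumps from registering as a gesture. Both are the kind of false positive the patents reportedly aim to suppress with more sophisticated models.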

What this could mean for Android XR’s input ecosystem

By standardizing Android for XR, Google provides hardware partners with a shared baseline of inputs, sensors, and app frameworks. Should Samsung deliver a slick ring-as-controller experience that works indoors, outdoors, and on the go, without the friction of dedicated hand controllers (or bad tracking), it would set an interesting precedent for the platform: a wearables-first control model.

There’s a commercial upside too. Bundling or co-marketing the ring with glasses, for example, could boost attach rates in both categories. Small quality-of-life features, such as instant photo capture from your glasses with a finger pinch or fast navigation with a twist, sometimes nudge people toward adoption more than big, sexy specs.

Proceed with caution: code hints aren’t product promises

As with any app teardown, features spotted in code may never see the light of day: they can be experiments or leftover placeholders, or later rework may change their functionality completely. The string is a statement of intent, not a ship date. But the strategic rationale is sound, the ecosystem partners are lined up, and Samsung already has a working gesture vocabulary on the ring. If the company delivers, the most useful controller for Android XR glasses might be the one you hardly notice on your finger.