Apple is making its own hardware part of the show. Producers will integrate four iPhone 17 Pro units into the live production of a Friday Night Baseball game on Apple TV+ between the Boston Red Sox and Detroit Tigers at Fenway Park next week, offering up-close views that supplement, rather than replace, the main broadcast cameras.

One phone will go inside the Green Monster in left field and another into the home dugout; the others will move among the fans.

An on-screen note will tell viewers when an iPhone feed is being shown, so there is no doubt that a shot was taken with a phone.

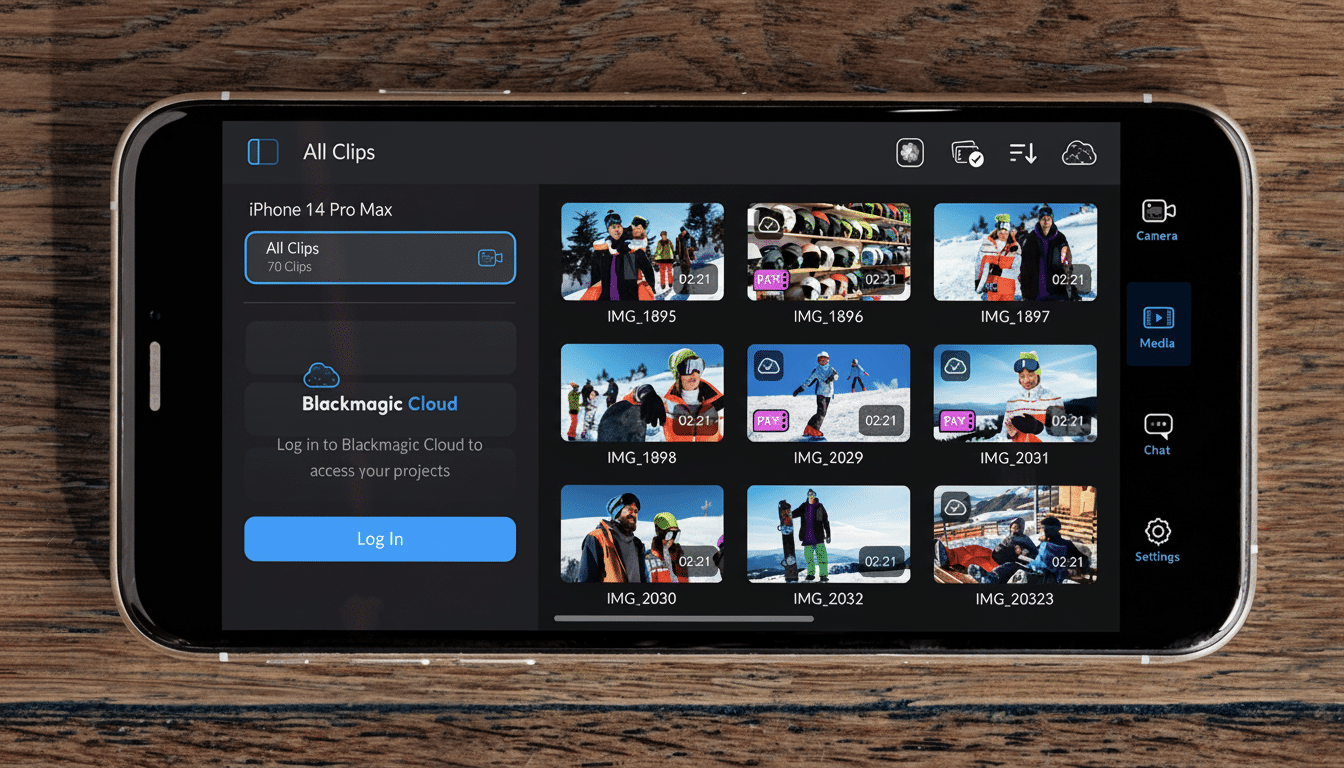

The phones will be operated through Blackmagic Design’s pro camera app and will shoot in 1080p at 59.94 frames per second, matching the production’s existing cameras so that switching is clean and replay integration is seamless.

Why Apple Is Getting Into The Phone-Game

Live sports is a game of angles. Traditional broadcast rigs are great at long-lens action and reliable coverage, but they can rarely squeeze into tight spaces or go where fans are the way a pocketable camera can. iPhones give Apple close-to-the-action angles (inside a hole in the wall, inches from the bat rack, or in the middle of a celebration) that shoulder-mounted camera systems can’t get easily, if at all.

Specialty “beauty cams” and shallow-depth-of-field mirrorless setups have been enlisted by networks in recent seasons to give shows a more cinematic flavor. Sports Video Group has detailed how those shots heighten emotion without detracting from the main broadcast. What Apple is doing is in the same spirit: use compact, stabilized cameras to augment the story, without asking them to cover play-by-play the way a big-glass lens on the sideline does.

The iPhone 17 Pro’s Place In The Live Workflow

Matching the industry-standard 59.94p frame rate matters. It keeps motion cadence in line with the hard cameras and makes playback easier on replay servers, where off-speed footage can stutter. Shooting 1080p also makes sense: most live baseball programming is delivered in 1080p HDR or 720p SDR, and shooting 1080p on the phone keeps scaling and latency to a minimum.
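To put a number on why an off-speed source is a problem, here is a rough sketch of the arithmetic. It relies on the fact that "59.94" is shorthand for the exact rate 60000/1001 frames per second. The 60.00 fps comparison source is a hypothetical chosen for illustration, not something described for this broadcast, and the function names are made up for this example.

```python
from fractions import Fraction

# "59.94p" is shorthand for the exact broadcast rate 60000/1001 frames per second.
BROADCAST_FPS = Fraction(60000, 1001)


def frame_duration_ms(fps: Fraction) -> float:
    """Length of a single frame in milliseconds at the given frame rate."""
    return float(1000 / fps)


def seconds_until_one_frame_drift(source_fps: Fraction, target_fps: Fraction) -> float:
    """Seconds until a source and a target rate disagree by one whole frame.

    A frame synchronizer or replay server has to drop or repeat a frame
    roughly this often when the two rates do not match.
    """
    difference = abs(source_fps - target_fps)
    if difference == 0:
        raise ValueError("rates match exactly; no drift accumulates")
    return float(1 / difference)


if __name__ == "__main__":
    # Hypothetical mismatch: a camera running at a true 60.00 fps.
    hypothetical_source = Fraction(60)
    print(f"frame duration: {frame_duration_ms(BROADCAST_FPS):.3f} ms")
    drift = seconds_until_one_frame_drift(hypothetical_source, BROADCAST_FPS)
    print(f"one frame of drift every {drift:.2f} s")
```

Under these assumptions a true 60 fps source would gain one full frame on the 59.94p program roughly every 16.7 seconds, which would show up as a periodic dropped frame. A phone recording at 59.94p avoids that correction entirely.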

Feeds from the Blackmagic app are sent back into the production. On the production side, camera shading is handled largely as usual, with standard 40 or 50 Mbps production formats giving shaders control over the image through broadcast paint values.

Phones don’t offer genlock, but modern switchers frame-sync every input, so iPhone sources can be cut live like any other camera.

Expect the phones’ built-in stabilization and quick autofocus to do a lot of the heavy lifting in tabletop or handheld positions. That combination is well suited to reaction shots in the dugout or rapid pans to catch someone nabbing a foul ball, when agility trumps brute optical reach.

What Viewers Will See During the Game Broadcast

The production team will cut to iPhone angles selectively. Think quick, intimate moments: the pitcher’s quiet focus between innings, a manager glancing toward the bullpen, or the kinetic energy of Fenway’s bleachers. An on-screen label will indicate when the feed is coming from an iPhone 17 Pro, so the experiment is transparent to viewers.

This broadcast is also Apple’s final Friday Night Baseball game of the season, part of a rights deal that runs through 2028. According to MLB, the telecast will reach fans in 60 countries, and it will not be subject to regional blackouts for Apple TV+ subscribers.

A Strategic Showcase for Apple and MLB Collaboration

For Apple, the move is both a production test and a product demonstration before a global audience. The iPhone 17 Pro’s multicamera system, computational imaging, and pro-grade controls are being put through the wringer in one of the toughest environments in media: a live, unscripted sports broadcast that demands both camera agility and reliability.

For MLB, more camera positions mean more stories to tell and more ways to engage fans. The league has encouraged experimentation with field-level views over the last few years, and industry forums such as SMPTE and NAB Show have demonstrated that smartphone-class sensors are now practical in broadcast pipelines, given proper color management and sync.

A Quiet Earlier Trial Offers Lessons for Broadcasters

Apple previously slipped iPhones into a Friday Night Baseball telecast between the San Francisco Giants and Los Angeles Dodgers without fanfare. Quiet tests like these are a typical part of broadcast engineering: prove the path, gather latency and color data, then scale up with more prominent placements and clear viewer labeling.

What Comes After, If It Works for Live Baseball

If integration goes well (clean cuts, compelling moments, and few visible artifacts), expect to see more mobile units quietly placed in specialty locations throughout ballparks, from bullpen benches to concourse ramps.

The cost of adding an extra angle goes down, and the editorial possibilities increase.

None of this supplants the backbone of sports coverage: broadcast lenses, RF cameras, super-slow-motion, and robust replay systems. But as Apple and MLB deepen their relationship, the iPhone may move from novelty to reliable specialty camera, earning a permanent spot on the shot card alongside the jibs and robos.