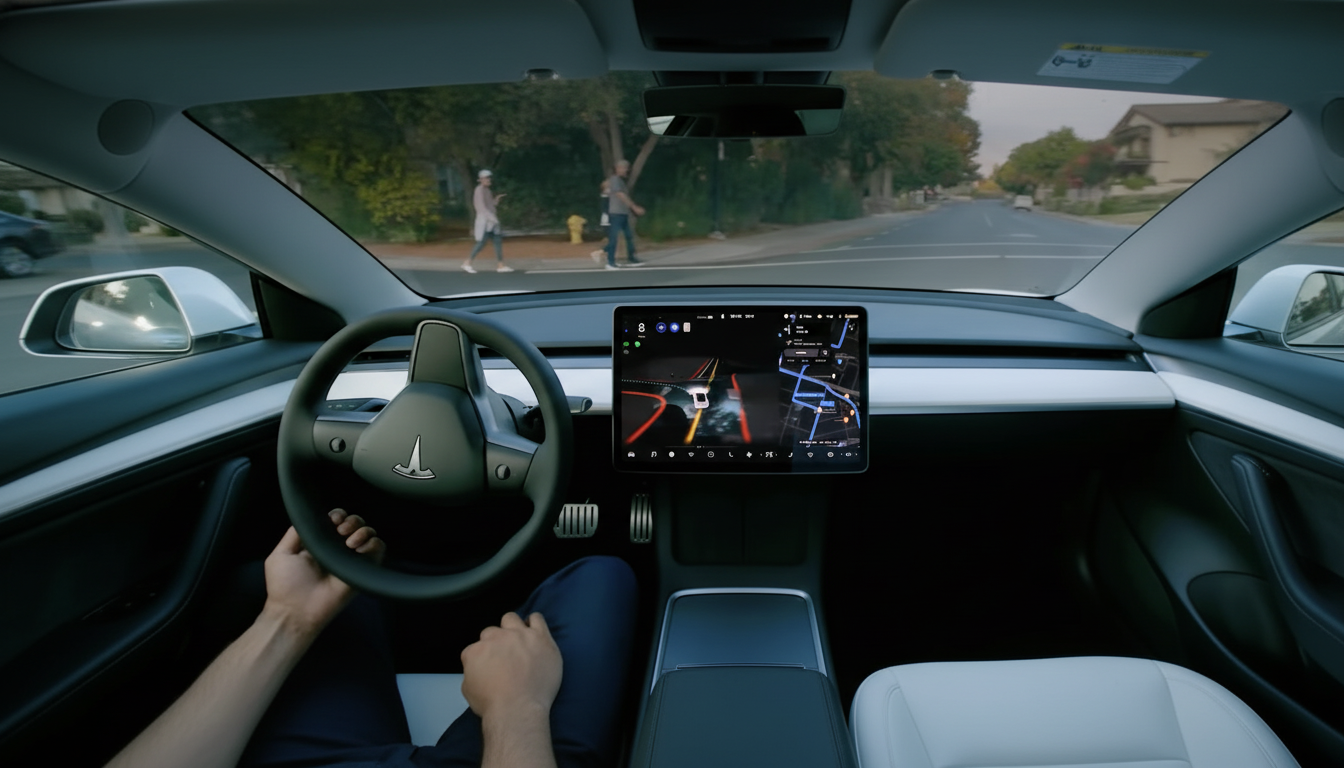

Auto Safety Regulators Inquire About Tesla’s Mad Max Setting

U.S. auto safety regulators have asked Tesla for information about its recently released Mad Max driving profile, a setting in its Full Self-Driving (FSD) software update that appears to favor higher speeds and more aggressive lane changes. The National Highway Traffic Safety Administration (NHTSA) confirmed that it has contacted the company, Reuters reported. The request is a routine step that could eventually lead to a formal defect investigation if safety risks are identified.

The agency reiterated that drivers remain responsible for obeying traffic laws even when advanced driver-assistance systems are engaged. The name alone raises eyebrows: it evokes a film franchise known worldwide for vehicular mayhem, an unfortunate association for a driver-assistance feature.

What Tesla’s Mad Max Mode Does in Real-World Driving

Tesla’s release notes, as cited by enthusiast publications that track its software updates, describe Mad Max as a new speed profile with higher target speeds and more aggressive overtaking than the existing Hurry setting. In practice, the system passes more readily and cruises faster when conditions allow.

Owners have already posted videos on social media of cars in Mad Max mode traveling well above posted limits and rolling through stop-controlled intersections. In one widely shared clip, a Tesla accelerates to 79 mph on a road with a 50 mph limit; in another, the car barely pauses at an intersection before proceeding. Anecdotal as they are, these clips go to the heart of regulators’ question: does the software make traffic violations easier or more likely?

An Escalation Amid Continued Scrutiny of FSD

The NHTSA inquiry comes as Tesla faces broader scrutiny of its driver-assistance systems. In early 2023, Tesla recalled about 363,000 vehicles equipped with FSD Beta to correct behaviors that could lead to running stop signs, entering intersections without due caution, or speeding. Later that year, it pushed an over-the-air fix to roughly two million vehicles to strengthen Autosteer’s driver monitoring and alerts.

NHTSA’s standing order requiring crash reporting for Level 2 systems has consistently shown Tesla accounting for an outsized share of reported incidents, though that reflects the size of its fleet and its always-connected telemetry as much as any comparative risk. Still, the data show how small design decisions in partial automation can have large real-world consequences at scale.

In a separate action, the California Department of Motor Vehicles has since 2022 challenged Tesla’s marketing of Autopilot and FSD, arguing that the branding suggests more autonomy than the technology delivers. Safety advocates, including the Insurance Institute for Highway Safety, have warned that names and user interfaces that overpromise can lead to misuse and, ultimately, inattention on the road.

What Investigators Will Examine in Tesla’s Mad Max

When NHTSA opens an inquiry with a manufacturer, it typically requests detailed documentation of how the feature works, its operating limits, and its safeguards. For Mad Max, central questions will include how speed limits are determined and enforced, whether the system can be set to exceed posted limits, how it handles stop signs and traffic lights, and what driver monitoring applies when the software makes aggressive lane changes or accelerates hard.

Investigators will also assess whether the design poses an unreasonable risk to safety (NHTSA’s threshold for finding a defect), even though the person in the driver’s seat remains legally responsible. Possible outcomes range from no action to a formal preliminary evaluation, then an engineering analysis and, if warranted, a recall that Tesla could likely carry out over the air.

Why the Name and Tuning of a Driving Mode Matter

Speed and passing are among the most consequential decisions a driver-assistance system makes. Research consistently shows that higher travel speeds sharply increase crash severity, so even small increases in risk can add up across a large fleet. In that light, a feature designed to encourage faster driving, with branding reminiscent of cinematic chaos, is likely to draw attention from both safety regulators and policymakers.

There is also a human-factors dimension. Partial automation can reduce workload in predictable conditions but may also erode vigilance, particularly when the system appears confident enough to push the envelope. Keeping a driver engaged depends on careful design of how the system communicates: best practices emphasize clear language, conservative defaults, and unmistakable handoff cues. Aggressive profiles push in the opposite direction.

The Stakes for Tesla and Drivers as Scrutiny Rises

Should NHTSA find that Mad Max substantially increases the risk of violations or near-collisions, Tesla could be pushed to update the software, tighten the profile’s speed limits, or remove it altogether. Over-the-air updates let the company iterate quickly, but each high-profile inquiry adds pressure on its overall safety case for FSD and on how it markets the system.

For drivers, the takeaway has not changed: whatever mode is engaged, keep your eyes on the road and your hands ready. Roughly 40,000 people die on U.S. roads each year, so small choices in how a feature is named and tuned add up. Regulators are signaling that they will police the line between driver assistance and recklessness, particularly when the software seems ready to cross it.