Federal auto-safety regulators are opening an investigation into Waymo after reports that its driverless vehicles have failed to stop for school buses, a scenario that is one of the highest-stakes tests yet of what autonomous cars can handle on American roads.

NHTSA Opens Probe After Bus Violations Are Reported

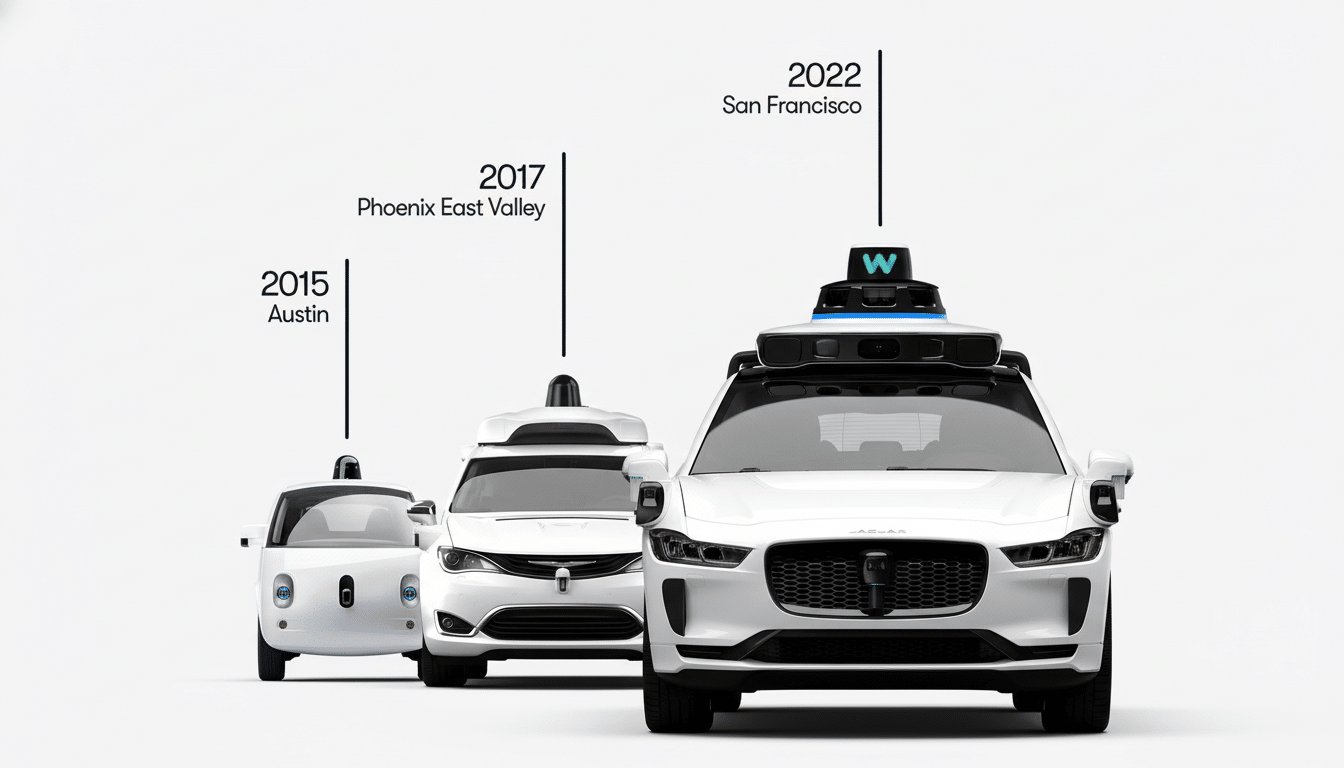

The National Highway Traffic Safety Administration said that it had requested information from Waymo about several incidents in which the company’s self-driving taxis were suspected of passing stopped school buses with extended stop signs and flashing red lights. According to Reuters, at least 19 such incidents have been recorded since Waymo launched public rides in Austin, Texas.

Regulators had already been scrutinizing Waymo’s performance at traffic controls, including a video-recorded episode on an Atlanta street in which a vehicle maneuvered around a school bus that was stopped to load students. The new request seeks more technical detail on how Waymo’s system detects a school bus, interprets its stop-arm signals and applies geofenced rules that restrict vehicle behavior during school hours.

NHTSA’s Office of Defects Investigation can open inquiries and, if it finds safety defects, seek software fixes or recalls. Most of these autonomous-vehicle probes end with voluntary fixes, but scrutiny is mounting as self-driving services expand into more complex urban environments.

Austin School District Asks Waymo To Pause At Bus Times

The Austin Independent School District has asked Waymo to temporarily suspend robotaxi operations during morning pickups and afternoon drop-offs. The request followed reports from several officials and a bystander of a close call in which a student crossed moments before an autonomous vehicle drove past a stopped bus and through a crosswalk where other cars had stopped.

Waymo says it pushed a software update designed to improve how its vehicles detect and respond to school buses. District officials, however, say several incidents occurred after the update, prompting them to question whether the fix was working everywhere it needed to. It remains unclear whether Waymo has changed its service in response to the district’s requests. TechCrunch reported that Waymo believes its changes have made its vehicles safer, but the company has not committed to restricting service hours as the district requested.

What The Law Says, And Why AVs Have a Hard Time With It

Every state requires drivers to stop when a school bus extends its stop arm and turns on its flashing red lights, with few exceptions, such as for traffic on the opposite side of a divided highway. Texas law is explicit: motorists must remain stopped until the bus resumes moving, its driver signals that it is safe to proceed, or the lights stop flashing. Penalties are steep, and violations can bring fines and consequences for a driver’s license.
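To make the rule concrete, here is a minimal Python sketch of the stop condition as summarized above. It is an illustrative simplification, not statutory text and not a description of how Waymo’s software works. The `BusObservation` fields and the `must_remain_stopped` function are hypothetical, and the sketch assumes perception has already classified the bus and its signals correctly, which is the hard part for automated systems.

```python
from dataclasses import dataclass


@dataclass
class BusObservation:
    """Hypothetical snapshot of what a vehicle perceives about one school bus."""
    stop_arm_extended: bool
    red_lights_flashing: bool
    bus_moving: bool
    driver_signaled_proceed: bool
    on_separate_roadway: bool  # True if a median or barrier divides us from the bus


def must_remain_stopped(obs: BusObservation) -> bool:
    """Return True if the stop-for-school-bus rule, as summarized above, applies.

    Simplified reading: the duty to stop begins when the bus displays its stop
    signals and ends when the bus moves, its driver waves traffic on, or the
    signals turn off. Traffic on a separate roadway of a divided highway is exempt.
    """
    if obs.on_separate_roadway:
        return False
    # Conservative assumption: either signal on its own counts as active.
    signals_active = obs.stop_arm_extended or obs.red_lights_flashing
    if not signals_active:
        return False
    # Any one release condition ends the obligation to stay stopped.
    released = obs.bus_moving or obs.driver_signaled_proceed
    return not released


stopped_bus = BusObservation(
    stop_arm_extended=True,
    red_lights_flashing=True,
    bus_moving=False,
    driver_signaled_proceed=False,
    on_separate_roadway=False,
)
print(must_remain_stopped(stopped_bus))  # True
```

Treating either the stop arm or the red lights as sufficient to trigger a stop is a deliberately cautious design choice; actual statutes and exceptions vary by state.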

School buses are a challenging edge case for automated systems. The vehicle has to detect and classify a bus from many angles, infer whether its lights are amber (preparing to stop) or red (stopped), locate the stop arm, which may be partly hidden by traffic, and interpret the roadway context, including the rules for oncoming lanes and the exceptions for divided roadways. It also has to handle dynamic scenes: children darting into lanes, groups of pedestrians on corners, and other drivers who may brake suddenly or pass where they are not allowed.

School transportation professionals say the stakes are high. An annual national survey by the National Association of State Directors of Pupil Transportation Services consistently records hundreds of thousands of suspected stop-arm violations in a single day of observation, which extrapolates to tens of millions over a school year. Whether a human or software is driving, passing a stopped school bus is among the most dangerous mistakes a vehicle can make.

Waymo’s Technology And The Regulatory Stakes

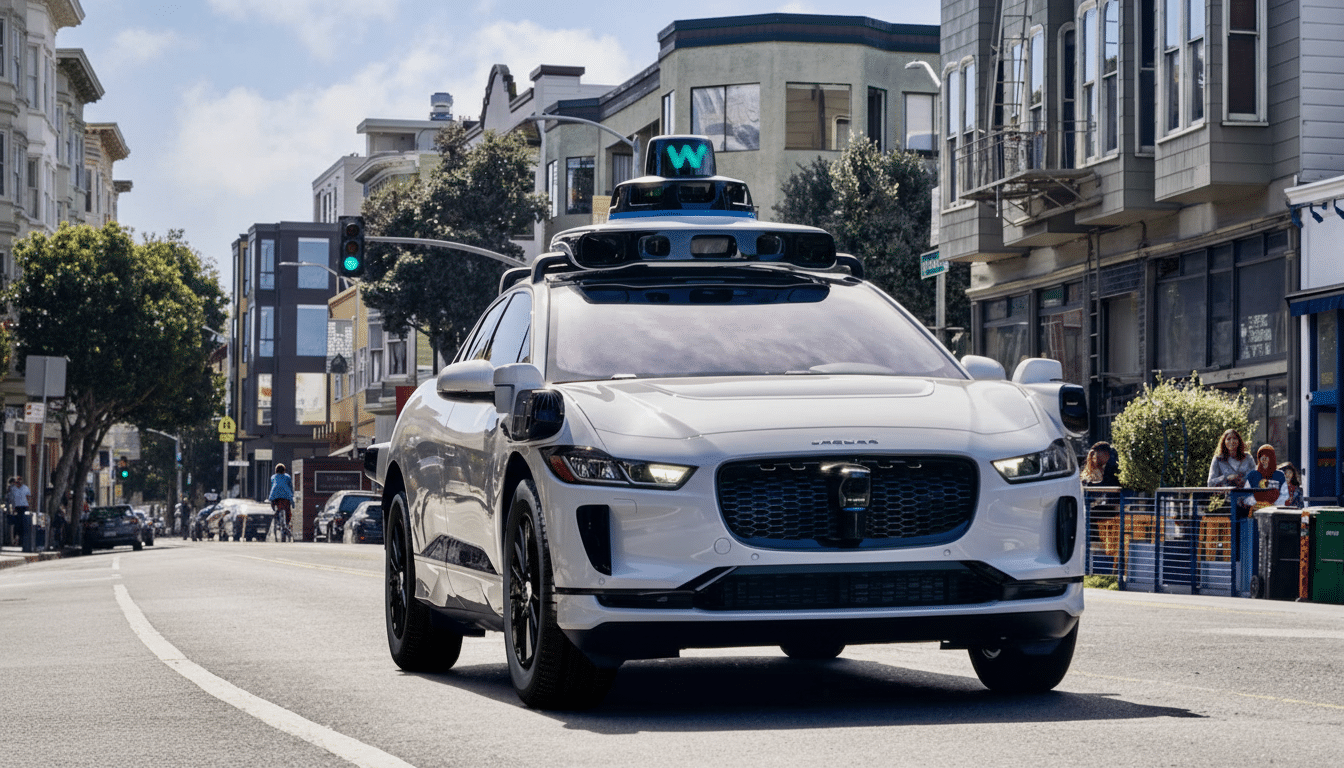

Waymo’s vehicles fuse data from lidar, radar, cameras and detailed maps to perceive the road and predict how other road users will behave. The company says it takes a layered approach to safety, combining policy-based driving rules with over-the-air software updates that address new risks as they are discovered. The school-bus incidents described here raise the question of whether those rules-based behaviors need to be more tightly tied to what the cameras see, and to the handling of rare events, during routine school-day operations.

Regulators will look for evidence that Waymo’s software now consistently treats an extended stop arm as a hard stop condition, correctly recognizes when the divided-roadway exception applies, and behaves conservatively around children and crosswalks. Investigators typically request offloaded vehicle data records, event video, system design-intent documentation and validation results showing that performance meets expectations.

The broader self-driving industry is watching closely. A finding that school-bus compliance is still unreliable could lead to operating restrictions around schools, tighter geofencing or mandated software updates across entire fleets. Conversely, if Waymo performs well after its update, that would strengthen the case to regulators that software fixes can quickly address these rare but potentially deadly failures.

What Comes Next in the NHTSA Investigation of Waymo

NHTSA’s investigation is still in the fact-gathering stage. The agency can escalate to an engineering analysis if concerns persist, or close the case if it concludes the risk has been addressed. Until then, riders, parents and school officials in Austin will be looking for real-world evidence on the pavement: robotaxis that come to a complete stop as soon as a school bus extends its stop arm, and that wait as long as it takes for children to cross the street.