Three veterans of Google’s moonshot lab are building an AI that runs quietly in the background of your day, keeping track of what’s said so you can focus on having better conversations, and investors are betting millions of dollars that it works.

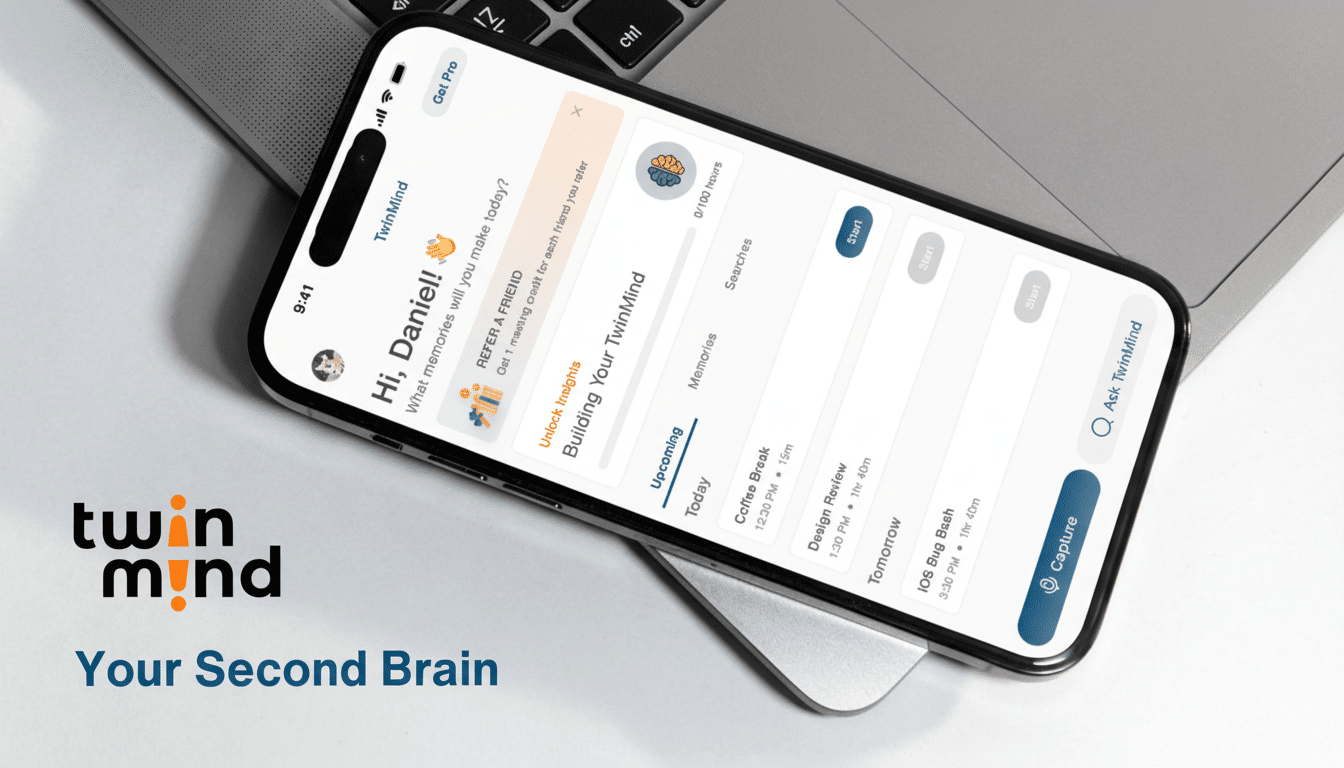

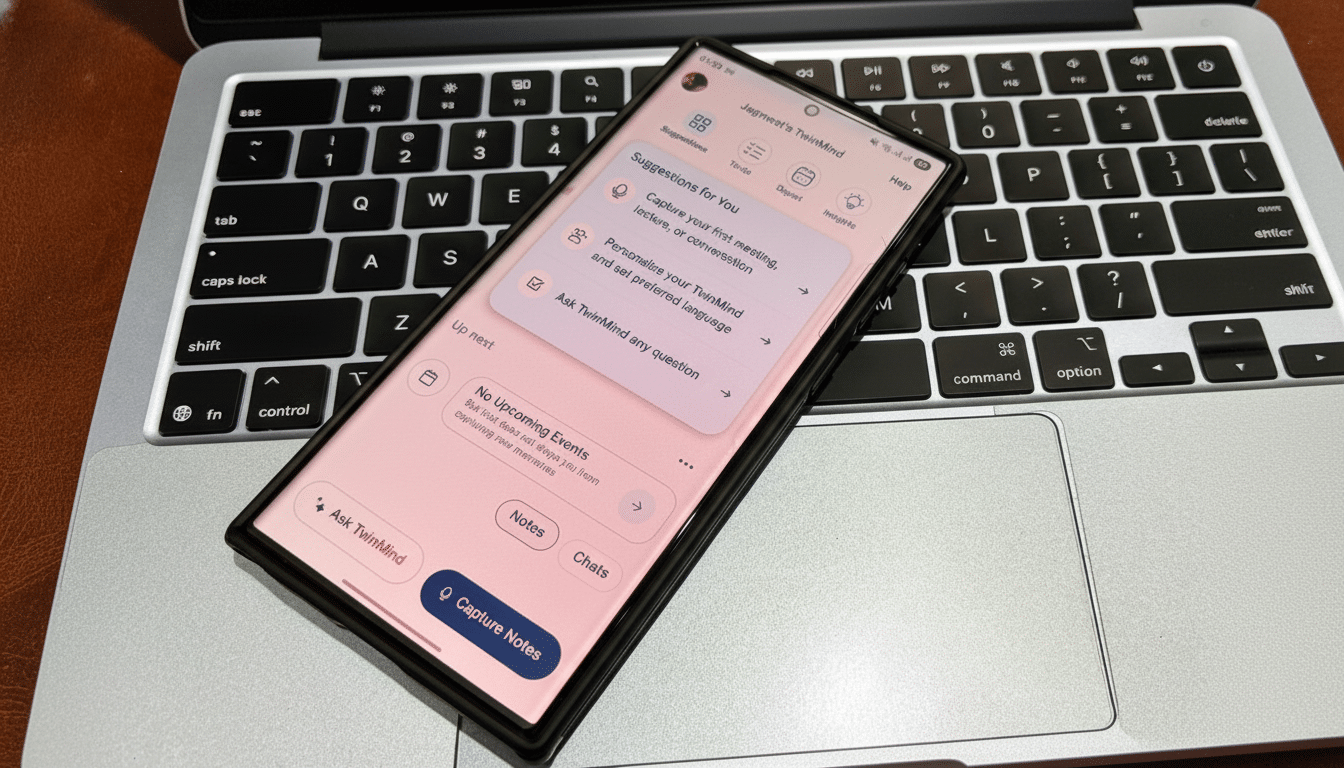

Their startup, TwinMind, aims to be a “second brain” that listens (with permission), grasps context and helps turn spoken moments into searchable memories, tasks and answers.

The $5.7 million seed round is led by Streamlined Ventures, with participation from Sequoia Capital and other backers, including Stephen Wolfram. The company is launching on Android and iPhone and is also rolling out a new speech model and a developer API.

An always-on memory layer for your life

TwinMind is a background service that listens to ambient speech to build a personal knowledge graph. The hallway chat, the meeting, the lecture and the passing thought all become structured notes, to-dos and answers you can query later. The app can transcribe on device in real time, run in continuous mode for about 16 to 17 hours and live-translate in more than 100 languages.

To make ambient recording work while an iPhone is locked, the team built a low-level service in pure Swift that runs natively in the background, a technical route that many cross-platform competitors cannot easily take. That sets TwinMind apart from meeting-specific tools such as Otter, Granola and Fireflies, which typically activate only during scheduled calls rather than throughout the day.

Co-founders Daniel George (CEO), Sunny Tang and Mahi Karim began building the system in 2024. George came up with the idea after spending his days in back-to-back meetings at a banking job, and prototyped “a script that transcribed the audio he was recording locally and fed the transcript and summaries into a large language model.” The prototype quickly learned enough about his projects to start writing usable code. Turning it into a phone-first app was the next logical step.

Context beyond the microphone

A Chrome extension adds digital context on top of it all. Using vision AI, it can “read” open tabs and interpret content from email, Slack and Notion to add to the user’s knowledge graph. The company even used the tool internally, ranking more than 850 internship applications by pulling resumes and profiles from open tabs.

TwinMind has more than 30,000 users, about half of whom use the app monthly, according to the company. Between 20% and 30% of users also have the Chrome extension installed. Although the United States is its biggest market, the app is growing in India, Brazil, the Philippines, Ethiopia, Kenya and across Europe. Professionals make up the largest share of users, though only narrowly, followed by students and personal users.

Privacy by design — within practical limits

TwinMind emphasizes that it doesn’t train models on users’ data. Audio is discarded on the fly, only text transcripts are stored locally, and cloud backup is opt-in. The company frames its approach as a step beyond cloud-heavy note-takers, with a focus on on-device processing and user control.

Always-on listening still raises difficult issues. Recording-consent laws vary by jurisdiction, and digital-rights groups such as the Electronic Frontier Foundation have warned that ambient capture can be dangerous without proper safeguards. But the trend line is clear: speech recognition error rates keep falling, and the latest edition of Stanford HAI’s AI Index describes rapid improvement in multilingual transcription and diarization quality. From a technical and economic standpoint, that makes the kind of widespread, low-friction capture TwinMind is betting on increasingly practical.

A new speech model and a play for developers

To supplement the app, TwinMind is also launching Ear-3, a speech model that supports more than 140 languages and that the company says achieves a 5.26% word error rate and a 3.8% diarization error rate.

Ear-3 is a fine-tuned blend of open-source models trained on curated, human-annotated media such as podcasts and films. It runs in the cloud and is available through the API at $0.23 per hour; when the device is offline, the app falls back to the on-device Ear-2 model.
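Word error rate is conventionally defined as the word-level edit distance between a model’s transcript and a human reference, counting substitutions S, deletions D and insertions I, divided by the number of reference words N: WER = (S + D + I) / N. A 5.26% WER therefore corresponds to roughly 5 word errors per 100 reference words. The Python sketch below illustrates that standard definition only; it is not TwinMind’s evaluation code, the function name `word_error_rate` is hypothetical, and real benchmarks usually normalize case and punctuation before scoring, which this sketch skips. Diarization error rate, the other figure quoted, is a separate time-based metric and is not shown here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words.

    Hypothetical helper illustrating the textbook WER definition; no text
    normalization (casing, punctuation) is applied.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum number of edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost == 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    reference = "the quick brown fox jumps over the lazy dog"
    hypothesis = "the quick brown fox jumped over a lazy dog"
    # Two substitutions against nine reference words
    print(f"WER: {word_error_rate(reference, hypothesis):.2%}")  # prints "WER: 22.22%"
```

Because N counts only reference words, WER can exceed 100% when a transcript contains many spurious insertions.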

The company is also introducing a $15-per-month Pro tier with a larger 2-million-token context window and 24-hour support. The free tier keeps unlimited transcription and on-device speech recognition, a bold bet on winning mass adoption first and charging later for premium features and enterprise API usage.

Backers, pedigree and what’s next

TwinMind has a post-money valuation of about $60 million and a team of 11 people. It is hiring designers to refine the user experience and business-development staff to scale the API. George’s background includes Google X and earlier academic work applying deep learning to gravitational-wave astrophysics with the LIGO collaboration. He completed his PhD unusually quickly and then spent time at Stephen Wolfram’s research lab. For Wolfram, the relationship has come full circle: TwinMind is the first startup investment he has made.

The big-picture thesis is simple enough: if AI can observe your real-world and digital life as it unfolds, it can anticipate your needs and answer questions with far less prompting. Analysts who track ambient computing and agentic AI have flagged this as a defining shift for productivity tools, though its success will depend on companies getting privacy, battery life and accuracy right in everyday use.

Whether TwinMind becomes the default “memory layer” for professionals and students will depend on execution, and on trust. But with a seed round of nearly $6 million, a multilingual stack and a device-first architecture, the company has clearly staked its claim in the race to build a working second brain.