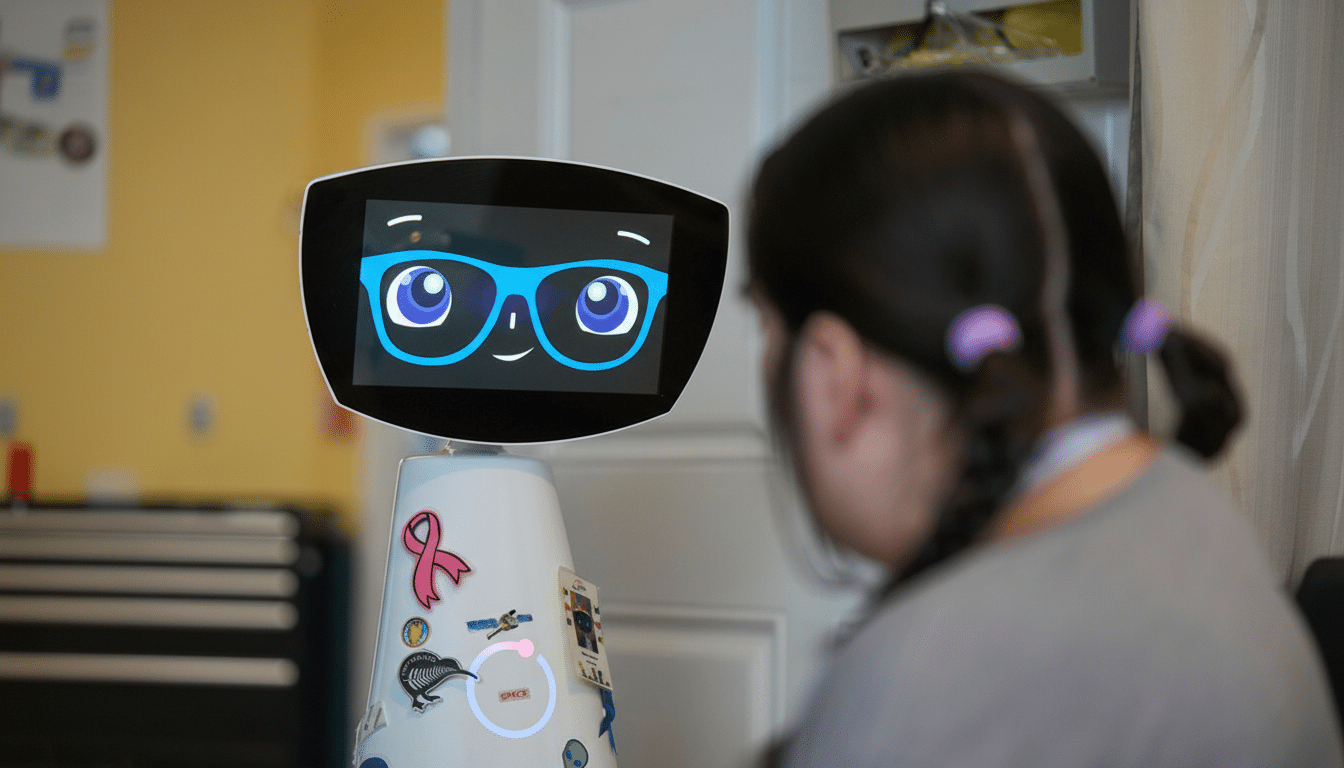

Robyn, an empathetic AI companion built by former doctor Jenny Shao, is launching in the U.S. with a very specific promise: to be supportive and emotionally intelligent — but never a replacement for a clinician.

The iOS app joins a crowded market of AI companions and general-purpose chatbots, but it stands out for two reasons: a safety posture informed by medicine, and a memory architecture designed to more closely mimic how people form and recall personal context.

Why a Doctor Developed an Emotive AI Companion

After seeing how isolation during the pandemic took a toll on patients' cognition and mood, Shao left her Harvard medical residency. Her shift reflects a larger public health concern. The U.S. Surgeon General has said that widespread loneliness is associated with worse cardiovascular, cognitive and mental health outcomes, and the World Health Organization recently made social connection a global priority.

Robyn's design draws on Shao's academic background in memory science. She worked in Nobel laureate Eric Kandel's lab, helping to explain how experiences are encoded biologically. From those principles, Robyn borrows a way of remembering user-specific details, such as preferences, patterns and triggers, without venturing into clinical diagnosis or treatment.

How Robyn Works: Onboarding, Memory, and Guidance

Onboarding feels more like reflective journaling than account creation. New users share their personal goals, how they react to stress and the tone they prefer, and those conversations build a lasting emotional profile. Ask for help with a better morning routine, and Robyn will probe your habits, nudge you toward less screen time early in the day and tailor its suggestions to how you handle friction.

As the relationship grows, the app surfaces insights such as your "emotional fingerprint," your attachment style and your common patterns of self-criticism. The system's "emotional memory" is designed to make future guidance feel more attuned, like a friend who remembers what usually sets you off or calms you down. Unlike general-purpose chatbots, Robyn declines off-mission requests, such as game scores, calculations or trivia, and redirects the conversation back to personal well-being and relationships.

Safety Guardrails and Ethical Boundaries

Shao is clear that Robyn is not a therapist, coach or clinician and should not be used as one. When a conversation signals a crisis, Robyn puts safety first: it directs the user to crisis resources or urges them to seek in-person help right away. This stance aligns with American Psychological Association guidance cautioning against positioning AI as a replacement for mental health care. It also aligns with the NIST AI Risk Management Framework, which emphasizes a well-defined scope and harm mitigation.

The team also aims to limit anthropomorphism, the tendency to ascribe human intent to AI. Its tools include an open and honest account of what Robyn is, a refusal to play romantic roles and cautious boundaries around intimacy. That is a significant differentiator in a category tainted by lawsuits and public backlash, including tragic incidents linked to unsafe interactions and poor escalation protocols.

Market Context and Differentiation Among AI Companions

Robyn sits between two extremes. At one end are broader, more powerful general models like ChatGPT, which can handle all manner of tasks but lack a sense of long-term emotional continuity. At the other are companion tools built to model particular people or characters, such as Replika or Character.AI, where entertainment and social simulation sometimes override guardrails. The company is betting that a narrowly focused, emotionally literate assistant grounded in safety will appeal to users looking for help, not fantasy.

Privacy will be central to trust. Robyn is not a health care provider that must comply with HIPAA, but mental health-adjacent apps have faced enforcement over their handling of sensitive information under the FTC's Health Breach Notification Rule. Earning users' trust will require transparent data practices, plain-language policies and external audits that verify the safety claims.

Funding Plan and Business Model for Robyn’s Launch

The company raised $5.5 million in seed financing from M13 and others, including Google Maps co-founder Lars Rasmussen, early Canva investor Bill Tai, former Yahoo CFO Ken Goldman and xAI co-founder Christian Szegedy. Investors have pointed to the company's emotional memory architecture, which drives learning and nudges, along with its clinical sensibilities.

Robyn is a paid product, priced at launch at $19.99 per month or $199 per year. The team has grown from three people in recent months. The app is currently available only on iOS in the U.S., and plans for other platforms have been hinted at but not confirmed. The pricing places Robyn at the premium end of the wellness category, signaling a focus on depth and reliability over ad-supported scale.

What Success Looks Like for Robyn and Its Users

For Robyn to matter, it will have to show real-world results without overreaching into clinical territory. Those results include kinder social exchanges, healthier habits and earlier de-escalation of crises. Independent assessment, red-team testing and collaboration with academic partners could turn its safety posture from a statement of good intentions into something demonstrated in practice.

The bigger story is cultural. As AI companions creep into daily life, the question is not whether machines can simulate empathy but whether they can do so responsibly, in ways that strengthen human connection. Robyn's medical origins, institutional feel and deliberately narrow scope suggest it has a real chance, as long as safety, privacy and humility remain nonnegotiable.