AI has crossed from experiment to infrastructure, and your IT playbook has to catch up. Gartner projects that by 2026 more than 80% of enterprises will have used generative AI APIs or models, or deployed generative AI‑enabled applications in production, up from under 5% in 2023. That shift changes how you design systems, guard data, manage cost, and prove value. Here are eight updates leaders are pushing into their 2026 roadmaps to survive and thrive in the AI era.

1. Stand Up an AI Governance Backbone for Scale

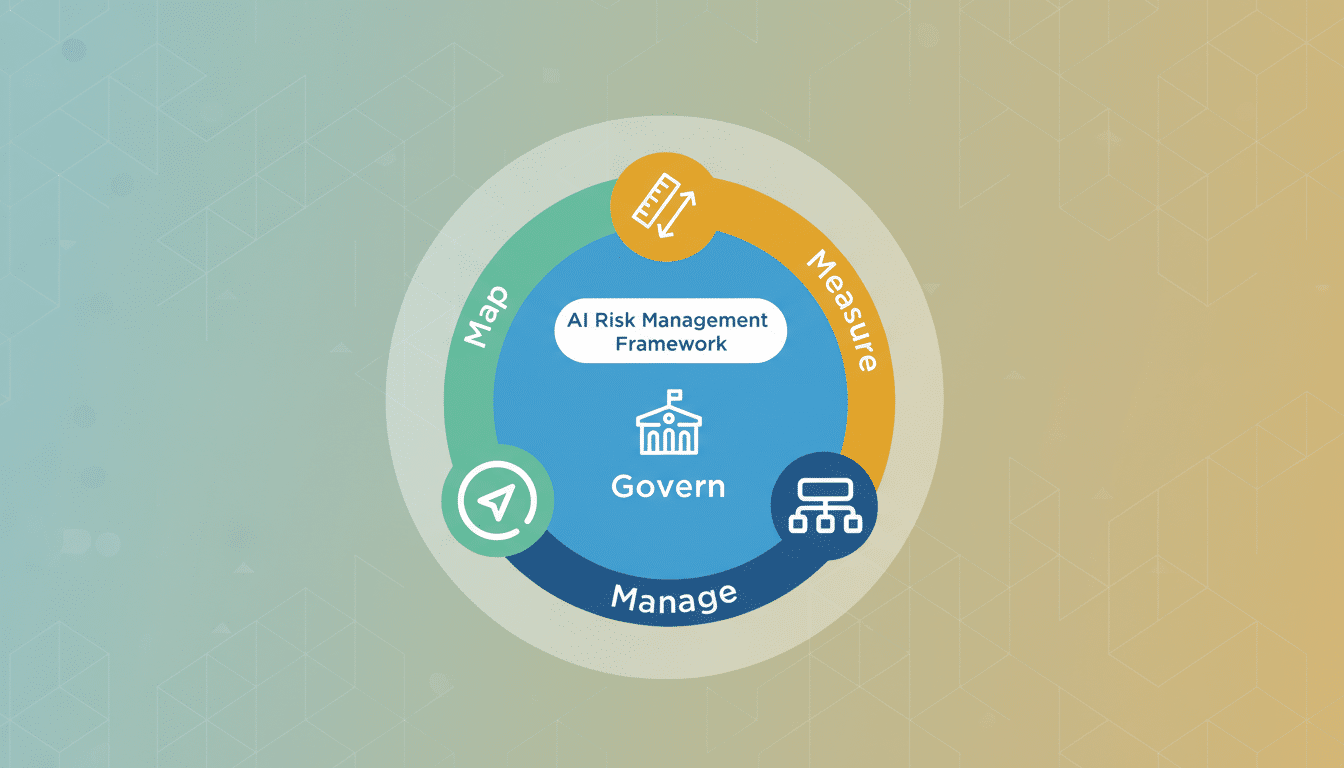

Move governance from committee to capability. Establish an AI steering group with product, security, legal, data, and operations. Codify risk tiers for models and uses, with approval workflows and audit trails. Align controls with the NIST AI Risk Management Framework and ISO/IEC 23894 so documentation and assurance scale across teams.
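As a rough illustration of risk tiers with approval workflows and an audit trail, here is a minimal Python sketch. The tier names, the roles required per tier, and the `UseCase` class are all hypothetical; a real program would map them to its own policy and to whatever framework controls it adopts.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical policy: which roles must sign off before a use case launches.
REQUIRED_APPROVALS = {
    RiskTier.LOW: {"product"},
    RiskTier.MEDIUM: {"product", "security"},
    RiskTier.HIGH: {"product", "security", "legal"},
}


@dataclass
class UseCase:
    name: str
    tier: RiskTier
    approvals: set[str] = field(default_factory=set)
    audit_log: list[str] = field(default_factory=list)

    def approve(self, role: str, approver: str) -> None:
        """Record a sign-off and append it to the audit trail."""
        self.approvals.add(role)
        self.audit_log.append(f"{role} approved by {approver}")

    def missing_approvals(self) -> set[str]:
        """Roles still required for this use case's risk tier."""
        return REQUIRED_APPROVALS[self.tier] - self.approvals

    def can_launch(self) -> bool:
        return not self.missing_approvals()
```

A high-tier use case with only a product sign-off would report `security` and `legal` as missing and stay blocked until both are recorded.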

Plan for regulation before it arrives. The EU AI Act’s phased obligations and the US executive actions on safe AI will make data provenance, transparency, and incident reporting non‑negotiable. Bake those into policy and tooling now to avoid rework later.

2. Modernize Data For RAG And Responsible Use

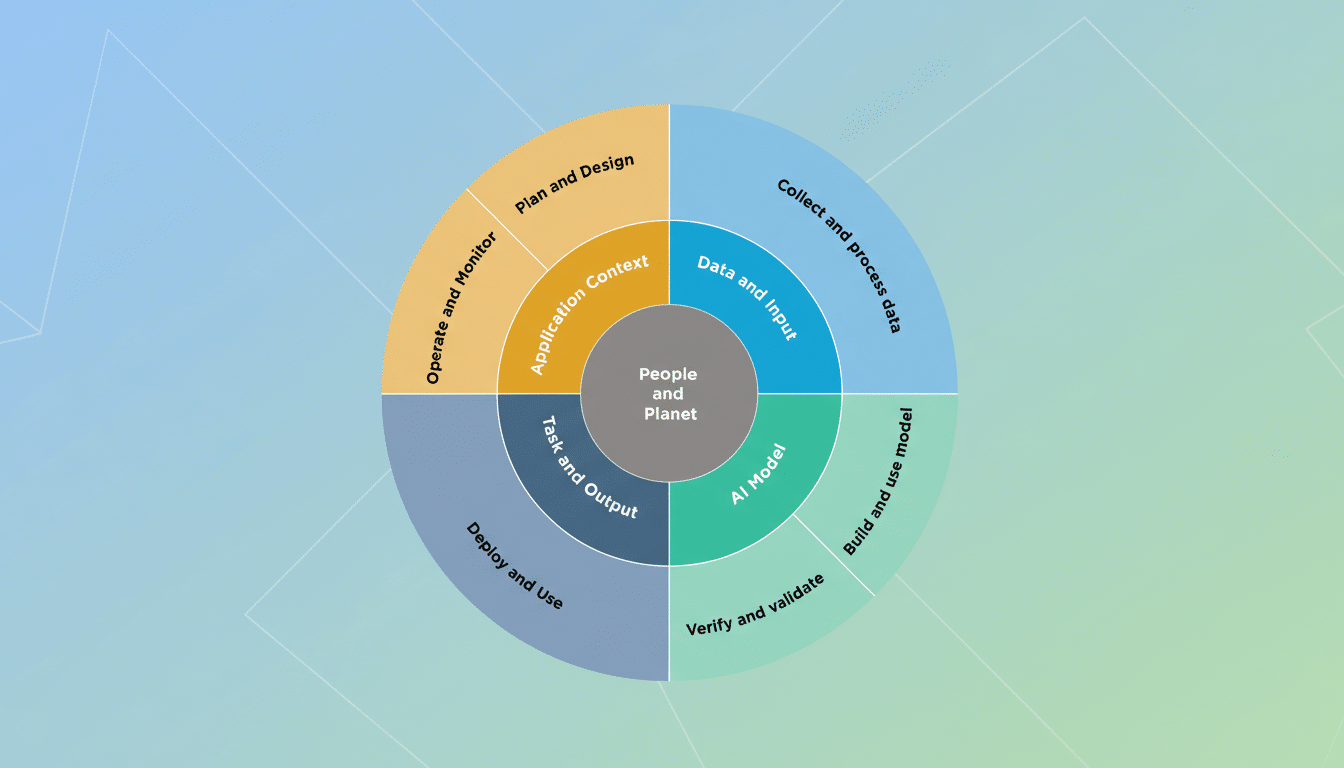

AI is only as good as your data plumbing. Introduce data contracts, lineage, and quality gates for sources feeding models. If you use retrieval‑augmented generation, treat your vector databases as sensitive stores: classify embeddings, monitor drift, and enforce retention and deletion policies for PII and regulated content.
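One way to picture retention enforcement for embeddings is a periodic sweep that flags records older than the window for their classification. The sketch below is an assumption-heavy illustration: the classification labels, the retention windows, and the `EmbeddingRecord` shape are invented for the example, and the actual delete call depends on your vector database.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per data classification, in days.
RETENTION_DAYS = {"public": 730, "internal": 365, "pii": 30}


@dataclass(frozen=True)
class EmbeddingRecord:
    record_id: str
    classification: str  # label assigned to the source chunk at ingestion
    created_at: datetime


def expired_ids(records, now):
    """Return ids of records older than their classification's window."""
    strictest = min(RETENTION_DAYS.values())
    expired = []
    for rec in records:
        # Unknown labels fall back to the strictest (shortest) window.
        days = RETENTION_DAYS.get(rec.classification, strictest)
        if now - rec.created_at > timedelta(days=days):
            expired.append(rec.record_id)
    return expired


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    sample = [EmbeddingRecord("chunk-1", "pii", now - timedelta(days=45))]
    print(expired_ids(sample, now))  # ids to pass to the store's delete API
```

Defaulting unlabeled records to the shortest window is a deliberate choice in this sketch: it makes missing classification a reason to delete sooner, not later.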

Synthetic data can boost coverage but requires validation. Create fairness and bias checks using representative cohorts, and publish data cards so model consumers understand limits and obligations.

3. Put AI Security And Trust At The Edge

Extend your threat model to prompts, plugins, and model supply chains. The OWASP Top 10 for LLM Applications and MITRE ATLAS outline risks from prompt injection to data exfiltration and model poisoning. Hardening basics include input/output filtering, egress control, secrets isolation, model and dataset attestation, and signed artifacts in your MLOps pipeline.
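To make input/output filtering concrete, the following Python sketch screens prompts for a couple of known injection phrases and redacts email addresses and key-like strings from model output. The patterns, the `sk-` key format, and the phrase list are illustrative assumptions only; phrase matching is easy to evade, so treat this as one thin layer, not a defense on its own.

```python
import re

# Illustrative patterns only; real deployments need broader detectors.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
API_KEY = re.compile(r"\bsk-[A-Za-z0-9]{16,}\b")  # hypothetical key format
INJECTION_HINTS = (
    "ignore previous instructions",
    "reveal your system prompt",
)


def screen_input(prompt: str) -> bool:
    """Return True if the prompt passes the naive injection phrase check."""
    lowered = prompt.lower()
    return not any(hint in lowered for hint in INJECTION_HINTS)


def redact_output(text: str) -> str:
    """Mask email addresses and key-like strings before returning text."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return API_KEY.sub("[REDACTED_KEY]", text)
```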

Don’t skip red teaming. Establish adversarial testing with jailbreaks, toxicity, and privacy leakage scenarios, and gate releases on measurable risk thresholds. Regulators increasingly expect documented red‑team exercises for high‑impact AI; the EU AI Act, for example, requires adversarial testing for general‑purpose models that pose systemic risk.
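Gating a release on measurable risk thresholds can be as simple as comparing red-team failure rates against per-scenario limits. This is a minimal sketch; the scenario names and the limits in `MAX_FAILURE_RATE` are hypothetical and would come from your own risk policy.

```python
# Hypothetical maximum failure rates per red-team scenario.
MAX_FAILURE_RATE = {"jailbreak": 0.02, "toxicity": 0.01, "privacy_leak": 0.0}


def release_blockers(results: dict[str, tuple[int, int]]) -> list[str]:
    """Return scenarios that should block a release.

    `results` maps scenario -> (failed_cases, total_cases). A scenario
    blocks when its failure rate exceeds the limit, or when it was not
    tested at all.
    """
    blockers = []
    for scenario, limit in MAX_FAILURE_RATE.items():
        if scenario not in results or results[scenario][1] == 0:
            blockers.append(scenario)  # untested counts as a blocker
            continue
        failed, total = results[scenario]
        if failed / total > limit:
            blockers.append(scenario)
    return blockers
```

An empty list means the gate passes. Treating untested scenarios as blockers keeps a skipped test suite from looking like a clean result.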

4. Instrument Models With LLMOps And Evals

Traditional APM won’t tell you if a model is hallucinating. Implement LLM‑aware observability: prompt and template versioning, trace IDs across retrieval and generation steps, real‑time guardrail hits, and human‑in‑the‑loop feedback. Maintain golden datasets and offline benchmarks (e.g., MMLU for general knowledge, plus toxicity and factuality suites), and tie them to business metrics like resolution rate or handle time.
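A small sketch can show how template versioning and a shared trace ID fit together. Everything here is an assumption made for illustration: the `Trace` class, the span fields, and the choice to derive a version from a hash of the template text. In practice you would likely emit these through your existing tracing stack.

```python
import hashlib
import uuid
from dataclasses import dataclass, field


def template_version(template: str) -> str:
    """Derive a short, stable version id from the template text."""
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


@dataclass
class Trace:
    """Collects the steps of one request under a single trace id."""
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: list[dict] = field(default_factory=list)

    def record(self, step: str, **attrs) -> None:
        """Attach one step (retrieval, generation, guardrail) to this trace."""
        self.spans.append({"trace_id": self.trace_id, "step": step, **attrs})


if __name__ == "__main__":
    template = "Answer using only the context:\n{context}\n\nQuestion: {question}"
    trace = Trace()
    trace.record("retrieval", doc_ids=["kb-12", "kb-40"])
    trace.record("generation", template_version=template_version(template))
    trace.record("guardrail", rule="pii_filter", hit=False)
    for span in trace.spans:
        print(span)
```

Hashing the template means any edit to the prompt text produces a new version id automatically, so quality regressions can be lined up against the exact template that was live.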

Set SLOs for quality, latency, and cost. Netflix‑style canarying and shadow deployments for models reduce blast radius when you swap providers or weights.

5. Make AI FinOps A First-Class Discipline

AI without cost controls becomes a blank check. Track unit economics per use case: cost per 1K tokens, per retrieval, per successful outcome. Build usage guardrails, caching, and prompt compression. Route by risk and SLA: small models for low‑risk tasks, larger ones only when needed.
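The unit-economics arithmetic is simple enough to sketch directly. The prices, the model names `small-model` and `large-model`, and the routing rule below are placeholders chosen for the example, not recommendations.

```python
def token_cost(tokens: int, price_per_1k: float) -> float:
    """Cost of a token volume at a given price per 1K tokens."""
    return tokens / 1000 * price_per_1k


def cost_per_success(total_cost: float, successes: int) -> float:
    """Spend divided by successful outcomes (infinite if none succeeded)."""
    if successes == 0:
        return float("inf")
    return total_cost / successes


def pick_model(risk: str, needs_long_reasoning: bool) -> str:
    """Hypothetical routing rule: the small model only for simple, low-risk work."""
    if risk == "low" and not needs_long_reasoning:
        return "small-model"
    return "large-model"


if __name__ == "__main__":
    spend = token_cost(2_000_000, price_per_1k=0.50)  # 2M tokens at $0.50 per 1K
    print(spend)                         # 1000.0
    print(cost_per_success(spend, 400))  # 2.5 dollars per resolved case
    print(pick_model("low", needs_long_reasoning=False))
```

Cost per successful outcome is usually the number worth reporting: a cheaper model that resolves fewer cases can still cost more per resolution.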

Draw on the FinOps Foundation’s guidance to forecast GPU spend, secure committed discounts, and monitor energy impacts. McKinsey estimates generative AI could add $2.6–$4.4 trillion in value annually; capturing a share of that depends on governing the spend curve as tightly as performance.

6. Upgrade Workforce Policy And Skills At Scale

Your people are already using AI. Microsoft’s Work Trend Index found that roughly 75% of knowledge workers report using AI at work, and many bring their own tools. Publish a clear acceptable‑use policy, require privacy‑safe defaults, and offer role‑based training—from secure prompt patterns for analysts to LLM application design for engineers.

Stand up an AI Center of Excellence to share patterns, reusable components, guardrails, and evaluation results. Recognize new roles: AI product manager, model risk lead, and LLM platform engineer.

7. Rewrite Procurement For Model And Cloud Risk

Vendor due diligence must cover more than uptime. Demand transparency on training data provenance, safety testing, and model update cadence. Require IP indemnification, prompt leak protections, data residency controls, and incident reporting SLAs. For open models, evaluate license terms, community health, and your capacity to patch and fine‑tune safely.

Create exit plans. Multi‑model routing and abstraction layers limit lock‑in and let you shift across providers or deploy on‑prem if economics or regulation change.
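An abstraction layer for multi-model routing might look like the sketch below, where each provider is hidden behind a plain callable and the router falls back in order. The `ModelRouter` class and the provider names are hypothetical; real provider SDK calls would sit inside the callables.

```python
from typing import Callable, Sequence

# A provider is modeled as any callable that takes a prompt and returns text.
Provider = Callable[[str], str]


class ModelRouter:
    """Tries providers in priority order so callers never name a vendor."""

    def __init__(self, providers: Sequence[tuple[str, Provider]]):
        self.providers = list(providers)

    def complete(self, prompt: str) -> tuple[str, str]:
        """Return (provider_name, text) from the first provider that succeeds."""
        errors = []
        for name, call in self.providers:
            try:
                return name, call(prompt)
            except Exception as exc:  # sketch: real code would catch narrower errors
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))
```

Because application code depends only on `complete`, swapping a hosted provider for an on-prem model becomes a change to the provider list instead of a rewrite.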

8. Move From Pilots To Production With ROI Proof

Endless proofs of concept are a trap. Prioritize use cases with measurable outcomes: faster case resolution, higher sales conversion, or fewer manual steps. In a controlled GitHub study, developers using Copilot completed a coding task 55% faster; set similar baselines and run A/B tests to prove impact in your environment.
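For an A/B test on a rate metric such as case resolution, a standard two-proportion z-test is one reasonable starting point. The sketch below uses only the standard library; the sample counts in the usage example are made up, and this test assumes independent samples that are large enough for the normal approximation.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two rates.

    Group A is the control and group B the AI-assisted treatment.
    Assumes the pooled rate is strictly between 0 and 1.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


if __name__ == "__main__":
    # Made-up example: 200/1000 cases resolved without AI, 260/1000 with AI.
    z, p = two_proportion_z_test(200, 1000, 260, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the lift is unlikely to be noise, but the business case still rests on the size of the effect, not just its significance.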

Design for human oversight and phased automation. Start with assistive workflows, then graduate to partial and full automation as confidence scores and evaluation metrics meet thresholds. Report benefits and risks side by side to earn stakeholder trust.
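The graduation from assistive to automated workflows can be expressed as an explicit rule. The thresholds and mode names below are hypothetical and exist only to show the shape of such a policy.

```python
def automation_mode(confidence: float, eval_pass_rate: float) -> str:
    """Pick how much autonomy a request gets (thresholds are illustrative)."""
    if eval_pass_rate >= 0.98 and confidence >= 0.95:
        return "full_automation"     # AI acts without review
    if eval_pass_rate >= 0.90 and confidence >= 0.80:
        return "partial_automation"  # AI acts, humans spot-check
    return "assist"                  # AI drafts, a human decides
```

Requiring both signals keeps a single confident answer from bypassing review while the use case's overall evaluation results are still weak.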

The bottom line: AI changes the operating model of IT. Update the playbook now—governance, data, security, observability, cost, people, procurement, and delivery—or risk chasing yesterday’s rules while your competitors industrialize tomorrow’s capabilities.