Discord is rolling out a handful of Family Center upgrades that will give parents and caregivers more insight into how teens are using the chat and voice platform, an update that comes after several lawsuits and growing concern around youth safety online.

The updates add practical controls and weekly activity snapshots rather than exposing message content, a balance Discord said it hopes will inform parents without turning the platform into a surveillance system for teenagers.

What’s New in Discord’s Family Center for You Today

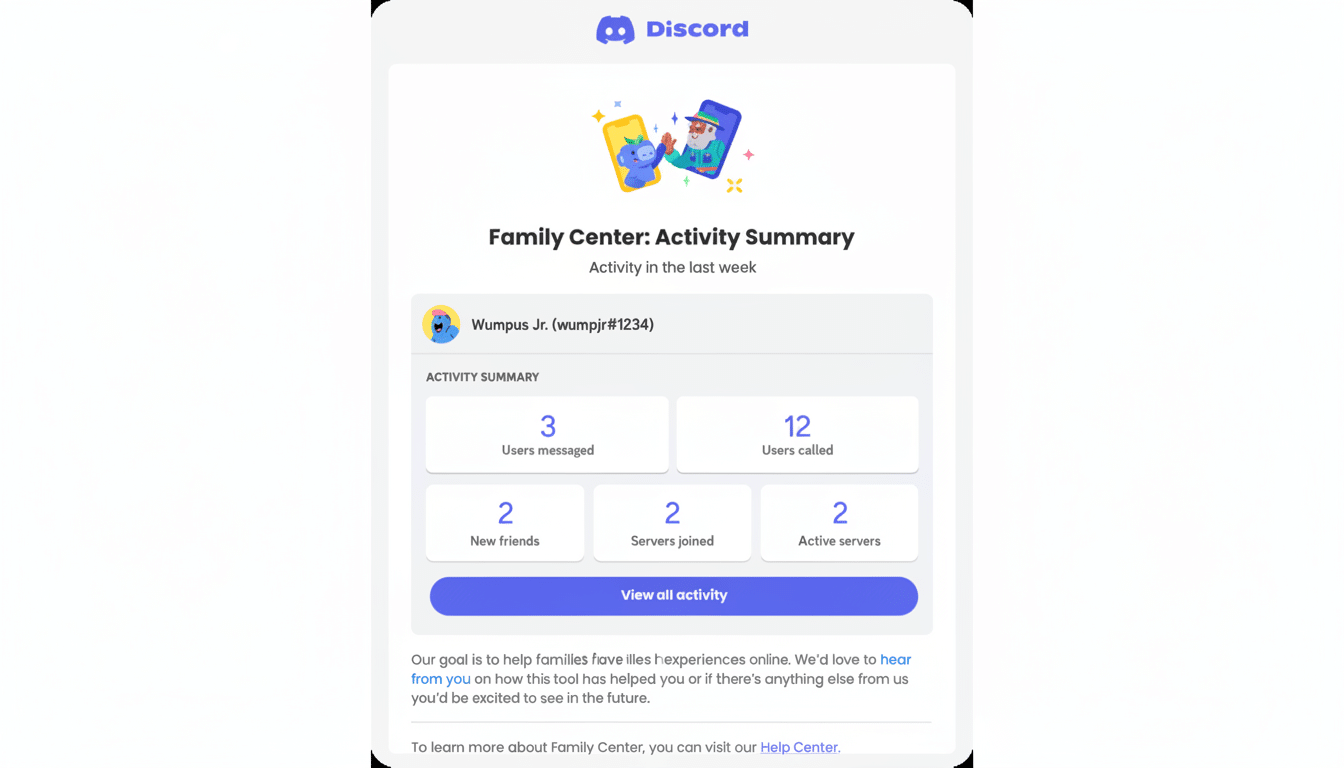

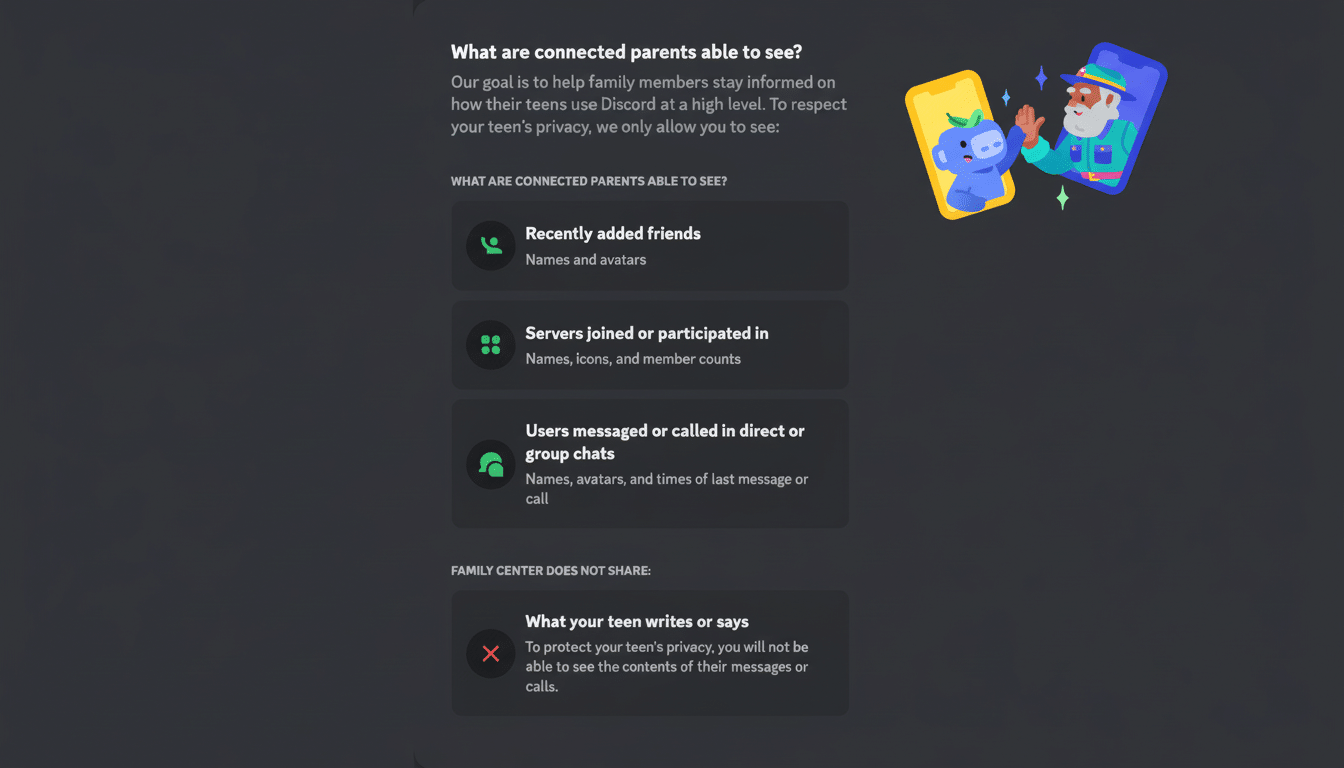

Parents and guardians connected to a teen's account can now view the five people the teenager messaged or called most frequently, a list of servers the teen was active in, their total time in voice and video calls, and a record of recent transactions. These are metadata views, not chat transcripts.

The dashboard is short-term: it shows activity for the preceding seven days only, so a week that goes unchecked drops out of the in-app view. Families who prefer a longer trail can turn to the emailed summaries from Family Center, which build up into a searchable archive.

This is also where two safety switches come in: a teenager can choose to notify a parent when filing a report about suspect content or rule violations, and guardians can apply filters for sensitive content as well as limits on direct messaging. The DM limit, for example, can restrict direct messages to Friends Only rather than allowing messages from all server members.

Why Discord Is Making the Move Now on Teen Safety

The update comes amid legal pressure and a harsher regulatory atmosphere. Recent lawsuits filed by Dolman Law Group name Discord among other platforms and claim that they do not adequately prevent grooming and harassment of minors. While the facts of these cases will be determined in court, the filings highlight the industry’s risk exposure.

Safety groups have also reported surging numbers of online exploitation cases. The National Center for Missing and Exploited Children said its CyberTipline received over 36 million reports in the latest full year, a reminder that detection and swift response are non-negotiable protections across social apps.

Regulators are forcing the issue. The U.K. Online Safety Act and the E.U.’s Digital Services Act require platforms to assess and mitigate risks to young users, and to show that their measures work. Meanwhile, peer platforms including Roblox have rolled out age verification for teens and stronger parental controls, raising the bar for services at the intersection of gaming and live chat.

How the Tools Work in Practice for Parents and Teens

Family Center connects a teen’s account to a primary account (a caregiver’s account) using an opt-in pairing process — usually a QR code or link that the teen agrees to share.

After pairing, parents can view a weekly summary: who the teen interacted with most, where they spend their time, and aggregate call minutes. Think of it as a pulse check, not a dossier.

For many families, the most meaningful signal is change over time. A sudden jump from a handful of minutes of voice time to hours a day, or a move to new servers with unfamiliar communities, can be a useful conversation starter. The new DM setting is also practical: limiting messages to friends reduces strangers’ reach without cutting a teen off from real social activity in school or hobby servers.

Importantly, Discord still frames Family Center as a tool for activity signals, not content reading. That approach is consistent with advice from youth-safety advocates, who caution that secret surveillance can undermine trust and drive teens to alternative accounts or platforms.

Privacy Trade-offs And Expert Perspectives

Experts generally support clear, opt-in supervision combined with open family agreements. Common Sense Media, for instance, recommends that parents prioritize transparency and use controls to establish limits rather than trying to monitor every communication. The new report-notification option follows that model: it lets teens flag concerns while keeping a parent in the loop.

At the same time, metadata is revealing. Seeing a teen's top five contacts and most active servers may expose social patterns that the teenager considered private. Families should discuss what will be reviewed and why, and can set expectations about when features like DM restrictions will be turned on or changed.

What To Watch Next as Discord Expands Family Center

Discord says it is working on additional safety measures and has already been testing age-verification systems in countries like the U.K. and Australia. Discord says its minimum age is 13, but, as with most platforms, that limit is only as reliable as users’ self-reported dates of birth.

Adoption and outcomes will be the ultimate test for the overhauled Family Center. If weekly summaries and tighter DM controls help reduce contact from unknown adults, or help families intervene earlier when problems arise, expect metadata-first dashboards like this one to become standard across social chat apps.

For now, the message is clear: Discord is trying to meet parents where they are, offering sharper visibility, a few small control levers, and some nudges toward regular conversation, while keeping teens’ day-to-day chats out of direct view.