Discord will begin requiring age verification worldwide in the coming weeks, shifting all accounts into a teen-appropriate mode by default unless a user proves they are an adult. The move tightens access to sensitive features and content, and underscores how mainstream social platforms are racing to build stronger guardrails for younger audiences.

What Changes for Users Under Discord’s New Policy

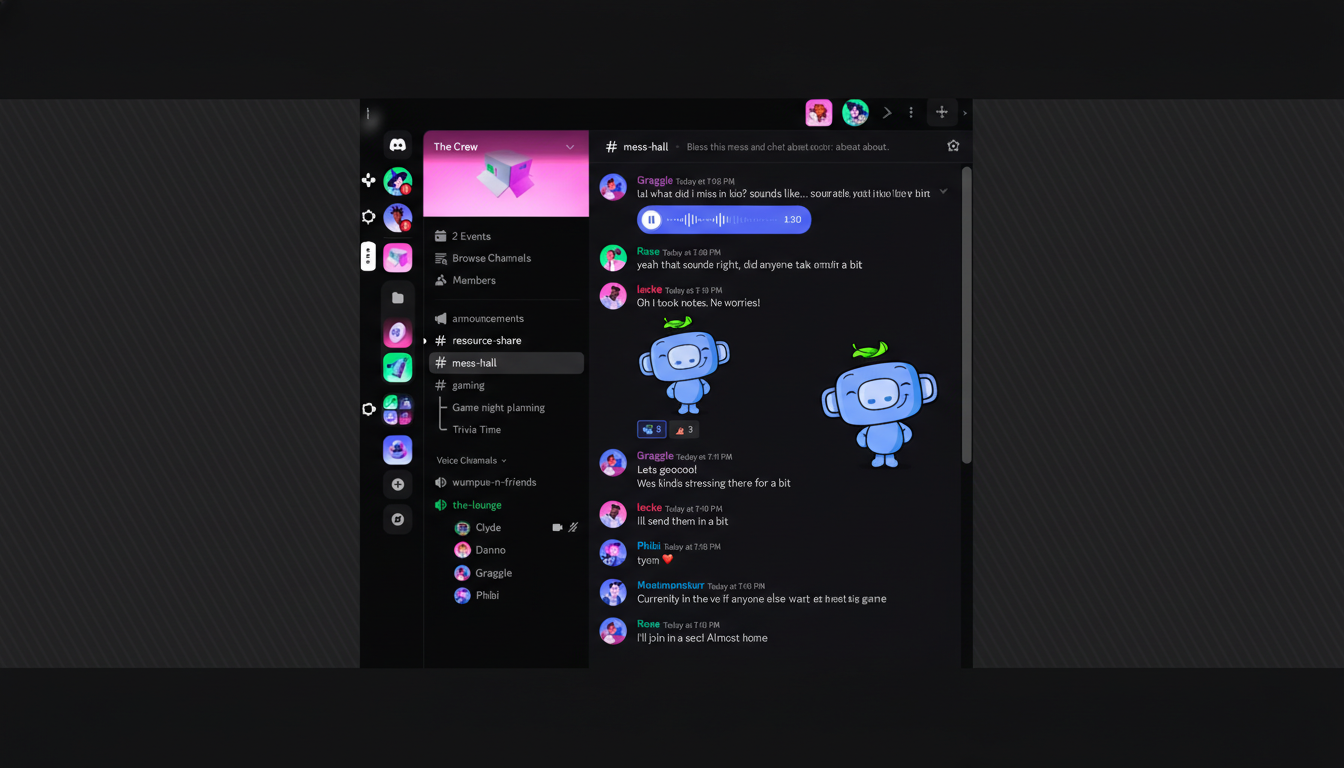

Under the new policy, unverified accounts are placed into a stricter experience designed for teens. Sensitive media remains blurred unless the account owner verifies adulthood, and only verified adults can enter age-restricted channels and servers or use certain commands reserved for mature audiences.

Direct messages from people a user may not know will be routed to a separate requests inbox by default, and only verified adults can loosen that filter. Friend requests from unfamiliar accounts trigger an extra warning screen. Voice participation also tightens: speaking on stage at community events will be limited to verified adults.

Some settings that could expose younger users to unsolicited contact or mature content will be locked behind age checks. Verified adults retain the flexibility to modify these preferences; teens will see more protective defaults that limit reach, visibility, and interaction.

How Age Verification Works on Discord Globally

Discord is introducing two initial pathways to confirm age. The first is a facial age estimation that uses a short video selfie to estimate whether a user is an adult. The company says this estimation is processed on-device and the video selfie does not leave the user’s phone. The second option asks users to submit a government-issued ID to vetted vendor partners for a one-time check.

Discord notes that some accounts may be asked to complete more than one method when extra assurance is needed to place a user in the right age group. The company says IDs handled by third-party vendors are deleted quickly after verification. This “tiered assurance” model reflects industry guidance that higher-risk features merit stronger checks.

Safety Goals and Privacy Trade-offs in Discord’s Plan

Discord frames the shift as a safety-first redesign: make the teen experience the default, then let verified adults opt into more permissive settings. Savannah Badalich, Discord’s head of product policy, said the teen-by-default approach builds on existing safety architecture and that the company will keep collaborating with outside experts and policymakers to support teen well-being.

The approach also revives a perennial debate: age checks improve protections but add sensitive data flows that must be secured. Late last year, Discord disclosed that a third-party vendor used for age-related appeals was breached, exposing data for roughly 70,000 users, including ID images in some cases. Digital rights groups have long warned that age assurance can create new targets for thieves, even when a platform limits data retention. Accuracy is another concern: facial estimators must handle diverse ages, skin tones, and lighting conditions without bias.

Independent transparency reports will matter. Clear documentation of verification error rates, appeal outcomes, and data deletion timelines can help regulators and civil society validate that the system protects teens without over-collecting personal information.

A Push Driven by Evolving Global Rules and Laws

The global rollout follows age checks Discord introduced in the U.K. and Australia, two markets pushing platforms hardest on “age assurance.” In the U.K., the Online Safety Act tasks Ofcom with enforcing child-safety duties of care, including proportionate age checks for higher-risk features. Australia’s eSafety framework similarly presses services to reduce youth exposure to harmful content and contact. Across the EU, the Digital Services Act requires stronger protections for minors and bans profiling-based targeted advertising aimed at them.

Other platforms are moving in parallel. Roblox has introduced facial verification for some chat features, reflecting its young audience. YouTube has deployed age-estimation technology to better steer teens into age-appropriate experiences. Regulators increasingly expect “reasonable certainty” of a user’s age for mature spaces, and teen-first defaults everywhere else.

Impact on Communities and Moderators Across Discord

Server owners should anticipate more explicit gating for age-restricted channels and features. Expect an initial wave of access requests and verification questions as long-time members encounter new prompts. Clear server rules and labeled channels will reduce confusion; moderators may want to pin guides that explain which spaces require adult verification and why.

For parents and caregivers, the changes strengthen Discord’s safety net alongside tools like Family Center and DM filters. The teen-default posture reduces the risk of unsolicited contact and exposure to mature content out of the box, while letting users unlock additional features only after their age is confirmed.

Why It Matters for Discord’s Global User Community

Discord hosts vast communities spanning gaming, education, fandoms, and professional groups. Moving the entire ecosystem to teen-by-default with adult-only unlocks is a consequential shift for a service with well over 150 million monthly users. If executed carefully, with transparent data handling, clear appeals, and strong vendor oversight, it could become a reference model for balancing youth safety with privacy in real-world social platforms.

The company says the rollout will begin in the coming weeks and will apply to both new and existing accounts. Verified adults will retain flexibility to adjust controls; everyone else will get a safer baseline by default. The test for Discord, and the industry, is whether these systems can scale globally without eroding user trust.