OpenAI’s latest models are being integrated directly into Databricks’ data and AI stack in a multimillion-dollar, multiyear deal aimed at catalyzing enterprise adoption of generative AI. That integration covers the Databricks Lakehouse and its agent-building environment Agent Bricks, making OpenAI a first‑class choice for workloads that live next to governed corporate data.

The collaboration makes OpenAI’s latest models, such as GPT‑5, first‑class citizens in Databricks that can be called natively from both SQL and APIs. Databricks users can build prompt pipelines, ground outputs in data retrieved from enterprise sources, and fine‑tune models within the platform’s security and governance constraints. Databricks is essentially pre‑paying for usage: if customers consume less than $100 million worth of OpenAI capacity over the term of the deal, Databricks covers the difference.

It’s a high‑conviction bet that model quality and proximity to data will determine which platforms win the enterprise AI race. IDC predicts that generative AI will account for more than $140 billion in spending in 2027, and Gartner projects that the vast majority of enterprises will be using GenAI APIs by mid‑decade. Databricks wants to be the place where that spend lands.

Why This Is Important for Enterprise AI and Governed Data

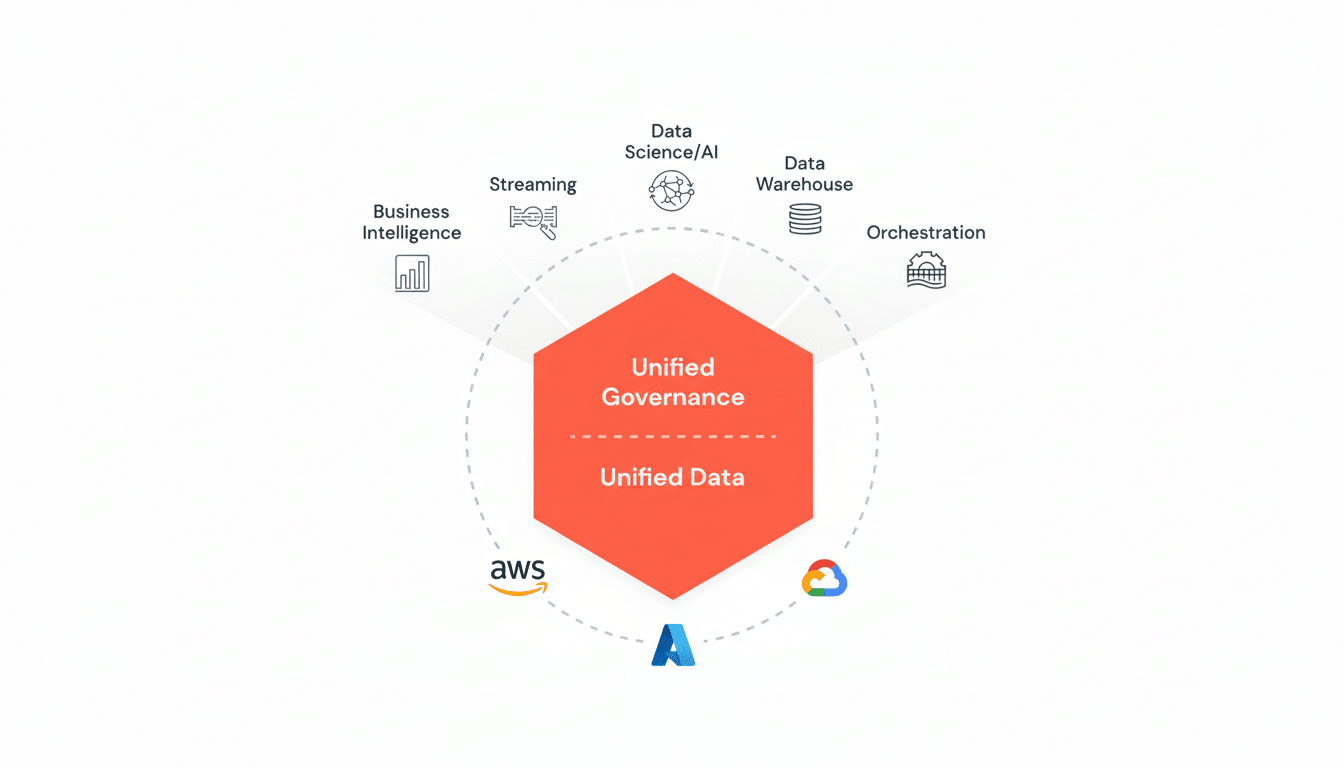

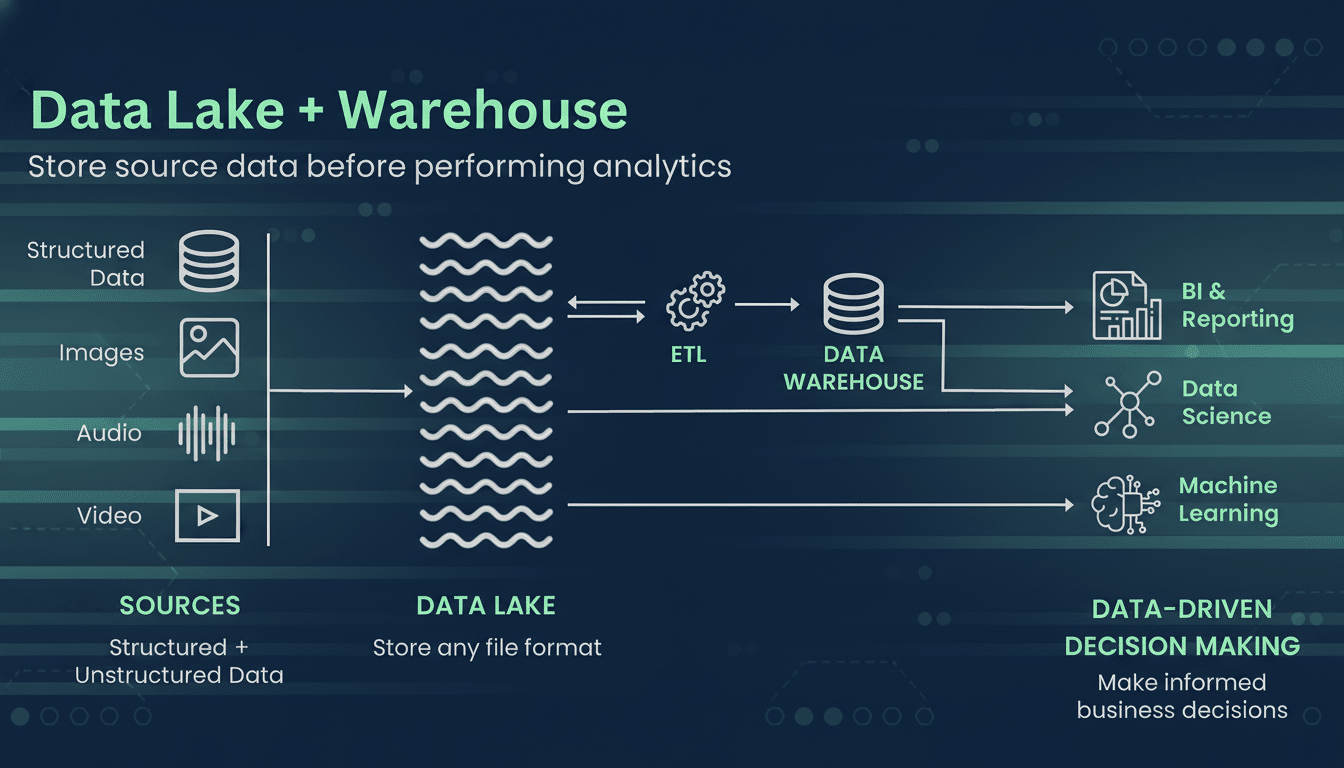

Enterprises want generative AI where their data resides. Moving sensitive data out to external endpoints adds cost, compliance risk, and latency. By enabling OpenAI models to run in the Lakehouse environment and integrating them with Unity Catalog governance, Databricks minimizes data movement, centralizes access control, and simplifies auditing, capabilities that financial services, healthcare, and public sector customers often require.

OpenAI gets distribution to thousands of data‑rich customers and a more predictable revenue stream to fund its growing compute footprint. Databricks adds a premier model family to its lineup, helping customers ship production‑grade assistants for analytics, code generation, and knowledge retrieval without cobbling together disparate tools. OpenAI’s COO has argued in various settings that bringing advanced models closer to secure corporate data shortens the distance between pilot and impact; this deal puts that argument into practice.

How the Databricks-OpenAI Integration Works End to End

Agent Bricks enables teams to build AI agents on top of their data through orchestration, vector search, and guardrails. With OpenAI in the mix, users can pick GPT‑5 as a flagship model or choose from a catalog that includes Databricks‑hosted open‑weight options such as gpt‑oss 20B and gpt‑oss 120B. Model choice is surfaced through SQL for analysts and through APIs for developers, which keeps the feature broadly accessible without tying anyone to a particular toolchain.
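Calling a model “through SQL” typically means the model is exposed as a function that a query applies row by row. The sketch below imitates that pattern with Python’s built‑in `sqlite3` module and a keyword‑matching stub in place of a real model. The function name `model_query` and the endpoint string are hypothetical and do not reflect Databricks’ actual SQL syntax; a real deployment would route each call to a governed serving endpoint.

```python
import sqlite3


def fake_model(endpoint: str, prompt: str) -> str:
    """Hypothetical stand-in for a hosted model endpoint.

    The endpoint argument is ignored by this stub; a trivial keyword rule
    produces a sentiment label so the sketch runs offline.
    """
    text = prompt.lower()
    if "refund" in text or "broken" in text:
        return "negative"
    return "positive"


conn = sqlite3.connect(":memory:")
# Register the stub as a two-argument SQL function so queries can call it per row.
conn.create_function("model_query", 2, fake_model)

conn.execute("CREATE TABLE tickets (id INTEGER PRIMARY KEY, body TEXT)")
conn.executemany(
    "INSERT INTO tickets (body) VALUES (?)",
    [("The device arrived broken",), ("Great support, thanks!",)],
)

# An analyst-style query: the model call sits alongside ordinary SQL.
rows = conn.execute(
    "SELECT id, model_query('hypothetical-endpoint', body) AS label "
    "FROM tickets ORDER BY id"
).fetchall()
print(rows)  # → [(1, 'negative'), (2, 'positive')]
```

Because the model sits behind a SQL function, switching to a different endpoint changes one argument rather than the surrounding query.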

Crucially, Agent Bricks includes evaluation and observability: teams can benchmark accuracy, latency, and cost per task, then fine‑tune or switch models as needed. That addresses a long‑standing failure point, in which early proofs of concept never reach production because their performance is unpredictable. With built‑in A/B testing against custom enterprise models and retrieval‑augmented generation patterns, outputs can be constrained and tested before they are scaled to production.
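The benchmark‑then‑switch loop described above can be sketched as a small evaluation harness: aggregate graded runs per model, then pick the cheapest model that clears accuracy and latency thresholds. This is a minimal illustration, not Agent Bricks’ API; the `EvalRecord` schema, the model names `model-a` and `model-b`, and all numbers are hypothetical toy values, not benchmark results.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class EvalRecord:
    """One graded task run against one candidate model (hypothetical schema)."""
    model: str
    correct: bool
    latency_ms: float
    cost_usd: float


def summarize(records: list[EvalRecord]) -> dict[str, dict[str, float]]:
    """Aggregate accuracy, mean latency, and mean cost per task for each model."""
    by_model: dict[str, list[EvalRecord]] = {}
    for r in records:
        by_model.setdefault(r.model, []).append(r)
    return {
        name: {
            "accuracy": sum(r.correct for r in rs) / len(rs),
            "latency_ms": mean(r.latency_ms for r in rs),
            "cost_per_task": mean(r.cost_usd for r in rs),
        }
        for name, rs in by_model.items()
    }


def pick_model(summary: dict[str, dict[str, float]],
               min_accuracy: float, max_latency_ms: float) -> Optional[str]:
    """Return the cheapest model meeting both bars, or None if none qualifies."""
    eligible = [
        (stats["cost_per_task"], name)
        for name, stats in summary.items()
        if stats["accuracy"] >= min_accuracy and stats["latency_ms"] <= max_latency_ms
    ]
    return min(eligible)[1] if eligible else None


# Toy A/B data: model-a is accurate but pricier; model-b is cheap but misses half.
records = [
    EvalRecord("model-a", True, 900, 0.020),
    EvalRecord("model-a", True, 1100, 0.020),
    EvalRecord("model-b", True, 400, 0.004),
    EvalRecord("model-b", False, 500, 0.004),
]
summary = summarize(records)
print(pick_model(summary, min_accuracy=0.9, max_latency_ms=1500))  # → model-a
```

Lowering the accuracy bar to 0.5 would flip the choice to the cheaper `model-b`, which is the kind of trade‑off the evaluation step is meant to surface.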

Example use cases include a global bank deploying a customer‑service agent grounded in internal policy documents, a pharmaceutical company running literature reviews and trial summarization with human‑in‑the‑loop approvals, and a manufacturer offering natural‑language access to quality and supply chain data. Each application benefits the same way: data residency, lineage, and permissions stay in the Lakehouse while the app invokes high‑performing models.
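Keeping permissions in force while grounding an agent means filtering by access rights before retrieval, so a model never sees documents the caller could not read. The sketch below assumes a simple per‑document group ACL and uses naive keyword overlap as a stand‑in for vector search; neither reflects how Unity Catalog or Databricks vector search actually work, and `Doc`, `retrieve`, and `build_prompt` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical ACL: groups allowed to read this doc


def retrieve(query: str, docs: list[Doc], user_groups: set[str], k: int = 2) -> list[Doc]:
    """Rank only the documents the caller may read, by naive keyword overlap."""
    terms = set(query.lower().split())
    visible = [d for d in docs if d.allowed_groups & user_groups]  # enforce ACL first
    scored = [(len(terms & set(d.text.lower().split())), d) for d in visible]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query: str, context: list[Doc]) -> str:
    """Assemble a grounded prompt that cites the retrieved document IDs."""
    sources = "\n".join(f"[{d.doc_id}] {d.text}" for d in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"


docs = [
    Doc("policy-1", "refund policy allows returns within 30 days", frozenset({"support"})),
    Doc("hr-7", "salary bands for the refund team", frozenset({"hr"})),
]
# A support agent's query never reaches the HR document, whatever its relevance.
hits = retrieve("what is the refund policy", docs, user_groups={"support"})
print(build_prompt("what is the refund policy", hits))
```

Filtering before ranking, rather than after, is the design choice that matters here: a restricted document cannot leak into the prompt even if it scores highly.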

The Economics and Competitive Setting for Data and AI Platforms

The commitment resembles a take‑or‑pay agreement: OpenAI is assured a minimum level of revenue, while Databricks assumes utilization risk in return for lower unit pricing and guaranteed capacity. Databricks struck a similar agreement with Anthropic in March, suggesting a portfolio play: offer customers best‑of‑breed models while consolidating consumption at platform scale.
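The take‑or‑pay mechanics reduce to simple arithmetic: the buyer pays for what is consumed, plus any shortfall below the committed floor. The sketch below encodes only the rule described in this article; the actual contract terms are not public, and `settle_commitment` is a hypothetical helper with illustrative figures.

```python
from __future__ import annotations


def settle_commitment(committed_usd: float, consumed_usd: float) -> dict[str, float]:
    """Split a minimum-spend commitment into billed usage and shortfall.

    Assumes a plain take-or-pay rule: consumption is billed as used, and if it
    falls below the commitment, the committing party pays the gap as well.
    """
    if committed_usd < 0 or consumed_usd < 0:
        raise ValueError("amounts must be non-negative")
    shortfall = max(0.0, committed_usd - consumed_usd)
    return {
        "usage_billed": consumed_usd,
        "shortfall_paid": shortfall,
        "total_paid": consumed_usd + shortfall,
    }


# Hypothetical scenarios against a $100 million floor.
under = settle_commitment(100_000_000, 70_000_000)   # $30M shortfall absorbed
over = settle_commitment(100_000_000, 120_000_000)   # no shortfall; pay for usage
print(under["shortfall_paid"], over["total_paid"])
```

The asymmetry is the point: below the floor, total cost is fixed at the commitment, so Databricks’ incentive is to drive customer usage past it.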

The move ramps up pressure in the data and AI platform market. AWS Bedrock, Google Vertex AI, and Snowflake Cortex all court enterprises with curated model hubs and governance. Databricks’ pitch is data gravity plus open choice: run proprietary models as needed (e.g., OpenAI for top‑tier quality) or run open weights when price, control, or customization matters most, all without leaving the Lakehouse.

For buyers, the calculus remains pragmatic: accuracy on real tasks matters most, followed by total cost per outcome (not just token spend) and whether compliance artifacts such as PII handling, model lineage, and policy enforcement are first‑class. McKinsey estimates that generative AI could add $2.6 trillion to $4.4 trillion in annual economic value, but capturing that value requires a solid MLOps strategy, not just access to models.
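“Total cost per outcome” can be made concrete by dividing all spend attributable to a workload by the number of tasks that actually succeeded, rather than dividing token spend by tasks attempted. The cost categories below (tokens, retrieval, infrastructure, human review) and the numbers are hypothetical placeholders chosen to show the gap between the two measures.

```python
def cost_per_outcome(
    token_usd: float,
    retrieval_usd: float,
    infra_usd: float,
    review_usd: float,
    successful_tasks: int,
) -> float:
    """Total workload spend divided by the number of tasks that succeeded."""
    if successful_tasks <= 0:
        raise ValueError("need at least one successful task")
    return (token_usd + retrieval_usd + infra_usd + review_usd) / successful_tasks


attempted_tasks = 1_000
# Hypothetical month: $400 tokens, $100 retrieval, $200 infra, $300 human review;
# 800 of 1,000 tasks met the acceptance bar.
full_cost = cost_per_outcome(400.0, 100.0, 200.0, 300.0, successful_tasks=800)
tokens_only = 400.0 / attempted_tasks
print(full_cost, tokens_only)  # → 1.25 0.4
```

In this toy case the token‑only view understates the real unit cost by roughly a factor of three, which is why buyers compare models on cost per successful outcome.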

What Customers Should Watch as the Databricks-OpenAI Deal Rolls Out

Three signals will indicate adoption velocity.

- Pricing transparency: committed consumption is only useful if teams can predict costs across models, vector stores, and retrieval.

- Governance depth: to satisfy regulators, unified policies, red‑teaming, and content filters should be configurable by domain and region.

- Portability: the ability to swap or mix models (OpenAI, Anthropic, open‑weight) without rewriting applications would help guard against lock‑in.
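Portability of the kind described in the last signal usually comes from coding applications against a narrow, provider‑agnostic interface and choosing the backend through configuration. The sketch below assumes a single `complete` method; `ChatModel`, `EchoModel`, `REGISTRY`, and `ROUTES` are hypothetical, and real provider SDKs expose richer and differing APIs that an adapter would have to wrap.

```python
from __future__ import annotations

from typing import Protocol


class ChatModel(Protocol):
    """Minimal provider-agnostic interface the application codes against."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in for one provider's client, wrapped in an adapter."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Swapping a provider means editing these tables, not the application code.
REGISTRY: dict[str, ChatModel] = {
    "proprietary": EchoModel("proprietary-model"),
    "open-weight": EchoModel("open-weight-model"),
}
ROUTES: dict[str, str] = {"summarize": "open-weight", "analyze": "proprietary"}


def answer(question: str, task: str) -> str:
    """Route each task type to a backend; unknown tasks default to proprietary."""
    backend = ROUTES.get(task, "proprietary")
    return REGISTRY[backend].complete(question.strip())


print(answer(" Summarize Q3 returns ", "summarize"))
```

Mixing models then becomes a routing decision per task, and replacing one vendor requires only a new adapter that satisfies `ChatModel`.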

If Databricks can deliver on these fronts, the OpenAI integration could help close the gap between governed data and deployed AI agents, recasting the Lakehouse as the default home for enterprise GenAI workloads.

And if usage exceeds the $100 million floor, both the platform and its customers will have proven a model‑driven approach to AI that values performance and proximity over hype.