Databricks chief executive Ali Ghodsi says software-as-a-service isn’t dying, but the part users touch is. As the company disclosed a $5.4 billion revenue run rate, up 65% year over year, with more than $1.4 billion of that tied to AI products, Ghodsi argued that large language models will soon push traditional app interfaces into the background.

The growth milestone arrived alongside fresh capital: Databricks finalized a previously announced $5 billion raise at a $134 billion valuation and secured a $2 billion debt facility. The war chest sends a simple message to customers and rivals alike: AI is not a side bet; it’s the plan.

AI Interfaces Are Poised to Obscure Traditional SaaS

Ghodsi’s contention is not that enterprises will rip out systems like Salesforce, ServiceNow, or SAP. It’s that the user interface—the dashboards and buttons that defined the SaaS era—will become invisible as conversational AI and agents execute tasks through natural language, APIs, and automations.

For two decades, the “moat” in enterprise apps included hard-won expertise: the admins and power users who knew every workflow screen. Once the interface is language, that moat shrinks. People will ask the system to file a ticket, adjust a quota, or analyze a cohort—and the agent will do it, regardless of which product sits beneath.

Systems of Record Will Endure Beneath AI Interfaces

The back end isn’t going away. Core databases that safeguard sales pipelines, HR files, and financials still do the heavy lifting, and they’re difficult to migrate at scale. Foundation model providers are not trying to be those databases; they’re aiming to replace the human-facing layer on top.

Expect more agent-to-app connections through secure APIs, event streams, and plug-ins. Gartner and Forrester have both advised that conversational interfaces will become a primary design pattern for enterprise applications, reflecting the shift from click-driven workflows to intent-driven execution.

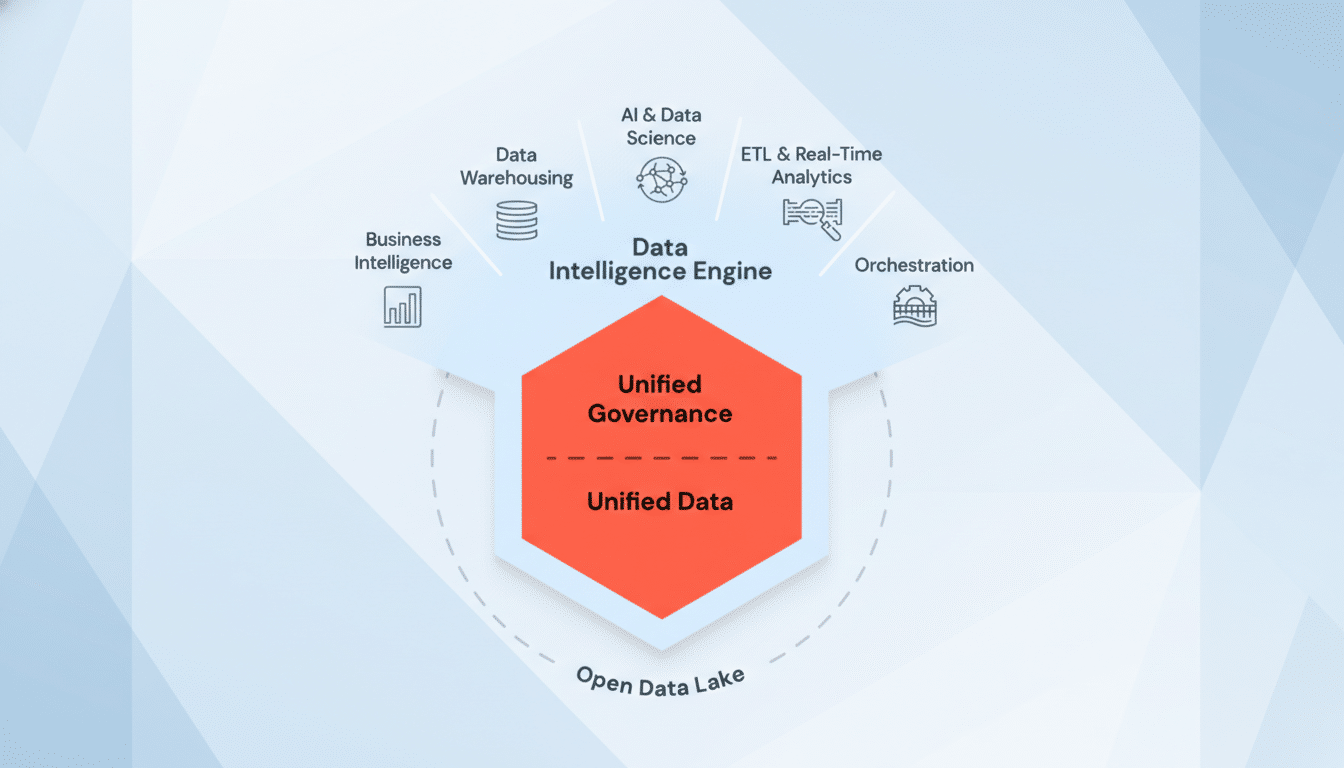

Genie and Lakebase Show Early Product-Led Pull

Databricks points to Genie, its LLM-driven interface, as a catalyst for platform consumption. Instead of crafting SQL or requesting a bespoke report, a manager can ask Genie why compute spend spiked last week, or what’s driving churn in a particular region, and get a narrative answer with cited data.

The company is also leaning into AI-native infrastructure with Lakebase, a database designed for agents. In its first eight months, Lakebase generated roughly twice the revenue the Databricks data warehouse produced at the same age. That is an early and imperfect comparison, but it points to notable momentum for an agent-first product.

Rivals are steering the same way. Snowflake has pushed LLM-powered services for its data cloud, Microsoft is threading Copilot across Fabric and Dynamics, and Google is fusing Gemini with BigQuery. Open-source models such as Llama have lowered the barrier to embedding conversational logic inside products, although governance, lineage, and security controls still have to stay intact.

Follow the Money Behind Databricks’ AI Bet

With billions in fresh equity and a credit line, Databricks signaled it won’t rush to public markets. The company wants to be insulated if capital tightens again. Investor appetite for AI remains intense, buoyed by forecasts such as McKinsey’s estimate that generative AI could add up to $4.4 trillion in annual economic value across functions from customer service to software engineering.

Still, a $134 billion valuation leaves little room for execution missteps. Revenue tied directly to AI products already tops $1.4 billion, but the bigger prize is converting AI-driven usage into durable, high-margin platform spend while keeping the costs of inference, fine-tuning, and data movement in check.

What This Shift Means for Today’s SaaS Vendors

The takeaway for incumbents is stark: make the interface optional. Ship LLM-first experiences, open rich APIs and events for agents, and expose granular permissions and audit trails so enterprises can trust autonomous workflows. Measure value by outcomes and task completion, not by seat counts alone.

Winners will also refactor data layers for retrieval-augmented generation, unify metadata for governance, and align pricing with AI usage patterns. Gartner, IDC, and others have noted that data quality, lineage, and security will decide whether AI upgrades scale beyond pilots. That work is unglamorous, but it’s where competitive advantage lives once the UI disappears.

SaaS won’t vanish; it will recede. If Ghodsi is right, the next era belongs to vendors whose products behave like reliable plumbing beneath fluent, trustworthy AI. In that world, the question matters more than the menu, and differentiation moves from clicks to outcomes.