A new data engineering course bundle is drawing attention for its straightforward promise: lifetime, subscription-free access to practical training in Python, Pandas, NumPy, Databricks, Spark ETL, and Delta Lake for $34.99. Rather than scattering skills across random tutorials, the seven-course package is structured to help learners build real-world pipelines and analysis workflows that employers actually use.

What the $34.99 data engineering bundle includes

The curriculum opens with modern Python fundamentals and numerical computing techniques in NumPy—vectorization, broadcasting, and memory-efficient arrays—so learners can write fast, readable code. It then moves into Pandas for cleaning, joining, reshaping, and analyzing datasets, including the kinds of pitfalls that trip up beginners (think chained assignment or misaligned indices).
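
To make those terms concrete, here is a minimal sketch of broadcasting and the two Pandas pitfalls mentioned above. It is not taken from the course material; the arrays, column names, and values are invented for illustration.

```python
import numpy as np
import pandas as pd

# Vectorization and broadcasting: center each column without a Python loop.
readings = np.array([[1.0, 10.0],
                     [2.0, 20.0],
                     [3.0, 30.0]])
col_means = readings.mean(axis=0)   # shape (2,)
centered = readings - col_means     # (3, 2) minus (2,) broadcasts across rows

# Misaligned indices: pandas aligns on labels, not on position.
a = pd.Series([1, 2, 3], index=[0, 1, 2])
b = pd.Series([10, 20, 30], index=[1, 2, 3])
total = a + b                       # labels 0 and 3 have no partner, so they become NaN

# Chained assignment: df[df["temp"] < 0]["temp"] = 0 may write to a temporary
# copy and leave df unchanged. A single .loc call targets the original frame.
df = pd.DataFrame({"city": ["A", "B"], "temp": [-5, 20]})
df.loc[df["temp"] < 0, "temp"] = 0
```

The `.loc` form selects rows and column in one step, which is why it avoids the ambiguity of two chained indexing operations.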

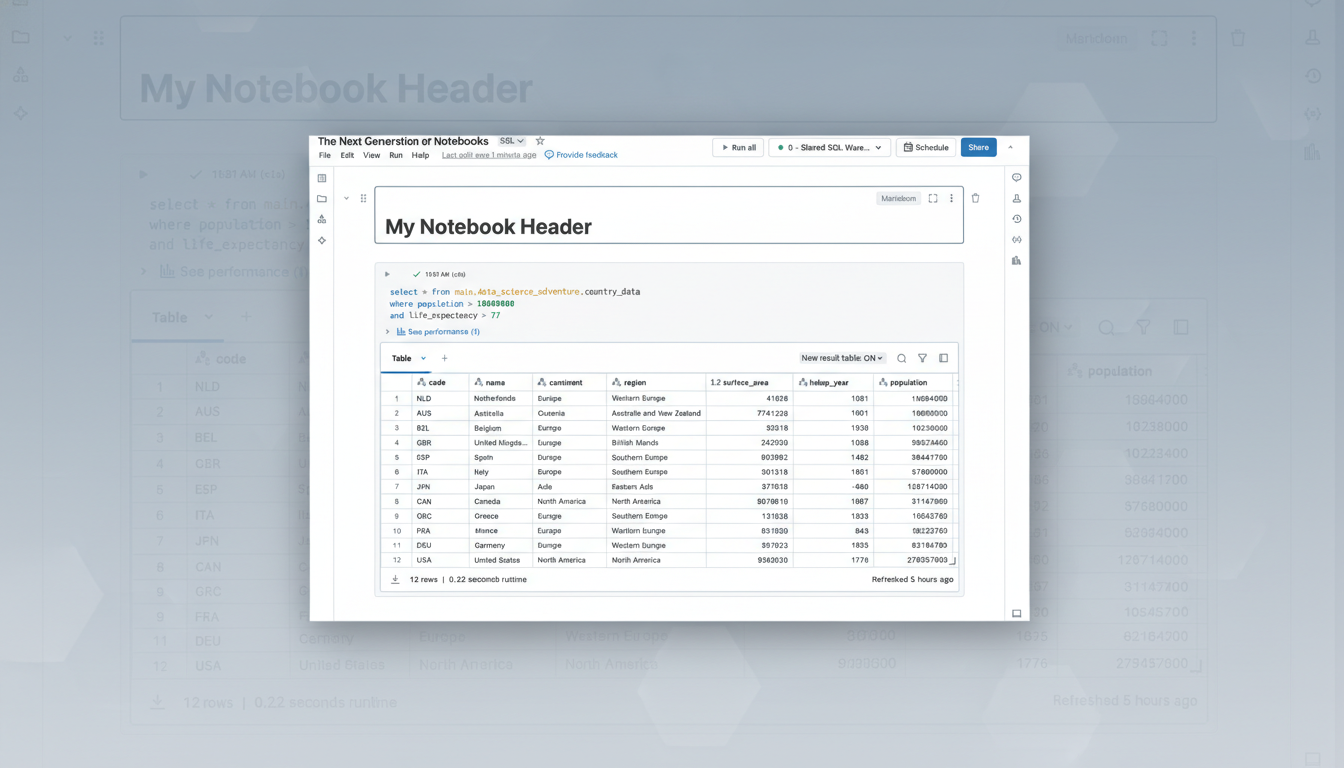

From there, the training shifts to exploratory data analysis and machine learning, applying models to real datasets to surface patterns, validate assumptions, and visualize results. The capstone of the bundle focuses on production-grade data engineering: Databricks notebooks and Jobs, Apache Spark ETL patterns, incremental ingestion, schema evolution, and reliability features delivered by Delta Lake, such as ACID transactions and time travel.

The package is device-friendly and comes with lifetime updates, so learners can revisit modules as tools evolve. The emphasis is on hands-on labs—building pipelines that ingest raw files, transform them for analytics, and deliver outputs ready for dashboards or downstream machine learning.

Why Databricks and Apache Spark skills matter for data jobs

Databricks has emerged as a widely adopted platform for unified analytics and AI, with enterprises consolidating data warehousing and data lake workloads in Lakehouse architectures. Apache Spark remains one of the most common engines for large-scale ETL, streaming, and feature engineering.

Employer demand backs this up. The World Economic Forum has consistently listed data and AI roles among the most in-demand jobs for the coming years, while LinkedIn’s skills reports show Python, SQL, and data engineering among the fastest-rising technical competencies. The US Bureau of Labor Statistics projects double-digit growth in data-centric roles this decade, with employment of data scientists alone expected to grow by around 35%, a signal that applied, pipeline-oriented skills remain in demand.

Crucially, hiring managers look for more than syntax knowledge. They want candidates who can design resilient pipelines: handling late-arriving data, deduplicating records, optimizing joins, partitioning tables, and enforcing quality controls. By centering Spark and Delta Lake, this bundle steers learners toward that production mindset.
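
Two of those concerns, deduplication and late-arriving data, can be sketched in plain Python. This is an illustrative sketch only, not Spark or Delta Lake code: the function names, the `id` and `event_time` fields, and the one-hour watermark are all assumptions made for the example.

```python
from datetime import datetime, timedelta

def dedupe_latest(records, key="id", ts="event_time"):
    """Keep one record per key: the one with the newest timestamp."""
    latest = {}
    for rec in records:
        current = latest.get(rec[key])
        if current is None or rec[ts] > current[ts]:
            latest[rec[key]] = rec
    return list(latest.values())

def split_late(records, watermark, ts="event_time"):
    """Separate on-time records from those older than the watermark."""
    on_time = [r for r in records if r[ts] >= watermark]
    late = [r for r in records if r[ts] < watermark]
    return on_time, late

batch = [
    {"id": 1, "event_time": datetime(2024, 1, 1, 12, 0), "status": "created"},
    {"id": 1, "event_time": datetime(2024, 1, 1, 12, 5), "status": "shipped"},
    {"id": 2, "event_time": datetime(2024, 1, 1, 9, 0), "status": "created"},
]

# Hypothetical policy: anything more than an hour older than 12:00 is late.
watermark = datetime(2024, 1, 1, 12, 0) - timedelta(hours=1)

clean = dedupe_latest(batch)
on_time, late = split_late(clean, watermark)
```

Late records are returned rather than dropped, so a pipeline can decide separately whether to reprocess, quarantine, or discard them.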

How it compares to monthly learning platform subscriptions

Many learning platforms rely on recurring fees, often $30–$60 per month or several hundred dollars per year. At $34.99 for lifetime access, the bundle costs about as much as a single month on many of those platforms, which makes it a comparatively low-risk option for learners who prefer to own material outright and progress at their own pace. The single track also spares learners from curating their own study plan, a common stumbling block that leads to “course hopping” with little to show in a portfolio.

Another advantage is coherence. The tools covered—Python, Pandas, NumPy, Databricks, Spark, and Delta Lake—are complementary. Learners can start small (a Pandas-based analysis) and scale the same logic to Spark for larger datasets, then persist results in Delta tables for reliability and downstream consumption.

Who will benefit from this bundle and what you can build

This path fits career switchers entering analytics, data analysts stepping into engineering, or software developers rounding out their data stack. Expect to build end-to-end projects—ingesting CSV and JSON files, performing transformations, implementing incremental upserts, and publishing clean Delta tables that analysts can query or feed into BI tools. A strong portfolio project might showcase a Spark ETL job orchestrated in Databricks Jobs, with data quality checks and versioned outputs to illustrate reproducibility.
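
The idea behind an incremental upsert can be shown without a cluster. The sketch below uses plain Python lists of dictionaries as a stand-in for a table; the `upsert` helper and the sample rows are hypothetical and only illustrate the merge semantics, not how Spark or Delta Lake implement them.

```python
def upsert(target, updates, key="id"):
    """Merge updates into target: overwrite matching keys, insert new ones."""
    merged = {row[key]: row for row in target}
    inserted = updated = 0
    for row in updates:
        if row[key] in merged:
            updated += 1
        else:
            inserted += 1
        merged[row[key]] = row
    return list(merged.values()), {"inserted": inserted, "updated": updated}

table = [{"id": 1, "city": "Oslo"}, {"id": 2, "city": "Lima"}]
increment = [{"id": 2, "city": "Quito"}, {"id": 3, "city": "Cairo"}]

table, stats = upsert(table, increment)

# Replaying the same increment leaves the table unchanged, which is the
# property that makes retries of a failed job safe.
replayed, _ = upsert(table, increment)
```

Keying the merge on a stable identifier is what lets a job process only new or changed rows instead of rewriting the whole table on every run.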

For those pursuing certifications, the bundle aligns with common objectives of entry-level cloud data engineering exams and Databricks associate-level credentials, offering the practice needed to translate concepts into working systems. While it is not a substitute for official prep, it provides meaningful repetition across real workflows.

What to know before you start this data engineering path

You’ll get the most from the material with basic Python under your belt and a willingness to run notebooks regularly. Expect to work with a cloud environment—Databricks offers accessible options—so you can execute Spark jobs, manage clusters, and explore Delta Lake features like time travel and optimized writes. Plan to document everything in a public repository; hiring teams increasingly use GitHub portfolios to evaluate real-world competency.

One pragmatic tip: pair the coursework with small, meaningful datasets that matter to you—public health stats, logistics feeds, or open city data. This turns abstract lessons into domain knowledge, which is often the difference between a passable demo and a compelling interview story.

Bottom line: at $34.99, this subscription-free bundle offers a focused, project-driven path into data engineering. If you’ve been waiting for a practical way to turn Python skills into production-ready pipelines, this is a timely place to start.