Lawmakers are set to question Discord, Reddit, Twitch and Valve about whether their platforms hosted content tied to, or otherwise contributed to, the shooting of conservative commentator Charlie Kirk. House Oversight leadership has asked the companies’ top executives to testify at a hearing on online radicalization, a sign of new pressure to examine how social and gaming platforms detect, deter and disrupt extremist activity.

Investigators are continuing to build a portrait of the suspect’s online footprint, and state officials have said the individual has continued to be uncooperative. Still, congressional leaders say the largest platforms need to account for how their systems, policies and product designs can be weaponized, and what they will do to stop it.

Why these four platforms are being targeted

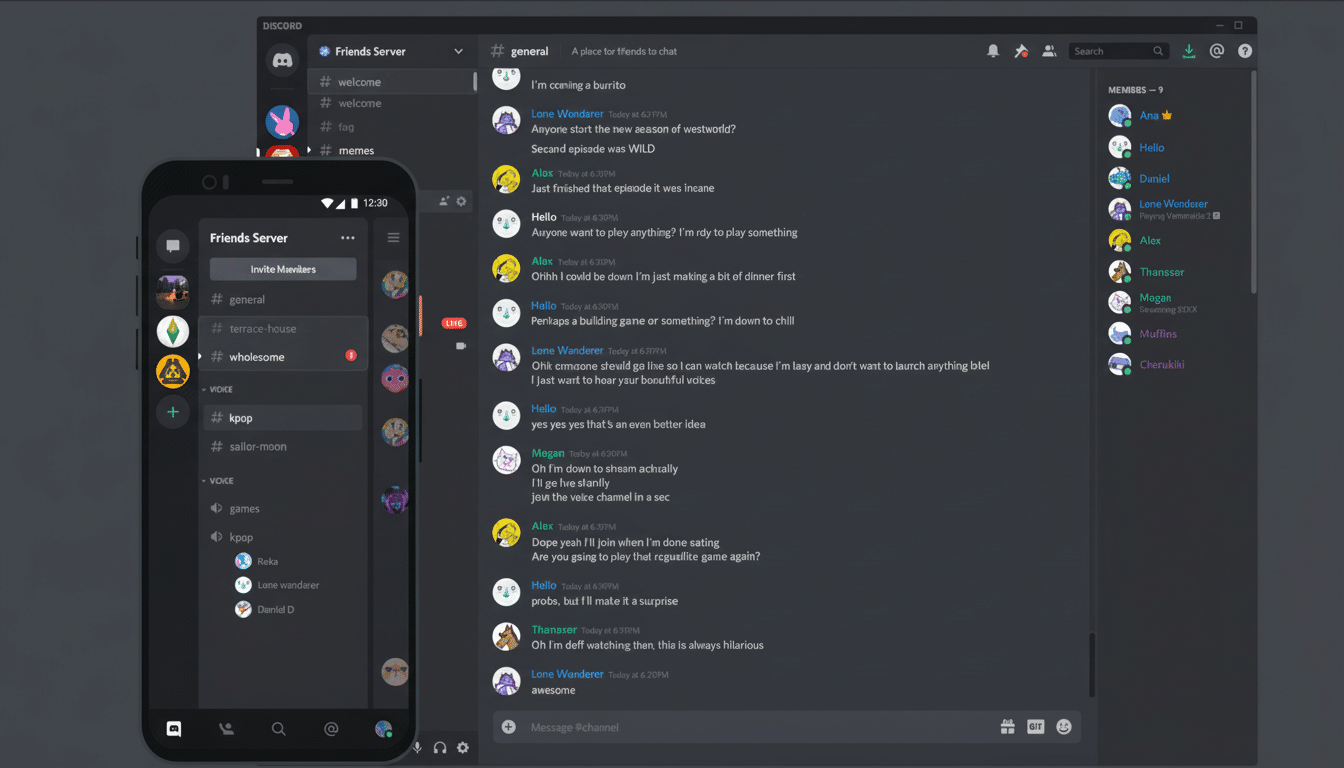

Discord hosts persistent group chats and private servers that can operate at the fringes of moderation, a model that has surfaced in previous high-profile investigations. Leaked messages from organizers of the 2017 Charlottesville rally, later published by activists, revealed how closed chats were used to coordinate real-world action. In the wake of the 2022 Buffalo supermarket shooting, investigators reviewed the suspect’s diary-like Discord logs, illustrating how the platform has served as a planning and broadcasting hub for some perpetrators.

Reddit’s community structure is a double-edged sword: volunteer moderators can uphold norms effectively, but open, interlinked subreddits can also facilitate radicalization pathways. The company has quarantined and banned communities it says break rules on hate and violence, as when it banned r/The_Donald in 2020. Members of Congress are likely to point to such actions as they probe whether current enforcement is enough.

Meanwhile, Twitch is at the heart of the livestream problem: speed. The Buffalo gunman streamed the attack on Twitch; although the platform cut off the feed quickly, copies spread to other sites within minutes. Live video collapses the response window, raising difficult questions about proactive detection, incentives for good behavior, lower-latency moderation tools and protocols for coordinated takedowns across platforms.

Valve’s Steam platform has been less frequently in Congress’s crosshairs, but researchers and watchdog groups have repeatedly identified extremist symbols and content glorifying violence in Steam profiles, groups and workshop items over the years. As one of the world’s largest PC gaming ecosystems, Steam raises the broader issue of moderating vast user-generated spaces in which harmful content and innocuous fandom can mix.

The questions that are likely to loom over the hearing

Lawmakers are likely to press on five fronts: recommendation systems, private or semi-private spaces, real-time video risk, cooperation with law enforcement and transparency.

First, recommendation engines and discovery mechanics — feeds, search results, “related communities” features and creator suggestions — can inadvertently funnel users into more extreme content. Members will look for specific proof of guardrails, in the form of downranking, friction prompts or explicit blocks on some queries and links.

Second, enforcement is challenging in private servers and invite-only groups. What balance should be struck in confronting harmful coordination without infringing on legitimate private speech? Expect pointed questions about automated signals, user reporting flows and thresholds for server takedowns.

Third, the threat from live video is still acute. Congress will probably look at whether platforms such as Twitch use pre-stream checks, behavior signals or geofenced triggers for serious incidents, and how fast they can alert peer platforms to block reuploads elsewhere.

Fourth, law enforcement coordination is a longstanding flashpoint: platforms need to address credible threats quickly, yet they face scrutiny over how they balance due process and privacy. Members will ask how companies escalate reports of imminent harm, what their average response times are and what legal process they require.

Finally, transparency. Although these firms issue enforcement reports, the detail of and access to those reports vary widely. Policymakers will probably ask for more granular data on how much extremist content is removed, how often it reappears and how policy changes affect real-world outcomes.

How platforms claim they police extremism

Discord cites dedicated teams focused on safety, server-level tools such as AutoMod and partnerships with groups that monitor violent extremism. The company has previously said it can take down entire servers, not just individual posts, when it observes orchestrated harm.

Reddit emphasizes its tiers of enforcement: sitewide policies banning hate and violence, rules at the level of individual communities and volunteer moderators who wield authority backed by processes for mediation. Quarantines, which restrict reach and add warnings, are an intermediate step before bans.

Twitch has been investing in proactive detection and creator tools, like Shield Mode, which enables streamers to quickly harden their channels during harassment campaigns. The company has also emphasized its rapid-response mechanisms to cut off streams showing real-world violence and its work with industry partners on hash-sharing intended to prevent reuploads.

Valve’s user guidelines prohibit hate and the glorification of real-world violence, and its Steam platform offers profile restrictions as well as user reporting. But critics argue that the firm reveals relatively few specifics about how enforcement plays out, a gap that lawmakers eager for uniformity across the sector may target.

Context: the wider online harm landscape

Research from civil society highlights the scale of the challenge. The Anti-Defamation League has long reported on harassment and extremist content in online gaming, noting that large, social-by-design platforms are ripe for exploitation by bad actors. The Department of Homeland Security’s threat assessments have similarly cautioned that violent extremists use mainstream platforms to recruit, raise money and exchange operational information.

Congress is not looking only at company practices, however; it is also weighing broader policy levers, including stronger transparency obligations, cross-platform crisis-response protocols and clarification of how existing liability protections should apply when algorithms promote harmful content. Any legislative change will face challenges, but the hearing is shaping up as a test of whether voluntary measures have kept pace with real-world risk.

The question is not whether these companies can ever fully stamp out extremist misuse of their platforms (they cannot) but whether they will design their products and enforce their rules in ways that minimize the likelihood that online activity leads directly to offline harm.

For families searching for answers after the Kirk shooting, that’s more than an academic distinction.