AI is moving out of pilot projects and into profit engines, but the bigger story may be how leaders are boosting revenue without sidelining their teams. Executives across industries now frame AI as a copilot: something that lifts people up rather than an autopilot that replaces them. The change is not just philosophical. It is associated with better results, quicker adoption, and less risk of unintended consequences.

McKinsey estimates that generative AI could add $2.6 trillion to $4.4 trillion a year in economic value, yet the firms realizing that value are those pairing models with human judgment, domain expertise, and transparent governance.

In MIT and Stanford research on AI in customer support, productivity rose 14% on average, and by more than 30% for the least experienced workers, when workers used AI as a mentor rather than an enforcer. That’s the model: augmentation at scale.

1. Get People in the Picture from Day One

Design decision rights before models go live. Map when humans need to double-check outputs, when AI can operate independently, and when issues should escalate. Use risk tiers: low-risk content support can be automated, for example, but pricing, compliance, or safety decisions may require human-in-the-loop sign-off. This builds trust and speeds up adoption because teams know where they fit.
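
The tiering described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: the task names, the three tiers, the review actions, and the `escalate_below` confidence threshold are all hypothetical choices made for the example.

```python
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical mapping of task types to risk tiers (illustrative only).
TASK_TIERS = {
    "content_draft": Tier.LOW,
    "customer_reply": Tier.MEDIUM,
    "pricing_change": Tier.HIGH,
    "compliance_review": Tier.HIGH,
    "safety_decision": Tier.HIGH,
}

# Review step required before an AI output in each tier is acted on.
TIER_ACTIONS = {
    Tier.LOW: "auto_approve",
    Tier.MEDIUM: "human_spot_check",
    Tier.HIGH: "human_signoff",
}


def route(task_type: str, model_confidence: float, escalate_below: float = 0.6) -> str:
    """Return the review step for an AI output of the given task type."""
    # Unknown task types default to the strictest tier.
    tier = TASK_TIERS.get(task_type, Tier.HIGH)
    action = TIER_ACTIONS[tier]
    # A low-confidence output escalates an otherwise automated decision.
    if action == "auto_approve" and model_confidence < escalate_below:
        return "human_spot_check"
    return action
```

Defaulting unknown task types to the strictest tier is one way to make the safe path the easy path; a real deployment would likely keep this mapping in reviewed configuration rather than in code.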

Real-world example: Colgate-Palmolive developed an in-house AI hub with compulsory training, guardrails, and a global network of AI ambassadors. Workers test assistants in a controlled sandbox and exchange their best use cases, while people remain responsible for the results. The outcome is faster experimentation without sacrificing control.

2. Upskill for Roles and Responsibilities in the AI Era

Successful programs invest in the workforce as much as they do in models. Train frontline teams in prompt design, data literacy, and critical thinking about AI outputs. Create new roles, such as model owners, AI risk leads, red-teamers, and UX researchers, to keep the system usable, safe, and aligned with business goals.

The World Economic Forum’s Future of Jobs report projects significant skill shifts by the middle of the decade, with most workers requiring retraining. The investment pays off in real terms: GitHub’s research found that developers equipped with AI coding assistants get their work done up to 55 percent faster, with the largest gains going to newer developers. The same story plays out in marketing, finance, and operations when teams become adept at co-creating with AI.

3. Build Guardrails and Data Foundations

AI scales only as fast as your governance and data quality allow. Use the NIST AI Risk Management Framework and the ISO/IEC 42001 management system standard to codify policies for privacy, fairness, security, and accountability. Set up model registries, human-in-the-loop plans, and incident-response mechanisms early on.

On the data front, that means investing in a clean, cataloged corpus with documented lineage and access controls. Use synthetic data and anonymization in sensitive scenarios, and have red teams test for bias and jailbreaks. Robust foundations minimize rework, decrease compliance risk, and foster safe reuse of components across teams.

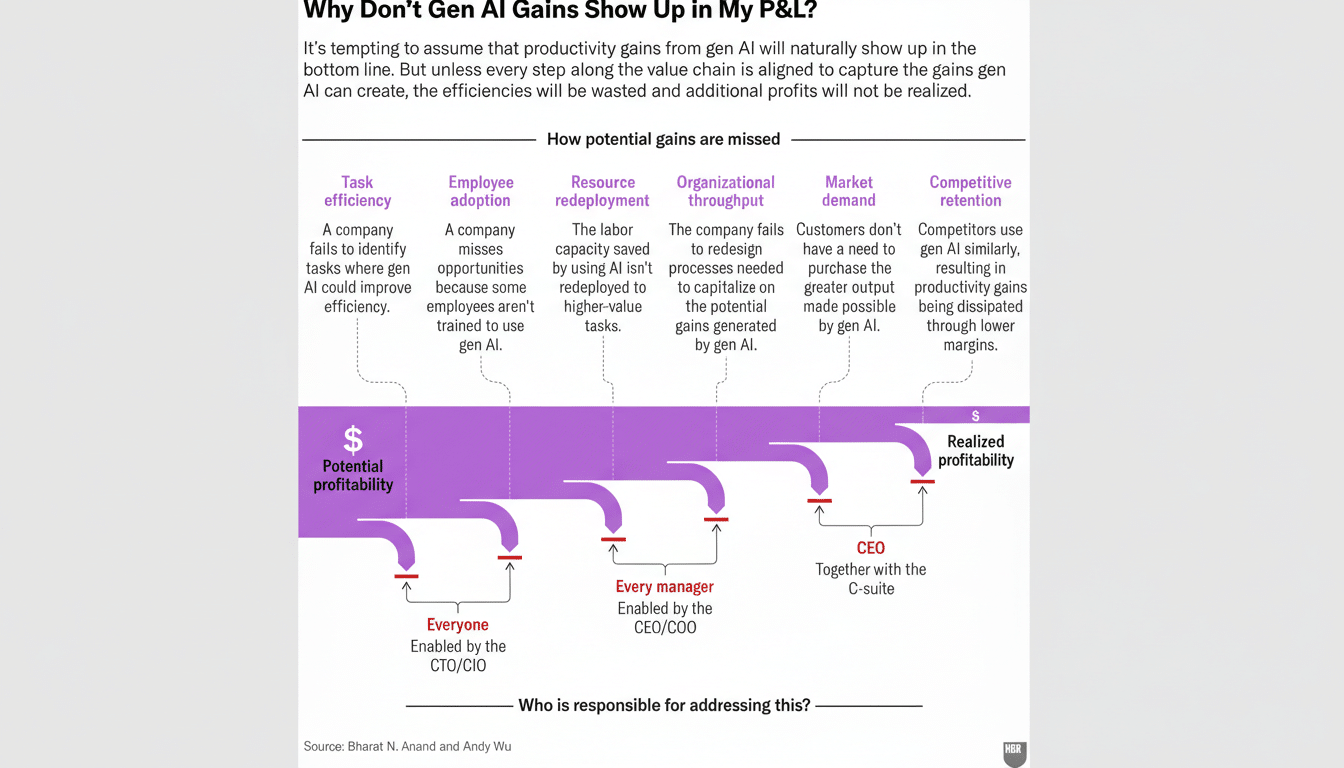

4. Target Use Cases That Have Measurable ROI

Start where AI can magnify human strengths and connect directly to P&L. Typical high-impact opportunities include pricing and promotion analytics, demand forecasting, customer service augmentation, marketing creative testing, and supply chain optimization. Set baselines and track impact with simple metrics like revenue lift, cost per ticket, time-to-insight, and error rates.
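
As a rough sketch of what "set baselines and track impact" can mean in practice, the snippet below computes the relative change of each metric against its baseline. The metric names come from the paragraph above; the numbers and the function names `relative_change` and `impact_report` are hypothetical and exist only for illustration.

```python
def relative_change(baseline: float, current: float) -> float:
    """Fractional change from baseline; 0.10 means a 10% increase."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline


def impact_report(baseline: dict, current: dict) -> dict:
    """Relative change per metric, for metrics measured in both periods."""
    return {
        name: relative_change(baseline[name], current[name])
        for name in baseline
        if name in current
    }


# Hypothetical numbers for illustration only, not results from any study.
BASELINE = {"revenue": 200_000.0, "cost_per_ticket": 8.0, "error_rate": 0.04}
CURRENT = {"revenue": 210_000.0, "cost_per_ticket": 6.0, "error_rate": 0.03}

if __name__ == "__main__":
    for name, change in impact_report(BASELINE, CURRENT).items():
        # Positive is good for revenue; negative is good for cost and errors.
        print(f"{name}: {change:+.1%}")
```

A before-and-after comparison like this does not prove the AI caused the change; a holdout group or staged rollout gives a cleaner read where that is feasible.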

Take logistics: UPS’s long-running efficiency drive shows that algorithmic routing combined with human operational know-how can cut miles, fuel consumption, and emissions while raising on-time performance.

In customer service, the MIT/Stanford study shows that AI guidance leads to shorter resolution times and higher customer satisfaction, especially when human agents make the final judgment calls on edge cases.

5. Scale via Platforms, Not One-Off Pilots

Pilots don’t move the needle unless they scale. Build a common AI platform (APIs, reusable prompts, feature stores, monitoring, and evaluation pipelines) so teams can deploy new use cases quickly and with confidence. Treat successful solutions as products with roadmaps, SLAs, and owners, not as experiments that are bound to disappear.

Build communities of practice and internal marketplaces where employees publish use cases, prompts, or components that others can adopt. One approach is the ambassador model, which many companies pair with lightweight “model cards” that record purpose, limitations, and human-review steps. This is how you avoid a dozen disconnected tools and build resilience instead.
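
A lightweight model card of the kind mentioned above could be as simple as a small record with a completeness check. The sketch below is one possible shape, assuming a hypothetical `ModelCard` class; the three recorded fields (purpose, limitations, human-review steps) come from the text, while `name`, `owner`, and the example values are invented for illustration.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class ModelCard:
    name: str
    owner: str
    purpose: str
    limitations: list[str] = field(default_factory=list)
    human_review_steps: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of fields left empty, so incomplete cards can be flagged."""
        return [key for key, value in asdict(self).items() if not value]


# Hypothetical example entry for an internal marketplace.
card = ModelCard(
    name="support-reply-assistant",
    owner="customer-care-platform",
    purpose="Draft replies to routine support tickets for agent review.",
    limitations=["Not for refund or legal decisions"],
    human_review_steps=["Agent edits and approves every reply before sending"],
)
```

A marketplace could refuse to list any component whose card returns a non-empty `missing_fields()`, which keeps documentation from lagging behind deployment.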

What Good Looks Like: People-First AI Done Right

People-first AI is not slower; it is smarter. It combines controlled experimentation, relentless skill-building, and clearly focused business objectives. Practical, risk-conscious organizations that hardwire human oversight, invest in training, and scale through platforms turn AI from a novelty into a growth engine while keeping accountability where it belongs. The next round of winners won’t be those with the most models, but those whose people know exactly what to do with them.