AI code review startup CodeRabbit has raised a $60 million Series B that values the two-year-old company at $550 million, underscoring investor belief that automated review will become a standard part of modern software delivery. The company says revenue is growing roughly 20% month over month and that it has topped $15 million in annual recurring revenue (ARR).

The round was led by Scale Venture Partners and NVentures, the venture arm of Nvidia, with participation from returning backers such as CRV. That brings CodeRabbit's total funding to $88 million and places it among the better-funded independent players in AI-assisted code quality.

A fast-rising bet on AI code review adoption

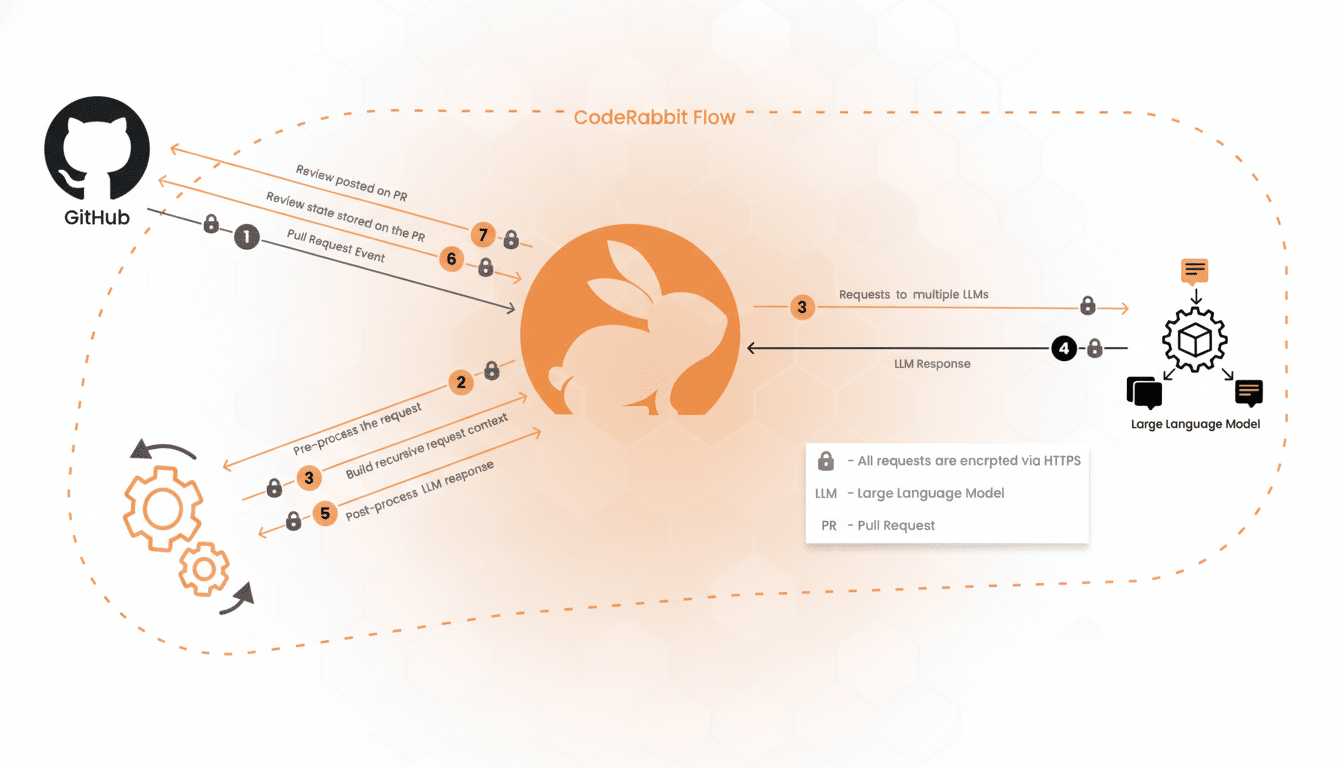

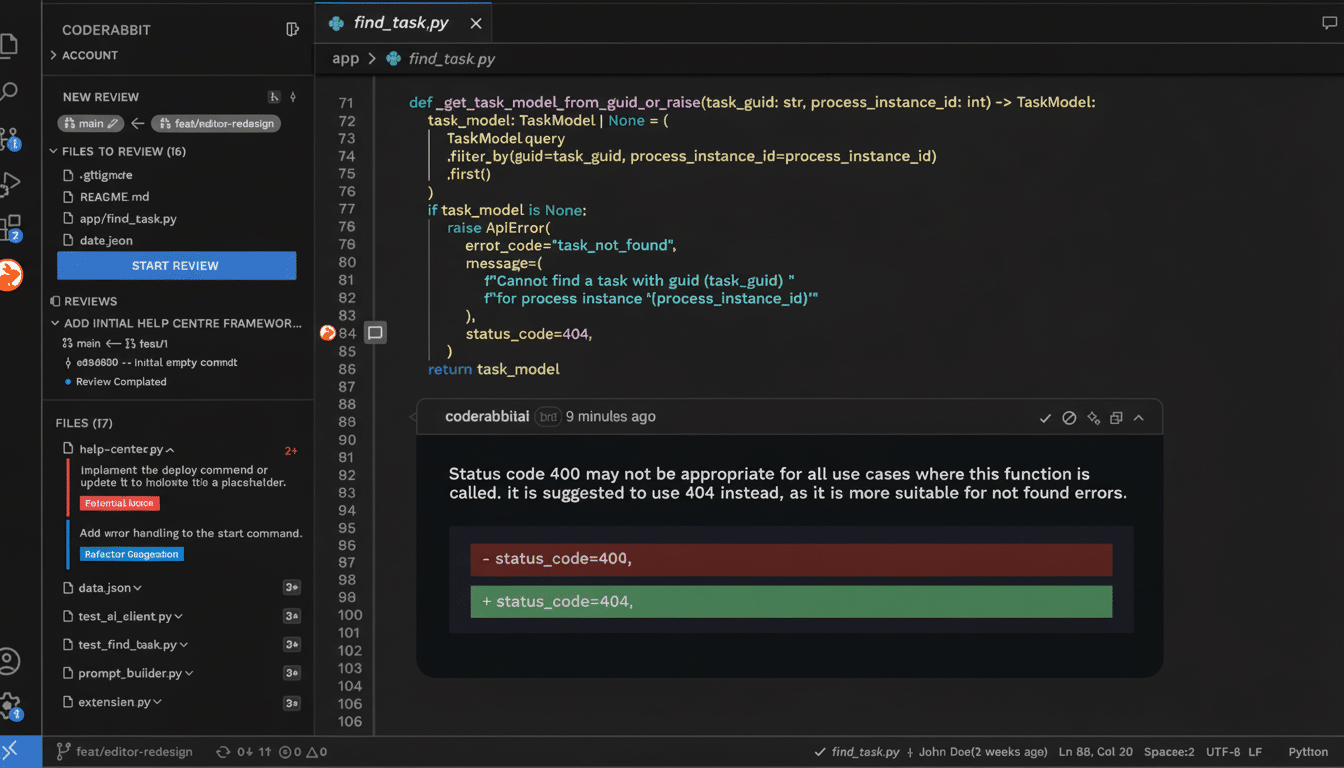

CodeRabbit's pitch is straightforward: as AI coding assistants crank out more and more code, the bottleneck has moved from writing to reviewing. Pull requests wait on feedback from senior team members, who also act as sounding boards on how best to approach software design problems. The startup's model ingests context from a team's codebase, reviews pull requests, flags defects and risky patterns, and then suggests specific fixes.

Customers reportedly include Chegg, Groupon and Mercury, among more than 8,000 businesses. At a rounded $30 per seat per month, back-of-the-envelope math implies tens of thousands of active seats behind an eight-figure ARR. The company says teams can cut the manual code-review burden by up to 50 percent, which should free senior engineers to spend more time on architecture, performance and security rather than line-by-line triage.
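The seat estimate above can be checked with a few lines of arithmetic. This is a rough sketch that assumes all ARR comes from seat licenses at a flat $30 per seat per month, with no discounts, free tiers or usage-based revenue; the function name `implied_seats` is purely illustrative.

```python
# Back-of-the-envelope check of the seat estimate.
# Assumption: all ARR comes from seat licenses at a flat monthly price,
# with no discounts, free tiers or usage-based revenue.

ARR_USD = 15_000_000        # ARR figure the company reported having topped
PRICE_PER_SEAT_MONTH = 30   # rounded per-seat monthly price used above


def implied_seats(arr: float, price_per_seat_month: float) -> int:
    """Paid seats needed to produce `arr` at a flat monthly seat price."""
    annual_price_per_seat = price_per_seat_month * 12
    return round(arr / annual_price_per_seat)


seats = implied_seats(ARR_USD, PRICE_PER_SEAT_MONTH)
print(f"Implied paid seats: ~{seats:,}")        # Implied paid seats: ~41,667
print(f"Digits in ARR: {len(str(ARR_USD))}")    # 8 digits, i.e. eight-figure ARR
```

Under these assumptions, roughly 41,700 seats spread across more than 8,000 customers works out to about five seats per customer on average; real pricing tiers would shift the number.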

The timing tracks developer behavior. Most professional developers now use AI tools during their workweek, according to surveys from both Stack Overflow and JetBrains. GitHub's findings indicate that AI suggestions can significantly increase throughput but can also introduce subtle bugs if left unreviewed. That mix of more output and uneven reliability is fertile territory for specialized review systems that understand a repository's history, its coding conventions and the risk profile of its dependencies.

Investors’ take on the market for AI code review

The round effectively values CodeRabbit at more than 30 times current ARR, a premium multiple but not an unusual one for high-growth, infrastructure-like AI companies. Scale Venture Partners has previously invested in developer productivity platforms, and NVentures' involvement signals broader strategic interest from the AI hardware and tooling ecosystem.
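The "more than 30 times" figure follows directly from the two headline numbers. A minimal sketch, assuming the $550 million valuation and exactly $15 million of ARR; because the company says ARR has topped $15 million, the true multiple is somewhat lower than this upper bound. The helper name `revenue_multiple` is illustrative only.

```python
# Rough check of the valuation-to-ARR multiple.
# Assumptions: $550M is the valuation implied by the round, and ARR is
# exactly $15M. Since ARR has "topped" $15M, this is an upper bound.

VALUATION_USD = 550_000_000
ARR_USD = 15_000_000


def revenue_multiple(valuation: float, arr: float) -> float:
    """Valuation divided by annual recurring revenue."""
    return valuation / arr


multiple = revenue_multiple(VALUATION_USD, ARR_USD)
print(f"Valuation / ARR: {multiple:.1f}x")  # Valuation / ARR: 36.7x
```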

The company's founder and CEO, Harjot Gill, is a serial entrepreneur whose earlier ventures include the application observability startup Netsil, which he sold to Nutanix, and FluxNinja. That background, rooted in reliability engineering, maps neatly onto what ails code review at scale: regressions that slip through, performance budgets that go unenforced and security holes that sneak in before merge.

How it compares with competitors in this space

Competition is heating up. Graphite recently landed a large Series B led by Accel, while Greptile has reportedly been in talks with Benchmark about further funding. General-purpose AI development platforms such as Cursor and Claude Code also include review capabilities. CodeRabbit's bet is that a dedicated reviewer, optimized for repository-level context and policy enforcement, will outcompete these bundled assistants over time, particularly on large codebases with gnarly dependency graphs.

The deciding factors will be precision and trust. Developers have little patience for noisy tools; sustained use depends on keeping false positives low and delivering actionable, diff-friendly recommendations. External research has warned about security pitfalls in LLM-generated code, with studies by Stanford and MIT finding higher vulnerability rates without expert review. Vendors that can credibly show lower bug escape rates and shorter mean time to merge will have an advantage.

What the funding enables for CodeRabbit’s growth

Expect investment along three vectors: deeper codebase understanding, enterprise readiness and ecosystem integrations. That presumably includes more advanced semantic analysis and memory of repository history, on-premises or VPC deployment options for regulated industries, and tighter hooks into GitHub, GitLab and CI/CD pipelines that turn review insights into automated guardrails.

For companies evaluating AI review tools, the KPIs remain the same: shorter review cycles, fewer post-merge defects and measurable savings in senior engineers' time, tracked with actual time data. If CodeRabbit's claimed figure, a near halving of human review load, holds up across complex, polyglot monorepos, the return side of ROI strengthens quickly.

The larger point is clear: as AI speeds up code generation, companies are reinvesting in quality gates and governance. With fresh capital and rising traction, CodeRabbit aims to become a core layer of that emerging stack, less about writing code for developers and more about ensuring that what ships is correct, secure and maintainable.