Claude Code was never supposed to become a product. It started as a scrappy prototype within Anthropic, and it has gone on to change the way engineers plan, write and ship software. With broad web access now rolling out to subscribers, agentic coding is going from specialist tooling to something anyone with a laptop or phone can reach, without needing six commands in a terminal.

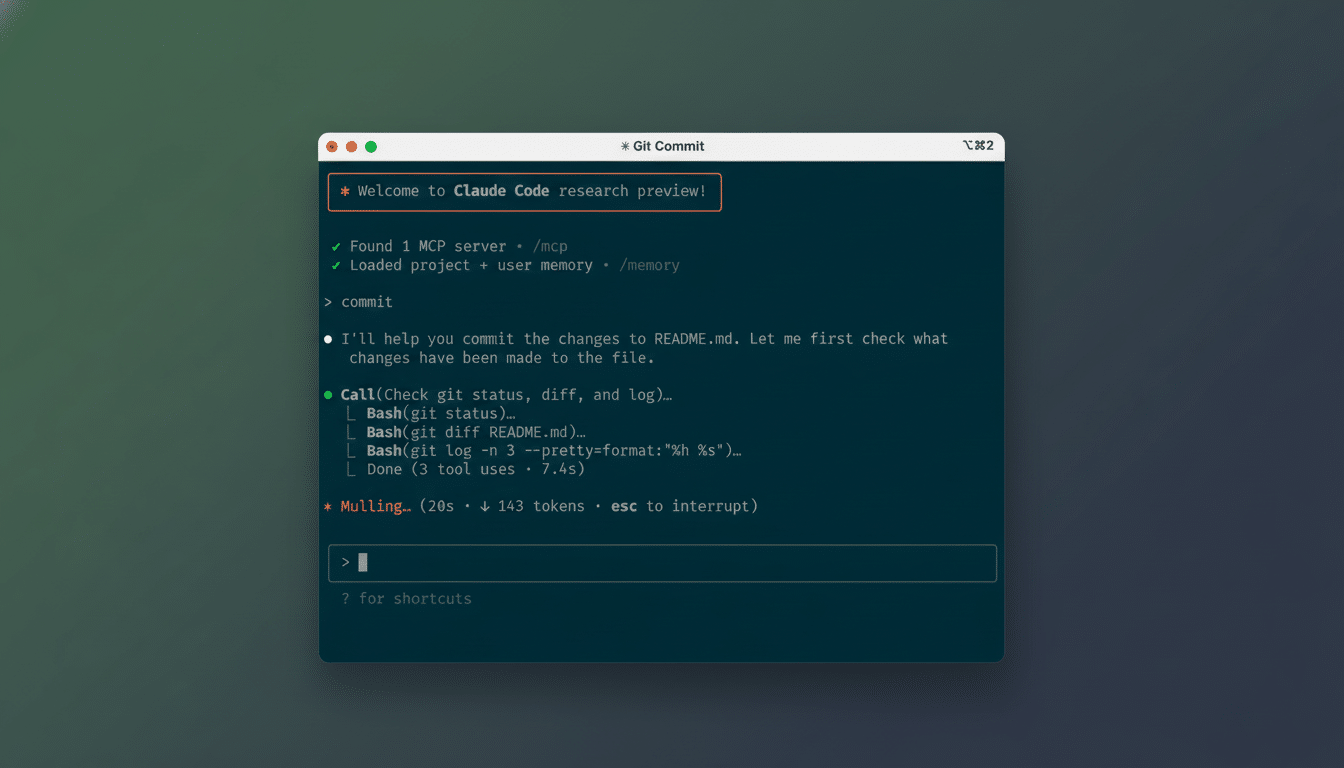

Unlike older-generation “autocomplete” assistants, Claude Code can take a goal, select tools, edit files, run tests and keep going until the work is done. That transition, from suggestion engine to autonomous collaborator, is also why an accidental lab project now shows up on engineers’ desks and in their stand-ups.

From Lab Experiment to Everyday Engine Room

Adoption inside Anthropic, according to the company, took off from day one. The team cites internal metrics showing that a majority of employees rely on it daily, with near-ubiquitous weekly use. That kind of stickiness is uncommon for developer tools, and it points to a more telling shift: the agent wasn’t a fad; it has become the normal workflow for many tasks.

Outside Anthropic, companies such as Salesforce, Uber and Deloitte have used Claude Code for everything from bug fixes and cross-repo refactoring to routine code reviews, and even for spinning up tasks in systems like Asana. The appeal is speed and low friction: engineers can line up work from a browser in the morning and review diffs at their desks later on, shaving cycle time without adding meetings.

What Agentic Coding Truly Entails in Real Use

“Agentic” is more than marketing. In practice, it means the model uses tools and persists with them: it can open files, run linters and tests, call external APIs, adjust its plan (retrying or changing course) and iterate until some threshold is met. In a publicly posted case study with Rakuten, Claude Code worked autonomously for seven hours straight, a far cry from the one-shot prompts and line-by-line suggestions of earlier tools.
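The loop described above (check a goal, act, check again, stop at a threshold or a budget) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Claude Code is implemented: `run_agent`, `AgentRun`, `tests_pass` and `fix_one` are hypothetical names, and the “tests” are simulated with a set of failing test names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentRun:
    """Record of one session: the actions taken and whether the goal was met."""
    steps: list = field(default_factory=list)
    succeeded: bool = False


def run_agent(check: Callable[[], bool],
              act: Callable[[], str],
              max_steps: int = 10) -> AgentRun:
    """Alternate between checking the goal and acting, within a step budget."""
    run = AgentRun()
    for _ in range(max_steps):
        if check():               # goal met: stop iterating
            run.succeeded = True
            break
        run.steps.append(act())   # otherwise take one more action and log it
    else:
        # Budget exhausted without an early exit: do one final check.
        run.succeeded = check()
    return run


# Simulated project state (hypothetical): two tests are failing.
failing = {"test_login", "test_logout"}


def tests_pass() -> bool:
    return not failing


def fix_one() -> str:
    name = sorted(failing)[0]     # pick a failing test deterministically
    failing.discard(name)         # pretend the agent patched the code
    return f"patched {name}"


result = run_agent(check=tests_pass, act=fix_one, max_steps=5)
print(result.succeeded, result.steps)
```

The step budget is the important design choice here: a real agent needs some bound on time, cost or iterations so that a goal it cannot reach ends in a report to a human rather than an endless retry.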

None of this excuses teams from oversight. Anthropic and many of its customers keep a human in the loop for every change that lands, with agents performing the first pass of review and a human making the final decision. The approach is consistent with broader research: GitHub has found that AI pair programming can greatly accelerate task completion while leaving plenty of room for human judgment, and McKinsey Global Institute estimates that a substantial portion of software work can be automated or sped up with generative AI. The rewards are real, but so are the risks (hallucinated code, dependency sprawl and security misconfigurations), so sandboxing, permissions and audit trails are a must.
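One way to picture permissions and audit trails working together is a gate that every tool request passes through. The sketch below is illustrative only: the tool names, the `ALLOWED_TOOLS` and `NEEDS_HUMAN_APPROVAL` sets and the `request_tool` function are assumptions made for this example and do not describe any vendor’s actual controls.

```python
import json
import time
from typing import Optional

# Hypothetical policy: some tools are always allowed, some need a named human.
ALLOWED_TOOLS = {"read_file", "run_tests"}
NEEDS_HUMAN_APPROVAL = {"write_file", "deploy"}

# Append-only record of every request, whether granted or not.
audit_log: list = []


def request_tool(tool: str, args: dict, approved_by: Optional[str] = None) -> bool:
    """Decide whether a tool call may proceed, and record the decision."""
    if tool in ALLOWED_TOOLS:
        decision = "allowed"
    elif tool in NEEDS_HUMAN_APPROVAL and approved_by:
        decision = "approved"
    else:
        decision = "denied"       # unknown tools are denied by default
    audit_log.append(json.dumps({
        "ts": time.time(),
        "tool": tool,
        "args": args,
        "decision": decision,
        "approved_by": approved_by,
    }))
    return decision != "denied"


print(request_tool("run_tests", {"path": "tests/"}))       # low-risk tool
print(request_tool("deploy", {"env": "prod"}))             # no approver given
print(request_tool("deploy", {"env": "prod"}, "alice"))    # human signed off
```

Denying by default and logging refusals as well as approvals are the two choices that matter: the first keeps an unlisted tool from slipping through, and the second means the trail shows what the agent attempted, not only what it was allowed to do.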

Why Web Access Changes the Stakes for Coding Agents

Making Claude Code available in any browser lowers the barrier for anyone adjacent to engineering. PMs, analysts and leaders who don’t live in the terminal can now orchestrate tasks, triage issues and review diffs in a tool they already use. That has implications for onboarding as well: Anthropic says new hires, including managers who haven’t written code in many years, are productive from day one with an agent at their side.

This doesn’t turn everyone into a software engineer, but it does expand the pool of contributors. The pattern follows previous waves of low-code tools: experts still set the architecture and guardrails, but more people can take part in the flow of work. The browser is merely the on-ramp.

Adoption, Competition, and the Numbers

The public release of Claude Code jolted the market. OpenAI is pushing ahead with its own coding agents, Microsoft’s Copilot is spreading across the stack, and Replit’s agent-centric approach keeps inching toward end-to-end automation. Healthy competition is accelerating “describe it and ship it” development across platforms.

Enterprises care about outcomes, not demos. Internal briefings and industry reporting suggest double-digit gains in developer velocity, shorter lead times and increased review coverage when agents handle the toil. Stack Overflow’s surveys of professional developers show growing interest in adopting AI assistants, and DORA-style metrics (lead time for changes, change failure rate, deployment frequency) are emerging as the scoreboard for AI-enabled teams. The best results come when organizations pair agents with rigorous testing, tracing and rollback policies.
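For readers who have not worked with these metrics, the three named above can be computed from a plain list of deployments. The sketch below uses made-up sample data and hypothetical names (`Deployment`, `dora_summary`); using the median for lead time is one reasonable choice for this example, not a prescribed definition.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime   # when the change was committed
    deployed_at: datetime    # when it reached production
    caused_failure: bool     # whether it led to an incident or rollback


def dora_summary(deploys: list, window_days: int) -> dict:
    """Summarize lead time, change failure rate and deployment frequency."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600
        for d in deploys
    ]
    return {
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": sum(d.caused_failure for d in deploys) / len(deploys),
        "deploys_per_day": len(deploys) / window_days,
    }


# Made-up sample: four deployments over a two-day window, one of which failed.
start = datetime(2024, 1, 1, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=2), False),
    Deployment(start, start + timedelta(hours=4), False),
    Deployment(start, start + timedelta(hours=6), True),
    Deployment(start, start + timedelta(hours=8), False),
]
summary = dora_summary(sample, window_days=2)
print(summary)
```

Tracking the same summary before and after agents are introduced is what turns a claim about velocity into a comparison that can be checked.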

Where It Goes Next for Agentic Coding and Teams

The short-term roadmap is more autonomy and more coordination. Look for longer-lived sessions that can survive context switches, as well as multi-agent “swarms” that divide up the work, from scaffolding a plugin to fixing a flaky test to updating documentation. One prompt, in effect, becomes a team with a plan.

For businesses, the differentiators will be trust and control: provenance stamped into every diff, tests generated alongside the code, dependency updates vetted against policy, and end-to-end logs fit for an audit. Frameworks from bodies such as NIST and established controls like SOC 2 and ISO/IEC 27001 will probably shape how shops roll out agentic coding at scale.

The irony is that Claude Code was never planned as a product. But by turning intent into shipped changes (and by meeting people where they already work, in the browser), it has quietly redrawn the line between plans and implementation. The future of coding now looks a lot less like typing and more like directing. That is not a parlor trick; it’s an operating system for software work.