Apple has come a long way in its ability to understand images with Visual Intelligence, but for practical purposes it still lags behind Google’s Circle to Search. The problem isn’t about flashy demos; it’s about accuracy, context, speed, and how often the tool just gets out of your way and gives you what you were looking for the first time.

After months of hands-on use and head-to-head testing across shopping, social, and media, Circle to Search is still more reliable and more informative. Apple’s system can recognize an object and track down lookalikes, but it seldom matches Google’s knack for surfacing a precise item along with its backstory, specifications, and next steps.

What Each Tool Does, Summed Up for Everyday Use

Circle to Search is an overlay that works on anything on your screen. Trace around a shoe in a video, tap to select text in an image, translate a menu, or press the music icon to identify a song. It combines Google Lens features (visual search, text recognition, translation) with Search results and knowledge panels, and it works without making you jump between apps.

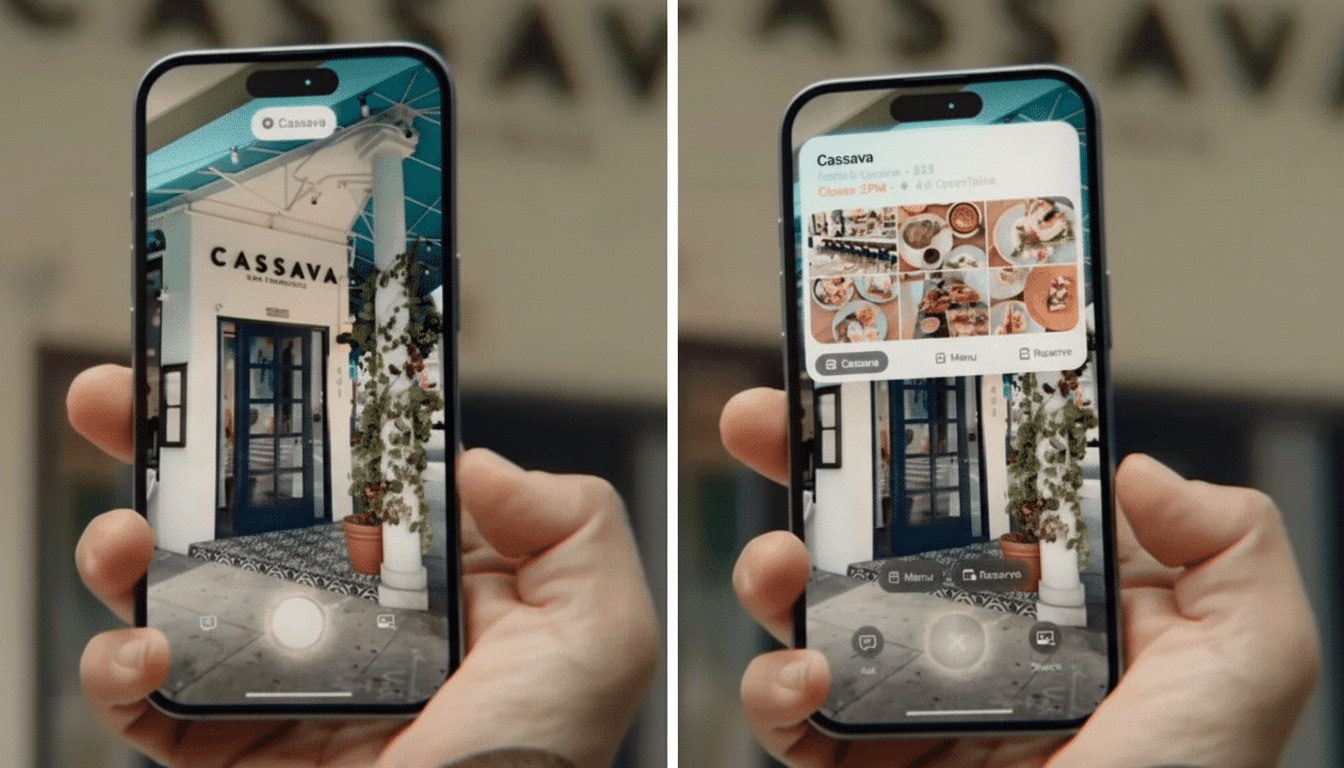

Apple’s Visual Intelligence is built right into iOS and macOS as a part of Apple Intelligence. You can call it up on what’s visible on screen or in Photos to identify objects and find similar images, and you can pass off requests to ChatGPT with consent. It’s neatly integrated and privacy-forward, but many tasks still result in image carousels rather than grounded answers.

Performance in Real Use Across Common Tasks

Shopping test: I circled a trail runner in a review video. Circle to Search nailed it: it identified the exact model, pulled up a product image, and surfaced the midsole compound, stack height, weight, and availability at both specialist and mainstream retailers. Visual Intelligence provided a grid of nearly identical shoes and a couple of storefronts, which was helpful, but I still had to guess which one was the correct match.

Social test: A reel featured a mid-century lounge chair. Google’s overlay identified the specific model and designer, provided historical context, and fetched price ranges from trustworthy retailers as well as auction listings. Apple’s results leaned heavily on similar-looking furniture and Pinterest-style inspiration boards with scant attribution.

Media test: I paused a live performance in a social app. With a tap on the music note icon in the overlay, Circle to Search listened, identified the song, and suggested where to stream the full set and related tracks, all without leaving the app. Visual Intelligence treated the frame as a photograph and offered more stills from the concert, which was no help in finding out what track was playing.

Depth of Answers and Context in Practice

Google’s edge isn’t simply recognition; it’s the density of context. Circle to Search tends to combine exact product IDs, technical specs, sizing notes, and repair guides with shopping links and community reviews. It also extends to math solving, derivations, physics diagrams, and translation, capabilities Google has heavily promoted alongside Lens and Search upgrades.

Apple’s Visual Intelligence is good at “what” but weaker at “what next.” The ChatGPT handoff might solve some of this, but it introduces friction, and many questions that don’t seem inherently complicated (for example, “what model is this camera and what lens fits it?”) still loop back to a gallery of similar images. In other words, it recognizes but doesn’t reason reliably.

Availability and Ecosystem Limits That Matter

Scale matters. Google has said Circle to Search is rolling out widely across recent Android flagships from Google and Samsung, with public targets in the hundreds of millions of devices. Lens itself processes billions of visual searches a month, according to the company, providing Google with a powerful source of feedback to improve its results.

Apple gates Apple Intelligence features, including Visual Intelligence, to devices with A17 Pro or later and M-series chips, and at first to a restricted set of languages. With fewer users surfacing fewer edge cases, that narrower footprint also means slower hardening in the real world. The experience can be fantastic on supported hardware, but the ceiling doesn’t help much if few people can reach it.

Privacy and Reliability Considerations for Users

Apple’s privacy architecture is a feature. Private Cloud Compute and on-device processing reduce data exposure, while the ChatGPT handoff is opt-in and clearly presented. Google blends on-device and cloud processing, and it has published its own safety commitments for Search and Lens. Users should weigh their preferred privacy posture, but in moment-to-moment tasks, reliability still matters most.

The Bottom Line on Visual Search Right Now

Visual Intelligence is improving, but Circle to Search answers more questions, with richer context, in fewer taps. For Apple to catch up, it will need broader device support, deeper reasoning that drives suggestions beyond lookalikes, and quick-access utilities such as translation and music ID built directly into its overlay. Until then, Google’s visual search remains the one to beat.