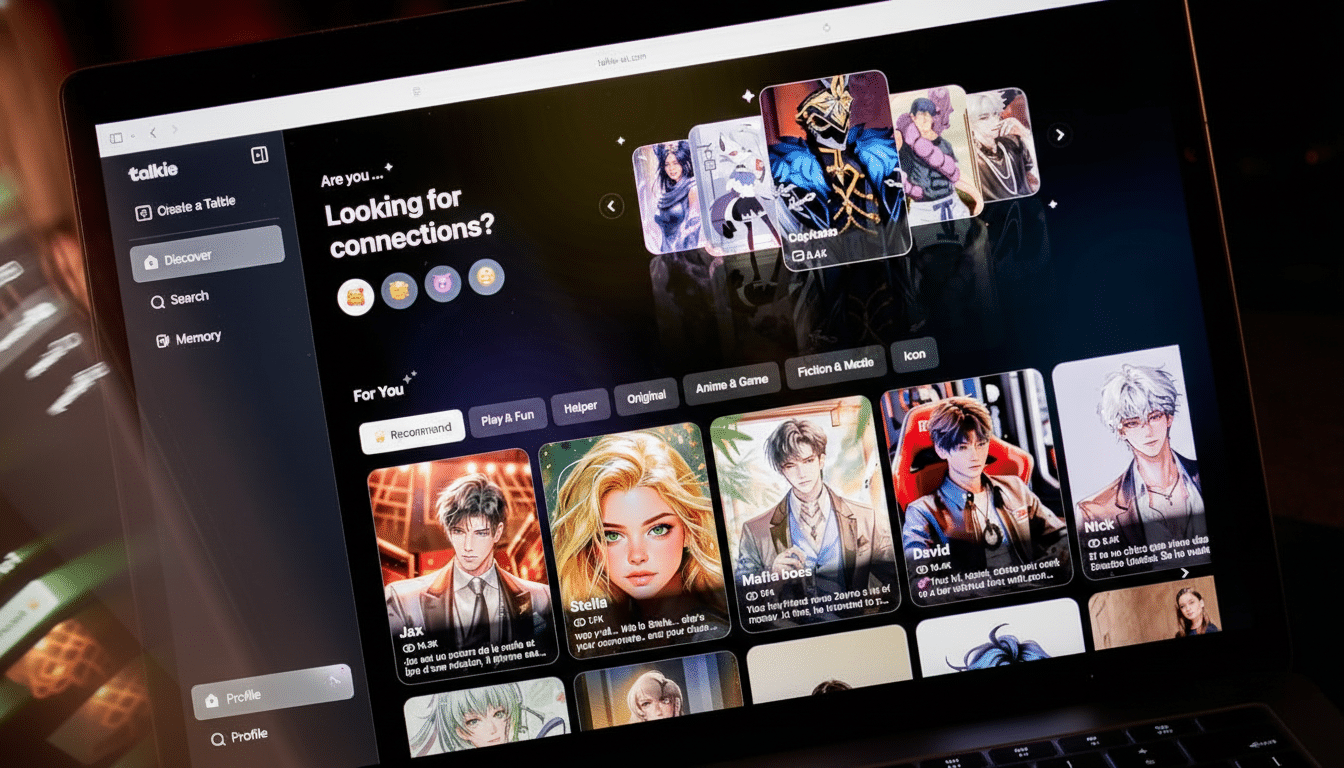

China is moving to limit the effect of AI-powered emotional companions on people’s mood, releasing a draft regulation that identifies psychological risks such as dependence, addiction and sleep disturbances, an area most governments have left to tech companies. The proposal, issued by the Cyberspace Administration of China (CAC) and summarized in a translation reported by CNBC, would require AI services designed to replicate human personalities, such as chatbots, to meet a standard of “emotional safety.”

Unlike broad content moderation regimes, the draft focuses on affective interaction. Providers of chatbots and avatar-style systems would have to screen out harmful conversations, identify vulnerable users, escalate serious cases to trained human moderators and, in the case of minors, notify their guardians.

What the Draft Rules Do to Address AI Emotional Risks

The CAC proposal applies to any “human-like interactive AI service,” whether it works through text, images, audio or video. It requires strong age verification and guardian consent before a user can access companion-style bots, as well as clear reminders throughout the conversation that users are talking with a non-human system. Providers would have to screen for signs of emotional dependence or compulsive use and have limits and interventions in place.

On the content side, systems would not be allowed to produce gambling-related, obscene or violent material, and would not be allowed to engage users in dialogue about suicide, self-harm or other topics seen as potentially harmful to a user’s mental health. Importantly, the draft demands escalation protocols: if a conversation signals a serious risk, the AI must hand off to human moderators, and for underage users, platforms are obligated to report concerns to guardians.

The approach builds on China’s existing stack of AI and online safety measures, which includes the 2023 Interim Measures for generative AI services and older deep synthesis rules. Those frameworks emphasized provenance, security reviews and content controls; the new proposal goes further by regulating the emotional dynamics of human–machine interaction.

Why Emotional Safety Is the New Fault Line

According to Turkle, AI companions are designed to seem responsive, empathetic and always available, all qualities that can create dependence. Researchers who specialize in “affective computing” have long warned that the technology needed to simulate a romantic relationship could also be used to nudge behavior and manage mood more effectively than advertisements or social media. China’s regulators are targeting these affective risks and framing them in the same public-health terms as the “anti-addiction” controls already applied to games and short video.

There are real-world reasons for concern. Italy’s data protection authority temporarily blocked the Replika chatbot in 2023 over concerns about the well-being of children and data protection. In a separate high-profile case in Europe, a man died by suicide following lengthy conversations with an AI chatbot, prompting calls for crisis-protocol standards. Health bodies such as the WHO and national suicide-prevention organizations have called on technology companies to put in place safe escalation and referral to support resources whenever users raise self-harm.

The stakes are high in China’s digital universe. According to the China Internet Network Information Center, China is home to more than 1 billion internet users, and youth connectivity rates are extremely high. Any change in how companion AIs handle risky dialogue, or in how they encourage long-term engagement, is multiplied across millions of sensitive human interactions.

How China’s Plan Fits Into the Global Picture

Regulators around the world are circling similar issues, but few other proposals address emotional impact as squarely. The European Union’s AI Act prohibits manipulative AI practices and places tight boundaries on the use of emotion recognition in workplaces and schools, indicating wariness about inferring emotions from data. The U.K. Online Safety Act pushes platforms to reduce harms from suicide and self-harm content, with child-specific guardrails that include risks arising from recommendations.

In the United States, chatbot disclosure and access to crisis resources are covered by a patchwork of state laws and voluntary frameworks. California has moved forward with requirements for clearer labeling that users are talking to AI and stronger safeguards when conversations turn to self-harm, though enforcement and coverage remain the subject of debate among researchers at institutions such as Stanford and Georgetown’s Center for Security and Emerging Technology.

China’s draft lands at the nexus of these trends but goes a step further, requiring providers to look for emotional dependence itself — not just illegal content — and intervene.

What It Means for AI Vendors Building Companion Bots

That would be a big operational lift for companion-style services on Chinese platforms, ranging from divorce apps to large-model chat apps and avatar influencers. Identifying emotional risk with confidence cannot be achieved by simple keyword filtering; it takes nuanced behavioral signals, careful tuning and significant human-in-the-loop review. Overly strict filters can frustrate legitimate users, while overly lenient systems risk missing critical moments.

Age verification and guardian alert requirements pose privacy and enforcement challenges, especially for cross-border services. Developers could be required to record the context of conversations for safety audits, expand crisis training for moderators and rebuild engagement loops that currently reward time spent and intimacy, commercial features that are sometimes at odds with addiction guardrails.

If adopted, the rules would establish an international benchmark: emotional safety as a tangible, enforceable obligation in AI design. For users, that might manifest in clearer disclosures, more prominent crisis resources and fewer late-night exchanges spinning out into dangerous territory. For the industry, it’s a push to build systems that not only steer clear of toxic outputs but also actively safeguard psychological well-being.