An annoying WordPress plugin bug went from confusing to solved in less than an hour with ChatGPT, with no $200-a-month premium tier, extra tokens, or special add-on needed. Using the $20 plan and a code-focused VS Code workflow, I tracked down a setting that would not persist, shipped a patch, and then diagnosed an entirely separate host-level caching issue that made the site look “unprotected” even after the fix.

For solo maintainers, issues that are hard to reproduce can consume days. This one did not, because the AI acted like a senior pair programmer: it asked for the right artifacts, questioned assumptions, and pointed to a specific diagnosis path.

How the debug session unfolded in less than an hour

The user’s bug report was straightforward: toggling a privacy control didn’t actually stick. Checking the usual suspects (theme compatibility modes and local caching) didn’t help. I pulled the code into VS Code and asked ChatGPT to reason about why the setting failed to save, rather than guess at fixes.

It asked me to export the plugin’s settings before suggesting any changes. That request mattered: it let me inspect what the plugin had actually persisted, as opposed to the schema I carried in my head. I saved the JSON, fed it in, and the AI pointed to a state-management pattern that blocked saving whenever a defensive feature was active.
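To make the “persisted reality versus mental model” comparison concrete, here is a minimal Python sketch that diffs the values the admin UI should have saved against a settings export. The real plugin is PHP and its export format isn’t shown here, so the JSON shape and the option names `privacy_mode` and `defensive_mode` are hypothetical stand-ins.

```python
import json

def diff_settings(expected: dict, exported_json: str) -> dict:
    """Compare what the admin UI should have saved with what the
    settings export says was actually persisted.

    Returns {key: (expected_value, persisted_value)} for every mismatch;
    a key that was never stored shows up with a persisted value of None.
    """
    persisted = json.loads(exported_json)
    mismatches = {}
    for key, want in expected.items():
        got = persisted.get(key)
        if got != want:
            mismatches[key] = (want, got)
    return mismatches

# Hypothetical export: the privacy toggle was switched on in the UI,
# but the stored value never changed while defensive_mode was active.
export = '{"privacy_mode": false, "defensive_mode": true}'
print(diff_settings({"privacy_mode": True, "defensive_mode": True}, export))
# → {'privacy_mode': (True, False)}
```

A mismatch that only appears when the defensive option is on is exactly the kind of signal that points toward a gated save.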

The proposed patch reworked the save routine so it no longer bailed out on a conflict, and added a small migration to normalize previously stored values. I reproduced the reporter’s setup, verified that the setting now persisted with the patch in place, and checked neighboring features for side effects. Phase one done, fast.
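The shape of that fix can be sketched in a few lines. This is a language-neutral illustration in Python, not the plugin’s actual PHP: the option names, the legacy string values, and the simple “submitted keys win” merge are all assumptions made for the example.

```python
TOGGLE_KEYS = ("privacy_mode", "defensive_mode")  # hypothetical option names

def to_bool(value) -> bool:
    """Normalize legacy toggle values such as "on", "1" or "yes" to booleans."""
    if isinstance(value, str):
        return value.strip().lower() in {"1", "on", "yes", "true"}
    return bool(value)

def migrate(settings: dict) -> dict:
    """One-time migration: coerce every known toggle to a real boolean."""
    out = dict(settings)
    for key in TOGGLE_KEYS:
        if key in out:
            out[key] = to_bool(out[key])
    return out

def save_settings_buggy(stored: dict, submitted: dict) -> dict:
    """The failure mode the diagnosis pointed to: an active defensive
    feature gates the whole save, so the privacy toggle is silently dropped."""
    if to_bool(stored.get("defensive_mode", False)):
        return stored
    return {**stored, **submitted}

def save_settings(stored: dict, submitted: dict) -> dict:
    """Patched save: migrate old values, then apply every submitted key
    instead of bailing out when a defensive feature is on."""
    return {**migrate(stored), **migrate(submitted)}

stored = {"privacy_mode": "off", "defensive_mode": "on"}  # legacy strings
submitted = {"privacy_mode": "on"}
print(save_settings_buggy(stored, submitted))  # → {'privacy_mode': 'off', 'defensive_mode': 'on'}
print(save_settings(stored, submitted))        # → {'privacy_mode': True, 'defensive_mode': True}
```

Running the migration on both the stored and the submitted values means old installs and new form posts end up in the same normalized shape, which is what keeps neighboring features from tripping over mixed types.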

When the bug was out of the code and in the cache

But the site still appeared exposed: some pages were shielded and others weren’t. With plugin-level caching disabled, that inconsistency was suspicious, and it smelled like an upstream cache. ChatGPT suggested a low-friction test: append a throwaway query parameter to the affected URLs (e.g., ?ai_probe=1) to force a fresh fetch instead of a cached copy.
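Building the probe URLs by hand is easy to get wrong when a page already has a query string or a fragment. This small Python helper, a sketch using only the standard library’s `urllib.parse`, appends the throwaway parameter safely; the default name `ai_probe` simply mirrors the example above.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

def with_probe(url: str, param: str = "ai_probe", value: str = "1") -> str:
    """Return url with a throwaway query parameter appended, keeping any
    existing query string and fragment intact."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append((param, value))
    return urlunsplit(parts._replace(query=urlencode(query)))

print(with_probe("https://example.com/private-page/"))
# → https://example.com/private-page/?ai_probe=1
print(with_probe("https://example.com/?p=42#top"))
# → https://example.com/?p=42&ai_probe=1#top
```

Each original URL and its probe twin can then be opened in a logged-out browser window or fetched with any HTTP client and compared side by side.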

That flipped the result. URLs with the parameter were protected and the originals weren’t, a classic sign of host or CDN caching. The plugin never saw those requests, so it had no chance to apply privacy. The solution wasn’t code; it was policy. The AI drafted a brief support ticket for the hosting provider that included the proof of diagnosis, a request to purge or bypass caching for authenticated routes, and the headers the cache should honor.
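The response headers usually back up that conclusion. The Python sketch below is a heuristic, not a definitive test: `Age` is a standard HTTP caching header, while `X-Cache` and `CF-Cache-Status` are common vendor headers, and your host may use different names entirely. The function names are hypothetical.

```python
def looks_cached(headers: dict) -> bool:
    """Heuristic: did an upstream cache serve this response?

    Age is a standard HTTP header; X-Cache and CF-Cache-Status are
    common vendor headers. Your host may use different names.
    """
    h = {k.lower(): str(v).lower() for k, v in headers.items()}
    if "hit" in h.get("x-cache", ""):
        return True
    if h.get("cf-cache-status") == "hit":
        return True
    age = h.get("age", "")
    return age.isdigit() and int(age) > 0

def diagnose(original: dict, probe: dict,
             original_protected: bool, probe_protected: bool) -> str:
    """Classify the cache-busting test from headers plus the observed outcome."""
    if original_protected == probe_protected:
        return "no discrepancy: the probe changed nothing"
    if probe_protected and looks_cached(original) and not looks_cached(probe):
        return "upstream cache: original URL served a stale, unprotected copy"
    return "discrepancy without clear cache headers: inspect manually"

print(diagnose({"X-Cache": "HIT", "Age": "512"}, {"X-Cache": "MISS"}, False, True))
# → upstream cache: original URL served a stale, unprotected copy
```

Pairing the observed outcome with the headers matters: headers alone only say a cache was involved, while the protected/unprotected flip shows that the cache is what bypassed the plugin.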

Once the host disabled that cache layer for the affected routes, protection worked. The user got her private site back, protected by a plugin I built and give away for free, and I saved my sanity going forward.

The Cost Question, and What You Truly Need

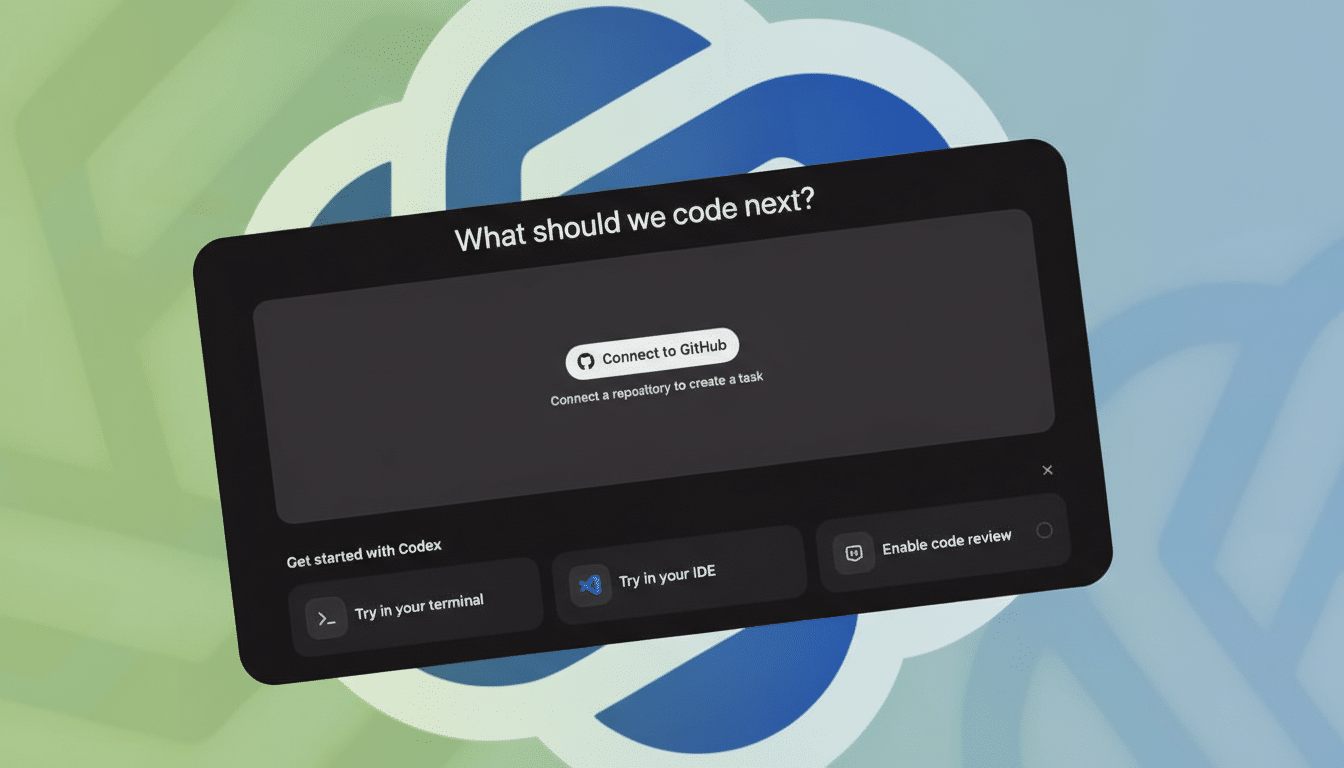

This entire loop ran on ChatGPT’s basic $20 plan: no $200 subscription, no enterprise seat. For short bursts of work (bug hunts, small features, a technical write-up), rate limits and latency were a non-issue. If you are a larger shop or shipping team, the calculation may shift toward the pricier tiers.

The productivity claims aren’t just vibes. GitHub’s research on AI pair programming found that developers completed a task 55% faster in a controlled experiment, and the Stack Overflow 2023 Developer Survey reports that 70% of developers are using or planning to use AI tools in their development process. JetBrains’ Developer Ecosystem reports also show growing use of AI assistance directly in the IDE, a sign that it is becoming standard practice rather than a novelty.

A Reusable Playbook For Plugin Debugging With AI

Gather real signals first. Ask users to reproduce the problem, give exact steps, describe their environment, and provide a settings export. If that’s too technical for them, give them pre-written instructions they can follow or forward.

Give the model context. Share the config blob and detailed symptoms. Ask it to find anything that could block persistence or bypass a guard, and to propose a minimal patch with comments and a safe migration.

Reproduce locally. Mirror the user’s stack as closely as you can, test before and after the patch, and add a regression test if you have a harness. Keep the feedback loop tight: brief prompts, tangible results, iterate.

Probe the network. When behavior differs across pages, try cache-busting parameters and compare the responses. Have your AI write a diagnosis note for the host that covers the test, the expected result, what was actually observed, and any cache directives or paths that need exceptions.
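Whether you draft the note yourself or ask the AI to fill it in, a fixed structure keeps it short and checkable. This Python sketch renders one from a probe result; the `ProbeResult` fields, the example URLs, and the `/members/*` path are hypothetical placeholders, not anything a particular host requires.

```python
from dataclasses import dataclass, field

@dataclass
class ProbeResult:
    url: str
    probe_url: str
    expected: str
    original_outcome: str
    probe_outcome: str
    cache_headers: dict = field(default_factory=dict)
    bypass_paths: list = field(default_factory=list)

def diagnosis_note(r: ProbeResult) -> str:
    """Render a plain-text note: test, expectation, observations, requests."""
    lines = [
        "Test: requested the same URL with and without a cache-busting query parameter.",
        f"  Original: {r.url} -> {r.original_outcome}",
        f"  Probe:    {r.probe_url} -> {r.probe_outcome}",
        f"Expected: {r.expected}",
    ]
    if r.cache_headers:
        lines.append("Cache-related response headers on the original URL:")
        lines += [f"  {k}: {v}" for k, v in r.cache_headers.items()]
    if r.bypass_paths:
        lines.append("Please purge these paths and exclude them from caching:")
        lines += [f"  {p}" for p in r.bypass_paths]
    return "\n".join(lines)

result = ProbeResult(
    url="https://example.com/members/",
    probe_url="https://example.com/members/?ai_probe=1",
    expected="logged-out visitors are redirected to the login page",
    original_outcome="page content visible",
    probe_outcome="redirected to login",
    cache_headers={"X-Cache": "HIT", "Age": "512"},
    bypass_paths=["/members/*"],
)
print(diagnosis_note(result))
```

Leading with the side-by-side test gives support staff something they can reproduce in a minute, which tends to get a cache exception approved faster than a general complaint.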

Why This Is Important to Indie Solo Developers

Indie maintainers do not get a rotating on-call team. A capable AI assistant fills some of that gap: part debugger, part code reviewer, and, when necessary, part technical writer. It shortens the path from “I think I know what’s wrong” to “here’s the patch and the hosting ticket.”

The takeaway is refreshingly simple. You don’t have to spend $200 to get real value. With guided prompts, a reproducible setup, and a willingness to test what the AI suggests rather than trust it blindly, you can turn an ugly support ticket into a quick win and keep shipping without breaking your budget.