OpenAI has restored what many users consider a core privacy feature of ChatGPT: the ability to permanently delete past conversations. The change follows the lifting of a court-ordered preservation mandate tied to ongoing copyright litigation, which lets the platform’s standard deletion behavior resume for most customers. There is a major caveat, however: logs preserved while the order was in effect remain available to the parties in the case.

What changed during the court preservation order period

For a period, OpenAI was required to retain chats that users had chosen to delete. The requirement stemmed from a preservation order entered in a federal lawsuit alleging that the company’s large language models reproduced copyrighted news articles. The order, issued by the United States District Court for the Southern District of New York, was meant to ensure that potential evidence was not lost while the parties argued over how much material should be turned over in a sprawling discovery dispute that has dragged on since August.

A new joint order entered by US Magistrate Judge Ona Wang has now released OpenAI from that preservation obligation. With the constraint lifted, deleting a conversation from your account once again allows OpenAI to remove the associated logs from its systems under its routine practices. Industry watchers were the first to spot the change, and privacy-minded users quickly welcomed it.

The upshot is simple: users regain control over how long their ChatGPT logs are stored. For anyone who uses AI tools to brainstorm, research, or draft sensitive material, the ability not just to erase that content but to be confident it is gone is more than a convenience; it can be an important privacy safeguard.

Who was affected, and who was excluded from retention

The preservation order was broad. It applied to ChatGPT Free, Plus, Pro, and Team accounts and also covered a large share of API users. Enterprise and Edu customers were exempt, as were API customers with Zero Data Retention contracts. Those exemptions reflect OpenAI’s tiered data practices, in which enterprise offerings typically come with stricter retention controls by default.

Scale is part of the story. OpenAI has previously claimed more than 100 million weekly active users for ChatGPT, and the developer ecosystem around the API ranges from startups to large software vendors. A blanket preservation hold across that many users complicates operations and raises concerns about how long sensitive prompts remain accessible. Lifting the hold restores the privacy baseline many users assumed they had all along.

What happens to logs already preserved for the litigation

Lifting the order is not retroactive. Conversations captured under the preservation order remain available as part of the litigation. In other words, the delete button works as expected from now on, but anything already preserved is not removed from the litigation record.

That nuance matters for risk planning. Legal experts often warn clients that they cannot count on attorney-client privilege or similar protections for what they share with consumer AI tools. OpenAI’s leadership has acknowledged a version of that reality, warning publicly that user chats may become discoverable in litigation. The safest rule still applies: don’t enter anything you could not afford to see made public.

How to permanently delete chats in ChatGPT now

You can delete conversations one at a time or clear your history more broadly in Settings. If you use the API, check your organization’s policies and controls.

- Delete a single chat: open the conversation, click the menu near its title, and select Delete.

- For a broader clean-up: go to Settings > Data Controls, then manage or clear your conversation history.

- For API usage: verify your organization’s data retention policy and apply retention controls as needed (for example, Zero Data Retention where your contract includes it, or equivalent logging filters).

Keep in mind that deleting chats is separate from training preferences. The Chat History & Training control governs whether your content may be used to train models, while deletion determines how long a chat persists in your account and on OpenAI’s systems beyond initial operational requirements. Enterprise and education tiers generally provide stronger guarantees and administrative controls; consult your contract or documentation for details.

The larger privacy context for AI assistants

The episode highlights a broader tension in AI governance: the collision between legal discovery, product telemetry, and users’ expectations of privacy. Regulators such as the European Data Protection Board (EDPB) and the UK Information Commissioner’s Office have stressed the importance of transparency and purpose limitation for AI data. In practice, that means platforms must clearly explain retention windows, training defaults, and what deletion actually does.

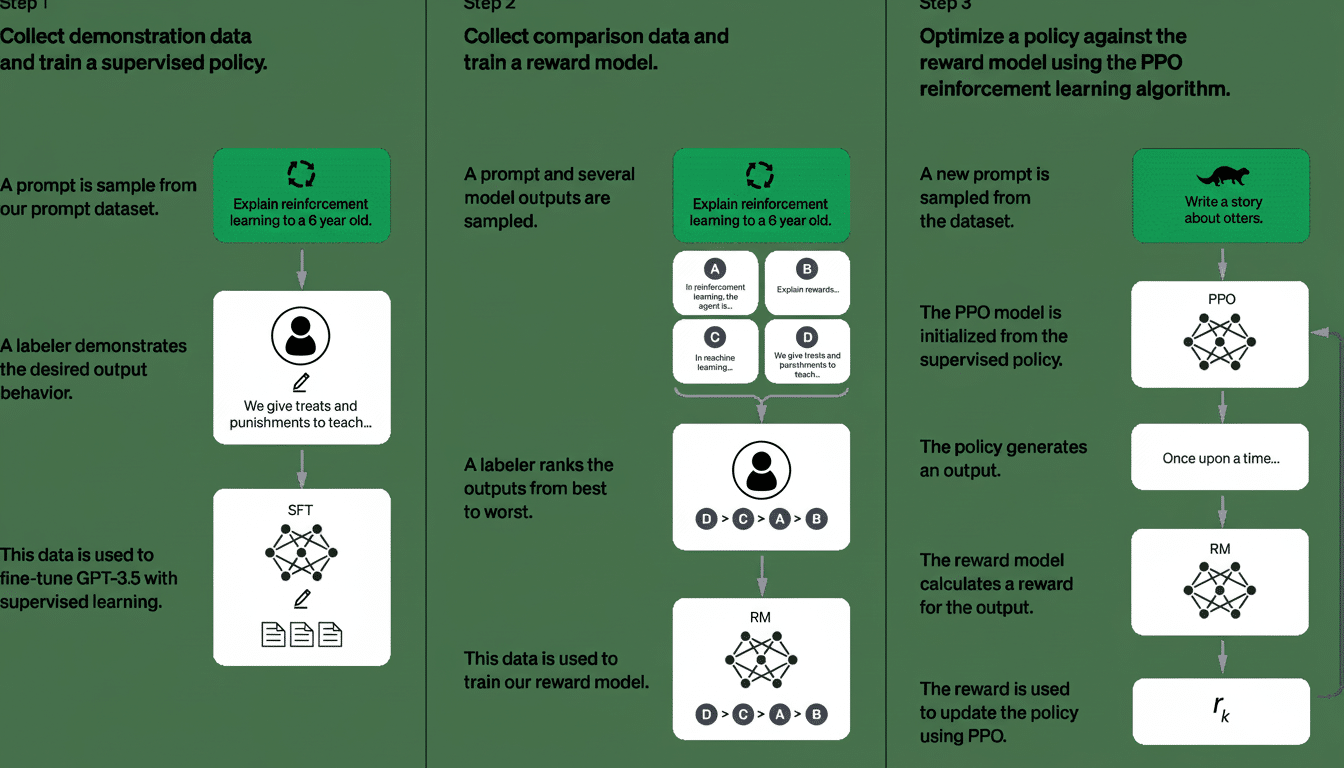

It also reflects a technical reality: once data has been used to train or fine-tune a model, its influence is hard to remove. Providers often address this by excluding some data streams from training by default or by honoring user opt-outs. OpenAI’s training controls, together with restored deletion, move in that direction, but the most cautious rule for now is to treat anything you paste into a chatbot as something others could read in edge cases, including court cases.

For users, the takeaway is cautious optimism. Permanent deletion is back, which is good news for privacy and trust. Pair it with disciplined data hygiene and a clear understanding of how your plan handles retention and training, and you will be a step closer to the privacy posture you assumed you had when you first hit Send.