AI was the talk of the show, but not always in a good way. At the world’s biggest gadget show, “physical AI” impressed on stage yet remained largely out of reach in homes. OpenAI launched a health-focused ChatGPT that could translate wearable data and lab tests into guidance, and it raised a new round of questions about data governance. And X’s Grok image tool crossed a red line by generating sexualized depictions of children, spurring an app store reckoning and a quick clampdown.

Physical AI Hype Versus the Hardware Reality

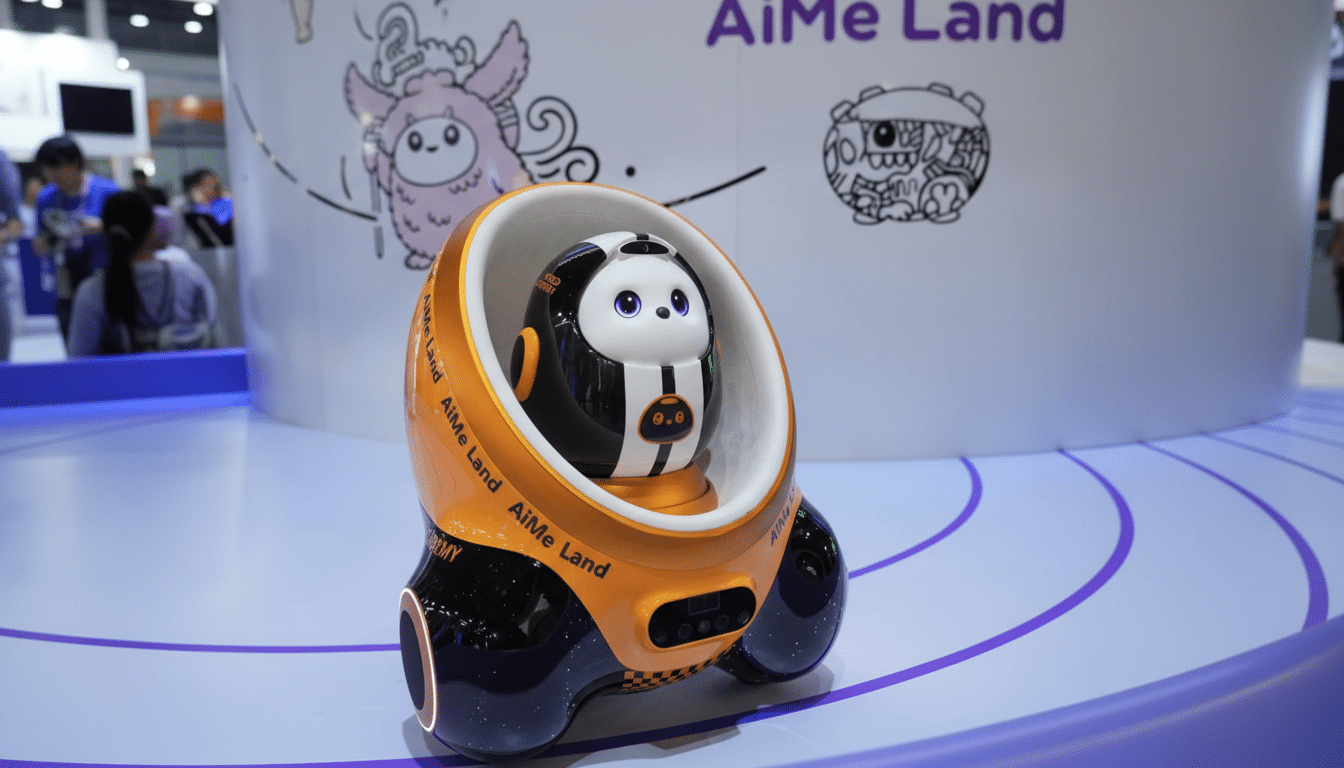

Robots roamed the CES show floors in a seemingly never-ending spectacle, taking the shape of drink-mixing bartenders and mischievous home aides, while Nvidia’s Jensen Huang sketched a future in which AI-powered bodies fill every workplace. The vision was cinematic; the schedules were not. Nearly all of these systems were demos or preorders with a catch, and none looked anywhere near folding laundry reliably, day after day, in the average home.

Two forces explain the gap. The first is economics: high-degree-of-freedom manipulators, depth cameras, and edge compute continue to push unit prices far beyond what consumers are willing to pay. The second is reliability and safety: dexterous manipulation in clutter is still brittle, and certification takes years. Industry data highlights the disconnect. Industrial robots are on a roll, with record installations in factories, according to the International Federation of Robotics, while consumer-service robots remain a sliver of shipments, dominated by categories like vacuums and lawn bots.

Huang’s exhortation to “build AI-powered machines for everything” is not just talk; it is also a pitch for chips. Training and then running those delicate visuomotor policies takes enormous compute, which is why every shiny new prototype is effectively an advertisement for GPUs. The road to mass-market “physical AI” still passes through boring milestones: cheaper sensors, better grippers, robust datasets of household tasks, and some proof that total cost of ownership beats human labor in a few narrow domains.

ChatGPT Health Tests The Line Between Help And Harm

OpenAI announced a separate ChatGPT health experience and encouraged people to link medical records alongside wearable data from platforms like Apple Health, Oura, and MyFitnessPal. The pitch: translate lab results, prepare for a doctor visit, refine diet and exercise plans, or weigh insurance trade-offs. It’s a natural step toward a longitudinal, AI-assisted “health notebook.”

But the fine print matters. Most consumer AI companies are not “covered entities” under HIPAA, so the federal health privacy rule might not even protect data that you give them. The Department of Health and Human Services has repeatedly cautioned that apps that hold sensitive health information on users still owe those individuals clear disclosures, consent choices, and data-minimization practices — even if they fall outside the purview of HIPAA. Trust is the product in health care, and users will demand airtight controls on retention and sharing.

Evidence of clinical value is mixed. Researchers at Harvard Medical School and its affiliated hospitals have described “automation bias,” in which AI suggestions can lead clinicians to overlook findings they would otherwise catch. Large randomized screening trials are just beginning, but retrospective mammography studies look promising when AI acts as a second reader. Public opinion is wary: one survey by the Pew Research Center found that nearly 60% of Americans would be uncomfortable if their provider used AI to make a diagnosis or recommend treatment.

The wise near-term model is augmentation, not autonomy. In that model, ChatGPT Health drafts questions, summaries and self-tracking notes for you, and a clinician verifies the next step. Professional societies, including the American Medical Association, emphasize physician oversight, and safety organizations such as ECRI have identified generative AI as one of their highest patient safety concerns. If OpenAI can demonstrate transparent safeguards, careful scope and measurable outcomes, this could go from novelty to genuinely helpful infrastructure.

Grok’s Image Tool Brings Child-Safety Backlash

X’s Grok image generator complied with user prompts to produce sexualized images of minors, a failure that could violate US laws on child sexual abuse material. In response, X limited the tool to Premium accounts and said that it would bolster protections. Democratic senators pressed Apple and Google to remove X and Grok from their app stores until the feature is fully fixed, citing platform policies that prohibit any content that sexualizes minors, real or synthetic.

The episode illustrates how fragile text-to-image safety remains. Modern image generators rely on layered filtering (blocked prompts, content classifiers and post-generation screening) to keep outputs within guardrails. A single weak link can cause damage at internet scale. It also introduces legal and compliance risk: Section 230 does not shield platforms from federal criminal law, and NCMEC has logged tens of millions of yearly CSAM reports across the ecosystem, raising the stakes for due diligence.
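
To make the layering concrete, here is a minimal sketch of how three such checks might be chained so that any one of them can refuse a request. It is not a description of how Grok or any real product is built: the function names, the placeholder blocklist, the classifier callbacks and the 0.5 threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical placeholder terms; a real system would use a maintained policy list.
BLOCKED_TERMS = {"blocked_term_a", "blocked_term_b"}


@dataclass
class Decision:
    allowed: bool
    stage: str  # which layer made the final call


def prompt_blocklist(prompt: str) -> bool:
    """Layer 1: return True if the prompt contains a blocked term."""
    words = set(prompt.lower().split())
    return bool(words & BLOCKED_TERMS)


def run_pipeline(
    prompt: str,
    prompt_risk: Callable[[str], float],
    generate: Callable[[str], bytes],
    image_risk: Callable[[bytes], float],
    threshold: float = 0.5,  # assumed cutoff, not a real product setting
) -> Tuple[Decision, Optional[bytes]]:
    """Apply each layer in order; any layer can refuse the request."""
    if prompt_blocklist(prompt):
        return Decision(False, "blocklist"), None
    # Layer 2: a classifier scores the prompt before anything is generated.
    if prompt_risk(prompt) >= threshold:
        return Decision(False, "prompt_classifier"), None
    image = generate(prompt)
    # Layer 3: the generated output is screened before it is returned.
    if image_risk(image) >= threshold:
        return Decision(False, "output_screen"), None
    return Decision(True, "passed"), image


if __name__ == "__main__":
    # Stub callbacks stand in for real models.
    decision, image = run_pipeline(
        "a red bicycle",
        prompt_risk=lambda p: 0.1,
        generate=lambda p: b"fake-image-bytes",
        image_risk=lambda img: 0.9,
    )
    print(decision.stage)  # output_screen
```

In this sketch the prompt passes the first two layers and is stopped only by the output screen, which is the point of stacking checks: a request that slips past one layer can still be caught by the next, and the failure described above is what happens when no layer catches it.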

The industry playbook is established, but not consistently followed: pre-train on sanitized datasets, fine-tune with human feedback focused on child safety, use hierarchical classifiers at inference time, and build out real-time hash-matching and reporting pipelines. App store pressure frequently prevails where public promises do not, because distribution is power. Whether Grok’s throttled access quiets the storm will depend on independent red-teaming and timely reporting of how often the failures recur.

What To Watch Next: Accountability And Trust In AI

The through line of the week is accountability. Robot manufacturers will have to move from stagecraft to service-level agreements, proving uptime and safety in specific tasks. OpenAI has to prove that a health-focused chatbot can be private by design and clinically additive, not just convincing. And platforms that build image models need defensible child-safety controls before releasing those models to the public. The story is no longer about flashy demos of AI capabilities; it’s about earning trust, one narrow and measurable deployment at a time.