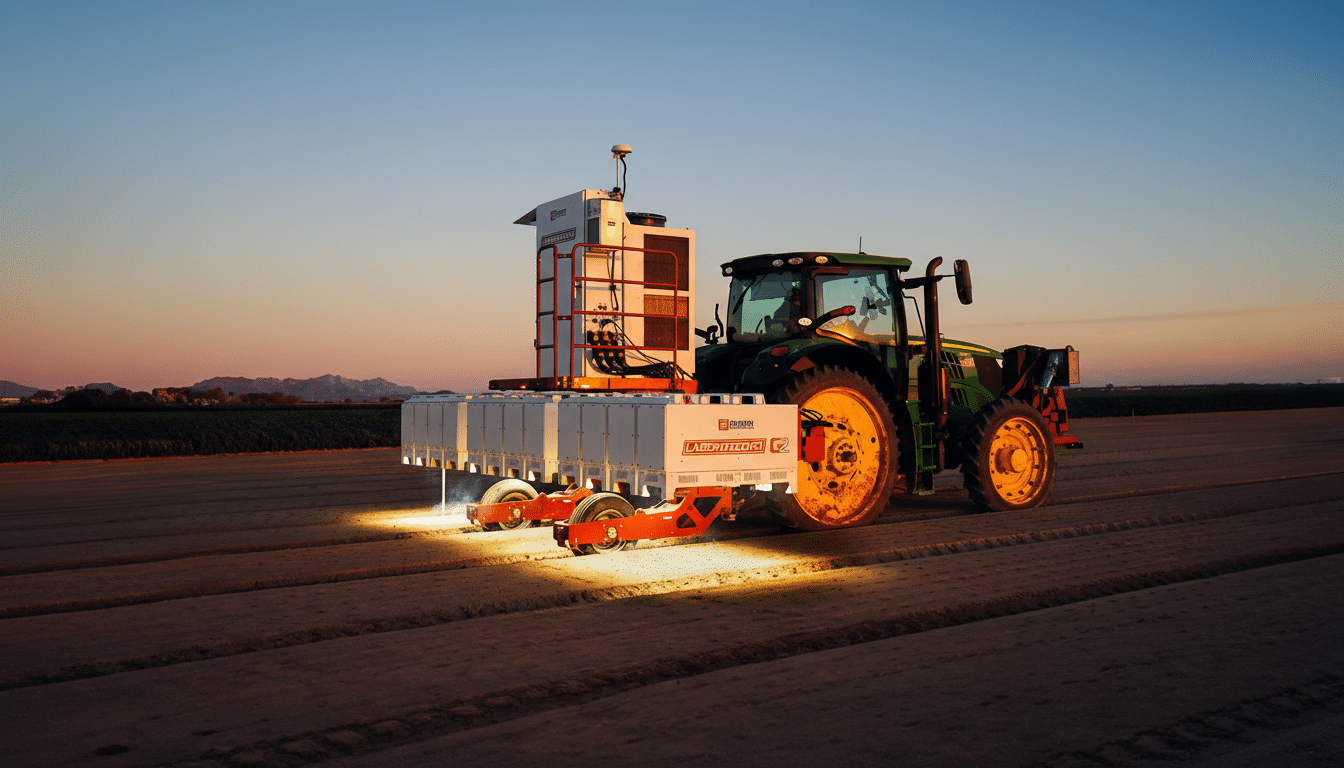

Carbon Robotics has introduced a new foundation-style computer vision model that detects and identifies plants in real time, advancing the state of “green-on-green” perception in agriculture: telling crops and weeds apart when both are green plants growing side by side. The Large Plant Model, or LPM, underpins the company’s LaserWeeder system and lets it distinguish crops from weeds immediately, without the retraining cycles that used to take a day or more.

The launch marks a shift from narrow, field-by-field models to a single generalized plant model trained on farm imagery from many regions, crops, and conditions. It is designed to help farmers decide faster and more precisely what to remove and what to protect, a capability with direct implications for yield, input costs, and sustainability.

How the Large Plant Model Works in Real-World Fields

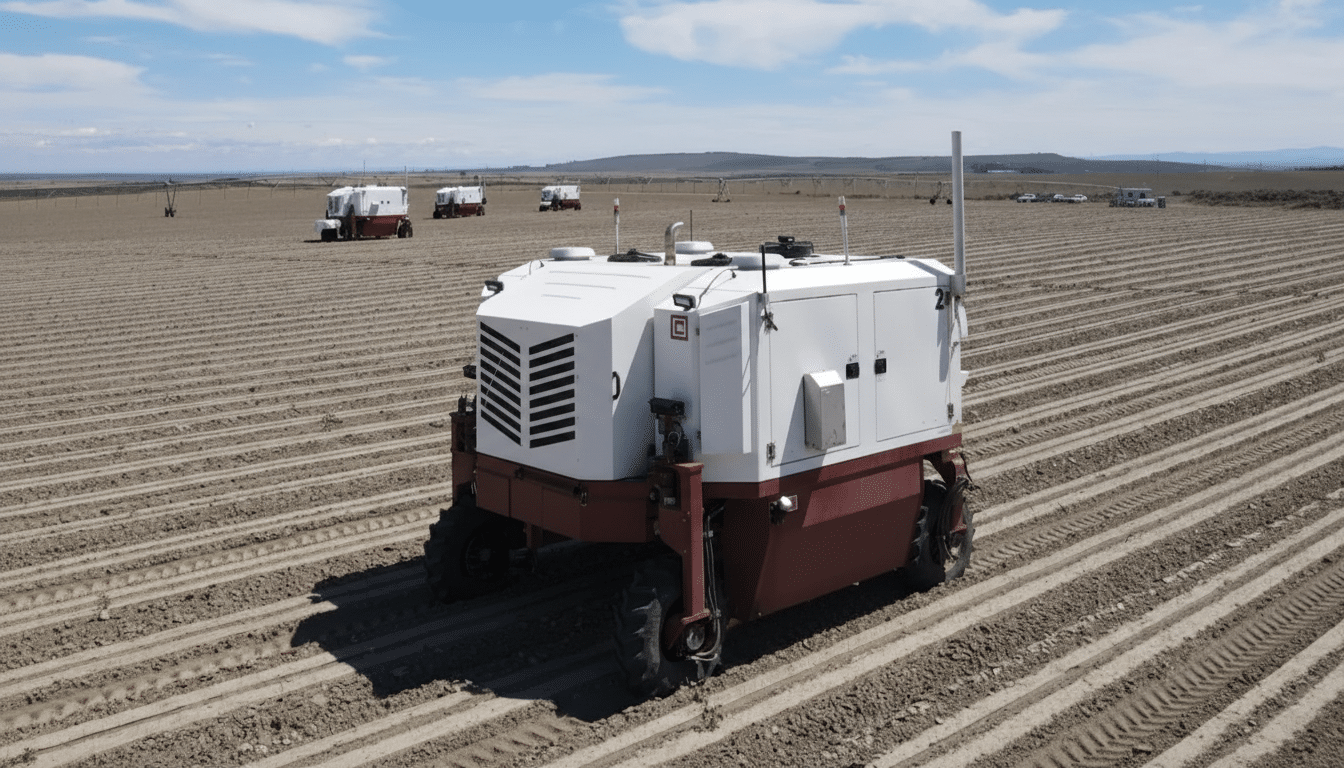

LPM is trained on more than 150 million labeled plant images and data points captured by Carbon Robotics machines operating on over 100 farms across 15 countries. That diverse corpus spans lighting conditions, soil types, growth stages, and regional species variability, enabling the model to generalize to plants it has never seen before.

Previously, teaching the system to target a new weed could take around 24 hours of labeling and retraining. With LPM, growers select examples from the robot’s onboard image stream and immediately instruct the system to kill or protect them, so the machine recognizes the new plant on the spot with no retraining step. The company is rolling out LPM to existing robots as a software update through its Carbon AI platform.
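The article does not explain how a handful of selected images becomes a new target. One common technique for this kind of on-the-spot recognition is nearest-prototype classification: a frozen model maps each plant image to an embedding vector, the operator’s examples for each label are averaged into a prototype, and new plants are assigned to the most similar prototype. The sketch below assumes LPM exposes embeddings of this kind; the function names, labels, and toy 3-D vectors are hypothetical and stand in for real model output.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def build_prototypes(examples: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Average each label's operator-selected embeddings into one unit-length prototype."""
    prototypes: Dict[str, List[float]] = {}
    for label, vectors in examples.items():
        units = [normalize(v) for v in vectors]
        dim = len(units[0])
        mean = [sum(u[i] for u in units) / len(units) for i in range(dim)]
        prototypes[label] = normalize(mean)
    return prototypes


def classify(embedding: Vector, prototypes: Dict[str, List[float]]) -> Tuple[str, float]:
    """Return the label of the most similar prototype and its cosine similarity."""
    query = normalize(embedding)
    best_label, best_sim = "", -2.0  # cosine similarity is always >= -1
    for label, proto in prototypes.items():
        sim = sum(a * b for a, b in zip(query, proto))
        if sim > best_sim:
            best_label, best_sim = label, sim
    return best_label, best_sim


# Toy 3-D embeddings standing in for the output of a frozen plant model (hypothetical).
selected = {
    "lettuce": [[0.9, 0.1, 0.0], [1.0, 0.0, 0.1]],   # crop examples tapped by the operator
    "purslane": [[0.0, 1.0, 0.2], [0.1, 0.9, 0.0]],  # examples of a newly seen weed
}
prototypes = build_prototypes(selected)
label, similarity = classify([0.05, 0.95, 0.1], prototypes)
print(label, round(similarity, 3))  # prints "purslane" with a similarity close to 1
```

Because only the small prototype table changes, adding a weed is a data update rather than a training run. A production system would likely need more than this sketch shows, such as several prototypes per species and a threshold for plants that match nothing well.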

Practically, that means the LaserWeeder can maintain crop safety while adapting to shifting weed pressures within a single pass. The model’s understanding of plant morphology and structure, not just texture or color, is key to avoiding crop strikes at speed.

Why It Matters for Weed Control and Sustainability

Weed management is one of farming’s most persistent cost centers, and herbicide resistance continues to spread. The International Herbicide-Resistant Weed Database and the Weed Science Society of America have documented hundreds of resistance cases across major crops, eroding the efficacy of traditional chemistries and pushing costs higher.

Vision-guided weeding offers a non-chemical alternative that targets individual plants. Whereas other systems focus on selectively spraying herbicides, Carbon’s approach uses high-powered lasers to thermally kill weeds, aiming to reduce chemical use and mitigate drift and residue concerns. For growers seeking organic or regenerative practices, this can be a straightforward fit.

Labor efficiency is another driver. Crews for hand weeding and mechanical cultivation are increasingly hard to hire during peak seasons. A perception system that reliably differentiates species in real time can keep machines moving faster, with fewer stoppages for reprogramming or manual validation.

Data Engine and Deployment Across Global Farms

LPM’s performance rests on a data flywheel: every field pass adds labeled examples from new geographies, growth stages, and weed pressures, which are then folded into subsequent model training. The company says the dataset has now reached a scale where the model can infer species, relatedness, and structural traits even for plants it has not explicitly seen.

The robots run inference at the edge, processing high-resolution imagery from multiple cameras while traversing rows. Farmers interact through a user interface that surfaces fresh plant images; selecting what to target or preserve updates the robot’s behavior immediately, without a cloud retrain cycle.
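The article does not say how operator selections are applied on the machine. One simple way to get the “no cloud retrain” behavior it describes is to keep kill/protect decisions in a small lookup table that is consulted after recognition, so a tap in the interface edits data rather than model weights. The sketch below assumes that design; `TargetPolicy`, its methods, the plant labels, and the 0.8 confidence threshold are hypothetical, and the protect-by-default rule is an assumed crop-safety choice, not documented Carbon behavior.

```python
from dataclasses import dataclass, field
from typing import Dict

VALID_ACTIONS = ("kill", "protect")


@dataclass
class TargetPolicy:
    """Hypothetical runtime policy mapping recognized plant labels to laser actions.

    Editing the table changes behavior on the very next detection; no model
    weights are touched, so nothing has to be retrained or uploaded.
    """

    actions: Dict[str, str] = field(default_factory=dict)
    min_confidence: float = 0.8  # assumed threshold, not a published Carbon value

    def set_action(self, label: str, action: str) -> None:
        """Record an operator's choice, e.g. from tapping a plant image in the UI."""
        if action not in VALID_ACTIONS:
            raise ValueError(f"unknown action: {action!r}")
        self.actions[label] = action

    def decide(self, label: str, confidence: float) -> str:
        """Fail safe: low-confidence or not-yet-labeled plants are never fired on."""
        if confidence < self.min_confidence:
            return "protect"
        return self.actions.get(label, "protect")


policy = TargetPolicy()
policy.set_action("lettuce", "protect")
policy.set_action("purslane", "kill")
print(policy.decide("purslane", 0.93))  # kill
print(policy.decide("purslane", 0.55))  # protect (below the confidence threshold)
print(policy.decide("pigweed", 0.97))   # protect (no instruction yet)
policy.set_action("pigweed", "kill")    # operator marks a newly seen weed
print(policy.decide("pigweed", 0.97))   # kill
```

Defaulting to “protect” trades some missed weeds for fewer crop strikes, which matches the article’s emphasis on crop safety at speed; a real system would tune that trade-off per crop.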

Competitive Landscape and Benchmarks in Ag Robotics

Computer vision in row-crop management is not new, but generalizing across species and seasons remains the hard part. John Deere’s Blue River Technology popularized green-on-green selective spraying, with Deere reporting up to 66% reductions in certain herbicide passes for its See & Spray systems. Startups like FarmWise and Bilberry have also pursued mechanical or targeted spraying approaches, respectively.

Carbon’s differentiation rests on laser-based weed termination and a plant model trained as a broad, species-aware foundation. If LPM consistently delivers zero-shot accuracy across fields and months, the operational benefit is significant: fewer model rebuilds, less downtime, and a faster path from scouting to action.

Backers and Roadmap for Carbon Robotics’ LPM

Carbon Robotics has raised more than $185 million from investors including NVentures (Nvidia’s venture capital arm), Bond, and Anthos Capital. Founder and CEO Paul Mikesell, who previously worked on large-scale perception systems at Uber and on Meta’s Oculus team, began developing LPM soon after the first LaserWeeders shipped.

Near term, the company plans to keep tuning the model as the fleet ingests more data. Longer term, a robust plant model could extend beyond weeding into tasks like early stand counts, inter-row thinning, and disease scouting, provided the perception layer maintains species-level precision without extensive retraining.

The central question now is durability: can LPM sustain high accuracy as weed populations shift, crops mature, and lighting and soil conditions change? If the answer holds across seasons and regions, growers may finally get a scalable, chemical-free precision tool that learns as quickly as the field evolves.