Bandcamp has moved to bar AI-generated music and audio from its marketplace, drawing a firm boundary at a moment when synthetic songs are flooding streaming charts and sparking legal fights across the industry. The company said it wants fans to trust that what they buy on the platform is made by humans, and it wants artists to know their work will not be crowded out or imitated by machine-made clones.

What Bandcamp’s AI Music Ban Covers and Prohibits

The new guidelines prohibit tracks created wholly or in substantial part with generative AI tools, and they explicitly bar using AI to mimic an artist’s voice, likeness, or signature style. That language goes beyond disclosure rules to a hard stop: if a model did the heavy lifting, the file is not welcome. The policy also signals that Bandcamp will act on complaints of impersonation and style copying, which have become flashpoints as voice clones and unauthorized pastiches spread.

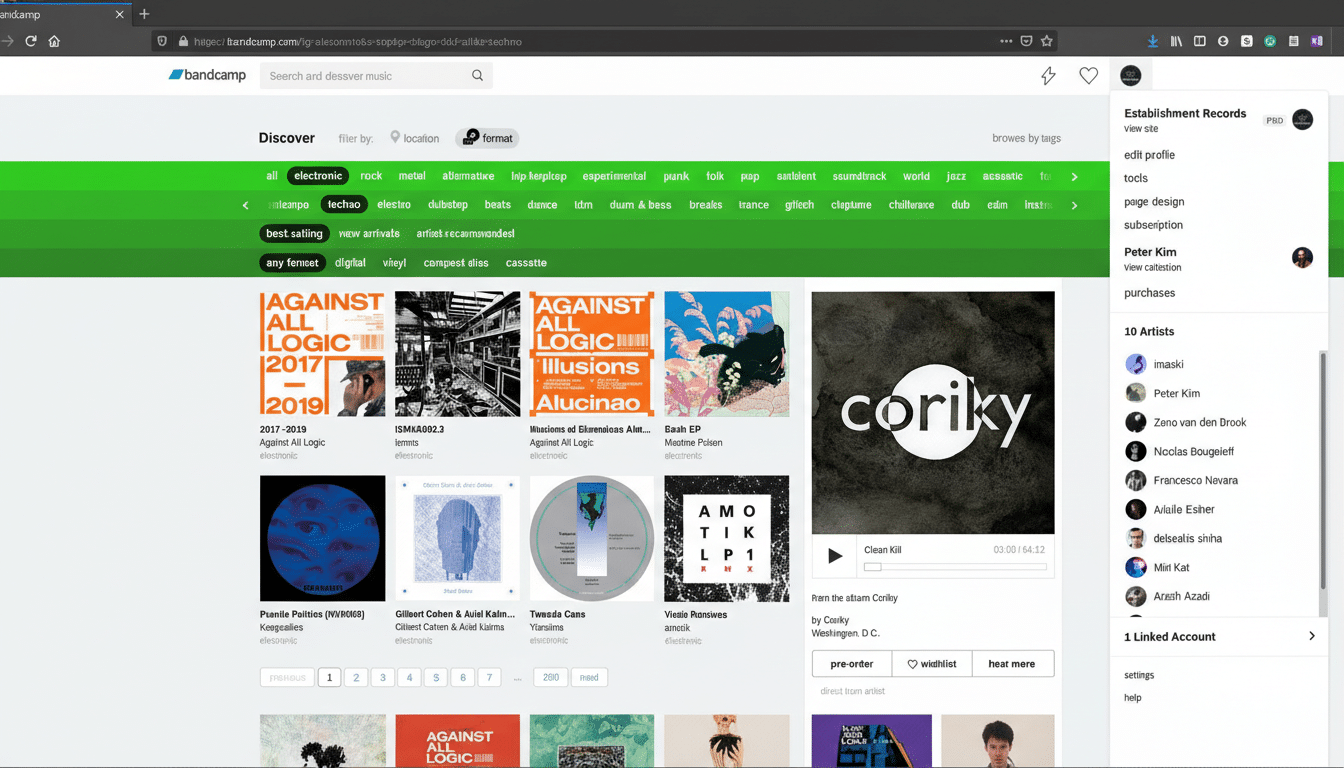

Practically, the move aligns with Bandcamp’s identity as a marketplace for direct sales—downloads, vinyl, cassettes, and merch—rather than a streaming platform that monetizes volume. The bet is simple: people come to Bandcamp to support human creators, and the commercial demand for AI tracks on a store built around fandom is minimal.

Why Bandcamp Is Drawing a Hard Line on AI Now

AI music generators have improved rapidly, and the line between human-crafted and machine-fabricated songs has blurred. Tools like Suno can produce radio-ready tracks from text prompts, and synthetic recordings have already landed on major Spotify playlists and on Billboard charts. That acceleration poses a reputational risk for platforms that trade on authenticity and discovery.

Recent examples illustrate the stakes. A Mississippi creator used AI tools to turn her poetry into a viral R&B hit under the persona Xania Monet, reportedly securing a multimillion-dollar deal with Hallwood Media. The episode energized investors and alarmed many working musicians, who see unlicensed training and style appropriation as existential threats.

Legal and financial crosswinds shaping AI music platforms

The law remains unsettled. Major labels including Sony Music Entertainment and Universal Music Group have sued Suno, alleging that its systems were trained on copyrighted catalogs without authorization. In a separate high-profile case, a federal judge found that a leading AI company’s training on copyrighted books could qualify as fair use, but that acquiring pirated copies of those books did not. The company then agreed to a settlement of roughly $1.5 billion, a sum that still amounts to a small fraction of its valuation. Together, these developments hint at a future in which training may be tolerated while data-sourcing practices draw penalties, a nuanced outcome that leaves music rights-holders with limited clarity.

Despite the uncertainty, capital continues to pour into the space. Suno recently closed a large Series C round led by Menlo Ventures at a multibillion-dollar valuation, with participation from Hallwood Media. The gap between venture enthusiasm and rights-holder pushback has widened, leaving distribution platforms caught in the middle. Bandcamp’s policy removes that ambiguity, at least on its own turf.

How Bandcamp’s policy differs from other music platforms

Many large services are experimenting with labeling synthetic content or limiting voice cloning rather than imposing outright bans. YouTube has rolled out disclosure requirements and new takedown pathways for deepfaked music. Some streamers have cracked down on bot-driven uploads and stream manipulation while still allowing AI-assisted creation that meets their policies. Bandcamp’s stance is cleaner and more restrictive: no AI-made tracks and no AI-driven impersonation.

That clarity could become a selling point for independent artists and labels who want a human-only marketplace for releases, preorders, and merch bundles. It may also push AI music makers to other venues optimized for experimentation or background audio, potentially creating a bifurcated ecosystem.

Enforcement challenges and potential ripple effects ahead

Detection will be challenging. Watermarking standards for audio are nascent, and model output can be edited to evade simple checks. Expect Bandcamp to lean on community reporting, provenance claims from artists, and case-by-case reviews for takedowns. False positives are a genuine risk, especially in electronic music, where synthetic timbres and sample-based workflows are normal. The key distinction in the policy is authorship: music composed and produced by humans, even with software tools, is fine; model-generated content is not.

If the policy sticks, it could influence licensing talks and platform norms. Independent distributors may follow with stricter definitions of “human authorship,” while major streaming services keep experimenting with guardrails and labels. For musicians, the upside is a clearer channel to audiences who want to pay for human creativity. For AI startups, the message is equally clear: without transparent licensing and provenance, access to certain high-intent marketplaces will be closed.

Bandcamp is making a values-forward, commercially pragmatic wager that aligns with how its community buys music. In a year defined by machine-made hits and courtroom battles, the company has staked out a simple promise to fans and artists alike: what you discover and purchase there will be the product of human hands and voices.