re:Invent, AWS’s signature event, opened with a clear message: the era of passive assistants is giving way to autonomous agents and custom silicon optimized for AI at scale. From the new Trainium chip and on‑prem AI “factories” to an expanded agent platform and model lineup, the company pitched speed, control, and flexibility to enterprises that need generative AI to deliver measurable value.

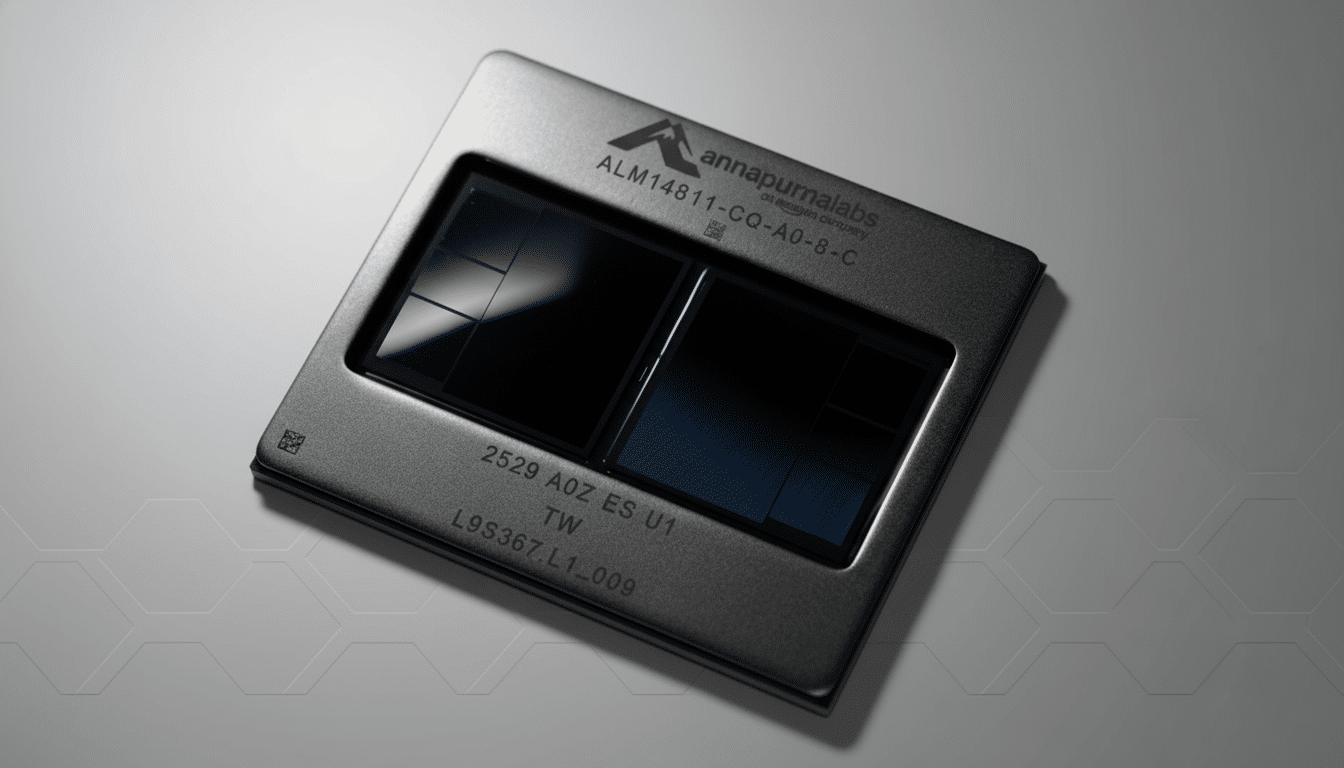

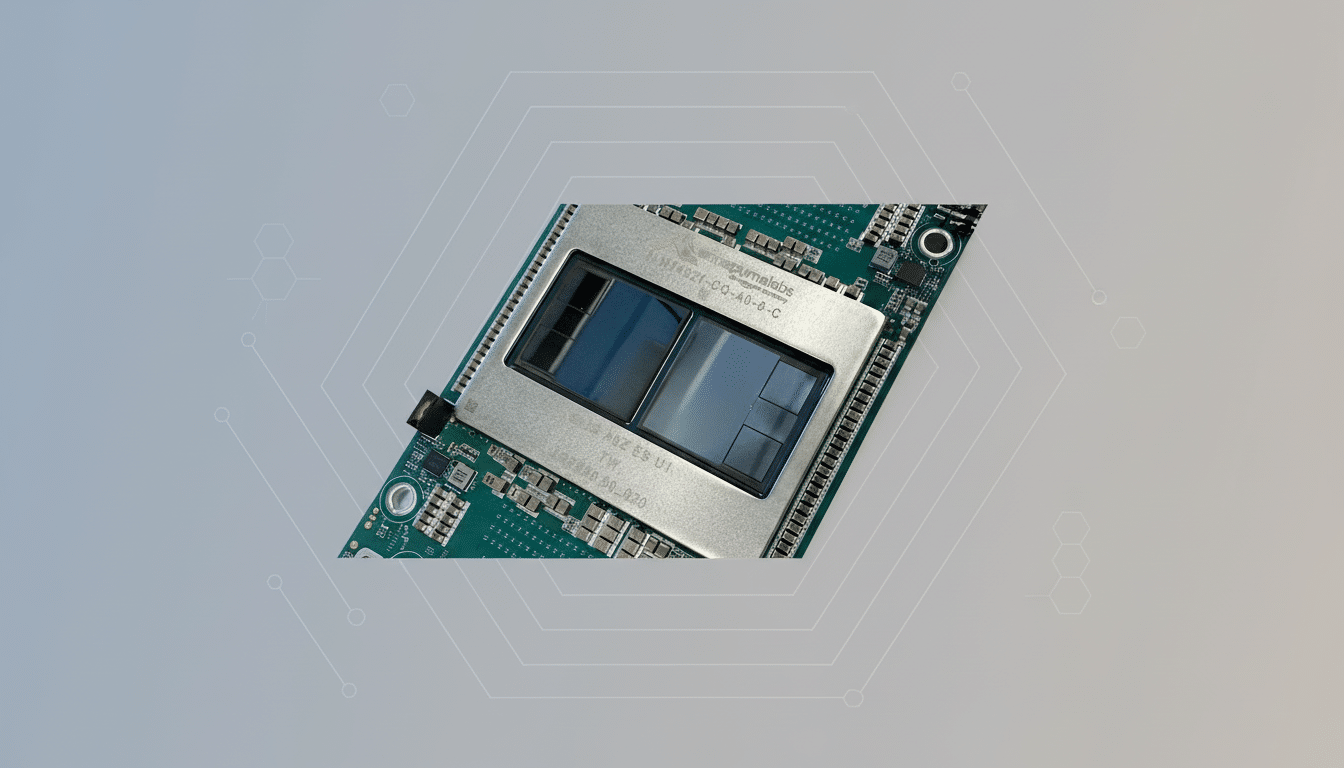

Trainium3 and UltraServer: Performance and Efficiency Push

Trainium3, paired with a new UltraServer system, was the hardware headliner. According to AWS, Trainium3 delivers up to a 4x performance improvement for training and inference while cutting total cost of ownership by as much as 40%. If those figures hold in production, that blend of throughput and efficiency could shorten model development cycles and lower the cost of long‑running workloads.

AWS also teased Trainium4, with an overt nod toward interoperability with Nvidia’s platforms. That roadmap reflects a pragmatic stance: businesses want options across accelerators, and AWS plans to work alongside the de facto GPU ecosystem rather than displace it.

AgentCore Grows Up with Policy, Memory and Evaluation

AgentCore, AWS’s toolkit for building AI agents, is gaining the guardrails and observability that enterprises have requested. A new Policy feature lets teams set clear limits on what agents can and cannot say, while logging and memory let agents recall user preferences and context across conversations.

To help teams separate hype from reality, AWS now offers 13 built‑in evaluation systems designed for reproducible testing. That sort of standardized testing, for years a best practice in MLOps, should help teams catch regressions and compare agent performance across use cases before deploying at scale.
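As a rough illustration of what catching regressions with reproducible tests can look like, here is a minimal Python sketch. It does not use AgentCore or any AWS API: the test cases, the keyword-based scoring rule, the `tolerance` threshold, and the two stand-in agents are all hypothetical, chosen only to show the idea of scoring a candidate agent against a fixed baseline on a fixed test set.

```python
from statistics import mean

# Hypothetical fixed test set: (prompt, keyword the reply should contain).
# These cases are illustrative only and do not come from AWS.
CASES = [
    ("refund status for order 17", "refund"),
    ("reset my password", "password"),
    ("cancel my ride", "cancel"),
]


def score(agent, cases):
    """Return the fraction of cases whose reply contains the expected keyword."""
    return mean(
        1.0 if expected in agent(prompt).lower() else 0.0
        for prompt, expected in cases
    )


def has_regressed(baseline, candidate, cases, tolerance=0.05):
    """Return True if the candidate scores more than `tolerance` below the baseline."""
    return score(candidate, cases) < score(baseline, cases) - tolerance


# Stand-in agents: plain functions that map a prompt to a reply.
def baseline_agent(prompt):
    return f"Sure, I can help with: {prompt}"


def candidate_agent(prompt):
    return "Sorry, I cannot help with that."


if __name__ == "__main__":
    print("baseline score:", score(baseline_agent, CASES))
    print("candidate score:", score(candidate_agent, CASES))
    print("regressed:", has_regressed(baseline_agent, candidate_agent, CASES))
```

Because the test set and scoring rule are fixed, the same comparison can be rerun on every agent change. A real evaluation would likely use richer metrics than keyword matching.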

Frontier Agents Begin Real Work with Minimal Oversight

AWS unveiled three so‑called “Frontier” agents, meant to run with little human supervision. The Kiro autonomous agent, which writes code and learns to navigate a team’s workflows, can run on its own for hours or even days, AWS says. A second focuses on security tasks such as code review, and a third handles DevOps, aiming to prevent problems during releases. Preview builds are available now for early adopters who want to test agentic automation across the software lifecycle.

Nova Models and Nova Forge Focus on Customization

Amazon Web Services released four models in its Nova family: three for text generation and one that supports both text and images. To tie model selection to enterprise data, the new Nova Forge service lets customers choose from pre‑trained, mid‑trained, or post‑trained models and fine‑tune them on proprietary datasets. The moral of the story: tailor the model to the business, rather than reshaping the business around the model.

AI Factories to Take Cloud AI to Customer Data Centers

AWS is offering “AI Factories” that run in a customer’s own on‑premises facilities, aimed at organizations bound by data sovereignty or latency requirements.

Developed in collaboration with Nvidia, the systems are compatible with Nvidia GPUs and can also feature Trainium3. For heavily regulated industries and governments, this hybrid approach provides a way to adopt generative AI without having to move highly sensitive workloads off‑prem.

Customer Proof Points Reflect Early ROI from Agents

Lyft described how it uses Anthropic’s Claude through Amazon Bedrock to power an agent that handles driver and rider issues. The company says average resolution time is 87% lower and driver adoption is up 70% this year. These are the sorts of real‑world improvements that CFOs clamor for: shorter time to case resolution, higher user satisfaction, fewer tickets escalated to humans, and lower support staffing costs.

Why It Matters in the Cloud AI Race for Enterprises

From custom silicon to on‑prem options and agent safety tooling, AWS is going after the pain points that have slowed enterprise AI adoption: cost, control, and trust. Analyst firms such as Gartner and Synergy Research have consistently pointed to AWS’s scale advantage in cloud; maintaining that edge in AI will require turning maturing chips and platforms into lower training bills, tighter governance, and reliable automation.

The big open questions are well known. Can firms consistently verify agent behavior before letting agents run autonomously? Will Trainium3’s gains hold across a broad range of model types, and not just on benchmarks? And how will AWS reconcile its commitment to an open ecosystem with the gravity of its own stack, as customers combine Nova models, Bedrock‑hosted options, and Nvidia‑based workflows?

For the moment, the trend is clear. AWS is betting that enterprises will want agentic systems with strong guardrails, model portability without fragmentation, and infrastructure that meets them where their data lives. If those bets pan out, the next wave of AI wins will be tallied not by demos pitched but by production tickets closed, incidents averted, and training cycles shaved.