Apple is pausing development of a lower-end version of the Vision Pro, its goggle-like augmented reality headset that has reportedly been in employees’ hands since 2015, to focus on a more ambitious effort: smart glasses that support games and video. The shift reflects a rebalancing of Apple’s spatial computing roadmap: fewer bets on bulky, high-priced headgear, and more emphasis on everyday wearables that look like glasses and rely more on voice, AI, and the iPhone.

Why a Budget Vision Pro Was Put on Ice for Now

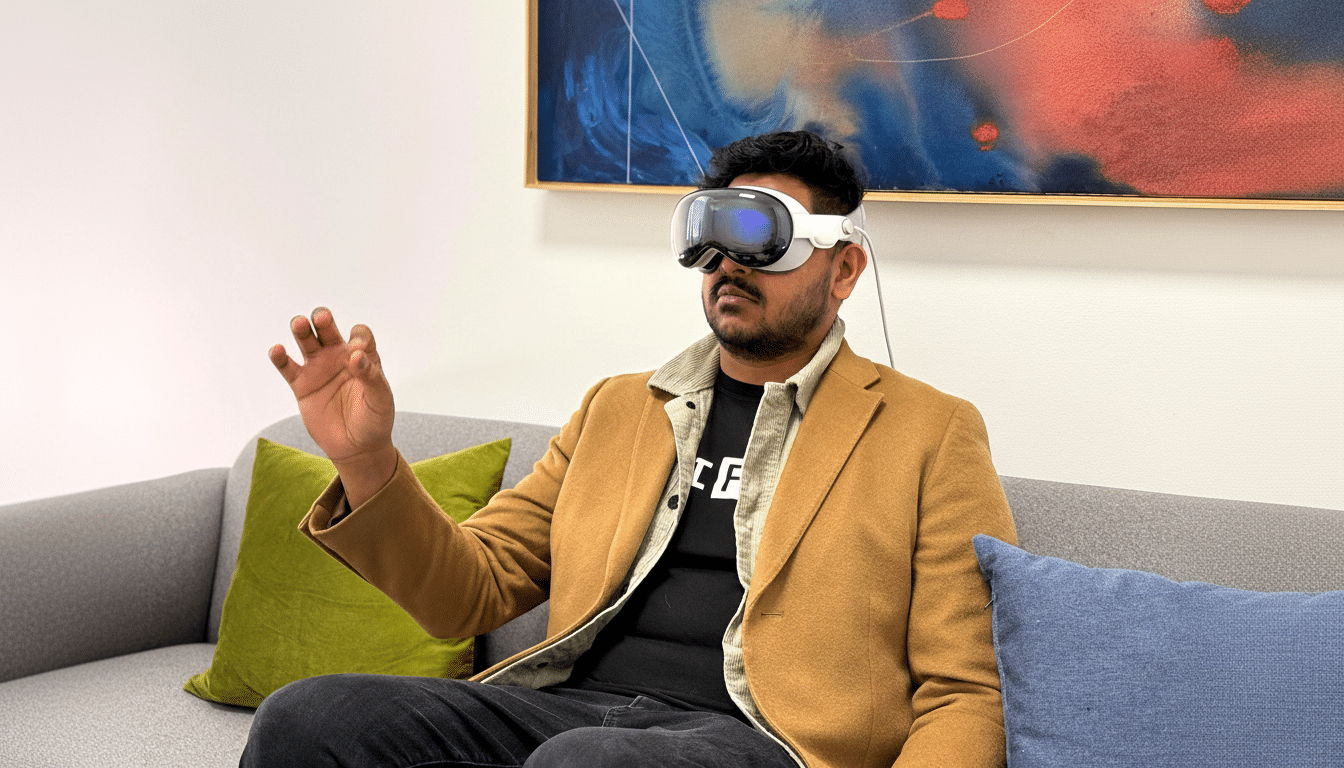

The Vision Pro showed Apple’s technological edge, but price, form factor, and weight kept it from the mainstream. After an initial burst of interest, demand quickly waned, a common pattern in the mixed-reality category, which analyst firms such as IDC have warned is held back by high prices, poor comfort, and limited content. A less expensive Vision Pro had been planned for Apple’s longer-term roadmap to expand the addressable market, but the company is now said to be redirecting its resources and focus toward glasses that are lighter, more wearable, and easier to justify for daily use.

Importantly, this doesn’t mean Apple is ditching its headset entirely. The report says an upgraded Vision Pro is still in the works. What’s changing is the prioritization: resources are moving to a category of device that has a more realistic path to mass adoption in the near term and a lower bill of materials.

Two Smart Glasses Paths in the Works at Apple

The strategy for Apple’s smart glasses seems to run on two tracks. The first model may be display-less and closely integrated with an iPhone, functioning as a voice-first AI wearable with built-in speakers, microphones, and cameras. (Imagine hands-free access to Siri, directions, photo capture, and notifications, all without the social friction of a face-mounted screen.) This product is referred to internally as N50 and has been on a fast track.

The second is a glasses-style device with a lightweight display. While not as immersive as a full headset, a subtle overlay would work well for glanceable tasks like navigation, messaging triage, translation, and contextual information. Both products would rely on Apple silicon and the company’s push into on-device AI. Apple has been quietly rebuilding Siri with generative capabilities and a conversational design, which makes sense when the primary interface is your voice, not a touchscreen.

Design will matter as much as technology. Apple has a track record of turning components into consumer fashion, and the report indicates there will be a number of styles on offer. Look for much more emphasis on “privacy cues,” such as recording indicators and clear capture controls, reflecting lessons learned from previous wearables like Google Glass and Snap Spectacles. Health sensors are also rumored to be in the mix, in keeping with Apple’s multi-year health play across Watch, iPhone, and AirPods.

Racing Meta and the Crucial Wearability Factor for Users

Apple’s pivot sits directly in the lane Meta has been building with its Ray-Ban Meta smart glasses. That product has gained a foothold by doing a few things well — hands-free capture, live streaming, and a voice assistant — without attempting to fulfill the promise of full augmented reality. Publicly, Meta has boasted of engagement that was stronger than anticipated, a helpful proof point that regular people will don glasses if the glasses look like glasses and come with real-world utility.

Competing here means solving for battery life, audio clarity, weight distribution, and social acceptance. What gives Apple the edge is integration with its ecosystem: pairing with iPhones, iCloud, Photos, Apple Music, Maps, and an increasing number of on-device AI features. If Apple splits compute between the phone and a custom low-power chip in the glasses, it could cut down on heat and weight while maintaining responsiveness, which is critical for getting people to wear them all day.

What It Means for Developers and Buyers in Spatial Tech

For developers, this shift points to something more expansive than simply headsets. visionOS would presumably evolve in tandem with new frameworks for voice-first, glanceable experiences that span glasses to iPhone to Watch. Look for Apple to push app developers toward multimodal interactions — speak, see, and tap — with the aid of generative AI for summarization, search, and personal assistance.

For buyers, it’s a signal that Apple sees the near-term future of “spatial” as ambient and assistive rather than immersive. That’s a practical reading of where consumer demand is right now. High-end headsets will continue to be powerful tools for niche productivity and entertainment, but the mass market favors devices that disappear into everyday routines. Normal-looking smart glasses that cost a fraction of the price of a headset and make relatively simple tasks faster could be Apple’s next building block toward a fuller spatial computing platform.

The larger takeaway: Apple is prioritizing wearability, battery life, and voice-first AI over pro-grade headsets, which remain on a slower path to market.

And should the company execute, it could very well follow a well-worn playbook: arriving late to the party and then scaling the category through design, ecosystem integration, and developer incentives. That’s how the smartphone, smartwatch, and wireless earbuds waves ultimately fell Apple’s way. Smart glasses may be next.