Apple has detailed how the App Store will comply with a new Texas law, even as other large technology companies remove restrictions for their users. App Store and software updates aimed at protecting children online take effect this month.

Those who say they are under 18 will need to be part of a Family Sharing group, which will require parental or guardian consent for downloads from the App Store as well as in-app purchases and other items that go through Apple’s payment system.

What Texas Requires from App Marketplaces Under SB 2420

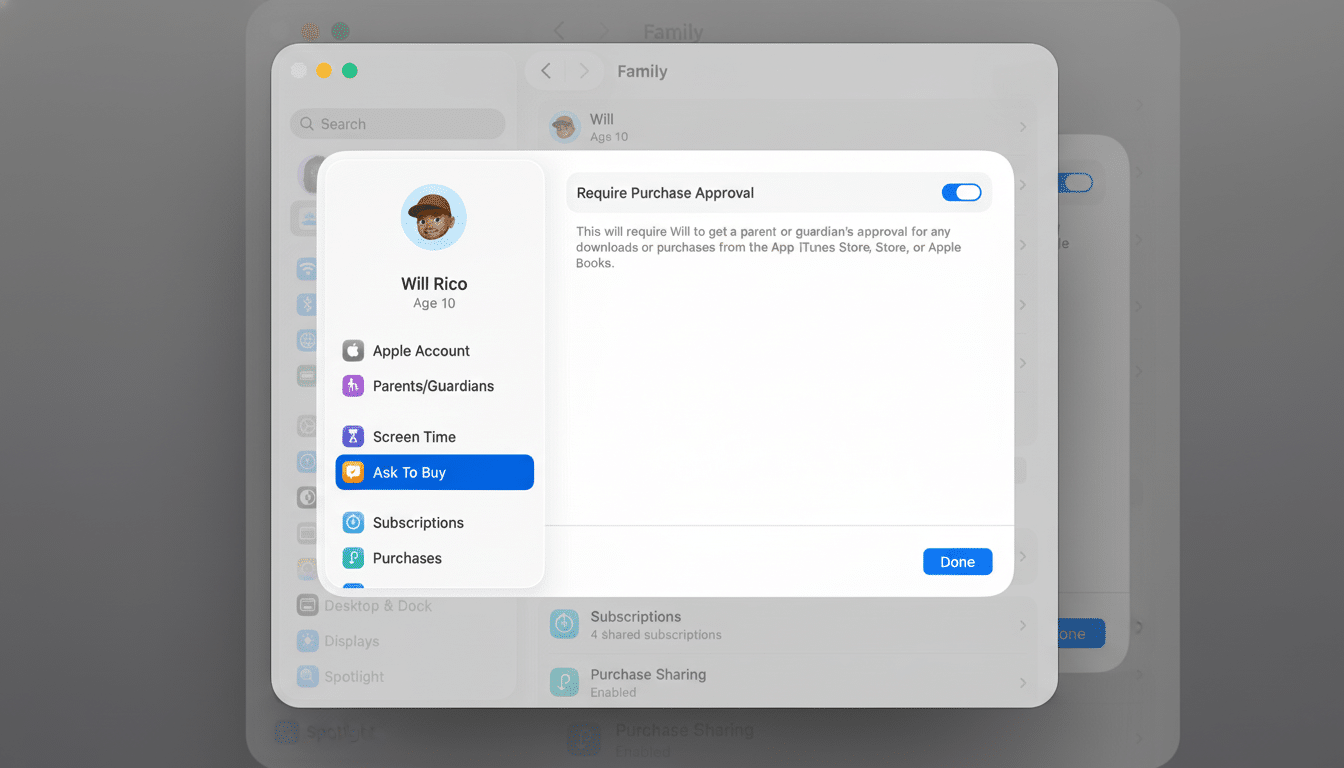

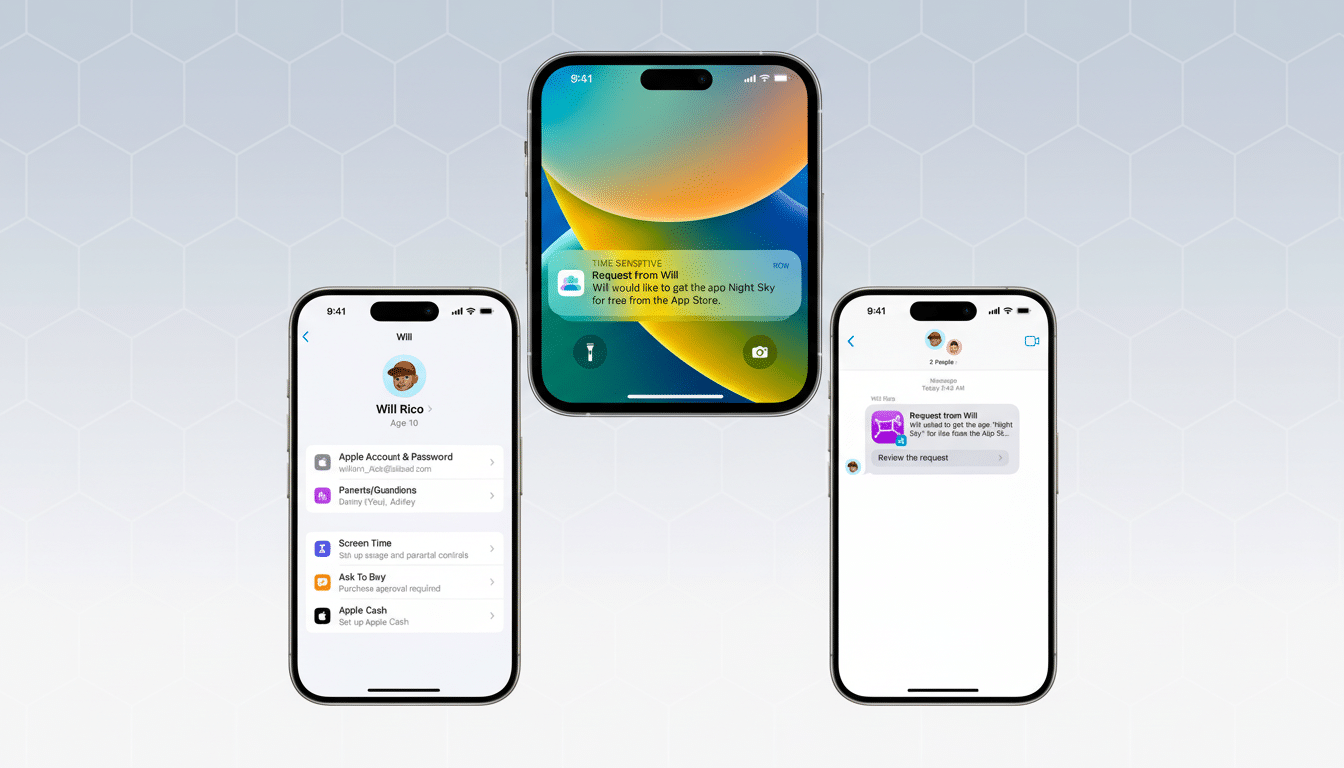

Texas Senate Bill 2420 seeks to place greater responsibility for protecting young people on app marketplaces. The headline requirements are simple enough: check a user’s age status at account setup and make sure minors have parental or guardian supervision when they use the marketplace. Many families already use Apple’s “Ask to Buy,” but the Texas framework makes it a legal baseline rather than an optional layer.

The law’s authors say its purpose is to protect children from exposure to harmful content and from unapproved purchases. Detractors argue that sweeping store-level checks could run the risk of gathering sensitive data that even relatively benign apps don’t need — and potentially exacerbate privacy and equity issues by requiring verification with government IDs or credit cards.

How Apple Will Implement Texas Age Verification

To make it easier for developers to adapt their services, Apple will release the Declared Age Range API, which lets an app learn a user’s age category without receiving a specific birth date or other identifying information. The goal is to give developers a uniform, Apple-managed signal they can use to tailor experiences for minors, minimize exposure to adult content and restrict data collection, without building their own high-friction age gates.
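As a rough illustration of how an app might act on a coarse age signal like this, the sketch below maps an age category to a set of feature flags. It is not Apple's API: the `AgeCategory` buckets, the `FeaturePolicy` fields and the `policy_for` function are all hypothetical names chosen for this example, and the real ranges and return values may differ.

```python
from dataclasses import dataclass
from enum import Enum


class AgeCategory(Enum):
    # Hypothetical buckets; the platform's real ranges may differ.
    UNDER_13 = "under_13"
    TEEN = "13_17"
    ADULT = "18_plus"
    UNKNOWN = "unknown"  # no signal available, or the user declined to share


@dataclass(frozen=True)
class FeaturePolicy:
    show_mature_content: bool
    allow_social_discovery: bool
    allow_data_sharing: bool
    allow_user_posts: bool


def policy_for(category: AgeCategory) -> FeaturePolicy:
    """Map a coarse age category to feature flags; no birth date is needed."""
    if category is AgeCategory.ADULT:
        return FeaturePolicy(True, True, True, True)
    if category is AgeCategory.TEEN:
        # Teens can post, but discovery, data sharing and mature content are off.
        return FeaturePolicy(False, False, False, True)
    # Under 13 and unknown both fall back to the most restrictive settings.
    return FeaturePolicy(False, False, False, False)
```

Treating an unknown category like the youngest bucket is a design choice in this sketch, not something the article attributes to Apple; an app could equally prompt the user again.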

Apple is also adding APIs that let developers re-request a parent’s consent when a product undergoes “material changes,” such as new data practices or features that increase risk. That mirrors best practice under federal rules such as the Children’s Online Privacy Protection Act, which require fresh verifiable parental consent when what an app collects or does is materially different.
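One way a developer might decide when a change is "material" is to compare what a parent originally approved with what the current version does. The sketch below assumes a hypothetical `AppDisclosure` record and a hypothetical `needs_reconsent` check; neither is part of Apple's APIs, and what counts as material in practice is a legal question this code does not settle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppDisclosure:
    # Hypothetical summary of what a given app version collects and enables.
    data_collected: frozenset
    risky_features: frozenset


def needs_reconsent(approved: AppDisclosure, current: AppDisclosure) -> bool:
    """Return True if the current version adds data types or risky features
    beyond what the parent approved. Removing items never triggers re-consent."""
    new_data = current.data_collected - approved.data_collected
    new_features = current.risky_features - approved.risky_features
    return bool(new_data or new_features)


approved = AppDisclosure(frozenset({"email"}), frozenset())
updated = AppDisclosure(frozenset({"email", "location"}), frozenset({"public_chat"}))
print(needs_reconsent(approved, updated))
```

In this example the updated version adds location collection and a public chat feature, so the check reports that consent should be requested again.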

On the consumer side, the changes are intended to fit into the account and Family Sharing flows that Apple already has. When users set up an account, they declare whether they are 18 or older. Those who say they are under 18 must join a Family Sharing group, which lets guardians approve downloads, purchases and in-app subscriptions made through Apple’s payment system.
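The flow described above can be sketched as a small decision function. This is a simplified model for illustration only: the `Account` fields, the `Decision` values and `download_decision` are hypothetical, and Apple's actual account logic is not public in this form.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"                # adult account, no approval needed
    ASK_GUARDIAN = "ask_guardian"  # minor in a family group, guardian must approve
    BLOCK = "block"                # minor with no family group


@dataclass(frozen=True)
class Account:
    declared_adult: bool                   # the user's own declaration at setup
    family_group_id: Optional[str] = None  # hypothetical Family Sharing link


def download_decision(account: Account) -> Decision:
    """Decide how a download or purchase request should be handled."""
    if account.declared_adult:
        return Decision.ALLOW
    if account.family_group_id is None:
        return Decision.BLOCK
    return Decision.ASK_GUARDIAN
```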

Privacy Tensions and Industry Pushback on Age Rules

Apple says it is in favor of stronger protections for children but has opposed broad age-verification requirements that would force the collection of personally identifiable information on a wide scale — even when someone is just using an app to turn off the lights. The company says platform-level attestations and privacy-preserving signals are safer than IDs or biometric scans sent to many third parties.

Google, which owns Google Play, has said it plans to implement similar changes for users in Texas and in other states that enact a similar law. In a public policy blog post, Google’s Kareem Ghanem questioned whether some proposals backed by big social platforms shift responsibility for youth safety from the services where risks materialize to the app stores that serve as intermediaries.

Civil society groups like the Electronic Frontier Foundation and the Center for Democracy & Technology have long warned that age checks can effectively become identity checks, raising the risk of data breaches and locking out users who don’t have traditional identity documents. Texas’ approach is effectively a test of whether platform-provided signals can thread that needle: providing high-assurance controls without requiring ID uploads at every turn.

What This Means for Developers and Families

For developers, the first step is implementation. Mixed-audience apps will have to honor the Declared Age Range signal, restrict sensitive features for kids, and be prepared to request parental re-consent after material updates. Clear in-app copy, streamlined consent prompts and sensible defaults (limiting social discovery or data sharing for teen accounts, for instance) will help minimize friction and facilitate compliance.

Parents and guardians will play a bigger role in the download and purchase flow. Family Sharing becomes the conduit for minors’ App Store activity, consolidating approvals and making spending at least somewhat predictable. The upside is stronger protections; the downside is more prompts when a teen wants to try new apps or make in-app purchases, especially in games and social tools, many of which ship updates every few weeks.

Other States and the Way Forward for Compliance

Apple says guardrails similar to those put in place for users in Texas will be applied to users in other states with similar requirements, including Utah and Louisiana. That leads to a patchwork in which platform-level tools need to take state lines into account while maintaining a coherent developer experience and a consistent privacy model for users.

The stakes are high: If Texas’ model is successful — protecting teenagers and generating neither a sprawling identity database nor a fresh round of heated clashes over gender — it could become the template for other state legislatures. Pressure could swing back toward app-level controls and service-specific obligations if it adds friction without lessening harm. Either way, Apple’s privacy-focused APIs and mandatory Family Sharing for minors represent the most detailed, legible plan so far for how exactly a dominant platform would comply with state age-verification laws while avoiding normalizing ID checks every time you download an app.