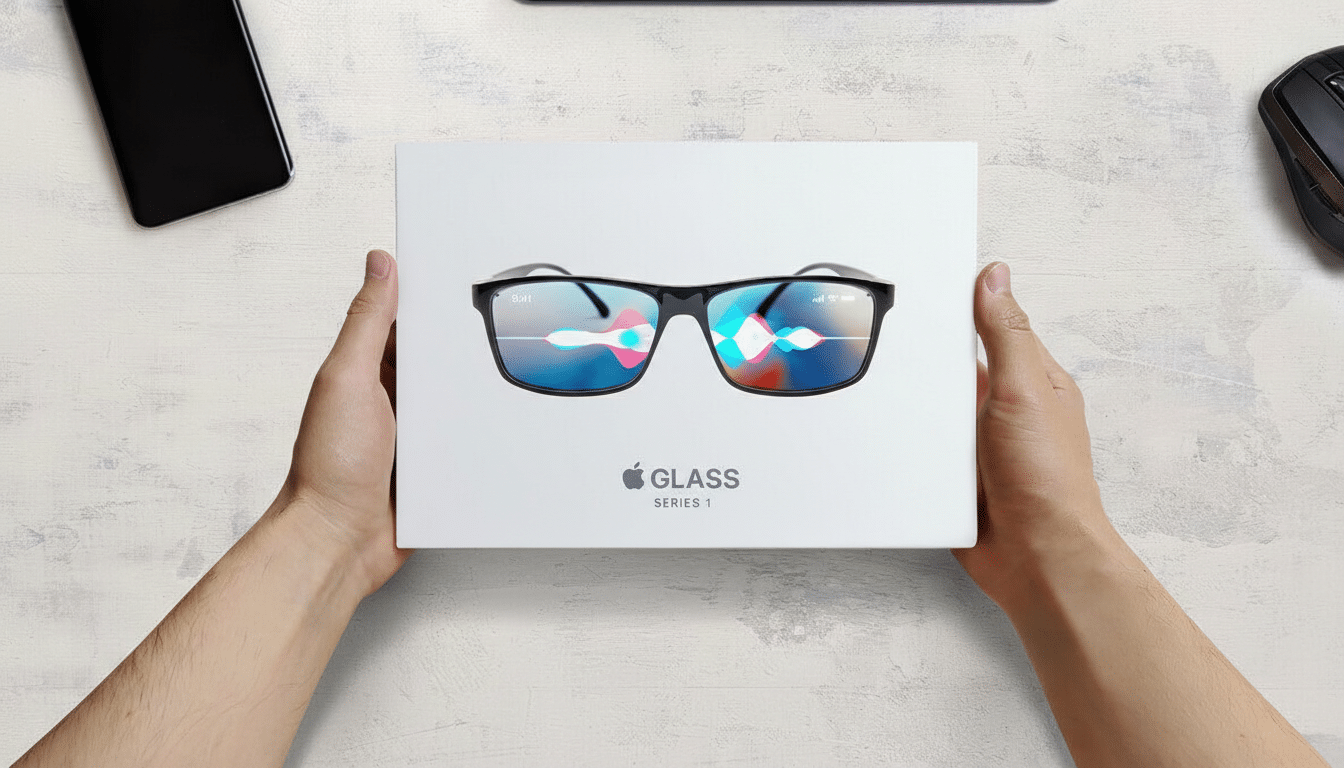

Apple is quietly building a new family of AI wearables — smart glasses with a display, camera-enabled AirPods, and a pendant-style assistant — according to a report from Bloomberg’s Mark Gurman. The devices are said to center on a next-generation Siri, lean on the iPhone for compute and connectivity, and use cameras to power context-aware features rather than photography.

Inside the report on Apple’s emerging AI wearables plans

Gurman reports that Apple’s glasses, internally codenamed N50, are the most ambitious of the trio, pairing a lightweight frame with a built-in display and camera system. He adds that Apple CEO Tim Cook has signaled a sharper focus on AI-first hardware, though any timeline remains fluid and products could still be delayed or canceled.

The AirPods concept stands out: earbuds with cameras are rare, and in Apple’s case the goal would be environmental understanding — identifying objects, reading text, or assisting with navigation — not taking photos. The pendant, similarly, would rely on an always-available mic and sensor stack to serve quick prompts and visual queries while offloading heavy computation to the iPhone and cloud.

What AI glasses could actually do in everyday use

Beyond notifications, the glasses would be a canvas for “ambient AI”: live translation, hands-free directions with arrows anchored to the real world, scene descriptions for accessibility, and just-in-time answers based on what the wearer sees. Expect tight integration with iOS, drawing on Maps, Photos, and Wallet data (with user permission) to provide context without forcing the wearer to juggle screens.

Technically, Apple has the building blocks: lightweight displays informed by its spatial computing work, low-power silicon derived from H-series audio chips and watch-grade processors, and a maturing toolkit for on-device machine learning. Battery life and heat remain the gating factors for everyday glasses, which is why the reported phone-tethered design is a pragmatic first step.

AI AirPods with vision for context-aware features

Adding tiny cameras to earbuds could unlock subtle but high-impact features. Think posture and workout-form feedback based on head movement and visual checks, real-time hazard detection that warns runners before they step into traffic, or automatic audio adjustments based on where you’re looking. Apple already uses the motion sensors in AirPods for head tracking in Spatial Audio; vision would give features like that richer context.

A camera in an earbud could also sidestep some of the social friction of gaze-directed glasses cameras, but it introduces new engineering constraints around power draw, lens placement, and privacy signaling. Expect prominent capture indicators and strict on-device filtering to reassure bystanders, areas where Apple has historically leaned on hardware-level safeguards.

A pendant for everyday prompts and quick visual queries

Pendants aren’t new — recent attempts by startups highlighted both the promise of hands-free AI and the pitfalls of immature software and battery life. Apple’s advantage would be a polished Siri overhaul, a vast developer ecosystem, and seamless continuity with iPhone and Apple Watch so the pendant augments rather than replaces screens.

Use cases could include rapid-fire reminders, recognizing grocery items from their packaging to build a shopping list, meeting summaries when paired with a phone, or accessibility features like text reading and scene narration. The report suggests the cameras would be used for perception rather than social sharing, in keeping with a utility-first design instead of one built around content capture.

Privacy will make or break it for camera-equipped wearables

Wearables with cameras demand clear rules: visible indicators, on-device processing by default, and granular controls over what is stored or shared. Apple’s track record, from differential privacy to the Secure Enclave, sets expectations that any ambient AI features will include opt-in data access, minimal data retention, and clear prompts that discourage recording in sensitive spaces.

There’s also the regulatory backdrop. Camera-forward devices must navigate school and workplace restrictions, as well as evolving guidance in the EU and other regions. Building trust for daily wear will require both technical safeguards and consistent policy enforcement.

Competition and timing for Apple’s next AI wearables push

The timing aligns with a broader industry push into AI wearables. Meta’s latest smart glasses have gained momentum, with reports indicating that sales accelerated markedly last year, and Google is expected to advance its Android-based XR initiatives. OpenAI and others are exploring assistant-first hardware. Apple, meanwhile, has reportedly pursued a Siri revamp that can draw on external large language models such as Google’s Gemini while keeping user data protected.

Analysts note that Apple’s disciplined spending on AI infrastructure relative to its peers, coupled with its massive installed base, gives it room to wait until the user experience is sticky enough to scale. If Apple can pair everyday utility with social acceptability, glasses, earbuds, and a pendant could become the next leg of its wearables business.

What to watch next as Apple explores AI wearables

As with any early hardware program, plans can change quickly. Supplier activity, developer frameworks inside iOS, and Siri capability demos will be the best leading indicators. Bloomberg’s reporting suggests Apple is closer than many expected — but as always with Apple, the final word will be what’s ready, not just what’s possible.