Apple’s long-rumored augmented reality glasses are unlikely to hit store shelves for years, with industry analysis pointing to 2028 as the earliest realistic window. That projection, from Omdia’s latest AR and display outlook, suggests Meta could beat Apple to market by a few months with its own advanced eyewear targeted for 2027.

Why the AR glasses development timeline is stretching

The holdup is less about software vision and more about physics, power, and manufacturing. True AR glasses, meaning thin, light, and socially acceptable ones, must deliver bright, color-accurate visuals in daylight while running six-degrees-of-freedom (6DoF) tracking, spatial mapping, and low-latency graphics on a tiny battery. Delivering that combination of optics, compute, and battery life in an everyday frame remains brutally hard.

Waveguide optics, which make glasses-like form factors possible, impose major brightness losses, often north of 80%. Displays must therefore start exceptionally bright to remain legible outdoors, but pushing luminance drives up heat and power draw. At the same time, compute has to shrink to fit inside a glasses temple without turning into a hot spot on your face. These engineering constraints, not a lack of ambition, are what push timelines out.
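The brightness penalty comes down to simple division: only the light that survives the waveguide reaches the eye, so the panel must emit the target luminance divided by the fraction transmitted. The Python sketch below illustrates that arithmetic under two assumptions that do not come from Omdia: the loss is treated as a single fixed fraction, and the 1,000-nit target at the eye is a hypothetical figure chosen only for illustration. The helper name `required_source_nits` is likewise hypothetical.

```python
def required_source_nits(target_eye_nits: float, optical_loss: float) -> float:
    """Return the luminance (in nits) a microdisplay must emit so that
    `target_eye_nits` still reaches the eye after the waveguide loses
    `optical_loss`, expressed as a fraction from 0 up to (but not including) 1.

    Assumption: the loss is one fixed fraction, ignoring how real
    waveguide efficiency varies with design, color, and viewing angle.
    """
    if not 0.0 <= optical_loss < 1.0:
        raise ValueError("optical_loss must be in the range [0, 1)")
    transmitted_fraction = 1.0 - optical_loss
    return target_eye_nits / transmitted_fraction


# Hypothetical target luminance at the eye, for illustration only.
TARGET_EYE_NITS = 1_000.0

for loss in (0.80, 0.90, 0.95):
    source = required_source_nits(TARGET_EYE_NITS, loss)
    print(f"{loss:.0%} loss -> display must emit ~{source:,.0f} nits")
```

At an 80% loss, only one fifth of the light survives, so the panel must emit five times the luminance that reaches the eye; at 95% the multiplier rises to twenty. Real waveguide efficiency varies with design and wavelength, so these multipliers are illustrative rather than product specifications.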

How display technologies will define the launch window

Omdia’s forecast revolves around OLEDoS, or OLED on silicon, which packs millions of pixels onto tiny microdisplays. Apple already uses micro‑OLED, another name for the same technology, in Vision Pro, where two panels deliver an aggregate 23 million pixels. For glasses, Omdia expects Apple to adopt dual 0.6‑inch OLEDoS displays, one per lens, to hit acceptable sharpness in a minimal footprint.

Compared with legacy LCoS (liquid crystal on silicon), OLEDoS is thinner, lighter, and more power efficient, all critical advantages for eyewear. But micro‑OLED struggles to reach the extreme luminance needed for bright daylight AR once waveguide losses enter the equation. Another contender, LEDoS (microLED on silicon), promises higher luminance, lower power, and longer lifespan, yet volume manufacturing remains immature. Suppliers including Sony, eMagin, and BOE are building capacity, but yield, cost, and brightness targets still dictate the pace.

Meta and competitors are advancing toward AR eyewear

Meta’s path underscores the staged approach many are taking. Its Ray‑Ban line popularized on‑face cameras and AI features without full AR overlays. According to Omdia, Meta’s next step, a display‑equipped AR glasses platform, could arrive in 2027, likely starting with currently available microdisplays before moving to microLED once that technology is ready. Snap’s developer‑only Spectacles and various enterprise‑focused devices show a similar progression toward lighter, brighter, and more capable eyewear.

Chipsets have improved, too. Qualcomm’s latest XR silicon shows what’s possible for always‑on sensing and low‑latency graphics within tight thermal budgets. Apple’s advantage is vertical integration: custom silicon tuned for power efficiency, the camera and sensor fusion that already anchors iPhone and Vision Pro, and tight software control from visionOS to iOS. Even so, fitting all of that into frames people want to wear all day remains the bigger hurdle.

Market realities and mounting pricing pressure for AR

Adoption also counsels patience. IDC and other trackers put annual AR glasses shipments in the hundreds of thousands, far below the VR market and a rounding error next to smartphones. Analyst estimates for Vision Pro’s first‑year volumes landed well under 1 million units, reinforcing the notion that price, weight, and utility must converge before the category can scale.

For mass‑market AR, battery life must stretch across a workday, outdoor visibility must be reliable, and the price needs to land closer to a premium phone than to a high‑end laptop. Those targets pull Apple and competitors toward the same bottlenecks: display brightness and efficiency, wafer‑level yields, and optics that do not compromise comfort.

What to expect from Apple’s first glasses

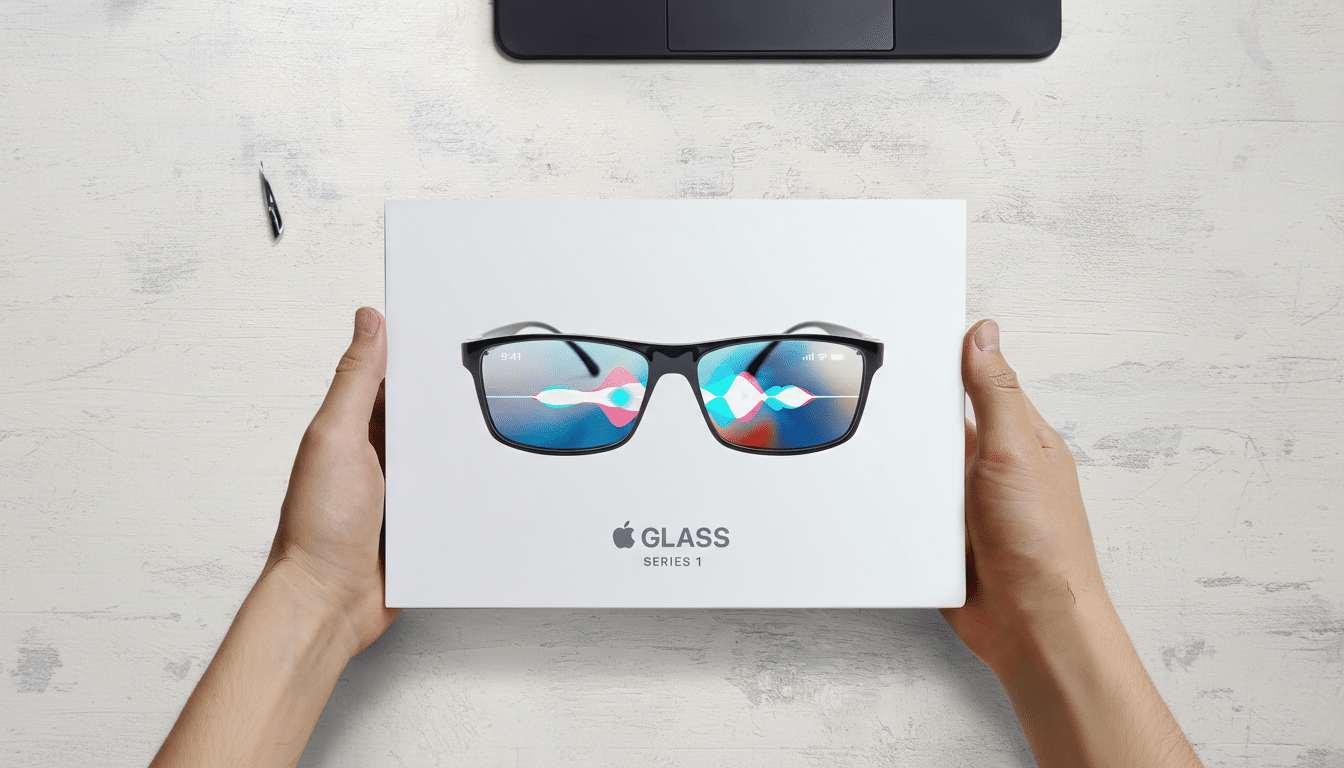

Barring surprises, the first model will likely be a standalone device rather than a tethered accessory. Bloomberg reporting previously indicated Apple shelved a Mac‑dependent concept in favor of an independent product, which aligns with the company’s broader push toward on‑device intelligence and seamless iPhone integration.

Expect dual micro‑OLED displays, a compact Apple Silicon platform tailored for ultra‑low power, and a sensor suite for head, hand, and eye input. Developers already have spatial building blocks in visionOS and its SDK, and Apple’s AI stack could enable glanceable assistants, translation, and notifications you can live with in public. The art will be deciding what to leave out to preserve weight, battery life, and privacy.

Apple’s long‑game strategy and why waiting could pay off

Waiting until 2028 may sound conservative, but it lets Apple scale microdisplay supply, mature waveguide optics, and keep thermals and cost in check. It also gives Apple time to seed spatial apps on Vision Pro so that day‑one glasses owners find real utility, not demos. If Omdia’s timing is right, Apple’s AR debut will hinge less on being first and more on being the first to feel inevitable.