Amazon Web Services is trying to rattle Nvidia in the AI hardware game with Trainium3, a training chip and system that delivers major performance gains today and promises an easier path to working alongside Nvidia hardware tomorrow. The company also pre-announced Trainium4, signaling plans to interoperate with Nvidia’s high-speed interconnect so customers don’t have to re-architect their AI stack to mix and match silicon.

Introduced at the cloud giant’s flagship conference, the third-generation Trainium ships inside a purpose-built Trainium3 UltraServer and uses AWS’s proprietary networking to scale from a single node up to very large clusters. The pitch is simple: more speed, more memory, and lower energy use and cost per model run.

- Trainium3 at a glance: performance, memory, and scale

- Where scale meets efficiency in AI training and inference

- An Nvidia-friendly roadmap for interoperable clusters

- Software and developer experience for AWS Neuron SDK

- Competitive context amid GPU and accelerator rivalries

- What to watch next for Trainium and Nvidia coexistence

Trainium3 at a glance: performance, memory, and scale

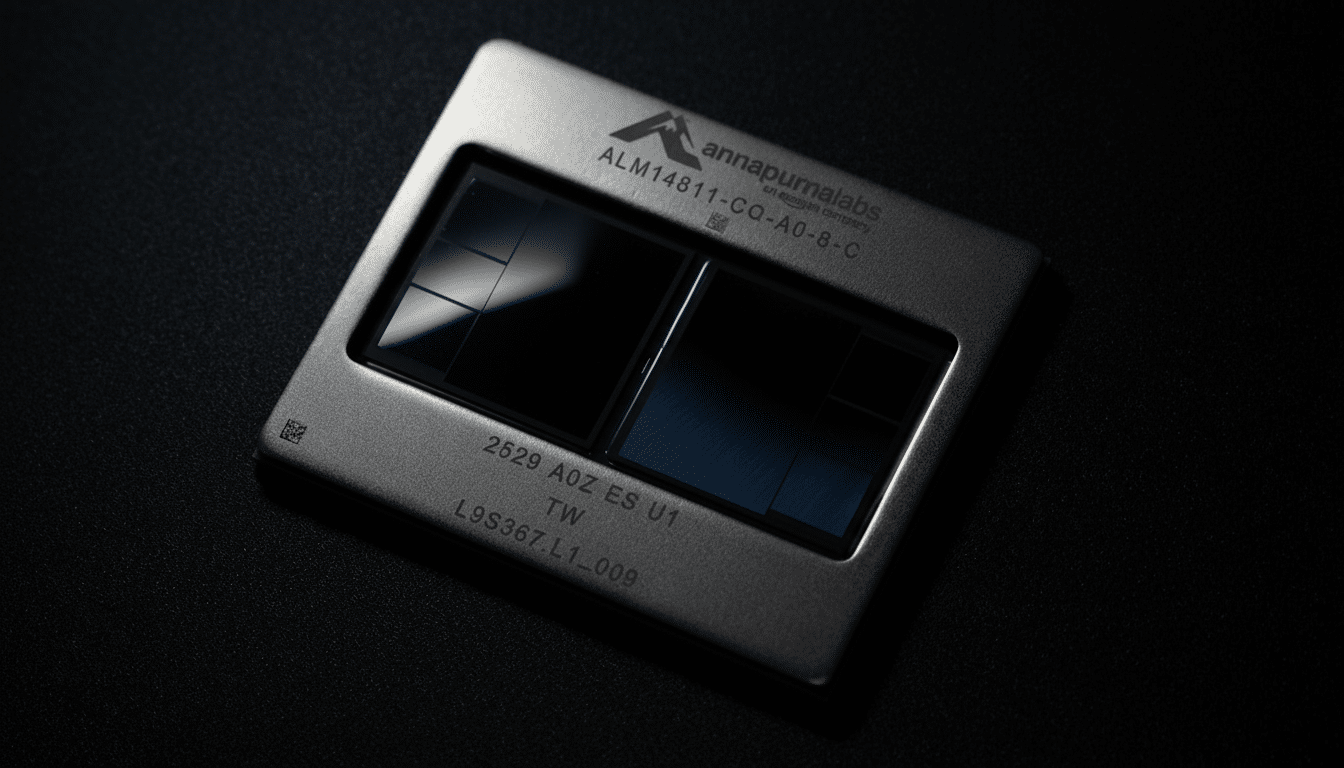

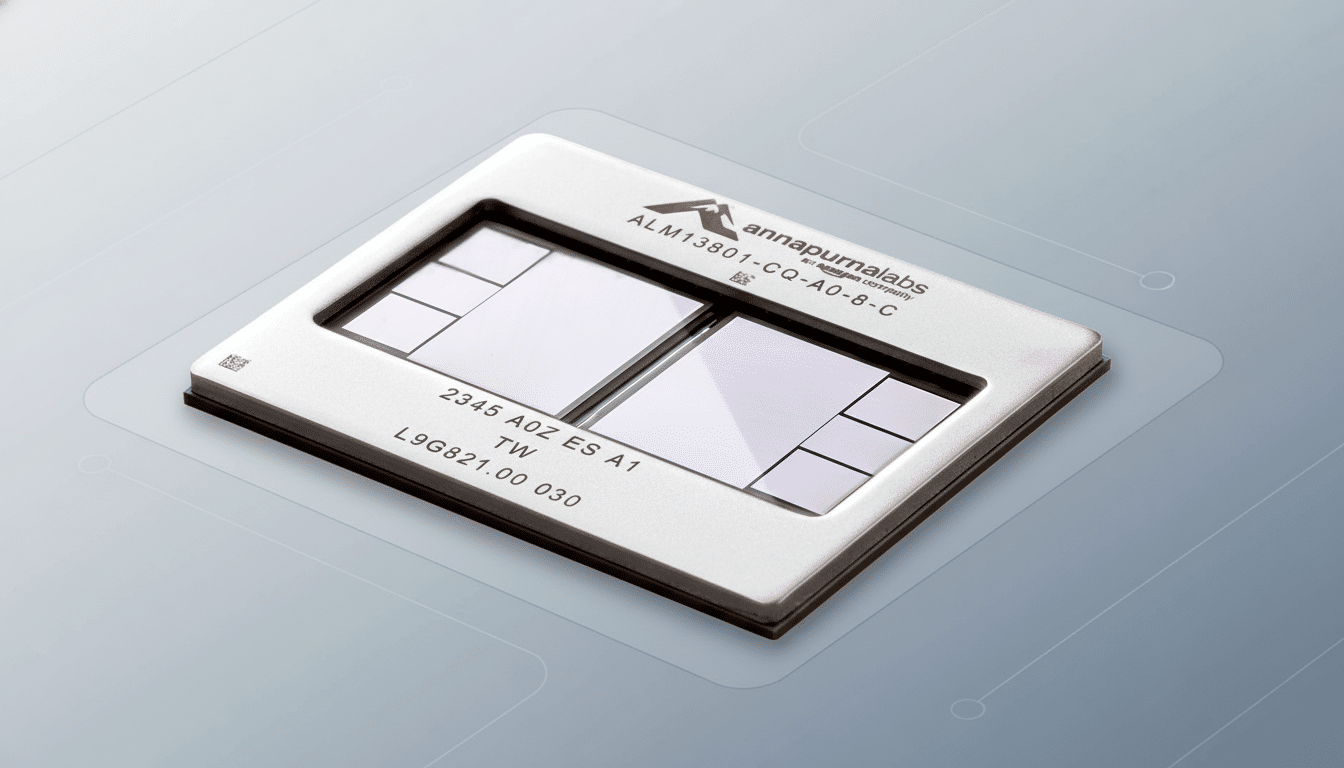

Trainium3 is built on a 3 nm process and targets both training and high-throughput inference. AWS says the new system offers more than 4x the performance of the previous generation and twice as much memory, which helps with LLMs that use large context windows and with growing multimodal models.

Each UltraServer houses 144 Trainium3 chips. Thousands of UltraServers can be linked into clusters of up to a million chips, a 10x scale factor over the previous generation and in line with where the industry is headed: giant training runs and real-time inference fleets.
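To put those scale figures in perspective, here is a rough back-of-the-envelope sketch. It uses only the two numbers quoted above (144 chips per UltraServer and a ceiling of about one million chips); the function name and the implied previous-generation ceiling are illustrative assumptions, not AWS figures.

```python
import math

# Figures quoted in the article.
CHIPS_PER_ULTRASERVER = 144
MAX_CHIPS_PER_CLUSTER = 1_000_000  # "up to a million" chips


def ultraservers_needed(total_chips: int,
                        chips_per_server: int = CHIPS_PER_ULTRASERVER) -> int:
    """Smallest number of servers that can hold `total_chips` (hypothetical helper)."""
    if total_chips < 0 or chips_per_server <= 0:
        raise ValueError("chip counts must be non-negative and servers non-empty")
    return math.ceil(total_chips / chips_per_server)


servers = ultraservers_needed(MAX_CHIPS_PER_CLUSTER)
print(servers)  # 6945

# Assumption: reading the "10x scale factor" as a 10x higher chip ceiling,
# the previous generation would top out around this many chips.
implied_previous_ceiling = MAX_CHIPS_PER_CLUSTER // 10
print(implied_previous_ceiling)  # 100000
```

In other words, a full-size cluster would span roughly seven thousand UltraServers, which squares with the "thousands" in AWS's description.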

Beyond raw throughput, AWS is also pushing on efficiency. The company contends that Trainium3-based systems are 40 percent more energy efficient than the previous generation, no small thing as AI workloads strain power and cooling budgets worldwide.
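It is worth being careful about what a 40 percent efficiency gain means for a power bill. The sketch below assumes "40 percent more energy efficient" means 40 percent more work per unit of energy; AWS has not spelled out the exact metric here, so that reading and the function name are assumptions.

```python
def relative_energy(efficiency_gain: float) -> float:
    """Energy needed for the same work, relative to the old generation.

    `efficiency_gain` is the fractional increase in work per joule
    (0.40 for a 40 percent gain). Assumes workload and utilization are unchanged.
    """
    if efficiency_gain <= -1.0:
        raise ValueError("efficiency gain must be greater than -100 percent")
    return 1.0 / (1.0 + efficiency_gain)


ratio = relative_energy(0.40)
print(f"{ratio:.3f}")                             # 0.714
print(f"{(1.0 - ratio) * 100:.1f}% less energy")  # 28.6% less energy
```

Under that reading, the same training run would draw about 29 percent less energy, not 40 percent less, which is a distinction that matters when the claim is translated into data center budgets.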

Where scale meets efficiency in AI training and inference

Performance per watt is now a board-level discussion. Research groups, including the International Energy Agency, have sounded alarms about skyrocketing data center electricity demand, and hyperscalers are racing to bend that curve. AWS’s claimed 40 percent energy efficiency improvement speaks directly to that pressure, with the potential to reduce both costs and carbon intensity for AI-heavy customers.

It’s the economics, stupid — as much as the teraflops.

AWS is optimizing Trainium3 for better $/token in training and inference, particularly for customers who are fine-tuning large models or running retrieval-augmented generation at scale. Early users named by AWS include Anthropic, Japanese LLM maker Karakuri, SplashMusic and Decart, all of which AWS says have cut inference costs on the new hardware.

An Nvidia-friendly roadmap for interoperable clusters

The most strategically significant news may be yet to come. AWS previewed Trainium4 and said it will be compatible with Nvidia’s NVLink Fusion interconnect, which permits Trainium-based systems to work alongside Nvidia GPUs within a high-bandwidth fabric. That design is a recognition of reality today: many state-of-the-art AI applications are optimized for Nvidia-first environments.

“CUDA has become the de facto platform for developing AI and high-performance computing applications, enabling developers to create solutions on NVIDIA GPUs,” AWS’s chief evangelist Jeff Barr wrote in a blog post. “The interoperability provided with the new Amazon EC2 P4d instances enables developers to more easily migrate or extend their on-premises HPC workloads to the AWS Cloud.” In practice, NVLink Fusion compatibility might allow customers to keep their CUDA-focused pipelines while offloading pieces of training or inference onto Trainium for cost or capacity reasons, all within the same cluster topology.

AWS gave no launch window for Trainium4, but the direction is clear: keep advancing its own silicon while acknowledging that Nvidia GPUs remain vital to plenty of teams.

Software and developer experience for AWS Neuron SDK

Hardware alone won’t win the majority of AI workloads; the maturity and capability of the software stack will.

AWS has invested in its Neuron SDK to run frameworks such as PyTorch and JAX on Trainium, alongside tooling for model parallelism and graph optimizations. Expect more work here, since smoother migration paths from CUDA-centric codebases are what make heterogeneous clusters a practical rather than theoretical reality.

A targeted approach is the likely pattern: fine-tune workloads on Trainium3, serve certain model families from Trainium for cost efficiency, and keep running bleeding-edge training on Nvidia when CUDA-specific kernels or ecosystem lock-in make it the faster lane.
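That split can be pictured as a simple placement rule. The following is a minimal sketch of the mixed strategy described above, not anything AWS or the Neuron SDK provides; the `Workload` type, the workload kinds and the `choose_accelerator` function are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a job waiting to be scheduled."""
    name: str
    kind: str  # "fine_tune", "inference", or "frontier_training"
    needs_cuda_kernels: bool = False


def choose_accelerator(workload: Workload) -> str:
    """Return "nvidia" or "trainium" following the split described in the article."""
    # CUDA-specific kernels or bleeding-edge training stay on Nvidia GPUs.
    if workload.needs_cuda_kernels or workload.kind == "frontier_training":
        return "nvidia"
    # Fine-tuning and cost-sensitive serving are the candidates for Trainium.
    if workload.kind in ("fine_tune", "inference"):
        return "trainium"
    # Assumption: unknown kinds default to the incumbent platform.
    return "nvidia"


jobs = [
    Workload("support-bot-tuning", "fine_tune"),
    Workload("chat-serving", "inference"),
    Workload("custom-attention-serving", "inference", needs_cuda_kernels=True),
    Workload("next-gen-pretraining", "frontier_training"),
]
for job in jobs:
    print(f"{job.name}: {choose_accelerator(job)}")
```

A real scheduler would also weigh capacity, price and model support, but even this toy rule shows why a shared fabric matters: the decision can be made per job rather than per cluster.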

Competitive context amid GPU and accelerator rivalries

The AI compute market is filled with differentiated bets. Google continues to advance its TPU line for first-party use and some cloud customers; Microsoft has deployed its Maia accelerators; AMD’s Instinct MI300 series is on the rise; and Nvidia maintains a lead with its GPU roadmap and software moat. AWS’s move marries scale economics with flexibility, aiming to provide capacity when GPUs are scarce and to lower the cost floor when they’re not.

If AWS can demonstrate its 4x performance and 40 percent efficiency gains in public benchmarks and customer case studies, Trainium3 would become a no-brainer for large-scale inference workloads and for many training jobs, leaving only the most specialized or raw performance-sensitive workloads tied to Nvidia alone.

What to watch next for Trainium and Nvidia coexistence

Milestones to watch include third-party benchmarks, Neuron SDK updates that streamline porting from CUDA, and reference architectures that show Nvidia and Trainium working in one fabric. Endorsements from foundation model builders and high-throughput inference platforms will be more revealing than peak specs.

For now, Trainium3 shows AWS increasing its bets on homegrown silicon while opening the door ever wider to Nvidia-centric AI shops. If Trainium4’s interoperability arrives on time, the next stage of cloud AI will be less about picking one supplier and more about assembling the right blend for performance, availability and price.