A wide-ranging outage of Amazon Web Services’ domain name system set off cascading failures across the internet on Tuesday, disrupting banks, consumer apps and even, momentarily, some government websites.

The company said the incident had been fully mitigated, but users reported hours of on-and-off errors as traffic slowly came back.

- What Broke and Why DNS Is So Critically Important

- A Concentration Risk Hiding In Plain Sight

- Signals From the Edge and the Core During Outage

- The Big Picture: How This Impacts The World Of Apps And Devices

- How a DNS Failure Spreads Across Networks and Users

- Lessons for Engineering Teams After a Major DNS Outage

- A Pattern of Internet-Scale Fragility Is Emerging

Early impact reports named familiar household brands. Coinbase account access froze, Fortnite matchmaking failed, Signal messages were delayed and Zoom calls dropped. Amazon’s own services, including connected home gadgets, sputtered as well, another example of how a single DNS error at hyperscale ripples into everyday life.

What Broke and Why DNS Is So Critically Important

DNS is like the internet’s phone book, translating human-friendly site names into their IP addresses. If the authoritative layer, where domains are published, is down, users may get errors even though the underlying servers are healthy. Put another way: your home is still standing, but the map has forgotten how to reach it.

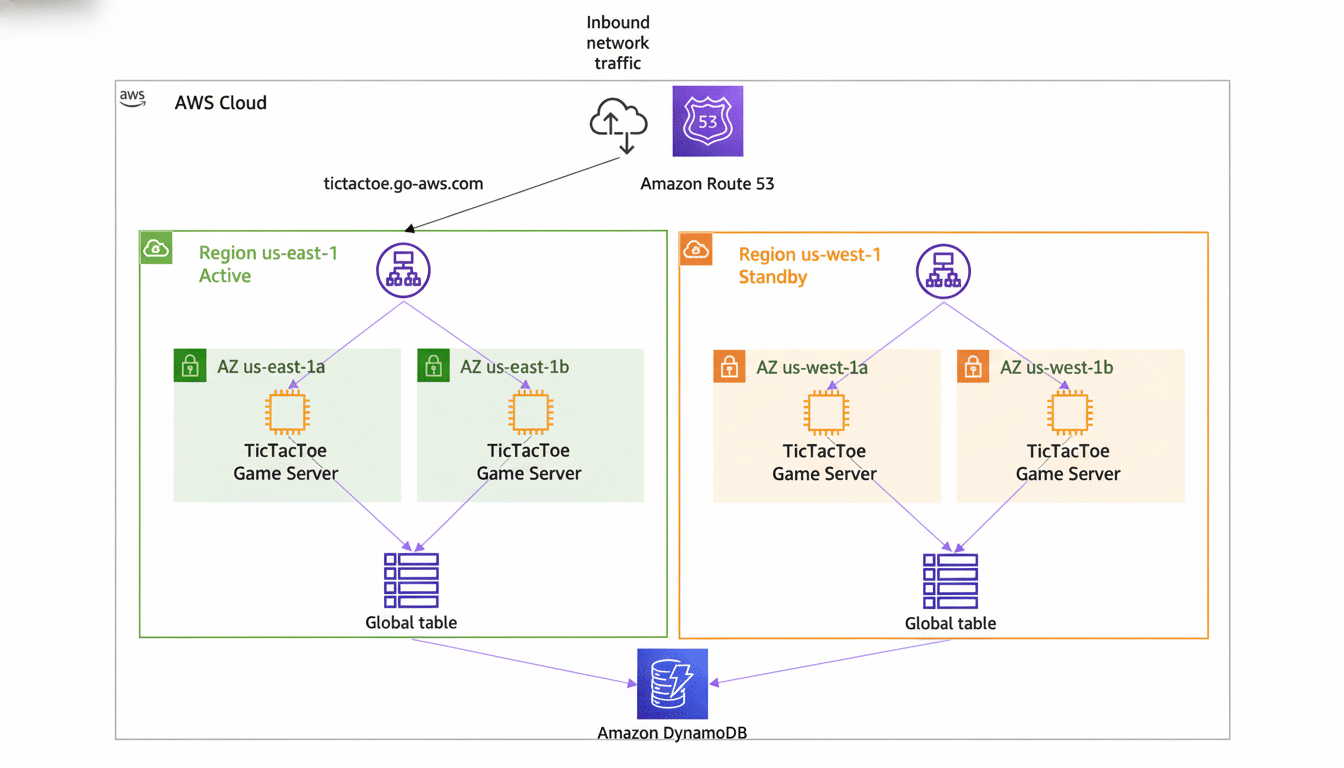

Amazon’s Route 53 and associated DNS features power everything from public internet sites to microservice discovery inside private networks. Recovery from a DNS failure tends to be uneven and varies by geography: because DNS responses are cached on recursive resolvers worldwide, some users snap back quickly while others must wait for the time-to-live (TTL) on cached records to expire.
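
The uneven recovery follows from how TTL-based caching works. A toy model in Python can make this concrete; it assumes a simplified in-memory cache, and the `ResolverCache` class, its methods, and the example name and address are hypothetical illustrations rather than part of any real resolver:

```python
from dataclasses import dataclass


@dataclass
class CachedAnswer:
    address: str
    expires_at: float  # absolute time (seconds) after which the entry is stale


class ResolverCache:
    """Toy model of a recursive resolver's cache (illustrative only)."""

    def __init__(self):
        self._entries = {}

    def store(self, name, address, ttl_seconds, now):
        # Remember the answer until now + TTL.
        self._entries[name] = CachedAnswer(address, now + ttl_seconds)

    def lookup(self, name, now):
        """Return the cached address, or None if missing or expired."""
        entry = self._entries.get(name)
        if entry is None or now >= entry.expires_at:
            return None
        return entry.address


cache = ResolverCache()
cache.store("api.example.com", "192.0.2.10", ttl_seconds=300, now=0)
print(cache.lookup("api.example.com", now=299))  # still served from cache
print(cache.lookup("api.example.com", now=300))  # expired: resolver must re-query
```

Whatever a resolver cached, good or bad, it keeps serving until the entry expires. Two resolvers that cached the same record a few minutes apart will therefore recover a few minutes apart, which is one reason an outage can look patchy from the outside.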

A Concentration Risk Hiding In Plain Sight

By revenue, AWS remains the biggest cloud provider in the world, holding about a third of the global infrastructure market, according to an estimate from Synergy Research Group. At that scale, DNS becomes a single point of systemic risk. Even businesses not hosted on AWS often depend on Amazon APIs for payments, authentication, analytics, or content delivery, so a DNS wobble can leave downstream services stranded.

Even after years of engineering an architecture for multi-region and multi-AZ topologies, teams can give up much of that redundancy by leaving traffic routing to DNS. If health checks, routing policies, or authoritative name servers misbehave in the control plane, the data plane may become unreachable, even at the most redundant endpoints.

Signals From the Edge and the Core During Outage

Network telemetry companies such as ThousandEyes and Kentik are usually among the first to observe these kinds of issues, reporting spikes in DNS failures or rising timeout ratios when such events happen, while Cloudflare Radar frequently shows traffic anomalies as resolvers adjust their retry behavior. Consumer-facing dashboards like Downdetector amplify the signal, showing real-time complaint spikes across popular apps.

Amazon’s Service Health Dashboard noted errors “related to DNS” and later said mitigation was underway. Problems lingered for some users because of caching by recursive resolvers and stale records in ISP and corporate networks, neither of which is unusual when authoritative responses differ over time.

The Big Picture: How This Impacts The World Of Apps And Devices

Beyond marquee consumer apps, the outage exposed fragile links in enterprise workflows. Single sign-on (SSO) flows failed, build pipelines couldn’t pull dependencies and webhooks never fired. In e-commerce, checkouts timed out when payment gateways failed to resolve API endpoints. IoT fleets, from cameras to smart locks, missed heartbeat connections and flooded on-call operations teams with noisy alerts.

For banks, even a small DNS outage can block account lookups and transaction confirmations. Healthcare portals and public-sector websites that rely on cloud-hosted identity or content services experienced varying levels of availability, underscoring how much access to crucial information depends on DNS.

How a DNS Failure Spreads Across Networks and Users

Recursive resolvers will ramp up retries when authoritative name servers are slow or returning errors. Clients back off, connection pools fill up, and fail-fast mechanisms may not always be tuned for DNS edge cases. The consequence is an amplification effect: more questions chasing fewer good answers.
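
One widely used way to dampen this kind of retry amplification on the client side is capped exponential backoff with jitter. The sketch below is a minimal illustration under stated assumptions: the function name `backoff_delays` and the default `base` and `cap` values are hypothetical, and nothing here is known to reflect how AWS or the affected services actually retry.

```python
import random


def backoff_delays(attempts, base=0.5, cap=30.0, rng=None):
    """Return one randomized wait (in seconds) per retry attempt.

    Capped exponential backoff with "full jitter": the wait for attempt n
    is drawn uniformly from [0, min(cap, base * 2**n)], so retries from
    many clients spread out instead of arriving in synchronized waves.
    """
    rng = rng or random.Random()
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap, base * (2 ** attempt))
        delays.append(rng.uniform(0.0, ceiling))
    return delays


if __name__ == "__main__":
    # Seeded only so repeated runs of this demo print the same schedule.
    for attempt, delay in enumerate(backoff_delays(6, rng=random.Random(42))):
        print(f"retry {attempt}: wait {delay:.2f}s")
```

The randomization matters as much as the exponential growth: without it, clients that failed at the same moment would all retry at the same moment too.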

Even after the underlying cause is addressed, caches must repopulate. Services with long TTLs take the longest to recover. Mobile networks and enterprise resolvers frequently impose their own caching rules, extending the “tail” of the event long after a control-plane fix.

Lessons for Engineering Teams After a Major DNS Outage

Adopt dual-provider authoritative DNS. Operating primary and secondary zones across separate vendors reduces the blast radius when one control plane fails. Confirm that health checks and failover policies work end to end across providers and regions.

Tune TTLs to balance performance and agility. Shorter TTLs can speed recovery at the cost of higher query rates; longer TTLs ride out transient hiccups but slow incident response. Critical endpoints (login, payments, APIs) often deserve conservative TTLs with careful capacity planning.
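
The trade-off can be put into rough numbers. The sketch below uses a deliberately simple model, assumed here for illustration: every resolver that serves the name is asked for it continuously, so each one refetches the record about once per TTL, and a changed record can stay stale in a cache for at most one TTL. The function name `ttl_tradeoff` and the resolver count are hypothetical.

```python
def ttl_tradeoff(ttl_seconds, busy_resolvers):
    """Estimate authoritative load and worst-case staleness for one record.

    Simplified model (an assumption, not a measurement): each of
    `busy_resolvers` refetches the record once per TTL, and a resolver
    that cached the old answer just before a change keeps it for one TTL.
    """
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be positive")
    return {
        "authoritative_qps": busy_resolvers / ttl_seconds,
        "worst_case_stale_seconds": ttl_seconds,
    }


if __name__ == "__main__":
    for ttl in (30, 300, 3600):
        estimate = ttl_tradeoff(ttl, busy_resolvers=6000)
        print(f"TTL {ttl:>4}s: ~{estimate['authoritative_qps']:.1f} queries/s, "
              f"stale for up to {estimate['worst_case_stale_seconds']}s")
```

Real resolvers complicate the picture by clamping very short or very long TTLs, so figures like these are best treated as a starting point for capacity planning rather than a prediction.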

Design out-of-band fallbacks. Run a minimal status page and runbook on different DNS, CDN, and cloud providers. Set up multi-CDN paths in advance and perform regular origin failover testing. Hard-coding IPs is not a fix: cloud IPs are fluid, and pinning them invites fresh outages.

Monitor for DNS-specific signals. Track SERVFAIL and NXDOMAIN rates, as well as resolver latencies. Bake DNS chaos drills into reliability programs so teams can rehearse degraded-resolution scenarios just as they do server failures.
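
As a rough sketch of what tracking those response codes might look like, the snippet below computes per-code rates from a batch of DNS response codes and flags any that cross a threshold. It assumes the codes have already been extracted from resolver logs as strings; the function names, the sample data, and the 5% thresholds are illustrative assumptions, not recommended values.

```python
from collections import Counter


def rcode_rates(rcodes):
    """Return the share of responses per DNS response code (0.0 to 1.0)."""
    counts = Counter(rcodes)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {code: n / total for code, n in counts.items()}


def breached(rates, thresholds):
    """List the response codes whose rate exceeds its configured threshold."""
    return sorted(
        code for code, limit in thresholds.items()
        if rates.get(code, 0.0) > limit
    )


if __name__ == "__main__":
    # Hypothetical one-minute sample of response codes from a resolver log.
    sample = ["NOERROR"] * 90 + ["SERVFAIL"] * 8 + ["NXDOMAIN"] * 2
    rates = rcode_rates(sample)
    print(rates)
    print(breached(rates, {"SERVFAIL": 0.05, "NXDOMAIN": 0.05}))
```

In practice the thresholds would be set against a service's own baseline, since a normal NXDOMAIN rate varies widely between workloads.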

A Pattern of Internet-Scale Fragility Is Emerging

The incident echoes earlier internet-scale failures rooted in interconnected dependencies. A large vendor recently brought down Windows systems with a faulty endpoint security update, causing airports and businesses to grind to a halt. A separate DNS outage at a major edge provider previously took down high-traffic sites. Different causes, same lesson: closely intertwined platforms can fail in remarkably similar ways.

The larger lesson isn’t that you “can’t trust the cloud”; it’s that resilient systems need diversity in the control plane as well as the data plane. In an ecosystem where a few platforms carry outsized weight, Tuesday’s DNS outage is another signal to spread risk before the next domino falls.