An elementary security mistake at AI toy maker Bondu exposed more than 50,000 chat transcripts from children, according to security researchers who say a simple Gmail login unlocked a portal filled with kids’ private conversations. The discovery, first reported by Wired, underscores how fast-growing “smart” toys can mishandle sensitive data even as they promise safer, parent-friendly features.

How the exposure happened via Bondu’s parent portal

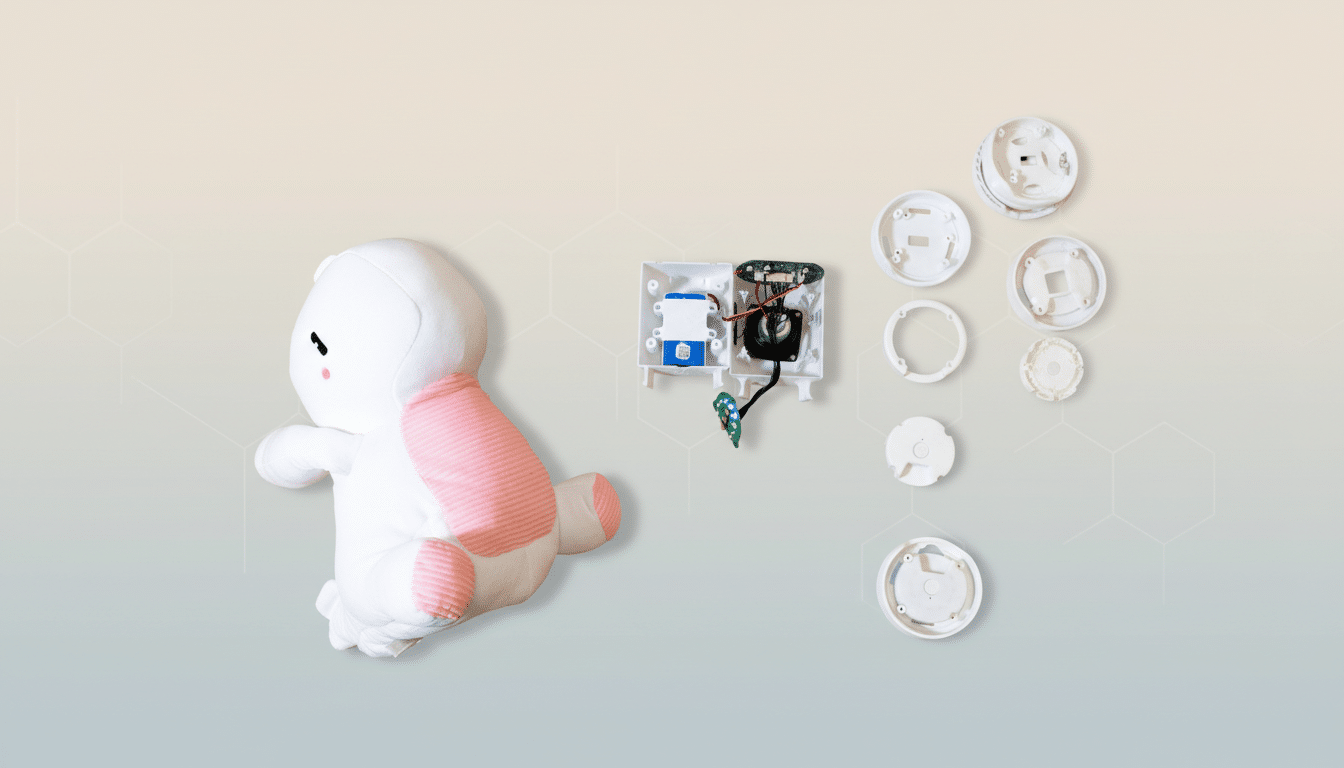

Security researchers Joseph Thacker and Joel Margolis examined a Bondu plush toy after a neighbor asked whether it was safe. Their test didn’t require hacking tools or insider access, just a Gmail account. The company’s web portal, intended for parents to review conversations and for staff to monitor performance, accepted a login from any Gmail account and surfaced transcripts from across the platform instead of restricting each user to a single household’s data.

Once inside, the researchers said they could scroll through thousands of exchanges between children and the toy’s AI, a trove that should have been siloed by family at minimum and protected behind robust identity verification. This kind of misconfiguration is exactly what security frameworks warn against: overbroad access paired with weak authentication.
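To make "siloed by family" concrete, here is a minimal sketch of household-scoped access in Python. It is not Bondu's code; the `Transcript` and `Session` types, the `household_id` field, and the `visible_transcripts` function are all hypothetical names chosen for illustration. The idea is that a successful login proves identity only, and the server still has to filter every query by the household the account is linked to.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Transcript:
    transcript_id: str
    household_id: str  # hypothetical field: the family that owns this chat
    text: str


@dataclass(frozen=True)
class Session:
    user_email: str
    # None models an account that logged in successfully but was never
    # linked to a household (for example, an arbitrary Gmail account).
    household_id: Optional[str]


def visible_transcripts(session: Session, transcripts: List[Transcript]) -> List[Transcript]:
    """Return only the transcripts owned by the caller's household."""
    if session.household_id is None:
        # Authenticated but not authorized: deny by default.
        return []
    return [t for t in transcripts if t.household_id == session.household_id]
```

Under this sketch, an account with no linked household gets an empty list rather than the full platform's data, which is the deny-by-default behavior the researchers say was missing.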

What data was at risk in children’s leaked chat logs

The transcripts reportedly included children’s names, birth dates, and references to parents, siblings, schools, and daily routines—context-rich details that can be exploited for social engineering. While Bondu said it stored only text transcripts and automatically deleted audio clips after short intervals, text on its own can reveal patterns, locations, and relationships that are highly sensitive when aggregated.

The researchers also raised concerns that the toys may rely on third-party AI services from providers like Google or OpenAI, potentially extending the data’s footprint beyond Bondu’s systems. Even if names are removed, conversational snippets can retain identifying context—an issue privacy advocates have highlighted repeatedly in the age of large language models.
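A small Python sketch shows why stripping names is not enough. The sample sentence, the names, and the `redact_names` helper are invented for illustration and do not come from the leaked transcripts or from any vendor's pipeline.

```python
import re
from typing import List


def redact_names(text: str, names: List[str]) -> str:
    """Replace each listed name with a placeholder, matching whole words only."""
    if not names:
        return text
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(n) for n in names) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub("[NAME]", text)


# Invented example text, not real data.
sample = "Mia said her brother Leo walks her to Oakwood Elementary every morning at 8."
redacted = redact_names(sample, ["Mia", "Leo"])
print(redacted)
```

The names are gone from the output, but the school, the sibling relationship, and the morning routine all survive, which is the kind of residual context privacy advocates warn about.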

Company response and questions after the reported leak

After being alerted, Bondu took the portal offline within minutes and relaunched it the next day with stronger authentication, the company told Wired. The CEO said the issue was resolved within hours and that an internal review found no evidence of access beyond the two researchers.

Quick containment is good practice, but important questions remain. Has Bondu conducted or commissioned an independent security assessment? Are transcripts encrypted at rest and in transit? What are the data retention limits, and do parents have a clear path to request deletion? Answers to these questions matter more than any single patch because they reflect the company’s overall security posture and privacy governance.

Why AI toys pose unique privacy risks for families

AI toys are built to elicit candid conversation, which means they can capture details traditional toys never touched. When those interactions migrate to the cloud for processing, the privacy stakes rise. The OWASP IoT Top 10 lists insecure ecosystem interfaces, a category that includes web and cloud portals lacking proper authentication or authorization, and insufficient privacy protection as recurring problems; this incident aligns with both risks.

There is precedent for harm. The 2015 breach at VTech exposed data tied to 6.4 million children, prompting an FTC settlement over children’s privacy violations. Two years later, CloudPets drew scrutiny after researchers reported that an exposed database and poorly protected storage left over 2 million voice recordings accessible. These cases show how quickly “cute” can become “critical” when security is an afterthought.

Regulatory scrutiny is rising for AI toys and privacy

In the U.S., the Children’s Online Privacy Protection Act restricts how companies collect and use data from kids under 13 and requires verifiable parental consent, clear disclosures, and reasonable security. The FTC has repeatedly signaled it will enforce COPPA against connected devices. In Europe, GDPR adds strict limits on processing children’s data and empowers regulators to levy substantial fines for lapses.

Policymakers are also focusing on AI-specific risks. In California, state Senator Steve Padilla introduced a bill seeking a four-year pause on sales of interactive AI toys amid mounting concerns about harmful outputs and data use. Independent watchdogs, including nonprofit reviewers and academic labs, have urged manufacturers to adopt privacy-by-design, minimize data collection, and avoid sharing children’s data with downstream AI vendors.

What parents can do now to protect children’s privacy

If you already own an AI toy, review the companion app and portal settings. Turn off cloud logging where possible, use a pseudonym for your child, and avoid sharing birthdays or addresses in the profile. Ask the manufacturer how long it keeps transcripts, whether third-party AI providers process conversations, and how to request data deletion. Treat the device like a live microphone: power it down when not in use and apply firmware updates promptly.

For manufacturers, the lesson is straightforward: default to least-privilege access, require strong identity verification for parent portals, encrypt transcripts end to end, and be transparent about third-party processing. For parents, caution and questions are the best defenses until the industry’s security practices catch up with the intimacy of the data these toys collect.