As agents, copilots, and AI-native apps race to ship on momentum alone (and grudgingly retrofit the definition of product-market fit under founders’ feet), the pressure to prove real value is now coming from within the industry itself. The technology frontier advances weekly, buyer expectations are consolidating, and budgets are moving from experiments to line-of-business bets. For AI startups, the directive is clear: deliver sustained value in core workflows and prove that spend is durable, not just curiosity-driven.

Define PMF for AI’s Moving Target and Core Workflows

In AI, product-market fit is less about an impressive model demo and more about reliably delivering a measurable outcome for a specific job to be done. Models will keep improving and commoditizing; the workflow you own is the moat. Anchor on a high-frequency pain point, instrument the result, and make the product indispensable even if you swap out the underlying model. The Stanford AI Index 2024 reported roughly $67B in U.S. private AI investment in 2023, yet many organizations are still struggling to get deployments working. The winners aren’t the best model wrappers; they are the best workflow owners.

- Define PMF for AI’s Moving Target and Core Workflows

- Measure Usage That Sticks and Proves Real Adoption

- Follow the Money and Margins for Durable Spend

- Construct Moats Outside the Model with Data and Distribution

- From Wedge to Platform Through Adjacent Expansion

- Operational Readiness and Trust for Enterprise AI

- What PMF Looks Like in Action for AI Workflows

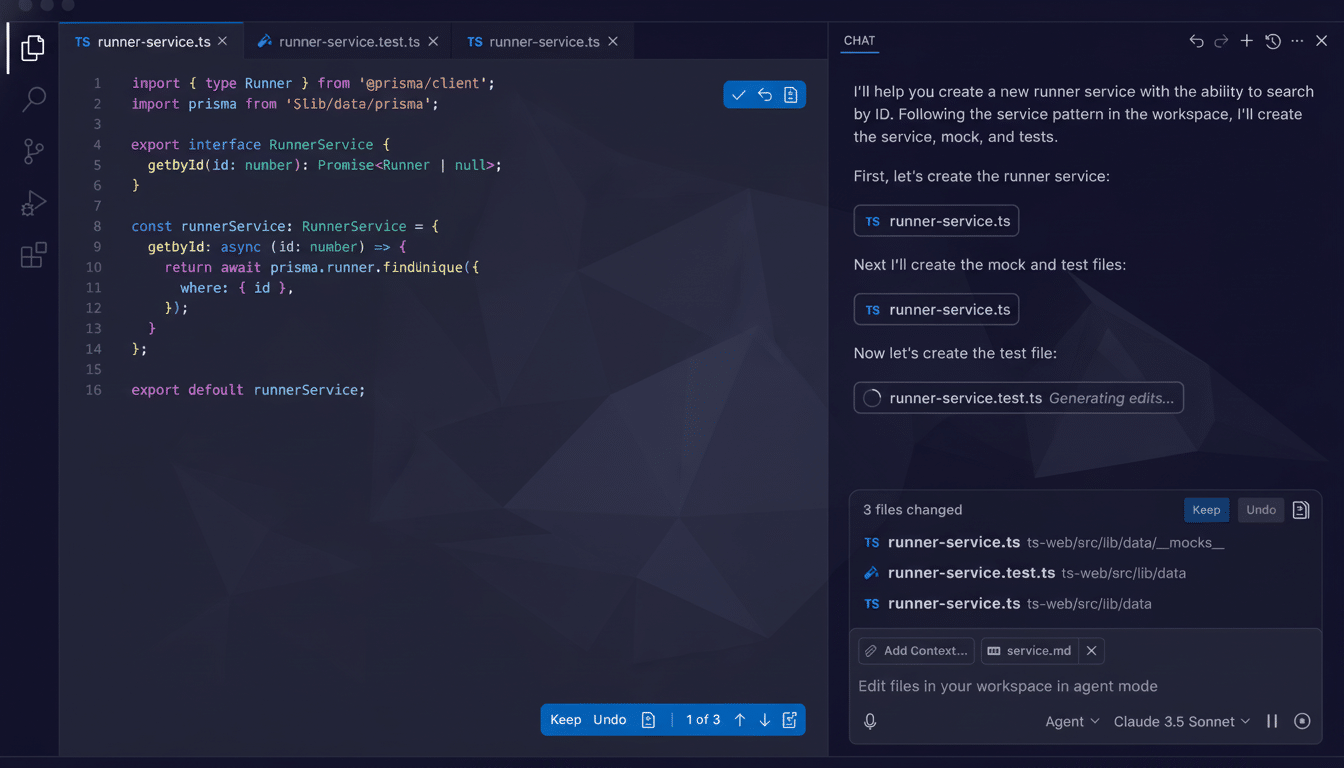

One helpful pattern is measuring outcomes at the task level. GitHub reported in 2023 that developers worked faster and were more satisfied when Copilot was integrated directly into the IDE rather than used as a separate tool. The takeaway: depth of integration and time-to-value trump raw model horsepower.

Measure Usage That Sticks and Proves Real Adoption

Classic engagement and retention metrics still apply, but adapt them to how AI products are actually used. Track DAU/MAU for productivity tools, weekly active seats for enterprise, and cohort retention curves that flatten rather than slide, especially around weeks 8–12. Use the Sean Ellis “very disappointed” survey: if ≥40% of your core users say they’d be very disappointed if the product disappeared, you’re likely at PMF. To put the numbers in context, pair them with qualitative interviews that reveal whether the product has become part of users’ default workflow.
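To make these checks concrete, here is a minimal Python sketch, assuming you already export survey answers, daily and monthly active counts, and weekly cohort retention shares. The function names and the 2-point “flattening” threshold are hypothetical choices for illustration, not a standard.

```python
from collections.abc import Sequence

# Hypothetical label for the Sean Ellis answer "very disappointed".
VERY_DISAPPOINTED = "very disappointed"


def sean_ellis_share(answers: Sequence[str]) -> float:
    """Fraction of survey respondents who answered 'very disappointed'."""
    if not answers:
        return 0.0
    hits = sum(1 for a in answers if a.strip().lower() == VERY_DISAPPOINTED)
    return hits / len(answers)


def stickiness(dau: int, mau: int) -> float:
    """DAU/MAU ratio; returns 0.0 when there are no monthly actives."""
    return dau / mau if mau else 0.0


def retention_flattens(curve: Sequence[float], start_week: int = 8,
                       end_week: int = 12, max_drop: float = 0.02) -> bool:
    """True if cohort retention loses at most `max_drop` (absolute share)
    between start_week and end_week. curve[i] is the share of the cohort
    active in week i. The 2-point default is an assumption, not a benchmark."""
    if len(curve) <= end_week:
        raise ValueError("curve must include end_week")
    return curve[start_week] - curve[end_week] <= max_drop


if __name__ == "__main__":
    answers = (["very disappointed"] * 45 + ["somewhat disappointed"] * 40
               + ["not disappointed"] * 15)
    print(sean_ellis_share(answers) >= 0.40)
    print(stickiness(dau=3_000, mau=10_000))
    flat = [1.0, 0.70, 0.60, 0.55, 0.52, 0.50, 0.49,
            0.48, 0.47, 0.47, 0.46, 0.46, 0.46]
    print(retention_flattens(flat))
```

Comparing two fixed weeks keeps the check easy to explain; a regression over the tail of the curve would be less sensitive to one noisy week.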

Instrument time-to-first-value: can a new user get the outcome you promised in under 10 minutes for self-serve, or within two weeks for an enterprise pilot? Track repeat value moments per session and the fraction of sessions that end in a completed job. If usage clusters around one feature, embrace it; it could be your wedge.
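A rough sketch of the time-to-first-value and session metrics above, assuming a hypothetical `Session` record built from your own analytics events. The 10-minute and two-week targets come from the text; judging them against the median new user is an assumption.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import median

# Targets from the text: 10 minutes self-serve, two weeks for enterprise pilots.
SELF_SERVE_TARGET_MIN = 10
ENTERPRISE_TARGET_MIN = 14 * 24 * 60


@dataclass
class Session:
    """Hypothetical per-session record derived from product analytics."""
    value_moments: int            # times the promised outcome was delivered
    completed_job: bool           # did the session end with a finished job?
    features_used: list[str] = field(default_factory=list)


def ttfv_within_target(ttfv_minutes: list[float], target_minutes: float) -> bool:
    """True if the median new user's time-to-first-value is under the target.
    Using the median (rather than, say, p75) is an assumption."""
    return bool(ttfv_minutes) and median(ttfv_minutes) < target_minutes


def session_metrics(sessions: list[Session]) -> dict:
    """Value moments per session, job completion rate, and the most-used feature."""
    n = len(sessions)
    if n == 0:
        return {"value_moments_per_session": 0.0, "completion_rate": 0.0,
                "top_feature": None, "top_feature_share": 0.0}
    # Count each feature at most once per session.
    usage = Counter(f for s in sessions for f in set(s.features_used))
    top_feature, top_count = usage.most_common(1)[0] if usage else (None, 0)
    return {
        "value_moments_per_session": sum(s.value_moments for s in sessions) / n,
        "completion_rate": sum(s.completed_job for s in sessions) / n,
        "top_feature": top_feature,          # candidate wedge if its share is high
        "top_feature_share": top_count / n,
    }
```

A `top_feature_share` close to 1.0 is the “usage clusters around one feature” case the text describes.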

Follow the Money and Margins for Durable Spend

Durability of spend is becoming the tell. Purchases that move from “innovation” budgets to CXO or line-of-business budgets signal that your product has become core to the business. Look for multiyear contracts, enterprise-wide rollouts, and seat expansion tied to workflow mandates rather than pilot programs. On revenue quality, SaaS benchmarks from firms like Bessemer indicate that best-in-class net dollar retention frequently exceeds 120%; not every early AI app will reach that, but expanding from a single team to a whole department often signals strong PMF.
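Net dollar retention (NDR) and gross revenue retention (GRR) can be computed from a cohort’s starting ARR plus its expansion, contraction, and churn over a period. The sketch below uses those standard definitions; the cohort figures in the usage example are made up.

```python
def retention_rates(start_arr: float, expansion: float,
                    contraction: float, churned: float) -> tuple[float, float]:
    """Return (NDR, GRR) for one customer cohort over a period.

    NDR = (start + expansion - contraction - churn) / start
    GRR = (start - contraction - churn) / start   (ignores expansion)
    All inputs are ARR amounts for the same cohort and should be non-negative.
    """
    if start_arr <= 0:
        raise ValueError("start_arr must be positive")
    ndr = (start_arr + expansion - contraction - churned) / start_arr
    grr = (start_arr - contraction - churned) / start_arr
    return ndr, grr


if __name__ == "__main__":
    # Hypothetical cohort: $1.0M starting ARR, $300K team-to-department expansion.
    ndr, grr = retention_rates(1_000_000, 300_000, 50_000, 50_000)
    print(f"NDR {ndr:.0%}, GRR {grr:.0%}")
```

Because GRR excludes expansion, it shows whether the base itself is sticky, while NDR shows whether expansion outruns losses.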

Mind unit economics early. Inference costs can come to dominate COGS, so track model and infrastructure spend against gross margin. Caching, prompt optimization, model distillation, retrieval augmentation, and right-sizing models to each task all help lift margins. Over the long term, target gross margins approaching 70% and CAC payback of roughly 12–18 months, depending on ACV. If usage grows while margins expand, your value is outpacing token costs.
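The margin and payback arithmetic can be sketched as follows, assuming inference is booked as COGS and payback is measured in months of gross profit per customer. All dollar figures in the usage example are hypothetical.

```python
def gross_margin(revenue: float, inference_cost: float, other_cogs: float = 0.0) -> float:
    """(revenue - COGS) / revenue, counting model inference as COGS."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - inference_cost - other_cogs) / revenue


def cac_payback_months(cac: float, monthly_revenue_per_customer: float,
                       margin: float) -> float:
    """Months of per-customer gross profit needed to recover acquisition cost."""
    monthly_gross_profit = monthly_revenue_per_customer * margin
    if monthly_gross_profit <= 0:
        return float("inf")
    return cac / monthly_gross_profit


def outpacing_token_costs(prev_usage: float, curr_usage: float,
                          prev_margin: float, curr_margin: float) -> bool:
    """The test from the text: usage grows while gross margin also expands."""
    return curr_usage > prev_usage and curr_margin > prev_margin


if __name__ == "__main__":
    # Hypothetical month: $100K revenue, $20K inference, $10K other COGS.
    m = gross_margin(100_000, inference_cost=20_000, other_cogs=10_000)
    print(f"gross margin {m:.0%}")
    # Hypothetical: $12K CAC, $1K monthly revenue per customer.
    print(f"CAC payback {cac_payback_months(12_000, 1_000, m):.1f} months")
```

Using gross-margin-adjusted revenue in the payback formula matters for AI products: high inference costs stretch payback even when top-line revenue looks healthy.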

Construct Moats Outside the Model with Data and Distribution

Model advantages fade; data and distribution endure. Favor proprietary or permissioned data loops that improve quality with every use, workflow depth that raises switching costs, and channel partners embedded where the job actually happens. Compliance can also be a moat: SOC 2, HIPAA, or financial data controls remove buyer friction and extend your lead.

Be model-agnostic. A routing layer that chooses among models based on cost, latency, and accuracy insulates you from the whims of any single provider. Build an evaluation harness for continuous monitoring; resources such as Stanford’s HELM and community frameworks inspired by OpenAI Evals can help you develop task-specific benchmarks. Measure not only correctness but also consistency and stability over time to catch silent regressions.
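Below is a minimal sketch of a model router plus two evaluation checks, assuming you already score each candidate model on your own task-specific eval set. The model names, stats, and tolerance are hypothetical, and this is not HELM’s or OpenAI Evals’ API, just plain Python standing in for the idea.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model stats, measured by your own eval harness."""
    name: str
    cost_per_1k_tokens: float
    p95_latency_ms: float
    accuracy: float  # task-specific eval score in [0, 1]


def route(models: list[ModelProfile], min_accuracy: float,
          max_latency_ms: float) -> Optional[ModelProfile]:
    """Cheapest model meeting the accuracy floor and latency ceiling, or None."""
    eligible = [m for m in models
                if m.accuracy >= min_accuracy and m.p95_latency_ms <= max_latency_ms]
    return min(eligible, key=lambda m: m.cost_per_1k_tokens, default=None)


def regressions(baseline: dict[str, float], current: dict[str, float],
                tolerance: float = 0.02) -> list[str]:
    """Eval cases whose score fell by more than `tolerance` versus the baseline."""
    return sorted(case for case, base in baseline.items()
                  if case in current and base - current[case] > tolerance)


def consistency(outputs: list[str]) -> float:
    """Share of repeated runs (same input) that agree with the most common output."""
    if not outputs:
        return 0.0
    return Counter(outputs).most_common(1)[0][1] / len(outputs)


if __name__ == "__main__":
    models = [ModelProfile("small", 0.1, 300, 0.82),
              ModelProfile("medium", 0.5, 600, 0.91),
              ModelProfile("large", 2.0, 1500, 0.95)]
    choice = route(models, min_accuracy=0.9, max_latency_ms=1000)
    print(choice.name if choice else "no eligible model")
```

Per-task thresholds let a cheap model handle easy jobs while harder ones escalate; rerunning `regressions` and `consistency` after every provider update is what surfaces silent drift.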

From Wedge to Platform Through Adjacent Expansion

Begin with a sharp wedge that delivers unambiguous ROI, then expand into adjacent workflows. Healthcare offers a clear example: ambient clinical documentation tools, such as those from Nuance, have reported cutting note-taking time by about 50%, which opens a path into order entry and coding assistance. The same playbook applies in finance, legal, and customer support: win one repetitive workflow, then generalize across the process map.

Design for multiplayer value. When individual contributors, managers, and executives all win (speed for ICs, quality and analytics for managers, risk and cost control for executives), expansion happens on its own rather than being forced.

Operational Readiness and Trust for Enterprise AI

Trust is a component of PMF in AI. Offer audit logs, data residency options, and human-in-the-loop controls wherever the stakes are high. Publish model- and system-level SLOs for latency and reliability. Run red-team reviews, track hallucination rates on relevant tasks, and share your mitigation playbook with customers. When support tickets shift from “this broke” to “how do we do more with this,” that’s the signal you want.
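One way to report against published SLOs is to compute them straight from request logs. This sketch assumes a hypothetical `RequestRecord` per model call and a hallucination flag set by reviewers or an automated checker; the default targets are placeholders, not recommended values.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """Hypothetical log record for one model call."""
    latency_ms: float
    succeeded: bool
    hallucination_flagged: bool  # set by reviewers or an automated checker (assumption)


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile, pct in 1..100."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def slo_report(records: list[RequestRecord], p95_target_ms: float = 2000.0,
               availability_target: float = 0.995,
               hallucination_ceiling: float = 0.02) -> dict:
    """Compare observed p95 latency, availability, and hallucination rate to targets.
    Default targets are placeholders."""
    n = len(records)
    if n == 0:
        raise ValueError("no records")
    p95 = percentile([r.latency_ms for r in records], 95)
    availability = sum(r.succeeded for r in records) / n
    hallucination_rate = sum(r.hallucination_flagged for r in records) / n
    return {
        "p95_latency_ms": p95,
        "p95_ok": p95 <= p95_target_ms,
        "availability": availability,
        "availability_ok": availability >= availability_target,
        "hallucination_rate": hallucination_rate,
        "hallucination_ok": hallucination_rate <= hallucination_ceiling,
    }
```

Sharing the same report with customers each period is a simple way to back the published SLOs with evidence.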

What PMF Looks Like in Action for AI Workflows

In practice, PMF for an AI workflow product tends to show up as:

- Retention cohorts that level off, alongside 20–30% seat growth within 3–6 months

- ≥40% of core users answering “very disappointed”

- GRR above 90% and NDR between 110% and 130%

- CAC payback tightening as organic and product-led expansion kicks in

- Gross margins improving as volume grows and you gain inference leverage
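These signals can be turned into a simple scorecard. This is a sketch under stated assumptions: the `PmfMetrics` fields are hypothetical, and the lower bound of each quoted range is treated as a floor, so seat growth or NDR above the range still counts as a pass.

```python
from dataclasses import dataclass


@dataclass
class PmfMetrics:
    """Hypothetical snapshot of the signals above (shares as fractions)."""
    retention_flattening: bool     # cohort curves level off
    seat_growth_3_6m: float        # e.g. 0.25 = 25% seat growth over 3-6 months
    very_disappointed_share: float
    grr: float
    ndr: float
    cac_payback_prev: float        # months, prior period
    cac_payback_now: float         # months, current period
    gross_margin_prev: float
    gross_margin_now: float


def pmf_signals(m: PmfMetrics) -> dict[str, bool]:
    """Check each signal; range lower bounds are used as floors (assumption)."""
    return {
        "retention_flattening": m.retention_flattening,
        "seat_growth": m.seat_growth_3_6m >= 0.20,
        "very_disappointed": m.very_disappointed_share >= 0.40,
        "grr": m.grr > 0.90,
        "ndr": m.ndr >= 1.10,
        "cac_payback_tightening": m.cac_payback_now < m.cac_payback_prev,
        "margin_improving": m.gross_margin_now > m.gross_margin_prev,
    }


if __name__ == "__main__":
    snapshot = PmfMetrics(True, 0.25, 0.45, 0.92, 1.18, 20.0, 15.0, 0.58, 0.64)
    signals = pmf_signals(snapshot)
    print(sum(signals.values()), "of", len(signals), "signals met")
```

A scorecard like this is a conversation starter, not a verdict; the qualitative shift from pilot to plan still has to show up in how buyers budget.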

Most important, buyers move your line item from pilot to plan, and the product becomes the way work gets done.

AI doesn’t eliminate product-market fit; it sets a higher standard.

Nail a repeatable result, harden the economics, and get a line item in the operating budget. That’s the signal that rises above the din of demos.