Artificial intelligence is transforming the way software gets delivered, but it’s not simply about replacing developers with robots. Google’s 2025 DORA research finds that AI improves results for disciplined, high-performing engineering teams and harms undisciplined ones. In that sense, AI functions as an organizational amplifier, bringing both strengths and weaknesses into sharper focus.

The latest DORA survey, carried out by Google Cloud’s DevOps Research and Assessment team, polled around 5,000 software professionals across industries and supplemented the data with more than 100 hours of interviews. It’s one of the most far-reaching looks yet at how AI is changing developer workflows, productivity, and dependability at scale.

AI as an organizational amplifier for engineering teams

Adoption among developers is now near-universal, the report found: approximately nine in 10 engineers use AI tools at work, a double-digit rise since 2017. Engagement averages about two hours a day, but usage varies widely. Many developers turn to AI “every so often,” while fewer depend on it consistently.

The outcomes are nuanced. Roughly 80% of respondents report increased productivity, but only 59% see improvements in code quality. About 70% trust AI output, which leaves a sizeable minority of doubters. DORA’s takeaway: teams with strong engineering systems turn AI assistance into actual performance gains, while teams lacking those systems often face rework, instability, and coordination overhead.

Real-world dynamics explain the split. On teams with solid code review, automated testing, and a sense of ownership, AI suggestions speed up routine tasks and free up energy for harder ones. In organizations without those guardrails, the same AI-suggested changes can slip through with minor flaws that require firefighting later. The tool is the same; the system it enters is not.

Speed versus stability is a false dilemma

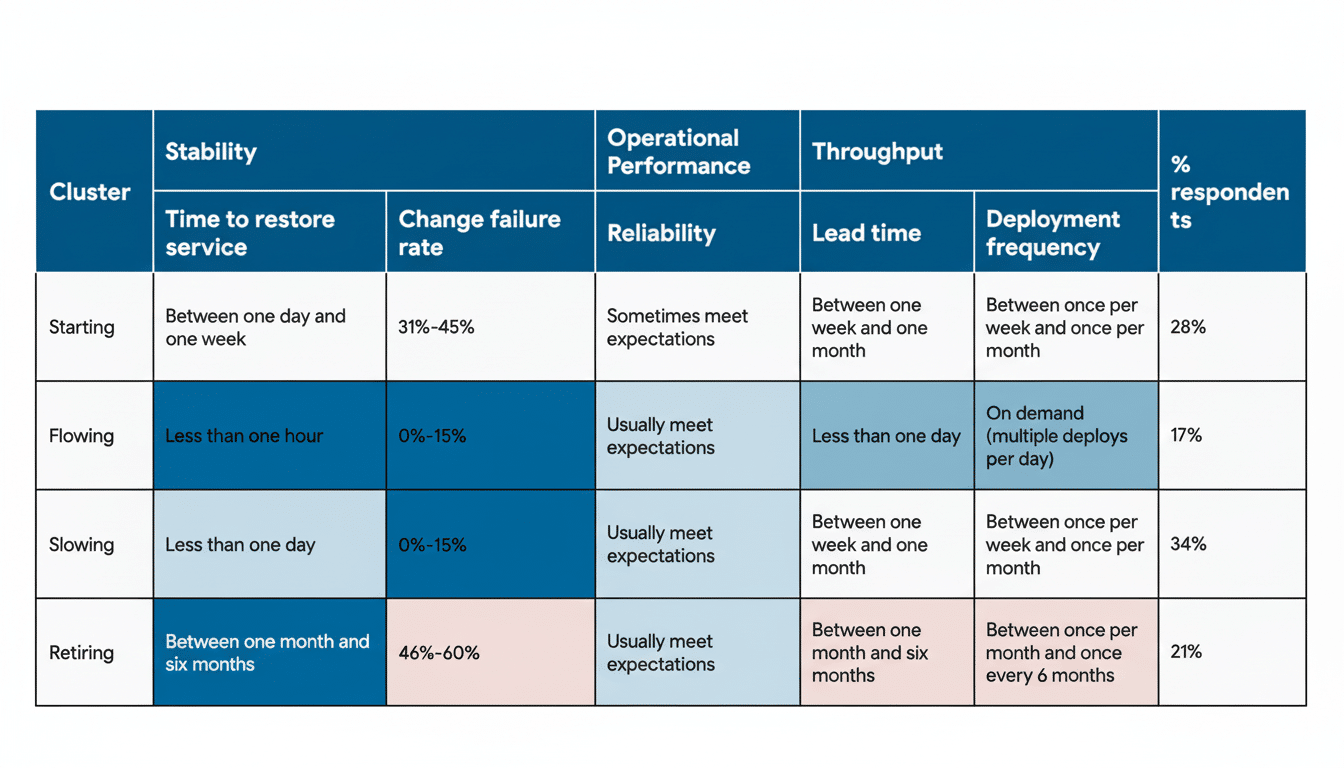

DORA’s analysis of team archetypes shows that high performers achieve both fast delivery and fast recovery. The report places groups such as “harmonious high-achievers” and “pragmatic performers” in the top tier, which belies the conventional wisdom that performance improvement is a zero-sum trade-off between reliability and velocity. With good practices, AI speeds up both.

This maps onto the four traditional DORA metrics: deployment frequency (DF), lead time from commit to deploy, change failure rate (CFR), and mean time to restore. High performers do better on all four, which suggests that AI lowers friction without adding risk when it is incorporated into mature workflows. Teams with poor processes tend to experience the reverse: faster commits, slower recoveries.
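To make the four metrics concrete, here is a minimal Python sketch of how a team might compute them from its own deployment records. The `Deployment` record, its field names, the choice of a median for lead time, and the sample data are all assumptions made for illustration; they are not taken from the DORA report, which defines the metrics but not a reference implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False                    # did the change cause a failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deployments, window_days):
    """Compute the four core delivery metrics over one observation window."""
    if not deployments or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")

    # Lead time: hours from commit to deploy, per deployment.
    lead_times = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600
        for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    # Time to restore: hours from the failed deploy to restoration.
    restore_times = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deployment_frequency_per_day": len(deployments) / window_days,
        "median_lead_time_hours": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_restore_hours": (
            sum(restore_times) / len(restore_times) if restore_times else None
        ),
    }


# Made-up sample: four deployments in one week, one of which failed.
start = datetime(2025, 1, 6, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=4)),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=2)),
    Deployment(
        start + timedelta(days=2),
        start + timedelta(days=2, hours=6),
        failed=True,
        restored_at=start + timedelta(days=2, hours=7),
    ),
    Deployment(start + timedelta(days=3), start + timedelta(days=3, hours=8)),
]
metrics = dora_metrics(sample, window_days=7)
print(metrics)
```

On this sample the change failure rate is 0.25 (one failure in four deployments) and the median lead time is 5 hours. Tracking all four numbers together is the point: a team that only watches lead time would miss the pattern of faster commits paired with slower recoveries.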

Seven AI engineering practices winners stand by

DORA presents successful adoption of AI as a systems problem, not a tool problem. The report highlights seven foundational practices that can steer AI’s impact in a positive direction, and it emphasizes organizational capabilities over feature lists. In this year’s data, two stood out: platform engineering and value stream management.

There is broad adoption of platform engineering, with approximately 90% of firms investing in internal platforms to consolidate tools, automation, and shared services. In places where platforms are strong, AI takes away toil and context switching; in places where they are weak, it creates yet more handoffs and local optimizations that don’t move the overall outcome.

Value stream management (VSM) is a force multiplier. By mapping how work flows from idea to production, leaders can point AI at the bottlenecks that matter, such as slow reviews, flaky tests, or painful releases, and turn local gains into organization-wide improvements. Without VSM, AI tends to speed up the wrong work and pile more load onto hidden constraints downstream.

Two further practices, Human-Adaptive Design and Pain & Effortful Data, are consistently associated with better AI outcomes. Beyond them, the practices that most reliably correlate with higher AI maturity are the classics: good CI/CD, automated testing at multiple levels, diligent code reviews, strong incident response, secure-by-design patterns for source code, and high-quality documentation.

How leaders should respond to AI-driven engineering change

- View AI adoption as an organizational transformation.

- Prioritize internal platforms, automation, and observability.

- Set clear guardrails around AI-generated changes — required reviews, security scanning, traceability, and rollback plans.

- Align incentives with system outcomes, not just individual throughput.

Use the core DORA metrics as a scoreboard to measure the impact of AI: Is lead time decreasing without an increase in change failures? Has deployment frequency increased while recovery time improved? Combine these with qualitative signals, such as developer happiness, cognitive load, and knowledge sharing, to make sure that improvements are sustainable.
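The scoreboard questions above can be sketched as a before-and-after comparison. The following Python example is a rough illustration under stated assumptions: the `Snapshot` fields, the check names, the verdict labels, and the numbers are all hypothetical, not figures or terminology from the DORA report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical per-quarter summary of the four core metrics."""
    deployment_frequency_per_day: float
    lead_time_hours: float
    change_failure_rate: float
    time_to_restore_hours: float


def scoreboard(before, after, cfr_tolerance=0.0):
    """Answer the scoreboard questions as booleans."""
    return {
        # Is lead time decreasing...
        "lead_time_improved": after.lead_time_hours < before.lead_time_hours,
        # ...without an increase in change failures?
        "change_failures_held": (
            after.change_failure_rate
            <= before.change_failure_rate + cfr_tolerance
        ),
        "deploys_more_frequent": (
            after.deployment_frequency_per_day
            > before.deployment_frequency_per_day
        ),
        "recovery_improved": (
            after.time_to_restore_hours < before.time_to_restore_hours
        ),
    }


def verdict(checks):
    """Collapse the checks into a coarse, illustrative label."""
    if all(checks.values()):
        return "speed and stability both improved"
    if checks["lead_time_improved"] and not checks["change_failures_held"]:
        return "faster but less stable"
    return "mixed"


# Made-up numbers: delivery got faster after AI adoption, stability got worse.
before_ai = Snapshot(1.0, 24.0, 0.10, 4.0)
after_ai = Snapshot(1.5, 16.0, 0.18, 6.0)
checks = scoreboard(before_ai, after_ai)
print(checks)
print(verdict(checks))
```

A "faster but less stable" result is the signal to look at review, testing, and rollback practices before scaling AI usage further. The qualitative signals still need to be gathered separately, since none of them show up in delivery data.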

The takeaway from DORA’s 2025 report is straightforward: AI is no substitute for engineering discipline. It’s a force that makes good teams stronger and bad teams weaker. Build the system first; AI can amplify it later.