AI has gone from novelty to default in software teams. According to GitHub’s Octoverse research, more than nine out of 10 developers now use AI-powered tools; in controlled studies, developers working with AI assistants completed coding tasks up to 55% faster. The Stack Overflow Developer Survey reflects the same trend, showing that the majority of developers already use or plan to use AI in their workflow.

The message is clearest in regulated sectors, where voices from the likes of Allianz Global Investors, Lloyds Banking Group, Hargreaves Lansdown, SEB and Global Payments describe AI as a “force multiplier,” provided it is paired with strong governance and rolled out beyond coders. Here are five actionable steps for capitalizing on this new reality.

Build Guardrails into Platform Engineering

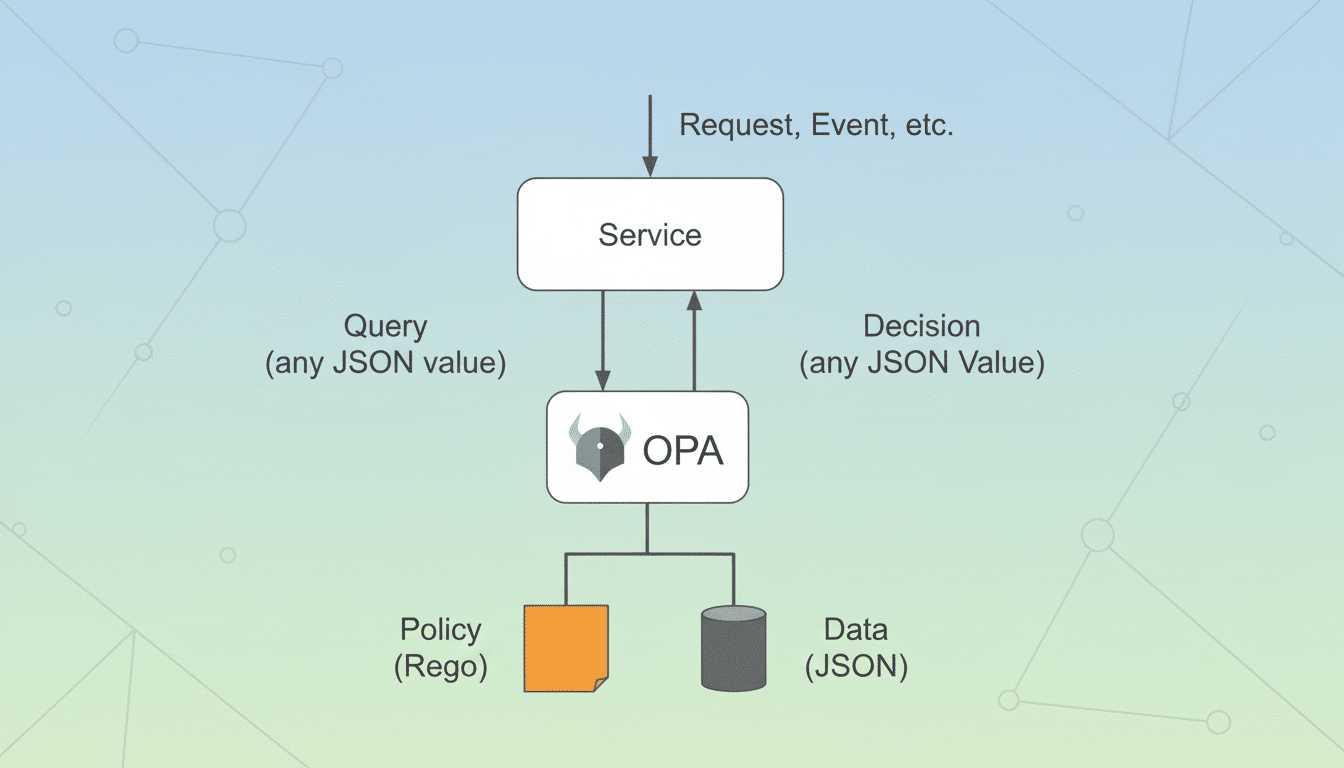

Move from ad hoc guidelines to guardrails in the platform itself. Policy as code, with tools like Open Policy Agent and Rego, allows you to encode security, compliance and architectural standards into pipelines, clusters and service catalogs.

Think of it as a non-blocking copilot for governance: the system nudges developers with clear feedback, records decisions so humans can audit them later, and hard-blocks only when the risk is material. What I’ve observed is that when guardrails such as secrets scanning, dependency policies and environment controls become invisible defaults, governed teams report faster delivery.
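To make the nudge-versus-block idea concrete, here is a minimal sketch in Python. It is a stand-in for illustration only, not Rego and not the Open Policy Agent API; the rule names, the fields of the `change` record and the `evaluate` function are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"    # nudge: feedback only, the pipeline continues
    BLOCK = "block"  # hard stop, reserved for material risk


@dataclass
class Finding:
    rule: str
    message: str
    material: bool  # True when the risk justifies stopping the pipeline


@dataclass
class Decision:
    action: Action
    findings: list[Finding] = field(default_factory=list)


def evaluate(change: dict, audit_log: list[dict]) -> Decision:
    """Check a hypothetical change record against two illustrative rules."""
    findings = []
    if change.get("secrets_detected", 0) > 0:
        findings.append(Finding("no-secrets", "Secret found in diff", material=True))
    if change.get("unpinned_dependencies", 0) > 0:
        findings.append(Finding("pin-deps", "Pin dependency versions", material=False))

    if any(f.material for f in findings):
        action = Action.BLOCK
    elif findings:
        action = Action.WARN
    else:
        action = Action.ALLOW

    # Record every decision, including ALLOW, so a human can audit it later.
    audit_log.append({
        "change_id": change.get("id"),
        "action": action.value,
        "rules": [f.rule for f in findings],
    })
    return Decision(action, findings)
```

In this sketch an unpinned dependency produces a warning the developer can act on without being stopped, while a detected secret blocks the change. Which findings count as material is a policy choice each organization would make for itself.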

Combine those controls with automated tests and coverage thresholds. Blueprints in the style of Hargreaves Lansdown (pre-approved templates that have testing and scanning baked in) reduce cognitive load and free up time for real innovation.

Extend AI Beyond Coding Across the Entire SDLC

The largest gains come when AI reaches from planning through to production. High-performing teams use assistants to distill requirements, suggest architectures, author tests, triage flaky pipelines, review pull requests and draft change tickets backed by full evidence trails.

Enterprises such as Lloyds Banking Group are shifting from “AI helps me code” to “AI accelerates the pipeline.” That means instrumenting value streams, calling out bottlenecks, and letting AI take on high-friction work such as documentation, incident timelines and root-cause narratives.

DORA research has long connected automation and fast feedback with better performance: AI extends those principles by eliminating wait time between handoffs, and enabling work to continuously flow.

Governance and Data as First-Class Priorities

AI raises the stakes for privacy, safety and provenance. Take a design-for-compliance approach: scrub prompts and outputs for secrets and personal data, enforce data residency, and log prompts, responses and model versions for traceability.
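As a rough illustration of scrubbing and traceability, the sketch below redacts two kinds of sensitive strings and builds a log record that carries the model version. The patterns are deliberately simple assumptions (an email address and an AWS-style access key ID); a production setup would rely on a dedicated secrets and PII scanner, and the function names here are hypothetical.

```python
import hashlib
import re
from datetime import datetime, timezone

# Illustrative patterns only; real scanners cover far more cases.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def scrub(text: str) -> str:
    """Replace every match of each pattern with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED_{label}]", text)
    return text


def trace_record(prompt: str, response: str, model_version: str) -> dict:
    """Build an audit record from scrubbed text, never from the raw input."""
    clean_prompt = scrub(prompt)
    clean_response = scrub(response)
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "prompt": clean_prompt,
        "response": clean_response,
        # The digest lets auditors confirm the stored prompt was not altered.
        "prompt_sha256": hashlib.sha256(clean_prompt.encode("utf-8")).hexdigest(),
    }
```

Scrubbing before logging matters here: the trace store then never holds the raw secret, so the audit trail does not itself become a liability.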

Before deployment, and as an ongoing regression check afterward, use an evaluation harness to compare models and prompts against golden datasets, bias checks and safety tests based on the NIST AI Risk Management Framework and the OWASP Top 10 for LLM Applications. Keep a registry of approved models and route confidential work to accredited providers with SLAs.
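An evaluation harness can start very small. The sketch below assumes a model is just a callable from prompt to text and scores it by substring match against a golden dataset. Both are simplifying assumptions, and the names `pass_rate` and `regression_gate` are hypothetical. Bias and safety suites would plug in as additional datasets or scorers.

```python
from typing import Callable

GoldenCase = tuple[str, str]  # (prompt, substring the answer must contain)
Model = Callable[[str], str]


def pass_rate(model: Model, golden: list[GoldenCase]) -> float:
    """Fraction of golden cases whose expected text appears in the output."""
    if not golden:
        raise ValueError("golden dataset is empty")
    passed = sum(
        1 for prompt, expected in golden
        if expected.lower() in model(prompt).lower()
    )
    return passed / len(golden)


def regression_gate(candidate: Model, baseline: Model,
                    golden: list[GoldenCase], tolerance: float = 0.0) -> bool:
    """Allow the candidate only if it scores within `tolerance` of the baseline."""
    return pass_rate(candidate, golden) + tolerance >= pass_rate(baseline, golden)
```

Running the same gate on every model or prompt change turns the golden dataset into a regression suite rather than a one-off acceptance test.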

Financial firms that take a technology-first approach to regulation show that codifying rules works better than annual checklists. When new obligations land on your desk, break them down into machine-enforceable policies so compliance can scale with velocity.

Invest in AI Fluency for Every Role

AI isn’t just for developers. Colleagues in security, audit, QA, SRE and product teams get value from assistants that summarize evidence, suggest controls or draft reports. Firms such as Global Payments are fostering fluency through recurring demos, internal “university” sessions and safe sandboxes in which to practice hands-on techniques.

Establish clear playbooks: when to use chat, when to ask for code, how to reference sources and how to test outputs. Promote small, frequent show-and-tell sessions to spread wins and surface pitfalls early.

Measure adoption and results, not enthusiasm. Monitor cycle time, defect rates, on-call burden and documentation completeness to understand where AI really moves the needle.
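One simple way to compare results is to summarize the same metrics for work items before and after adoption. The sketch below assumes each work item is a dictionary with hypothetical `cycle_hours` and `defects` fields; the numbers in the usage note are made up purely to show the arithmetic.

```python
from statistics import median


def summarize(items: list[dict]) -> dict:
    """Median cycle time and average defects per work item."""
    return {
        "median_cycle_hours": median(i["cycle_hours"] for i in items),
        "defects_per_item": sum(i["defects"] for i in items) / len(items),
    }


def relative_change(before: dict, after: dict) -> dict:
    """Fractional change per metric; negative means the metric went down."""
    return {
        name: (after[name] - before[name]) / before[name]
        for name in before
        if before[name]  # skip metrics with a zero baseline
    }
```

For example, if the median cycle time drops from 50 to 25 hours, `relative_change` reports -0.5 for `median_cycle_hours`. A comparison like this shows correlation only; team mix and workload changes need to be ruled out before crediting AI.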

Developing Agentic Systems Safely and Effectively

As agentic systems mature, developers will compose and orchestrate workflows instead of hand-crafting every line. This casts engineers as conductors who oversee agents that author code, tests and migrations, then validate and harden the results.

Practice “trust but verify”: use property-based tests, contract tests, static analysis and reproducible builds. Sign artifacts, keep single-source-of-truth SBOMs, and gate releases on automatically checked evidence so that a thousand AI-written lines are as trustworthy as 10 human-reviewed ones.
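Gating a release on evidence can be sketched as a single function that refuses to pass unless every check has been recorded and the artifact matches its expected digest. The evidence names below are hypothetical, and the sketch assumes the signature check and SBOM generation happen in earlier pipeline steps that report a boolean result.

```python
import hashlib

# Hypothetical evidence flags produced by earlier pipeline stages.
REQUIRED_EVIDENCE = (
    "tests_passed",
    "static_analysis_clean",
    "sbom_present",
    "signature_verified",
)


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def release_allowed(artifact: bytes, expected_digest: str,
                    evidence: dict[str, bool]) -> tuple[bool, list[str]]:
    """Return (allowed, reasons); missing evidence counts as failed."""
    reasons = [name for name in REQUIRED_EVIDENCE if not evidence.get(name, False)]
    # Comparing digests confirms the artifact being released is the one
    # that was actually built and checked.
    if sha256_hex(artifact) != expected_digest:
        reasons.append("digest_mismatch")
    return (not reasons, reasons)
```

Treating missing evidence as a failure is the key design choice: the gate defaults to closed, so code cannot ship simply because a check never ran.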

Be realistic about experience levels. Senior developers should ideally review AI-generated code and steer newer colleagues away from free-form “vibe coding,” reducing the risk to their learning through structured prompts and starter templates.

AI is now the backdrop to all modern software work. The teams that embed guardrails, extend AI across the SDLC, harden governance, increase fluency and design for agentic workflows will turn hype into sustained velocity, while keeping regulators, customers and engineers confident in the outcomes.