Apple’s newest App Store Awards make one thing unmistakable: artificial intelligence is no longer an add-on feature; it’s the glue that binds together many of the year’s most-loved apps. Without naming a standout AI app of the year, Apple honored products whose core experiences are increasingly shaped by machine learning, and the lineup offers a clear sense of where mobile software is going.

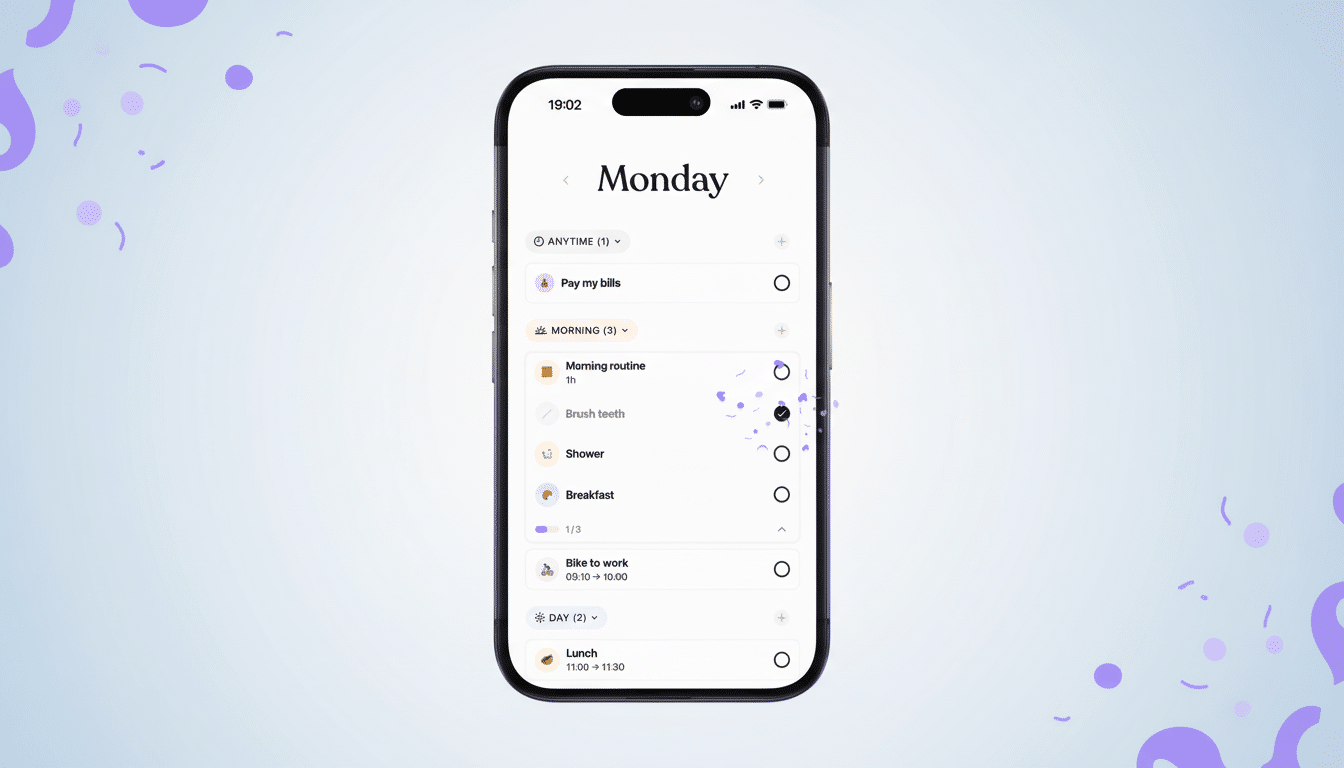

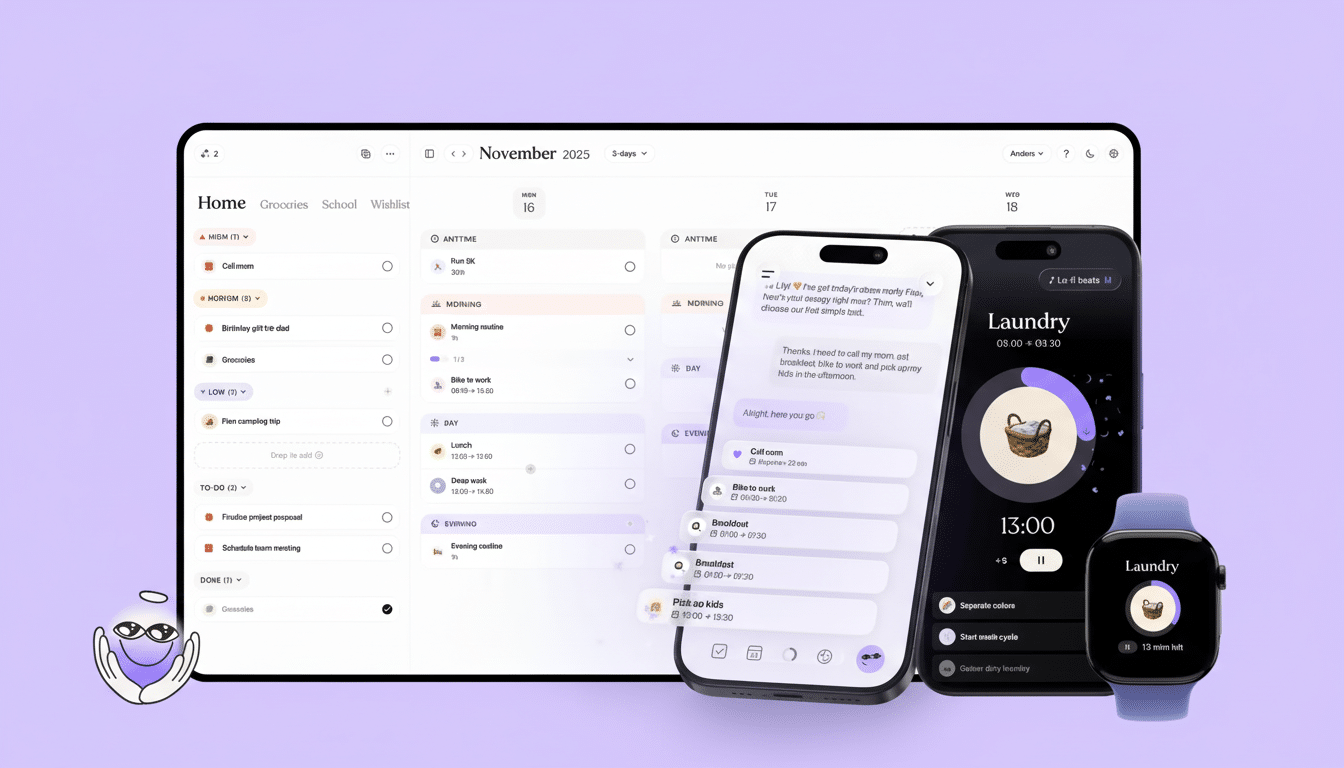

On iPhone, visual planner Tiimo was named the best app for a system that turns to-do lists into timeboxed plans, with AI-powered duration predictions and sequencing. Detail took the prize for best iPad app by automating the drudgery of video editing, from trimming silence to punch-in zooms and captions. Two Cultural Impact honorees, StoryGraph and Be My Eyes, show the range of what AI can do: one models reading tastes to recommend books, while the other gives blind and low-vision users spoken descriptions of their surroundings. And unlike many other fitness apps, including Apple’s and Google’s native ones, Strava’s app on your wrist uses an assistant to translate workout data into plain-language insights for millions of athletes.

AI Is the Silent Theme of This Year’s Winners

Apple still hasn’t crowned a chatbot champion, but the winning roster clearly reflects a new wave: AI as a feature, not a product. That’s a pragmatic shift. Consumers aren’t asking for models; they’re asking for outcomes, such as a better schedule, a cleaner cut, a sharper recommendation or an easier-to-navigate world. The awards reflect that framing.

Tiimo is a great illustration because of its “AI with guardrails” approach. Instead of promising magical productivity, Tiimo gives bounded, explainable suggestions — estimating how long each step might take and slotting them onto a visual timeline users can edit. That transparency and control align with Apple’s own recommendations for responsibly designed AI features.

Detail’s “Auto Edit” takes a similar approach. Silence detection, smart reframing and automatic titling draw on well-known computer vision and speech processing techniques to condense hours of manual work into a few minutes. The overall effect isn’t to eliminate the creator but to raise the floor, so a lot more polished work can make its way into the world.

StoryGraph relies on pattern matching to find your next book, and there’s a lesson here: taste modeling done well can be more interesting (and more useful) than generic content generators with intrusive chat interfaces. Be My Eyes, meanwhile, is the most direct example of AI as a social good. Its assistant uses vision-capable models to describe surroundings and objects, a real-world tool that reduces reliance on volunteers while keeping a human handoff in place when necessary.

Finally, Strava shows AI at the edge. Its summaries break down pace splits, elevation and heart rate variability into digestible coaching cues: exactly the sort of lightweight, high-frequency analysis that lends itself to on-device or carefully scoped inference for speed and privacy.

Aligned With Apple’s Expanding On-Device AI Push

The awards arrive as Apple scales its system-level AI work under the Apple Intelligence umbrella, which combines on-device models with Private Cloud Compute. The winners reflect that design center: they favor clarity over novelty and keep the user in control, an orientation suited to AI features you can see, edit and override.

Privacy is a throughline. Apps that can scope models to a user’s library, calendar or workouts, without scattering data across servers, are better positioned in Apple’s ecosystem. Expect more winners to tout on-device processing for sensitive tasks, reserving the cloud for heavy lifting such as multimodal vision or transcription-heavy editing.

Apple also showcased winners across Mac, Vision Pro, Apple TV and Apple Arcade, underscoring how AI-assisted experiences continue to spread across form factors, from spatial computing interfaces to living room media. In gaming, the near-term payoff isn’t NPC chat; it’s smarter difficulty curves, adaptive tutorials and streamlined asset pipelines that shorten development cycles.

The Market Signal Behind the Picks

Third-party data backs the shift. Data.ai reported last year that global consumer spending on mobile apps surpassed $170 billion, and creation, productivity and health, categories where AI features can be a differentiator, are among those seeing double-digit growth. Sensor Tower has also tracked broad adoption of vision and assistant features in mainstream apps, not just standalone AI titles.

Developers are also catching on to the economics. Lightweight, task-specific models can run locally at low cost, handing off to cloud models only when there’s a clear payoff. That hybrid approach keeps latency low and margins intact, so subscriptions still feel “worth it” without ballooning inference costs.

What to Watch Next as AI Shapes Everyday Apps

Expect even more “invisible AI” among next year’s contenders: planners that pre-organize your day across devices, editors that rough-cut footage as you film, accessibility tools that describe a space when you enter it and fitness summaries that adapt to your recovery without manual tagging.

The lesson of Apple’s awards isn’t that AI prevailed; it’s that AI has become the price of entry. The best apps don’t trumpet it; they just put it to work, smoothing out small moments while still letting users drive the bus.