I stress-tested a $20 AI coding assistant from inside my editor and zipped through about a month’s worth of backlog in half a day. Refactorings, UI polish, test fixes and a new subsystem all landed. The promise is real. So is the catch: hidden usage caps can slam on the brakes mid-change, leaving your code stranded half-edited.

The $20 rocket boost

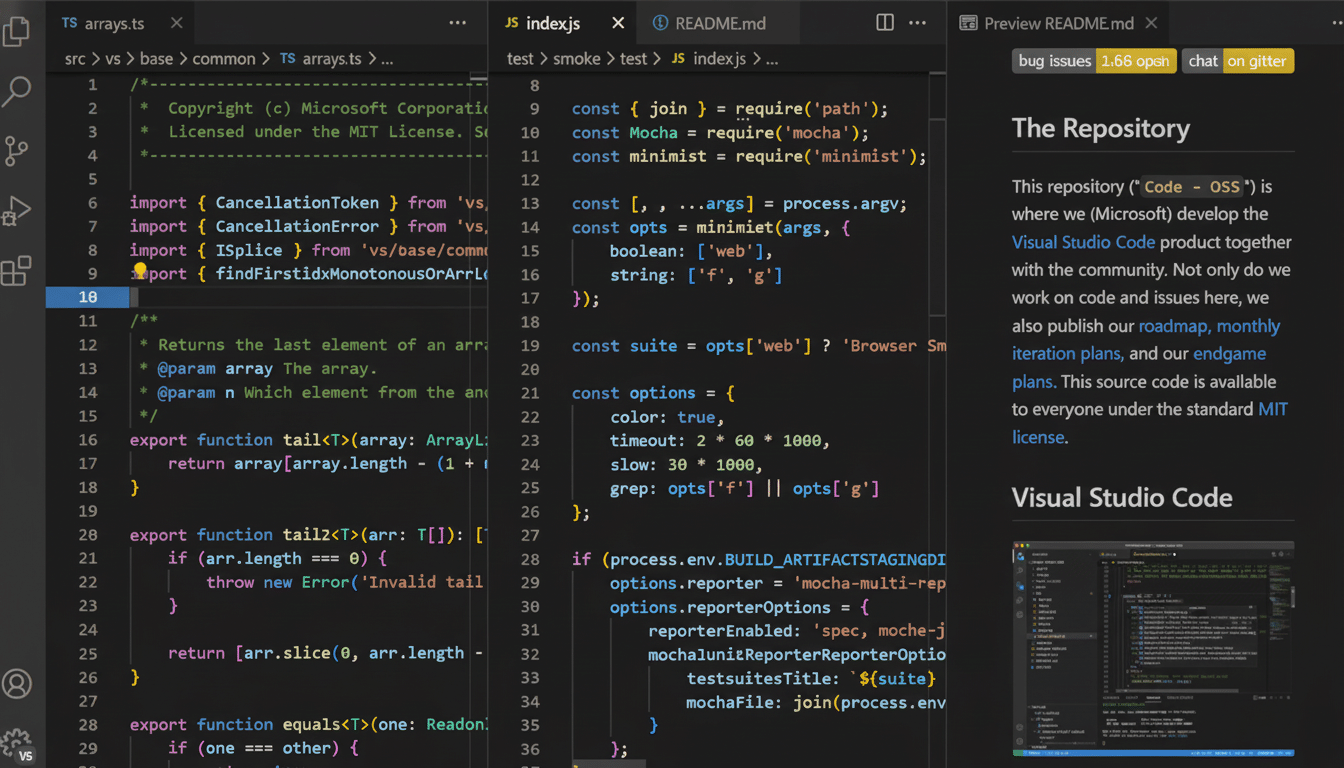

Plug an AI pair programmer into VS Code, through native extensions or AI-first IDEs such as Cursor or Windsurf, and it feels like strapping a turbo to your workflow. Simple but painful chores become targeted prompts: clean up a landing page’s CSS; instrument a notoriously buggy form; scaffold a settings export/import UI; stub out the flow for a new feature, then iterate until it falls into place.

These aren’t hypothetical gains. GitHub says developers can complete tasks up to 55% faster with AI pair programming, and its research indicates that AI can write a significant share of routine code. A McKinsey analysis projected double-digit productivity gains in software development once generative AI is woven into teams’ daily work. In practice, that means weeks can telescope down to hours, if you can maintain momentum.

Where the wheels come off: concealed restrictions

The hitch comes when you rely on that $20 plan for long, heavy sessions. Most coding assistants gate usage through token quotas and rate limits. Exceed the limit and you’re put in a cool-off period that can last minutes, sometimes much longer. Worse, the cutoff can land in the middle of a run, right after you’ve approved sweeping changes across multiple files.

That’s a scary scenario: incomplete edits, no summary of what was finished and what remains, and a repo left in a broken state. Some clients don’t even show a live quota meter, so you get no warning at all. You’re left guessing what changed, wasting time stepping through diffs and trying to regain your flow. The time you “saved” disappears into an uncertainty tax.
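
Vendors rarely document their exact limiting scheme, so treat the following as a sketch of the general mechanism only. It assumes a simple token-bucket limiter, and the capacity, refill rate and per-file costs are invented numbers. It shows how a multi-file run can stall partway through, leaving some files edited and the rest untouched.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Minimal token-bucket limiter (illustrative, not any vendor's real scheme)."""
    capacity: int          # maximum tokens the bucket can hold
    refill_per_sec: float  # tokens restored per second of waiting
    tokens: float = 0.0

    def __post_init__(self) -> None:
        # Start with a full bucket.
        self.tokens = float(self.capacity)

    def refill(self, elapsed_sec: float) -> None:
        # Add tokens for the time that passed (the "cool-off"), capped at capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed_sec * self.refill_per_sec)

    def try_consume(self, cost: int) -> bool:
        # Spend tokens if enough remain; otherwise refuse the request.
        if cost <= self.tokens:
            self.tokens -= cost
            return True
        return False


def run_multi_file_edit(bucket: TokenBucket, costs: dict[str, int]) -> tuple[list[str], list[str]]:
    """Apply edits file by file until the bucket runs dry (hypothetical costs)."""
    done: list[str] = []
    stranded: list[str] = []
    for path, cost in costs.items():
        if not stranded and bucket.try_consume(cost):
            done.append(path)
        else:
            # Once one request is refused, the rest of the run never happens.
            stranded.append(path)
    return done, stranded


bucket = TokenBucket(capacity=10_000, refill_per_sec=5.0)
plan = {"api.py": 3_000, "models.py": 4_000, "views.py": 2_500, "tests/test_api.py": 2_000}
done, stranded = run_multi_file_edit(bucket, plan)
print("edited:", done)
print("stranded:", stranded)
```

With these numbers the first three files land and the test file is stranded with 500 tokens left in the bucket. Nothing in the loop reports which files were skipped unless you track it yourself, which is exactly the missing summary that makes a cutoff so disorienting.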

Why interruptions are more damaging than you thought

Flow state is fragile. Once you shift from solving code to solving mysteries (tracking down half-applied transformations, reconciling imports, rebuilding context), the mental tax gets steep. The assistant’s biggest boon is context: it holds the architecture you described, the constraints you set and the plan you were working through.

Hard limits sever that thread. The model “forgets” your long-run plan, and you find yourself paying the context tax all over again in the next session. On complex repos, that reset is a more significant drag than any individual bug fix or CSS tweak.

The real cost math

For bursty use, the $20 tier is great: a few deep sessions per billing cycle can retire a hayloft of toil. If you need daily, multi-hour throughput, plan for higher tiers with larger quotas. Across the ecosystem, developers report spending hundreds of dollars a month when stacking tools: an advanced general-purpose model from one provider, a coding-specialist model from another, plus a subscription to an AI-native IDE.

That isn’t lavish compared with salary costs. If AI can give one engineer the throughput of two on some tasks, a few hundred dollars a month is easy to justify. But for students, hobbyists and solo builders, price creep and unpredictable rate limits can be the difference between shipping something and shipping nothing at all.
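
The “easy to justify” argument is simple break-even arithmetic: divide the monthly tool bill by the loaded hourly cost of the person using it. The sketch below uses hypothetical placeholder figures ($300 a month for a stacked toolchain, $100 an hour of engineering time), not data from this article; substitute your own.

```python
def break_even_hours(monthly_tool_cost: float, loaded_hourly_cost: float) -> float:
    """Hours of work the tools must save per month to pay for themselves."""
    if loaded_hourly_cost <= 0:
        raise ValueError("loaded_hourly_cost must be positive")
    return monthly_tool_cost / loaded_hourly_cost


# Hypothetical inputs: a stacked toolchain vs. the single $20 plan,
# for an engineer whose fully loaded cost is assumed to be $100/hour.
for label, cost in [("stacked tools", 300.0), ("$20 plan", 20.0)]:
    hours = break_even_hours(cost, loaded_hourly_cost=100.0)
    print(f"{label}: break even after {hours:.1f} saved hours/month")
```

Under those assumptions the stacked setup pays for itself after three saved hours a month. For a student or hobbyist paying out of pocket, there is no salary on the other side of the division, which is why the same bill feels so different.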

Make the cheap plan work: battle-tested strategies

Work in small, atomic slices. Ask the AI to modify one module, class or UI section at a time. Big-bang refactors burn more tokens and are more likely to be cut off mid-execution.
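
One way to enforce small slices is to pack files into batches that each stay under a size cap before handing them to the assistant. This sketch assumes the rough rule of thumb of about four characters per token (an approximation, not a real tokenizer count) and a hypothetical per-slice cap; a file that alone exceeds the cap gets a slice of its own rather than being split.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic: ~4 characters per token. Real tokenizers vary by model.
    return max(1, len(text) // 4)


def plan_slices(files: dict[str, str], max_tokens_per_slice: int) -> list[list[str]]:
    """Greedily pack files (path -> contents) into slices under a token cap."""
    slices: list[list[str]] = []
    current: list[str] = []
    used = 0
    for path, text in files.items():
        cost = estimate_tokens(text)
        # Close the current slice if adding this file would exceed the cap.
        if current and used + cost > max_tokens_per_slice:
            slices.append(current)
            current, used = [], 0
        current.append(path)
        used += cost
    if current:
        slices.append(current)
    return slices


files = {
    "header.css": "a" * 2_000,   # ~500 tokens
    "footer.css": "b" * 2_000,   # ~500 tokens
    "form.ts": "c" * 6_000,      # ~1,500 tokens, larger than the cap
    "settings.ts": "d" * 1_200,  # ~300 tokens
}
print(plan_slices(files, max_tokens_per_slice=1_200))
```

The two stylesheets share a slice, while the oversized form file and the settings file each go out on their own, so a cutoff costs at most one small slice of work.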

Branch ruthlessly. Commit before each AI apply, then review the diff. When you hit a limit, roll back immediately to your last clean commit instead of spelunking through a partial edit.
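
The branch-commit-rollback habit is easy to script. This sketch wraps standard git commands in small helpers whose names are hypothetical. Be aware that `git reset --hard` followed by `git clean -fd` permanently discards uncommitted changes and untracked (non-ignored) files; that is the point here, but it is worth knowing before you run it.

```python
import subprocess


def start_branch_cmds(name: str) -> list[list[str]]:
    # Create and switch to a throwaway branch for the AI session.
    return [["git", "switch", "-c", name]]


def checkpoint_cmds(message: str) -> list[list[str]]:
    # Stage everything and commit; --allow-empty keeps the step from failing
    # when nothing has changed since the last checkpoint.
    return [
        ["git", "add", "-A"],
        ["git", "commit", "--allow-empty", "-m", message],
    ]


def rollback_cmds() -> list[list[str]]:
    # Throw away a partial AI edit: restore tracked files to the last commit
    # and delete untracked files/directories the assistant may have created.
    return [
        ["git", "reset", "--hard", "HEAD"],
        ["git", "clean", "-fd"],
    ]


def run(cmds: list[list[str]], repo: str = ".") -> None:
    # check=True raises CalledProcessError if any git step fails.
    for cmd in cmds:
        subprocess.run(cmd, cwd=repo, check=True)


# Typical loop (run inside a git repository):
#   run(start_branch_cmds("ai/settings-ui"))
#   run(checkpoint_cmds("checkpoint before AI apply"))
#   ...let the assistant edit, then inspect `git diff`...
#   run(rollback_cmds())   # if the run was cut off mid-change
```

Keeping the command lists separate from `run` makes them easy to inspect or log before anything touches the working tree.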

Constrain the blast radius. Tell the assistant explicitly which files it may touch and which it must leave alone. Require an explicit plan before execution.
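
You can also verify the blast radius after the fact. This sketch compares the paths an edit touched (for example, the output of `git diff --name-only`) against the allowlist you gave the assistant plus a list of protected files, using `fnmatch`-style globs. The patterns and paths are hypothetical.

```python
from fnmatch import fnmatchcase


def out_of_scope(changed: list[str], allowed: list[str], protected: list[str]) -> list[str]:
    """Return changed paths that are outside the allowlist or hit a protected pattern.

    fnmatch globs are used here; note that '*' also matches '/', so
    'src/ui/settings/*' covers nested directories too.
    """
    violations = []
    for path in changed:
        is_allowed = any(fnmatchcase(path, pat) for pat in allowed)
        is_protected = any(fnmatchcase(path, pat) for pat in protected)
        if is_protected or not is_allowed:
            violations.append(path)
    return violations


# Hypothetical scope for a "polish the settings UI" task.
allowed = ["src/ui/settings/*", "tests/ui/*"]
protected = ["src/ui/settings/schema.ts"]  # a contract other modules rely on
changed = [
    "src/ui/settings/panel.tsx",
    "src/ui/settings/schema.ts",
    "src/api/client.ts",
    "tests/ui/test_panel.py",
]
print(out_of_scope(changed, allowed, protected))
```

Here the protected schema file and the API client are flagged. An empty result means the edit stayed in bounds; anything else is a cue to revert or ask for a narrower change.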

Front-load context once, then reuse it. Keep a system prompt with architecture notes, invariants, coding standards and test conventions, and paste it at the start of every fresh “thread” to cut down on re-explaining.
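
A reusable context block can be as simple as a few notes rendered in a fixed order. This sketch builds one from the four ingredients named above; the section titles, example notes and function name are hypothetical, and how you feed the result to your assistant (a rules file, a pinned message, a pasted preamble) depends on the tool.

```python
SECTIONS = ("Architecture", "Invariants", "Coding standards", "Test conventions")


def build_preamble(notes: dict[str, list[str]]) -> str:
    """Render notes as an ordered preamble to paste into each new thread.

    Empty or missing sections are skipped; keys outside SECTIONS are ignored,
    so the output order never changes.
    """
    parts = []
    for title in SECTIONS:
        items = notes.get(title, [])
        if items:
            bullets = "\n".join(f"- {item}" for item in items)
            parts.append(f"## {title}\n{bullets}")
    return "\n\n".join(parts)


notes = {
    "Architecture": ["React front end talks to a REST API under /api/v1"],
    "Invariants": ["Never change exported function signatures in src/api"],
    "Test conventions": ["Every bug fix gets a regression test"],
}
print(build_preamble(notes))
```

A fixed order keeps the preamble stable from session to session, and storing the notes in the repo means they get reviewed and versioned like any other code.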

Schedule tokens like a budget. Time-box intense sessions, alternate generation with manual review, and avoid starting long edits near the end of a work block.
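
The “don’t start long edits near the end of a block” rule can be made mechanical. This sketch assumes you estimate a task’s duration (and, if your client exposes it, its token cost) up front; the review buffer, safety margin and function name are hypothetical judgment calls.

```python
from datetime import datetime, timedelta
from typing import Optional


def safe_to_start(now: datetime, block_end: datetime, est_task: timedelta,
                  review_buffer: timedelta = timedelta(minutes=15),
                  est_tokens: Optional[int] = None,
                  tokens_left: Optional[int] = None,
                  margin: float = 1.5) -> bool:
    """Decide whether to launch an AI edit now.

    The task plus a manual-review buffer must fit before the work block ends.
    If you can see your remaining quota, the estimated token cost, padded by
    `margin` to allow for underestimates, must fit as well.
    """
    fits_time = now + est_task + review_buffer <= block_end
    if est_tokens is None or tokens_left is None:
        return fits_time  # no quota meter: decide on time alone
    return fits_time and est_tokens * margin <= tokens_left


block_end = datetime(2024, 5, 1, 17, 0)
print(safe_to_start(datetime(2024, 5, 1, 16, 0), block_end, timedelta(minutes=30)))
print(safe_to_start(datetime(2024, 5, 1, 16, 30), block_end, timedelta(minutes=30)))
```

A 30-minute task started at 16:00 fits (it ends with review by 16:45), while the same task started at 16:30 would run past the 17:00 cutoff and is better deferred to the next block.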

Automate guardrails. Linting, type checks and tests in pre-commit hooks catch nonsense early. Snapshot tests and contract tests detect unintended regressions introduced by overeager edits.
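
A minimal pre-commit gate just runs each check in order and stops at the first failure. This sketch assumes ruff, mypy and pytest as the lint, type and test tools; swap in whatever your project actually uses. A missing tool is treated as a failure so the gate never passes silently.

```python
#!/usr/bin/env python3
"""Pre-commit gate: lint, type-check and test before any commit lands."""
import subprocess
import sys

# Assumed tools; replace with your project's own commands.
CHECKS = [
    ["ruff", "check", "."],
    ["mypy", "."],
    ["pytest", "-q"],
]


def run_checks(checks: list[list[str]]) -> int:
    """Run checks in order; return 0 if all pass, else 1 at the first failure."""
    for cmd in checks:
        try:
            result = subprocess.run(cmd)
        except FileNotFoundError:
            print(f"pre-commit: tool not found: {cmd[0]}", file=sys.stderr)
            return 1
        if result.returncode != 0:
            print(f"pre-commit: failed: {' '.join(cmd)}", file=sys.stderr)
            return 1
    return 0


# To use this as a hook, end the file with:
#   sys.exit(run_checks(CHECKS))
# then save it as .git/hooks/pre-commit and make it executable.
```

Git aborts the commit whenever a pre-commit hook exits non-zero, so a half-applied AI edit that breaks the build never makes it into history.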

Who should upgrade — and who shouldn’t

Upgrade if your income or deadlines depend on uninterrupted throughput, or if you often ask the model to work across many files. Vendors like OpenAI, Anthropic and GitHub offer plans with predictable quotas and enterprise guardrails for teams building production systems.

Stay frugal if you’re building prototypes, learning or shipping side projects. The $20/month tier is great for UI polish, glue code, tests and structured refactors, as long as you keep changes small and scope rules tight.

Bottom line

A $20 AI coding plan can feel almost like a cheat code: hours of drudgery disappear, and whole features emerge in the time it takes to drink your first cup of coffee. The main pitfall is the opaque ceiling, rate limits that can dump you out mid-edit and torpedo your momentum. Treat the assistant as a power tool, not a magic wand: plan your cuts, brace your work and decide whether you’d rather pay for stability or engineer around its limits.