I spent about 60 intense hours pair programming with ChatGPT Codex on real projects, shipping features and fighting the rough edges. The net: when applied with restraint, Codex is a catalytic teammate. When indulged, it is an imaginative wrecking ball. Here are the 10 hard-won secrets I wish I had known in hour one.

Context: GitHub’s research found that developers working with an AI pair programmer completed a task about 55% faster, and McKinsey expects generative AI to automate 20% to 30% of software development activities. Those are real, tangible gains, provided you establish the ground rules and drive the session like a senior engineer.

- 1) Thin-slice the work, not the PRD, to avoid brittle changes

- 2) Design the UI first, but without wireframes or logic

- 3) Ask for minimal diffs and branch-based work to stay safe

- 4) Use and enforce modularity and file hygiene for clarity

- 5) Add a project guardrail file (AGENTS.md) for guidance

- 6) Provide visual context with screenshots and DOM snippets

- 7) Control the context window with concise, durable handover notes

- 8) Capture all the big ideas, but no scope creep during build

- 9) Treat tests as the contract, not an afterthought, every time

- 10) Treat the AI like a junior developer and actively coach it

- Bonus workflow tips that consistently save me hours

1) Thin-slice the work, not the PRD, to avoid brittle changes

Big monolithic prompts that dump the whole product spec cause misunderstandings and sweeping, brittle code changes. Break goals down into thin, testable slices: “Add setting X,” “Persist it,” “Render it,” “Wire it to logic,” “Write unit tests.” Ship one slice, then move to the next. Codex still struggles with long context and rollbacks, so small slices keep each change easy to review and easy to undo.

2) Design the UI first, but without wireframes or logic

Have Codex lay out the interface with no persistence or business logic. Then iterate on spacing, hierarchy and behavior. On my sprints, Codex saved me hours of grinding on CSS layout. Only once the UI felt right did we add state, events, and backend calls. Separation of concerns is your velocity multiplier.

3) Ask for minimal diffs and branch-based work to stay safe

Codex tends to over-edit. Spell out the constraints you want: “generate a minimal diff,” “only touch these files,” and “provide a plan before changes.” Use feature branches, commit after each step, and keep local history enabled in your editor. If a patch goes sideways, throw it away and retry. Tiny deltas win over heroic rewrites.
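
One way to back those instructions with a check is to inspect the diff before committing it. The sketch below is only an illustration: the allowed file list, the line budget, and the `check_diff` helper are hypothetical names of mine, not part of Codex or Git. It assumes standard `git diff` unified output and only looks at the new-side (`+++ b/...`) paths.

```python
import re

# Hypothetical limits for illustration; set them per task.
ALLOWED_FILES = {"src/settings.py", "tests/test_settings.py"}
MAX_CHANGED_LINES = 40


def check_diff(diff_text, allowed_files=ALLOWED_FILES, max_changed=MAX_CHANGED_LINES):
    """Return a list of problems found in a unified diff (empty list means OK)."""
    problems = []
    changed = 0
    for line in diff_text.splitlines():
        # File headers: "--- a/path" (old side) and "+++ b/path" (new side).
        if line.startswith("+++ ") or line.startswith("--- "):
            match = re.match(r"^\+\+\+ b/(.+)$", line)
            if match and match.group(1) not in allowed_files:
                problems.append(f"unexpected file touched: {match.group(1)}")
            continue
        # Everything else starting with + or - is an added or removed line.
        if line.startswith("+") or line.startswith("-"):
            changed += 1
    if changed > max_changed:
        problems.append(f"diff too large: {changed} changed lines (limit {max_changed})")
    return problems


sample = """diff --git a/src/settings.py b/src/settings.py
--- a/src/settings.py
+++ b/src/settings.py
@@ -1,2 +1,3 @@
 DEBUG = False
+THEME = "dark"
diff --git a/vendor/lib.py b/vendor/lib.py
--- a/vendor/lib.py
+++ b/vendor/lib.py
@@ -1 +1 @@
-x = 1
+x = 2
"""

print(check_diff(sample))
```

In practice you would feed it the output of `git diff` for the working branch and reject the patch when the list is non-empty.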

4) Use and enforce modularity and file hygiene for clarity

Left to its own devices, Codex will inline CSS and JavaScript directly into the open file, making an already complex web of files even harder to manage. Be explicit: “Output JS to /assets/js, CSS to /assets/css; import with modules; split along domain lines.” The result is maintainable code that humans can reason about when the AI isn’t in the room.

5) Add a project guardrail file (AGENTS.md) for guidance

Keep durable guidance in a top-level AGENTS.md: directory conventions, coding style, framework version, test dependencies, and “never touch” areas (such as vendor packages). Treat it like a CONTRIBUTING.md for your AI teammate. Because it’s persistent, you spend less time restating constraints and more time building.
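
To make that concrete, here is a minimal sketch that scaffolds a starter AGENTS.md if the repo does not have one yet. The section headings mirror the list above; everything in angle brackets is a placeholder, and the `ensure_agents_file` helper is a hypothetical name, not an official tool.

```python
from pathlib import Path

# Every value below is a placeholder for illustration; replace with your project's facts.
AGENTS_TEMPLATE = """\
# AGENTS.md

## Directory conventions
- JS lives in /assets/js, CSS in /assets/css.

## Coding style
- <your formatter and lint rules>

## Framework version
- <framework name and version>

## Test dependencies
- <test runner and how to invoke it>

## Never touch
- vendor/
"""


def ensure_agents_file(repo_root):
    """Create AGENTS.md at the repo root if it is missing; never overwrite an existing one."""
    path = Path(repo_root) / "AGENTS.md"
    if path.exists():
        return False
    path.write_text(AGENTS_TEMPLATE, encoding="utf-8")
    return True
```

Calling `ensure_agents_file(".")` from the repo root writes the template once and leaves later hand edits alone.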

6) Provide visual context with screenshots and DOM snippets

Codex is quick with CSS once it can actually see the problem. Provide annotated screenshots of layout bugs. When selectors are being finicky, copy and paste the relevant HTML from your browser’s inspector. This cuts through ambiguity and turns meandering text prompts into precise, high-signal guidance.

7) Control the context window with concise, durable handover notes

Context can drift and bloat during long sessions. Ask Codex for a summary of the current state: goals, tech stack, key decisions, known trade-offs, and open threads. Begin new chats with that summary. I also add handovers to a WORKLOG.md so any other collaborator, or the AI, can rehydrate state immediately.
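
A handover note works best when it has the same shape every time. The following is a rough sketch of one possible structure, using the five fields named above; the `Handover` class and `append_to_worklog` helper are my own hypothetical names, and the Markdown layout is just one reasonable choice.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Handover:
    goals: list
    tech_stack: list
    decisions: list
    trade_offs: list = field(default_factory=list)
    open_threads: list = field(default_factory=list)

    def to_markdown(self, day=None):
        """Render the note as a dated Markdown section."""
        day = day or date.today().isoformat()
        sections = [
            ("Goals", self.goals),
            ("Tech stack", self.tech_stack),
            ("Key decisions", self.decisions),
            ("Known trade-offs", self.trade_offs),
            ("Open threads", self.open_threads),
        ]
        lines = [f"## Handover {day}"]
        for title, items in sections:
            lines.append(f"### {title}")
            # Show an explicit marker for empty sections so nothing looks forgotten.
            lines.extend(f"- {item}" for item in (items or ["(none)"]))
        return "\n".join(lines) + "\n"


def append_to_worklog(note, path="WORKLOG.md"):
    """Append the rendered note to the work log, creating the file if needed."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(note.to_markdown() + "\n")
```

Paste the output of `to_markdown()` as the first message of a new chat, and call `append_to_worklog` so the same text lands in the repo.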

8) Capture all the big ideas, but no scope creep during build

Codex enjoys suggesting features in the middle of a task. Park them in a backlog and don’t implement them right now. Focused work attracts ideas; most of them are perfectly fine, just ill-timed. Product discipline (finish the slice, then triage) keeps quality high and regression risk low, a lesson echoed in surveys of Stack Overflow’s developer community.

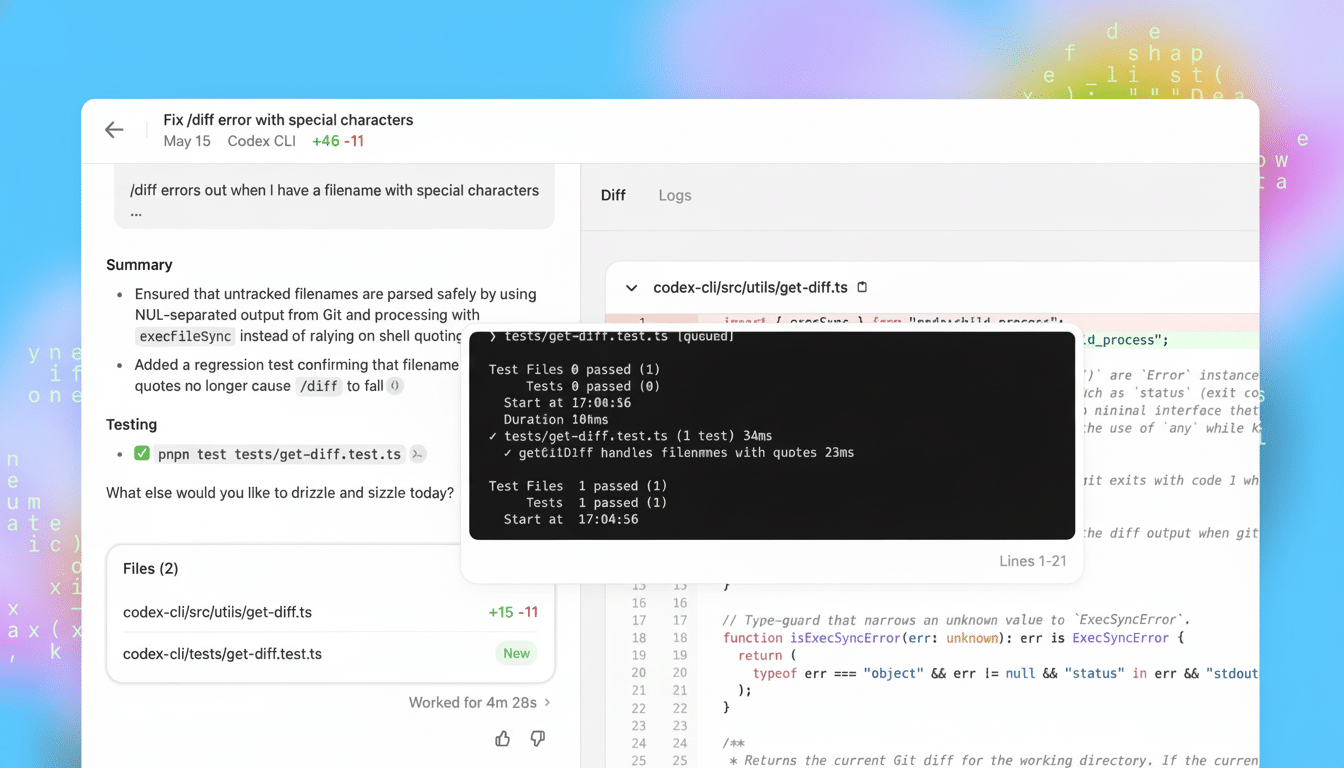

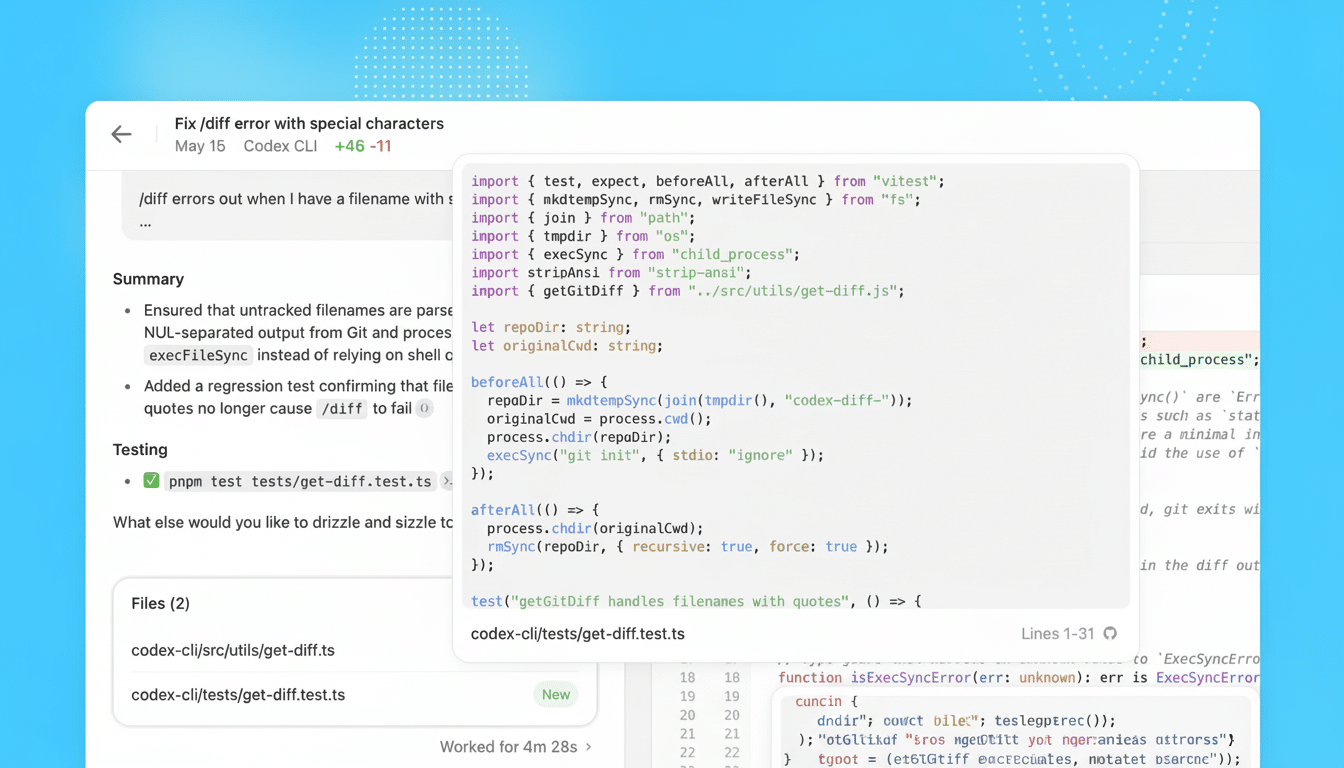

9) Treat tests as the contract, not an afterthought, every time

Ask Codex to generate unit tests, integration tests and fixtures before modifying core logic. Require coverage targets and linting. Have it comment on what each test covers. If the AI changes behavior, demand that it update the suite in the same change. Tests are guardrails that keep “creative” refactors from shattering user expectations.
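
As a small illustration of tests acting as the contract, the sketch below pins down the behavior of a hypothetical `parse_theme` function (an invented stand-in for “setting X”) with Python’s standard `unittest` module. The function and its fallback rule are assumptions for the example, not code from my projects.

```python
import unittest


def parse_theme(raw):
    """Hypothetical core logic: normalize a user-supplied theme setting."""
    value = (raw or "").strip().lower()
    return value if value in {"light", "dark"} else "light"


class ParseThemeContract(unittest.TestCase):
    """Each test states one promise that a refactor must not break."""

    def test_known_values_are_normalized(self):
        # Whitespace and casing from user input are tolerated.
        self.assertEqual(parse_theme(" Dark "), "dark")

    def test_unknown_values_fall_back_to_light(self):
        # Unsupported themes never leak into the UI.
        self.assertEqual(parse_theme("neon"), "light")

    def test_missing_value_falls_back_to_light(self):
        # A missing setting behaves like the default.
        self.assertEqual(parse_theme(None), "light")
```

Run it with `python -m unittest` before and after every AI-generated change; a refactor that alters any of these three behaviors has to change the suite explicitly, which makes the change visible in review.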

10) Treat the AI like a junior developer and actively coach it

The fastest loops came when I required a plan first: “Outline steps, list files to touch, propose function signatures, then wait for approval.” This mirrors real code review and reduces rework. If the plan looks risky, revise it together before any code is written. The aim is not deference but purposeful, auditable progress.

Bonus workflow tips that consistently save me hours

Back up often, protect main with branch rules, and automate formatting to avoid churn. Keep requests brief, refer to line numbers, and paste only the relevant part of a stack trace rather than the whole thing. If you run into rate limits, queue changes and batch requests instead of doing massive dumps; it’s kinder to both the model and your repo history.

Done this way, ChatGPT Codex provides real leverage: faster scaffolds, faster UI iteration, and fewer yak-shaves. But the human is still in the driver’s seat, defining scope, setting standards, writing tests and making trade-offs that turn generated code into working software.